Xiyue Guo

SGFormer: Satellite-Ground Fusion for 3D Semantic Scene Completion

Mar 21, 2025

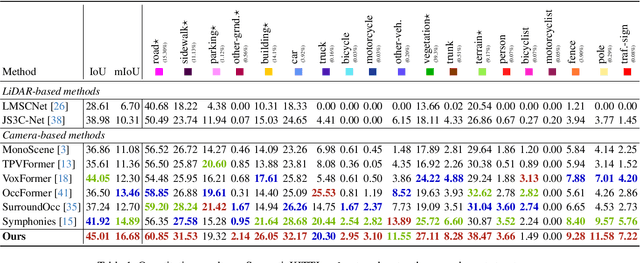

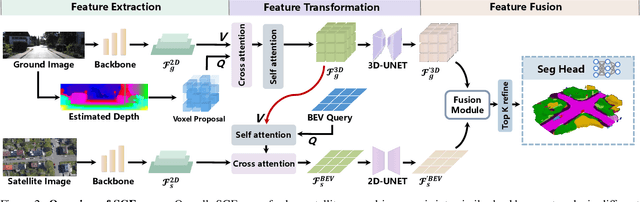

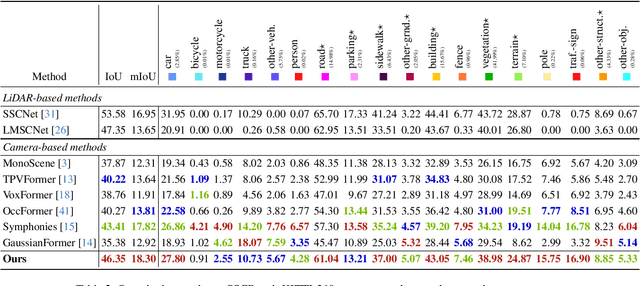

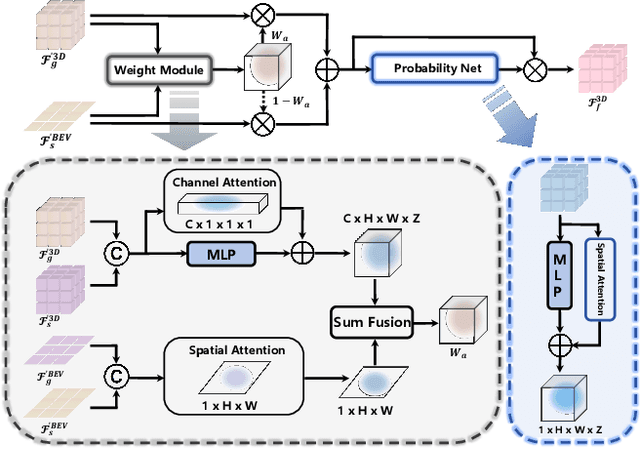

Abstract: Camera-based solutions have recently been extensively explored for semantic scene completion (SSC). Despite their success in visible areas, existing methods struggle to capture complete scene semantics due to frequent visual occlusions. To address this limitation, this paper presents the first satellite-ground cooperative SSC framework, SGFormer, which explores the potential of satellite-ground image pairs in the SSC task. Specifically, we propose a dual-branch architecture that encodes orthogonal satellite and ground views in parallel, unifying them into a common domain. Additionally, we design a ground-view guidance strategy that corrects satellite image biases during feature encoding, addressing misalignment between satellite and ground views. Moreover, we develop an adaptive weighting strategy that balances contributions from satellite and ground views. Experiments demonstrate that SGFormer outperforms the state of the art on the SemanticKITTI and SSCBench-KITTI-360 datasets. Our code is available at https://github.com/gxytcrc/SGFormer.
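The abstract does not say how the adaptive weighting is computed. As a rough illustration only, the sketch below assumes each branch supplies a scalar confidence score for a given cell and turns the two scores into softmax weights. The function name `adaptive_fuse` and the score inputs are hypothetical and are not taken from the SGFormer code.

```python
import math


def adaptive_fuse(sat_feat, gnd_feat, sat_score, gnd_score):
    """Blend two feature vectors for one cell using softmax weights.

    Assumption: sat_score and gnd_score are per-branch confidence scores.
    In a real model they would be predicted by a network.
    """
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(sat_score, gnd_score)
    e_sat = math.exp(sat_score - m)
    e_gnd = math.exp(gnd_score - m)
    w_sat = e_sat / (e_sat + e_gnd)
    w_gnd = 1.0 - w_sat
    fused = [w_sat * s + w_gnd * g for s, g in zip(sat_feat, gnd_feat)]
    return fused, (w_sat, w_gnd)


# Equal scores give an even blend of the two branches.
fused, weights = adaptive_fuse([1.0, 3.0], [3.0, 1.0], 0.0, 0.0)
```

With a much higher ground score, the fused feature moves toward the ground branch, which is the balancing behaviour the abstract describes in general terms.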

Descriptor Distillation for Efficient Multi-Robot SLAM

Mar 15, 2023

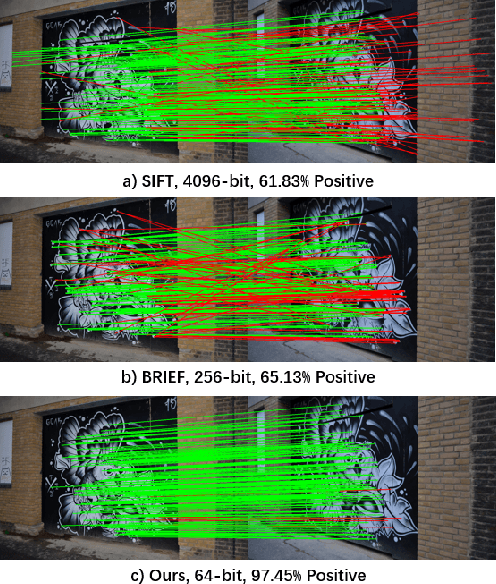

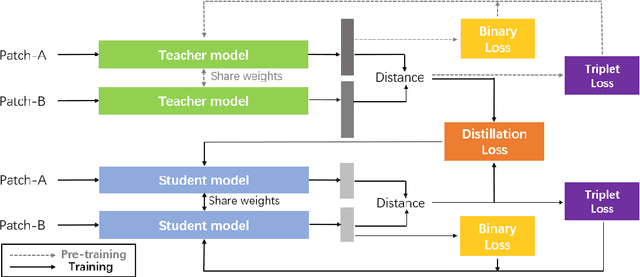

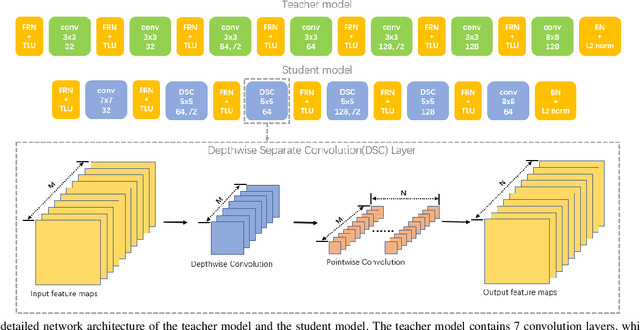

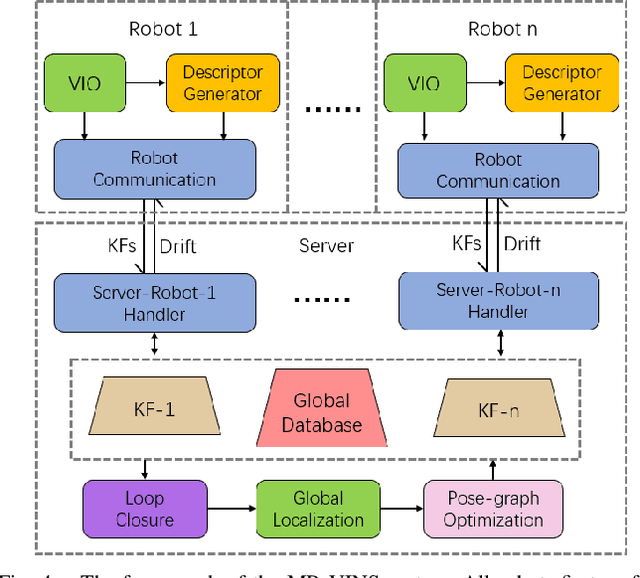

Abstract: Performing accurate localization while keeping communication bandwidth low is an essential challenge of multi-robot simultaneous localization and mapping (MR-SLAM). In this paper, we tackle this problem by generating a compact yet discriminative feature descriptor with minimal inference time. We propose descriptor distillation, which formulates descriptor generation as a learning problem under the teacher-student framework. To achieve real-time descriptor generation, we design a compact student network and train it by transferring knowledge from a large pre-trained teacher model. To reduce the descriptor dimension from the teacher to the student, we propose a novel loss function that enables knowledge transfer between descriptors of different dimensions. The experimental results demonstrate that our model is 30% lighter than the state-of-the-art model and produces better descriptors in patch matching. Moreover, we build an MR-SLAM system based on the proposed method and show that our descriptor distillation achieves higher localization performance for MR-SLAM with lower bandwidth.
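The abstract does not give the form of the loss. One common way to transfer knowledge between descriptors of different dimensions is to compare pairwise distances rather than the descriptors themselves, since distances are defined in any dimension. The sketch below uses that stand-in technique; it should not be read as the paper's loss, and both function names are hypothetical.

```python
import math


def pairwise_distances(descs):
    """Euclidean distance between every pair of descriptors in a batch."""
    n = len(descs)
    return [[math.dist(descs[i], descs[j]) for j in range(n)] for i in range(n)]


def relational_distill_loss(teacher_descs, student_descs):
    """Mean squared difference between teacher and student distance matrices.

    The two descriptor sets may have different dimensions. Only the number
    of descriptors (the batch size) must match.
    """
    t = pairwise_distances(teacher_descs)
    s = pairwise_distances(student_descs)
    n = len(t)
    total = sum((t[i][j] - s[i][j]) ** 2 for i in range(n) for j in range(n))
    return total / (n * n)


# A 4-D teacher and a 2-D student that preserve the same distance (5.0).
teacher = [[0.0, 0.0, 0.0, 0.0], [3.0, 0.0, 4.0, 0.0]]
student = [[0.0, 0.0], [5.0, 0.0]]
loss = relational_distill_loss(teacher, student)
```

A student that collapses both patches to the same point is penalized, because it no longer reproduces the teacher's distance between them.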

MultiRoboLearn: An open-source Framework for Multi-robot Deep Reinforcement Learning

Sep 28, 2022

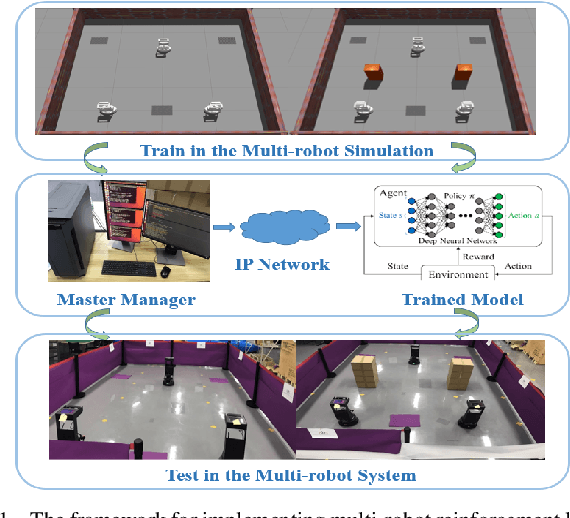

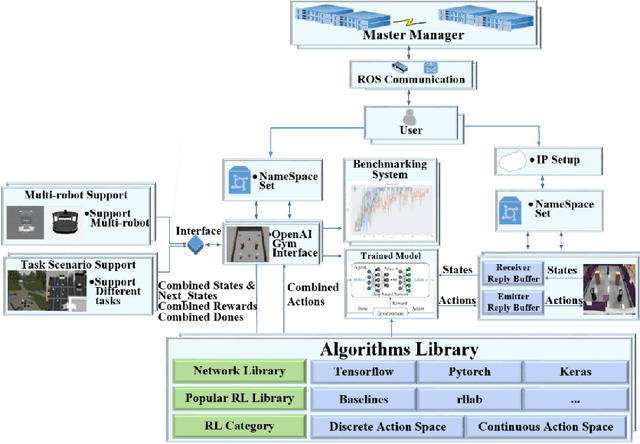

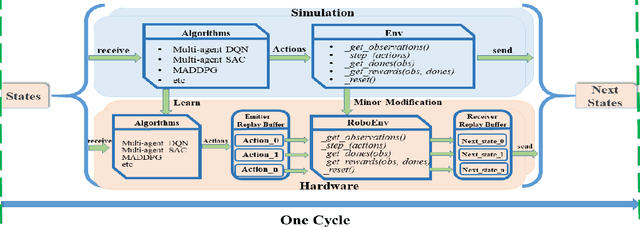

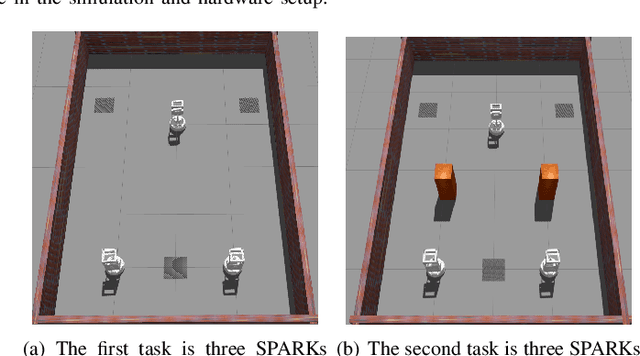

Abstract: It is well known that building a reliable and robust framework that links multi-agent deep reinforcement learning algorithms with practical multi-robot applications is difficult. To fill this gap, we propose and build an open-source framework for multi-robot systems called MultiRoboLearn. This framework provides a unified setup for simulation and real-world applications. It aims to provide standard, easy-to-use simulated scenarios that can also be easily deployed to real-world multi-robot environments. The framework also provides researchers with a benchmark system for comparing the performance of different reinforcement learning algorithms. We demonstrate the generality, scalability, and capability of the framework with two real-world scenarios using different types of multi-agent deep reinforcement learning algorithms in discrete and continuous action spaces.

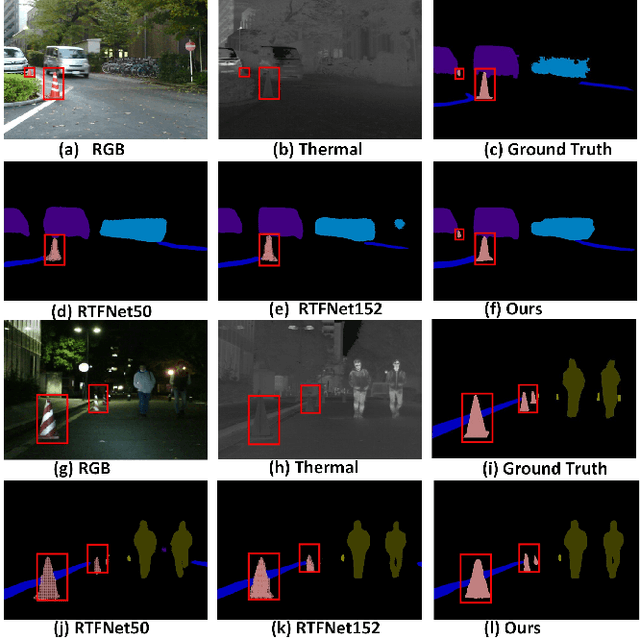

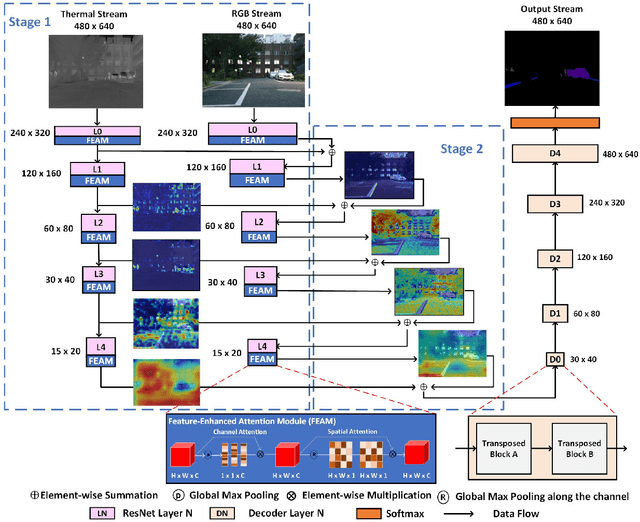

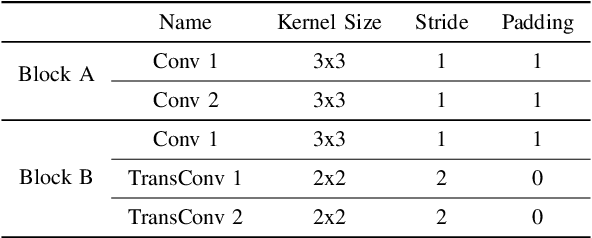

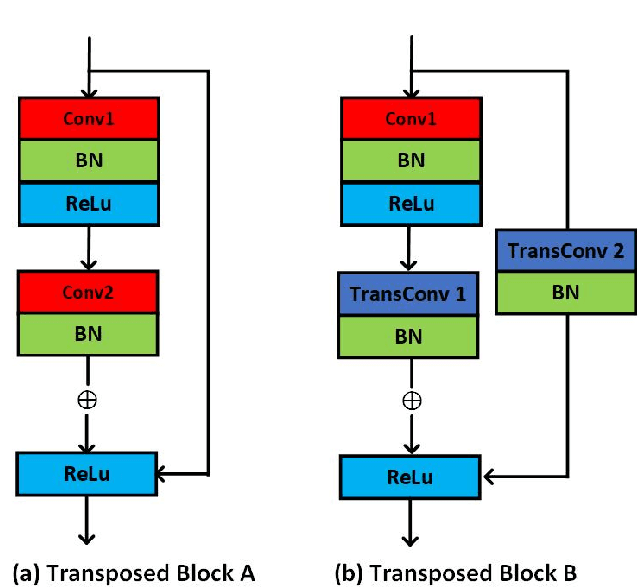

FEANet: Feature-Enhanced Attention Network for RGB-Thermal Real-time Semantic Segmentation

Oct 18, 2021

Abstract: RGB-Thermal (RGB-T) information for semantic segmentation has been extensively explored in recent years. However, most existing RGB-T semantic segmentation methods compromise spatial resolution to achieve real-time inference speed, which leads to poor performance. To better extract detailed spatial information, we propose a two-stage Feature-Enhanced Attention Network (FEANet) for the RGB-T semantic segmentation task. Specifically, we introduce a Feature-Enhanced Attention Module (FEAM) to excavate and enhance multi-level features from both the channel and spatial views. Benefiting from the proposed FEAM, our FEANet can preserve spatial information and shift more attention to high-resolution features from the fused RGB-T images. Extensive experiments on the urban scene dataset demonstrate that our FEANet outperforms other state-of-the-art (SOTA) RGB-T methods in terms of objective metrics and subjective visual comparison (+2.6% in global mAcc and +0.8% in global mIoU). For 480 x 640 RGB-T test images, our FEANet runs at real-time speed on an NVIDIA GeForce RTX 2080 Ti card.

Boosting Light-Weight Depth Estimation Via Knowledge Distillation

May 13, 2021

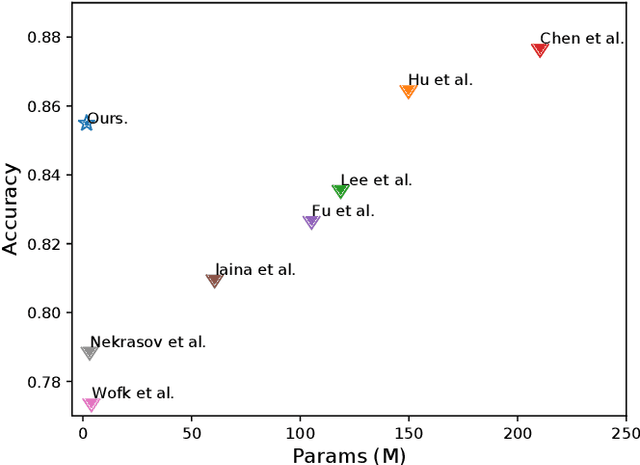

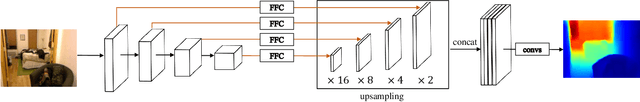

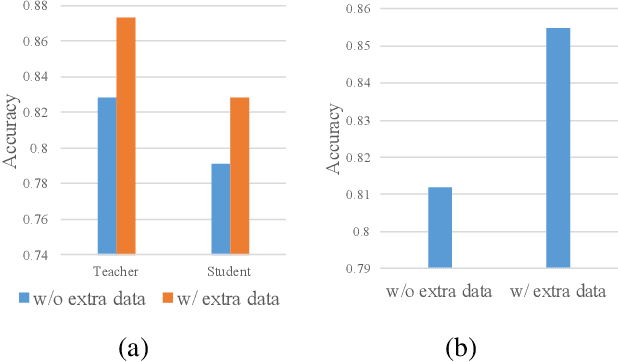

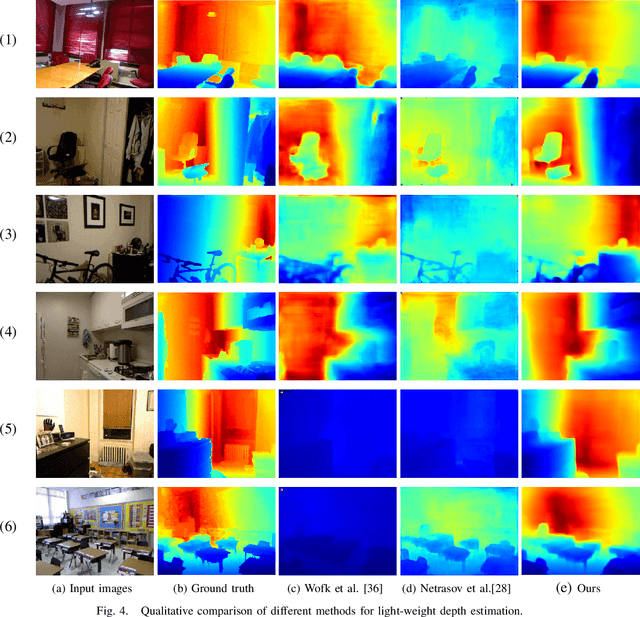

Abstract: The best depth estimation performance is currently achieved with large and complex neural networks. While performance continues to improve, we argue that depth estimation has to be both accurate and efficient, which is a prerequisite for real-world applications. However, fast depth estimation tends to lower performance because of the trade-off between model capacity and accuracy. In this paper, we attempt to achieve highly accurate depth estimation with a light-weight network. To this end, we first introduce a compact network that can estimate a depth map in real time. We then present two complementary and necessary strategies to improve the performance of the light-weight network. As the number of real-world scenes is infinite, the first is the use of auxiliary data that increases the diversity of the training data. The second is the use of knowledge distillation to further boost performance. Through extensive and rigorous experiments, we show that our method outperforms previous light-weight methods in terms of inference accuracy, computational efficiency, and generalization. We achieve performance comparable to state-of-the-art methods with only 1% of the parameters. In addition, our method outperforms other light-weight methods by a significant margin.
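The abstract does not specify how the two strategies are combined in training. The sketch below is a minimal illustration of a distillation loss for depth. It rests on two assumptions that are not stated in the source: the student is pulled toward both the ground truth and a teacher prediction with a mixing weight `alpha`, and auxiliary samples may lack ground truth, in which case only the teacher term is used. All names are hypothetical.

```python
def l1(a, b):
    """Mean absolute error between two equally sized depth lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def distilled_depth_loss(student, teacher, ground_truth=None, alpha=0.5):
    """Mix a supervised term with a teacher-matching term.

    Assumption: when ground_truth is None (for example, an unlabeled
    auxiliary image), the teacher prediction is the only training signal.
    """
    distill = l1(student, teacher)
    if ground_truth is None:
        return distill
    return (1.0 - alpha) * l1(student, ground_truth) + alpha * distill


# Labeled sample: both terms contribute. Unlabeled sample: teacher term only.
labeled = distilled_depth_loss([1.0, 2.0], [2.0, 2.0], [1.0, 4.0])
unlabeled = distilled_depth_loss([1.0, 2.0], [2.0, 2.0])
```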

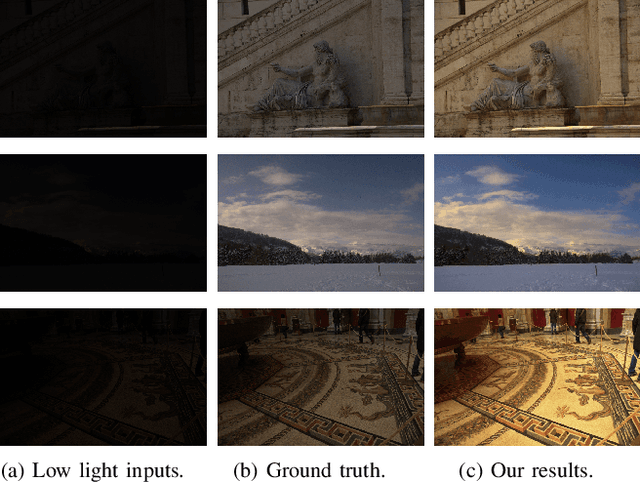

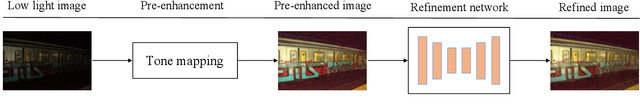

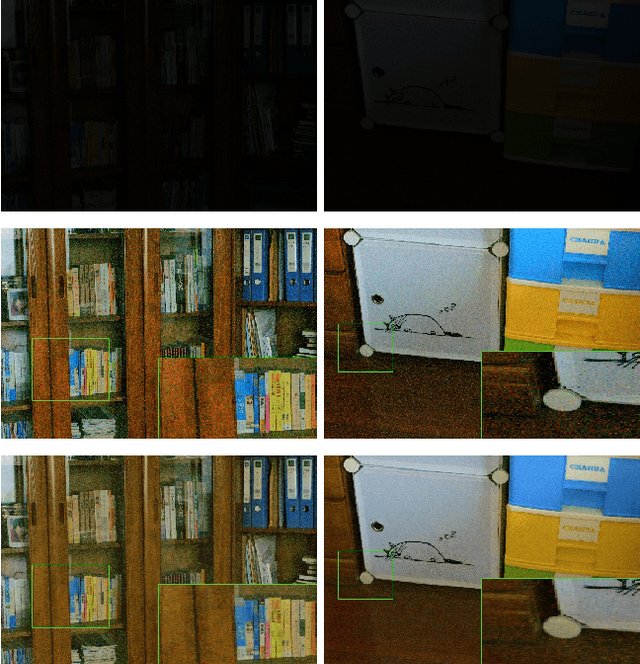

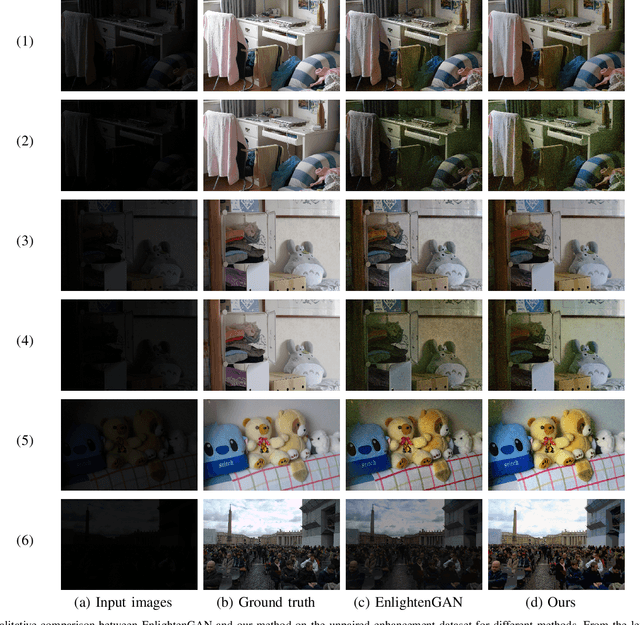

A Two-stage Unsupervised Approach for Low light Image Enhancement

Oct 20, 2020

Abstract: As vision-based perception methods are usually built on the assumption of normal lighting, deploying them in low-light environments raises serious safety issues. Recently, deep learning-based methods have been proposed to enhance low-light images by penalizing the pixel-wise loss between low-light and normal-light images. However, most of them suffer from the following problems: 1) the need for paired low-light and normal-light images for training, 2) poor performance on dark images, and 3) the amplification of noise. To alleviate these problems, we propose a two-stage unsupervised method that decomposes low-light image enhancement into a pre-enhancement problem and a post-refinement problem. In the first stage, we pre-enhance a low-light image with a conventional Retinex-based method. In the second stage, we use a refinement network trained adversarially to further improve image quality. The experimental results show that our method outperforms previous methods on four benchmark datasets. In addition, we show that our method can significantly improve feature point matching and simultaneous localization and mapping in low-light conditions.
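The abstract does not name the Retinex variant used in the first stage. For orientation, the sketch below shows single-scale Retinex, which takes the log of each pixel minus the log of a smoothed copy of the image. Two simplifications are assumed here: a box blur replaces the usual Gaussian surround, and the image is a grayscale list of lists. The function names and the `eps` offset are illustrative choices.

```python
import math


def box_blur(img, radius=1):
    """Average each pixel with its neighbours, clipping at the image border."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - radius), min(h, y + radius + 1))
                    for i in range(max(0, x - radius), min(w, x + radius + 1))]
            out[y][x] = sum(vals) / len(vals)
    return out


def single_scale_retinex(img, radius=1, eps=1.0):
    """log(pixel) - log(local average), with eps added to avoid log(0)."""
    blurred = box_blur(img, radius)
    return [[math.log(p + eps) - math.log(b + eps) for p, b in zip(row, brow)]
            for row, brow in zip(img, blurred)]


# A bright pixel in a dark neighbourhood gets a positive response.
response = single_scale_retinex([[1.0, 1.0, 1.0],
                                 [1.0, 9.0, 1.0],
                                 [1.0, 1.0, 1.0]])
```

On a uniformly lit image the response is zero everywhere, since each pixel equals its local average. This is why Retinex-style methods emphasize local contrast rather than absolute brightness.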

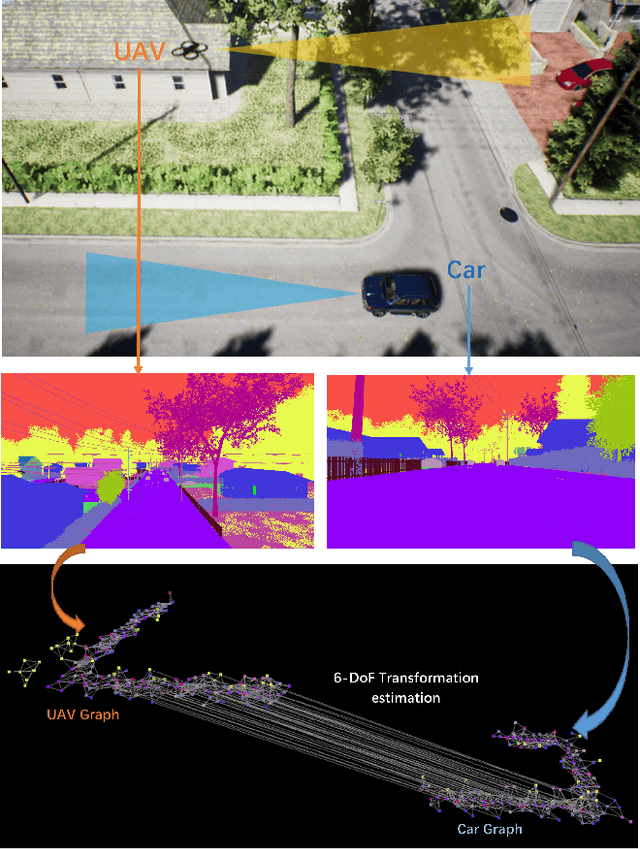

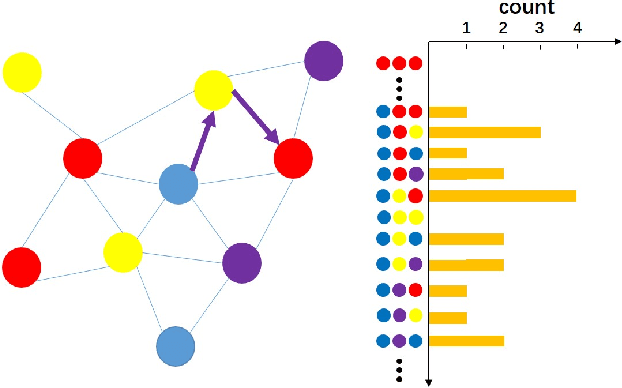

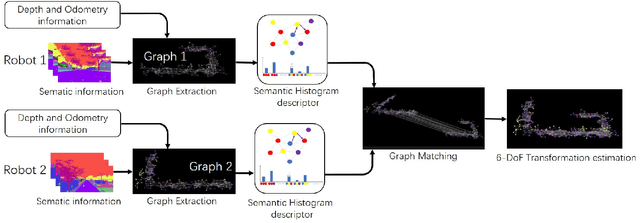

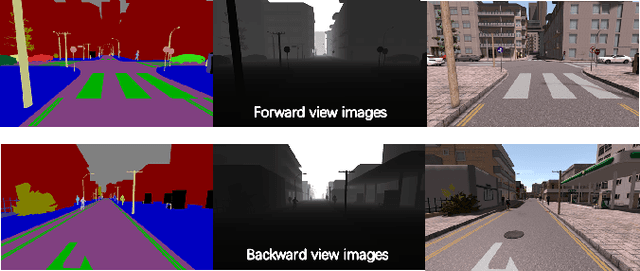

Semantic Histogram Based Graph Matching for Real-Time Multi-Robot Global Localization in Large Scale Environment

Oct 19, 2020

Abstract: The core problem of visual multi-robot simultaneous localization and mapping (MR-SLAM) is how to efficiently and accurately perform multi-robot global localization (MR-GL). The difficulties are two-fold. The first is global localization under significant viewpoint differences. Appearance-based localization methods tend to fail under large viewpoint changes. Recently, semantic graphs have been utilized to overcome the viewpoint variation problem. However, these methods are highly time-consuming, especially in large-scale environments. This leads to the second difficulty, which is how to perform global localization in real time. In this paper, we propose a semantic histogram-based graph matching method that is robust to viewpoint variation and can achieve real-time global localization. Based on that, we develop a system that can accurately and efficiently perform MR-GL for both homogeneous and heterogeneous robots. The experimental results show that our approach is about 30 times faster than Random Walk-based semantic descriptors. Moreover, it achieves an accuracy of 95% for global localization, while the accuracy of the state-of-the-art method is 85%.
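The abstract does not describe how the histogram descriptor is built. The sketch below is a simplified illustration of the general idea: each node of a semantic graph is described by a histogram of the semantic labels found within a few hops, and two nodes are compared by the cosine similarity of their histograms. The graph encoding, the hop-based neighbourhood, and the function names are all assumptions made for this example.

```python
import math
from collections import Counter


def semantic_histogram(graph, labels, node, steps=2):
    """Count the labels of nodes reachable from `node` within `steps` hops."""
    frontier, seen = {node}, {node}
    hist = Counter()
    for _ in range(steps):
        # Expand one hop outward, skipping nodes that were already visited.
        frontier = {n for f in frontier for n in graph[f]} - seen
        seen |= frontier
        hist.update(labels[n] for n in frontier)
    return hist


def histogram_similarity(h1, h2):
    """Cosine similarity between two label histograms (0.0 if either is empty)."""
    dot = sum(h1[k] * h2[k] for k in set(h1) | set(h2))
    norm = math.sqrt(sum(v * v for v in h1.values())) * \
        math.sqrt(sum(v * v for v in h2.values()))
    return dot / norm if norm else 0.0


# Hypothetical four-node graph given as adjacency lists.
graph = {0: [1, 2], 1: [0], 2: [0, 3], 3: [2]}
labels = {0: "road", 1: "car", 2: "building", 3: "tree"}
hist = semantic_histogram(graph, labels, 0)
```

Comparing fixed-size histograms is a cheap operation, which is consistent with the abstract's emphasis on real-time matching.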
