Tin Lun Lam

Whole-Body Control for Velocity-Controlled Mobile Collaborative Robots Using Coupling Dynamic Movement Primitives

Mar 07, 2022

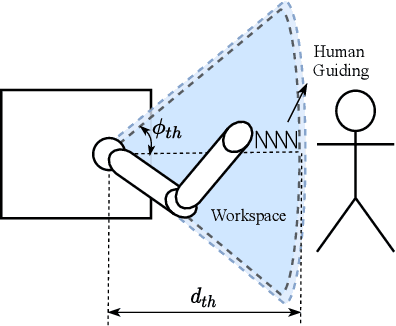

Abstract: In this paper, we propose a unified whole-body control framework for velocity-controlled mobile collaborative robots that can distribute task motion between the base and the arm according to specific task requirements by adjusting weighting factors. Our framework addresses two challenging issues in whole-body coordination: 1) the different dynamic characteristics of the base and the arm; 2) avoiding violations of both safety and configuration constraints. In addition, our controller incorporates Coupling Dynamic Movement Primitives to provide the compliance capabilities essential for collaboration and interaction applications, such as obstacle avoidance, hand guiding, and force control. Building on these capabilities, we design an intuitive physical human-robot interaction motion mode through a strategy for adjusting the weighting factors. The proposed controller is in closed form and is thus more computationally efficient than state-of-the-art optimization-based methods. Experimental results on a real mobile collaborative robot validate the effectiveness of the proposed controller. A video documenting the experiments is available at https://youtu.be/x2Z593dV9C8.
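
The abstract does not state the control law. As a hedged illustration of how weighting factors can split one task-space velocity between base and arm joints in closed form, the sketch below uses the classic weighted least-norm (weighted pseudo-inverse) solution. This is an assumed, generic technique and not the authors' controller: it ignores the Coupling DMP terms, the safety and configuration constraints, and the differing base/arm dynamics that the paper addresses. The function names `solve` and `weighted_velocity_split` and the 4-joint planar Jacobian are hypothetical.

```python
# Hypothetical sketch (not the paper's controller): split one task-space
# velocity between mobile-base and arm joints with weighting factors, using
# the weighted least-norm solution qdot = W^-1 J^T (J W^-1 J^T)^-1 xdot.


def solve(a, b):
    """Solve the square system a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("J W^-1 J^T is singular (task not reachable)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def weighted_velocity_split(jac, weights, xdot):
    """Joint velocities minimizing sum(w_k * qdot_k**2) subject to J qdot = xdot.

    jac: m x n task Jacobian (list of rows); weights: n positive weighting
    factors, where a larger weight makes that joint move less;
    xdot: m desired task velocities.
    """
    rows, cols = len(jac), len(jac[0])
    winv = [1.0 / w for w in weights]
    # A = J W^-1 J^T, an m x m matrix.
    a = [[sum(jac[i][k] * winv[k] * jac[j][k] for k in range(cols))
          for j in range(rows)] for i in range(rows)]
    lam = solve(a, xdot)
    # qdot = W^-1 J^T lam
    return [winv[k] * sum(jac[i][k] * lam[i] for i in range(rows))
            for k in range(cols)]


# Hypothetical 4-joint model: base (vx, vy) followed by a Cartesian arm (dx, dy),
# all moving the end effector in the plane.
J = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 1.0, 0.0, 1.0]]
qdot = weighted_velocity_split(J, [4.0, 4.0, 1.0, 1.0], [1.0, 0.5])
print(qdot)
```

With base weights of 4 and arm weights of 1, each direction splits as (1/4)/(1/4 + 1) = 20% to the base and 80% to the arm, so `qdot` is approximately [0.2, 0.1, 0.8, 0.4]. Raising the base weights pushes more of the motion into the arm, and lowering them does the opposite, which is the kind of knob the abstract describes for distributing task motion.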

A Two-stage Unsupervised Approach for Low light Image Enhancement

Oct 20, 2020

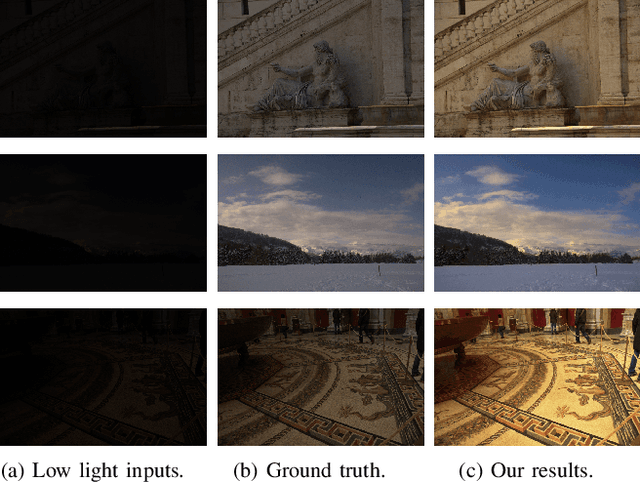

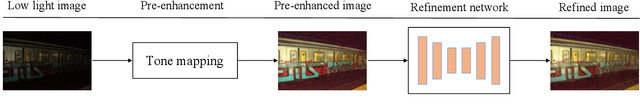

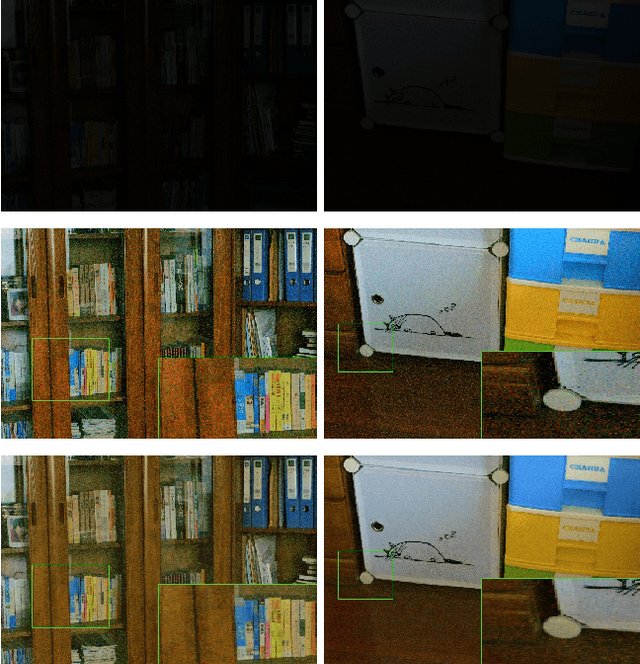

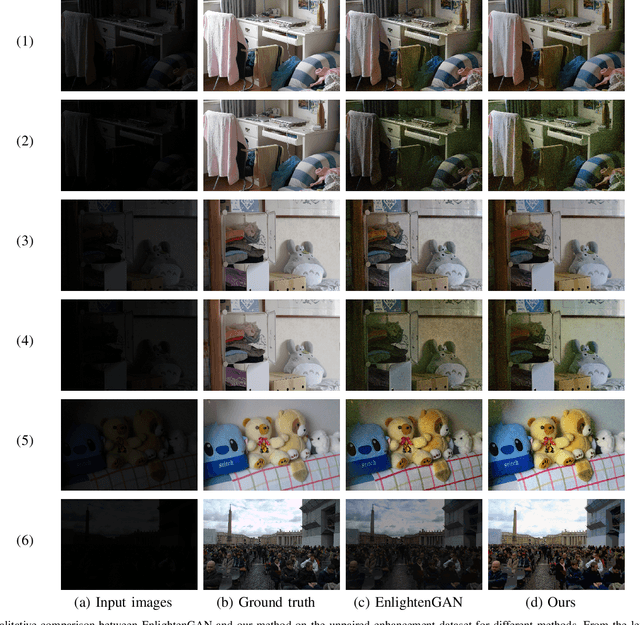

Abstract: As vision-based perception methods are usually built on the normal-light assumption, deploying them in low-light environments raises serious safety issues. Recently, deep-learning-based methods have been proposed to enhance low-light images by penalizing the pixel-wise loss between low-light and normal-light images. However, most of them suffer from the following problems: 1) the need for paired low-light and normal-light images for training; 2) poor performance on dark images; 3) noise amplification. To alleviate these problems, we propose a two-stage unsupervised method that decomposes low-light image enhancement into a pre-enhancement problem and a post-refinement problem. In the first stage, we pre-enhance a low-light image with a conventional Retinex-based method. In the second stage, we use a refinement network learned with adversarial training to further improve image quality. The experimental results show that our method outperforms previous methods on four benchmark datasets. In addition, we show that our method can significantly improve feature point matching and simultaneous localization and mapping in low-light conditions.
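
The abstract names "a conventional Retinex-based method" for the first stage without saying which one. As an assumed example only, the sketch below uses the Retinex model I = R * T with a LIME-style initial illumination estimate (the per-pixel maximum over the RGB channels) and divides each pixel by the gamma-adjusted illumination. The structure-aware smoothing of T that LIME applies is omitted. `pre_enhance`, `gamma`, and `eps` are hypothetical names and defaults, not taken from the paper.

```python
# Hypothetical sketch (not the paper's exact first stage): Retinex-style
# pre-enhancement assuming I = R * T, with a LIME-like initial illumination
# map T = max(r, g, b) and no smoothing refinement of T.


def pre_enhance(image, gamma=0.8, eps=1e-3):
    """Brighten an H x W image of (r, g, b) floats in [0, 1].

    Each pixel is divided by its gamma-adjusted illumination estimate T**gamma;
    gamma < 1 keeps part of the original shading instead of fully normalizing it.
    """
    enhanced = []
    for row in image:
        new_row = []
        for pixel in row:
            t = max(max(pixel), eps)  # illumination estimate, floored to avoid division by zero
            scale = t ** gamma
            new_row.append(tuple(min(1.0, c / scale) for c in pixel))
        enhanced.append(new_row)
    return enhanced


low = [[(0.10, 0.05, 0.02), (0.90, 0.80, 0.70)],
       [(0.00, 0.00, 0.00), (0.30, 0.30, 0.30)]]
print(pre_enhance(low))
```

For the dark pixel (0.1, 0.05, 0.02) with gamma = 0.8, the brightest channel becomes 0.1^0.2 ≈ 0.63 while the channel ratios are kept, and brighter pixels still stay brighter. Because the gain grows as the estimated illumination falls, any sensor noise in dark regions is scaled up by the same factor, which is consistent with the abstract's motivation for a learned refinement stage after pre-enhancement.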
