Xiongwei Zhao

Harbin Institute of Technology

OMUDA: Omni-level Masking for Unsupervised Domain Adaptation in Semantic Segmentation

Dec 13, 2025

Abstract: Unsupervised domain adaptation (UDA) enables semantic segmentation models to generalize from a labeled source domain to an unlabeled target domain. However, existing UDA methods still struggle to bridge the domain gap due to cross-domain contextual ambiguity, inconsistent feature representations, and class-wise pseudo-label noise. To address these challenges, we propose Omni-level Masking for Unsupervised Domain Adaptation (OMUDA), a unified framework that introduces hierarchical masking strategies across distinct representation levels. Specifically, OMUDA comprises: 1) a Context-Aware Masking (CAM) strategy that adaptively distinguishes foreground from background to balance global context and local details; 2) a Feature Distillation Masking (FDM) strategy that enhances robust and consistent feature learning through knowledge transfer from pre-trained models; and 3) a Class Decoupling Masking (CDM) strategy that mitigates the impact of noisy pseudo-labels by explicitly modeling class-wise uncertainty. This hierarchical masking paradigm effectively reduces the domain shift at the contextual, representational, and categorical levels, providing a unified solution beyond existing approaches. Extensive experiments on multiple challenging cross-domain semantic segmentation benchmarks validate the effectiveness of OMUDA. Notably, on the SYNTHIA->Cityscapes and GTA5->Cityscapes tasks, OMUDA can be seamlessly integrated into existing UDA methods and consistently achieves state-of-the-art results with an average improvement of 7%.

An Iterative Task-Driven Framework for Resilient LiDAR Place Recognition in Adverse Weather

Apr 21, 2025

Abstract: LiDAR place recognition (LPR) plays a vital role in autonomous navigation. However, existing LPR methods struggle to maintain robustness under adverse weather conditions such as rain, snow, and fog, where weather-induced noise and point cloud degradation impair LiDAR reliability and perception accuracy. To tackle these challenges, we propose an Iterative Task-Driven Framework (ITDNet), which integrates a LiDAR Data Restoration (LDR) module and a LiDAR Place Recognition (LPR) module through an iterative learning strategy. These modules are jointly trained end-to-end, with alternating optimization to enhance performance. The core rationale of ITDNet is to leverage the LDR module to recover the corrupted point clouds while preserving structural consistency with clean data, thereby improving LPR accuracy in adverse weather. Simultaneously, the LPR task provides feature pseudo-labels to guide the LDR module's training, aligning it more effectively with the LPR task. To achieve this, we first design a task-driven LPR loss and a reconstruction loss to jointly supervise the optimization of the LDR module. Furthermore, for the LDR module, we propose a Dual-Domain Mixer (DDM) block for frequency-spatial feature fusion and a Semantic-Aware Generator (SAG) block for semantic-guided restoration. In addition, for the LPR module, we introduce a Multi-Frequency Transformer (MFT) block and a Wavelet Pyramid NetVLAD (WPN) block to aggregate multi-scale, robust global descriptors. Finally, extensive experiments on the Weather-KITTI, Boreas, and our proposed Weather-Apollo datasets demonstrate that ITDNet outperforms existing LPR methods, achieving state-of-the-art performance in adverse weather. The datasets and code will be made publicly available at https://github.com/Grandzxw/ITDNet.

UGNA-VPR: A Novel Training Paradigm for Visual Place Recognition Based on Uncertainty-Guided NeRF Augmentation

Mar 27, 2025

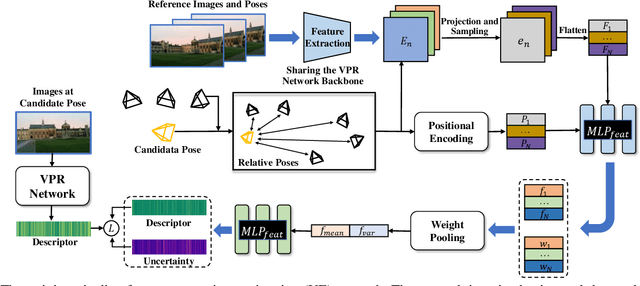

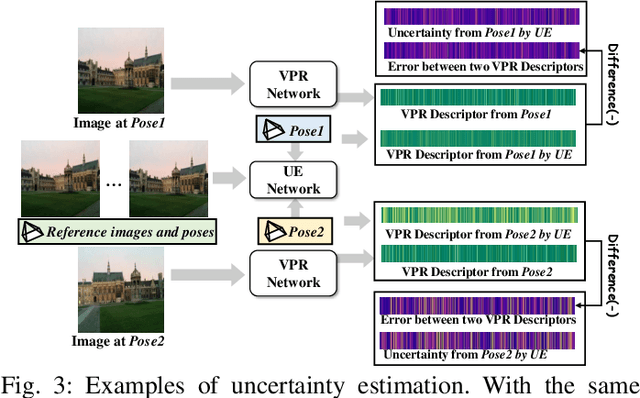

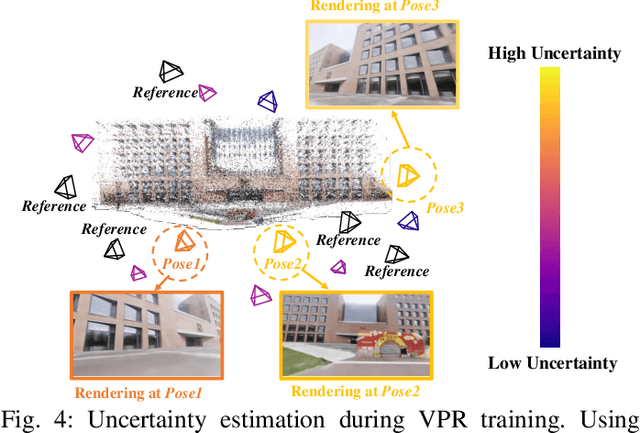

Abstract: Visual place recognition (VPR) is crucial for robots to identify previously visited locations, playing an important role in autonomous navigation in both indoor and outdoor environments. However, most existing VPR datasets are limited to single-viewpoint scenarios, leading to reduced recognition accuracy, particularly in multi-directional driving or feature-sparse scenes. Moreover, obtaining additional data to mitigate these limitations is often expensive. This paper introduces a novel training paradigm to improve the performance of existing VPR networks by enhancing multi-view diversity within current datasets through uncertainty estimation and NeRF-based data augmentation. Specifically, we first train NeRF using the existing VPR dataset. Then, our devised self-supervised uncertainty estimation network identifies places with high uncertainty. The poses of these uncertain places are input into NeRF to generate new synthetic observations for further training of VPR networks. Additionally, we propose an improved storage method for efficient organization of augmented and original training data. We conducted extensive experiments on three datasets and tested three different VPR backbone networks. The results demonstrate that our proposed training paradigm significantly improves VPR performance by fully utilizing existing data, outperforming other training approaches. We further validated the effectiveness of our approach on self-recorded indoor and outdoor datasets, consistently demonstrating superior results. Our dataset and code have been released at https://github.com/nubot-nudt/UGNA-VPR.
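The selection step described in this abstract (rank places by estimated uncertainty, then render new views at the most uncertain poses) can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the `top_k` cutoff, and the `render_view` callable standing in for a trained NeRF are all hypothetical, and the uncertainty scores are assumed to come from some separate estimator.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

Pose = TypeVar("Pose")
View = TypeVar("View")


def select_uncertain_poses(
    poses: Sequence[Pose], uncertainties: Sequence[float], top_k: int
) -> List[Pose]:
    """Return the top_k poses with the highest uncertainty score (hypothetical helper)."""
    if len(poses) != len(uncertainties):
        raise ValueError("poses and uncertainties must have the same length")
    # Sort indices by descending uncertainty; ties keep their original order.
    ranked = sorted(range(len(poses)), key=lambda i: uncertainties[i], reverse=True)
    return [poses[i] for i in ranked[:top_k]]


def augment_with_rendered_views(
    poses: Sequence[Pose],
    uncertainties: Sequence[float],
    render_view: Callable[[Pose], View],
    top_k: int,
) -> List[Tuple[Pose, View]]:
    """Render one synthetic view per selected pose.

    render_view is a placeholder for a trained NeRF renderer (assumption).
    """
    selected = select_uncertain_poses(poses, uncertainties, top_k)
    return [(pose, render_view(pose)) for pose in selected]
```

The returned pose/view pairs would then be appended to the original training set; how they are stored and weighted during training is left open here.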

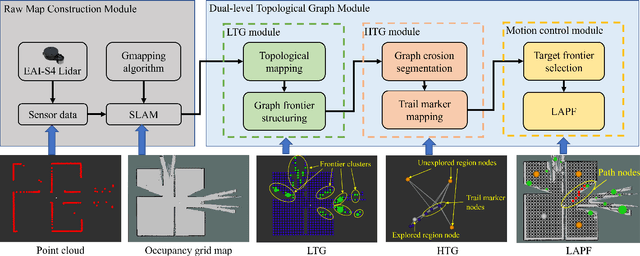

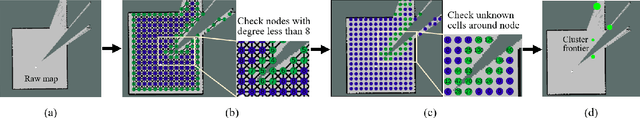

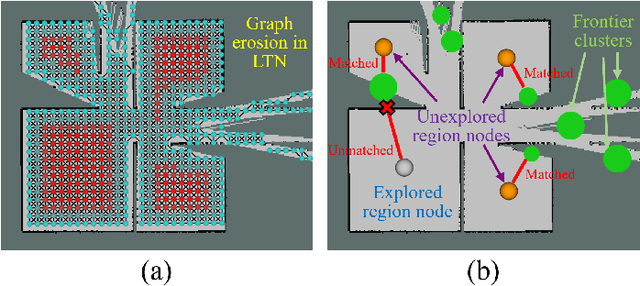

DART: Dual-level Autonomous Robotic Topology for Efficient Exploration in Unknown Environments

Mar 17, 2025

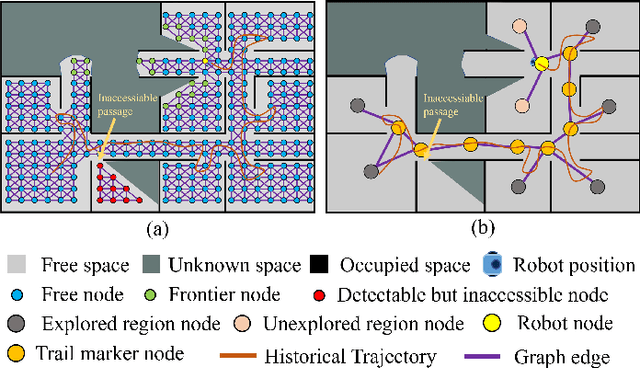

Abstract: Conventional algorithms in autonomous exploration face challenges due to their inability to accurately and efficiently identify the spatial distribution of convex regions in the real-time map. These methods often prioritize navigation toward the nearest or information-rich frontiers -- the boundaries between known and unknown areas -- resulting in incomplete convex region exploration and requiring excessive backtracking to revisit these missed areas. To address these limitations, this paper introduces an innovative dual-level topological analysis approach. First, we introduce a Low-level Topological Graph (LTG), generated through uniform sampling of the original map data, which captures essential geometric and connectivity details. Next, the LTG is transformed into a High-level Topological Graph (HTG), representing the spatial layout and exploration completeness of convex regions, prioritizing the exploration of convex regions that are not fully explored and minimizing unnecessary backtracking. Finally, a novel Local Artificial Potential Field (LAPF) method is employed for motion control, replacing conventional path planning and boosting overall efficiency. Experimental results highlight the effectiveness of our approach. Simulation tests reveal that our framework significantly reduces exploration time and travel distance, outperforming existing methods in both speed and efficiency. Ablation studies confirm the critical role of each framework component. Real-world tests demonstrate the robustness of our method in environments with poor mapping quality, surpassing other approaches in adaptability to mapping inaccuracies and inaccessible areas.
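For readers unfamiliar with potential-field motion control, the following Python sketch shows one step of the classic attractive/repulsive artificial potential field. It is the textbook formulation, not the paper's LAPF variant, whose details the abstract does not give. The gains `k_att` and `k_rep`, the `influence` radius, and the fixed `step` length are illustrative assumptions.

```python
import math
from typing import Iterable, Tuple

Point = Tuple[float, float]


def apf_step(
    position: Point,
    goal: Point,
    obstacles: Iterable[Point],
    k_att: float = 1.0,      # attractive gain (assumed value)
    k_rep: float = 0.5,      # repulsive gain (assumed value)
    influence: float = 2.0,  # obstacles farther than this exert no force
    step: float = 0.1,       # fixed step length along the net force
) -> Point:
    """Move one fixed-length step along the net force of a classic potential field."""
    px, py = position
    # Attractive force grows linearly with the distance to the goal.
    fx = k_att * (goal[0] - px)
    fy = k_att * (goal[1] - py)
    for ox, oy in obstacles:
        dx, dy = px - ox, py - oy
        dist = math.hypot(dx, dy)
        # Repulsion acts only inside the influence radius and points away
        # from the obstacle.
        if 1e-9 < dist < influence:
            magnitude = k_rep * (1.0 / dist - 1.0 / influence) / dist**2
            fx += magnitude * dx / dist
            fy += magnitude * dy / dist
    norm = math.hypot(fx, fy)
    if norm < 1e-9:
        # Zero net force: either the goal is reached or the robot sits in a
        # local minimum, a known weakness of plain potential fields.
        return position
    return (px + step * fx / norm, py + step * fy / norm)
```

Calling `apf_step` repeatedly traces a path toward the goal; the local-minimum case noted in the comment is a known weakness of the plain method.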

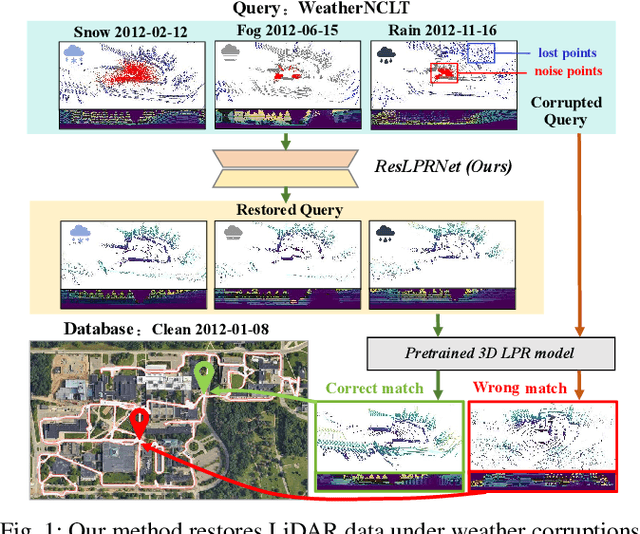

ResLPR: A LiDAR Data Restoration Network and Benchmark for Robust Place Recognition Against Weather Corruptions

Mar 16, 2025

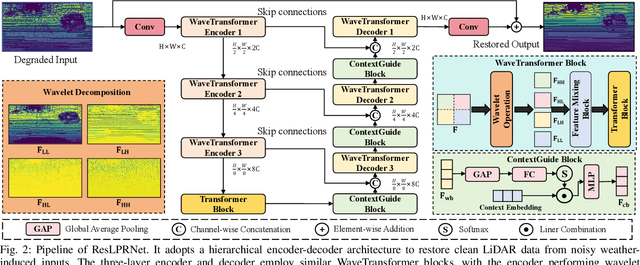

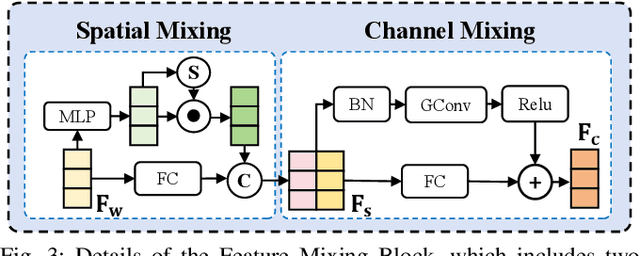

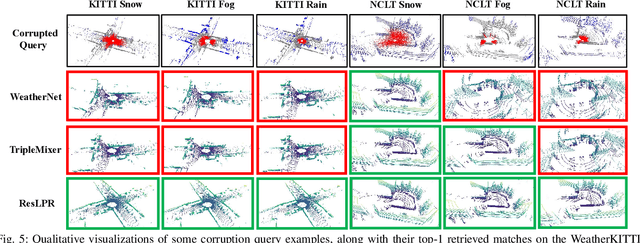

Abstract: LiDAR-based place recognition (LPR) is a key component for autonomous driving, and its resilience to environmental corruption is critical for safety in high-stakes applications. While state-of-the-art (SOTA) LPR methods perform well in clean weather, they still struggle with weather-induced corruption commonly encountered in driving scenarios. To tackle this, we propose ResLPRNet, a novel LiDAR data restoration network that substantially enhances LPR performance under adverse weather by restoring corrupted LiDAR scans using a wavelet transform-based network. ResLPRNet is efficient and lightweight, and can be integrated plug-and-play with pretrained LPR models without substantial additional computational cost. Given the lack of LPR datasets under adverse weather, we introduce ResLPR, a novel benchmark that examines SOTA LPR methods under a wide range of LiDAR distortions induced by severe snow, fog, and rain conditions. Experiments on our proposed WeatherKITTI and WeatherNCLT datasets demonstrate the resilience and notable gains achieved by using our restoration method with multiple LPR approaches in challenging weather scenarios. Our code and benchmark are publicly available here: https://github.com/nubot-nudt/ResLPR.

SplatPose: Geometry-Aware 6-DoF Pose Estimation from Single RGB Image via 3D Gaussian Splatting

Mar 07, 2025

Abstract: 6-DoF pose estimation is a fundamental task in computer vision with wide-ranging applications in augmented reality and robotics. Existing single RGB-based methods often compromise accuracy due to their reliance on initial pose estimates and susceptibility to rotational ambiguity, while approaches requiring depth sensors or multi-view setups incur significant deployment costs. To address these limitations, we introduce SplatPose, a novel framework that synergizes 3D Gaussian Splatting (3DGS) with a dual-branch neural architecture to achieve high-precision pose estimation using only a single RGB image. Central to our approach is the Dual-Attention Ray Scoring Network (DARS-Net), which innovatively decouples positional and angular alignment through geometry-domain attention mechanisms, explicitly modeling directional dependencies to mitigate rotational ambiguity. Additionally, a coarse-to-fine optimization pipeline progressively refines pose estimates by aligning dense 2D features between query images and 3DGS-synthesized views, effectively correcting feature misalignment and depth errors from sparse ray sampling. Experiments on three benchmark datasets demonstrate that SplatPose achieves state-of-the-art 6-DoF pose estimation accuracy in single RGB settings, rivaling approaches that depend on depth or multi-view images.

MQADet: A Plug-and-Play Paradigm for Enhancing Open-Vocabulary Object Detection via Multimodal Question Answering

Feb 26, 2025

Abstract: Open-vocabulary detection (OVD) is the challenging task of detecting and classifying objects from an unrestricted set of categories, including those unseen during training. Existing open-vocabulary detectors are limited by complex visual-textual misalignment and long-tailed category imbalances, leading to suboptimal performance in challenging scenarios. To address these limitations, we introduce MQADet, a universal paradigm for enhancing existing open-vocabulary detectors by leveraging the cross-modal reasoning capabilities of multimodal large language models (MLLMs). MQADet functions as a plug-and-play solution that integrates seamlessly with pre-trained object detectors without substantial additional training costs. Specifically, we design a novel three-stage Multimodal Question Answering (MQA) pipeline to guide the MLLMs to precisely localize complex textual and visual targets while effectively enhancing the focus of existing object detectors on relevant objects. To validate our approach, we present a new benchmark for evaluating our paradigm on four challenging open-vocabulary datasets, employing three state-of-the-art object detectors as baselines. Experimental results demonstrate that our proposed paradigm significantly improves the performance of existing detectors, particularly in unseen complex categories, across diverse and challenging scenarios. To facilitate future research, we will publicly release our code.

TripleMixer: A 3D Point Cloud Denoising Model for Adverse Weather

Aug 25, 2024

Abstract: LiDAR sensors are crucial for providing high-resolution 3D point cloud data in autonomous driving systems, enabling precise environmental perception. However, real-world adverse weather conditions, such as rain, fog, and snow, introduce significant noise and interference, degrading the reliability of LiDAR data and the performance of downstream tasks like semantic segmentation. Existing datasets often suffer from limited weather diversity and small dataset sizes, which restrict their effectiveness in training models. Additionally, current deep learning denoising methods, while effective in certain scenarios, often lack interpretability, making it difficult to understand and validate their decision-making processes. To overcome these limitations, we introduce two large-scale datasets, Weather-KITTI and Weather-NuScenes, which cover three common adverse weather conditions: rain, fog, and snow. These datasets retain the original LiDAR acquisition information and provide point-level semantic labels for rain, fog, and snow. Furthermore, we propose a novel point cloud denoising model, TripleMixer, comprising three mixer layers: the Geometry Mixer Layer, the Frequency Mixer Layer, and the Channel Mixer Layer. These layers are designed to capture geometric spatial information, extract multi-scale frequency information, and enhance the multi-channel feature information of point clouds, respectively. Experiments conducted on the WADS dataset in real-world scenarios, as well as on our proposed Weather-KITTI and Weather-NuScenes datasets, demonstrate that our model achieves state-of-the-art denoising performance. Additionally, our experiments show that integrating the denoising model into existing segmentation frameworks enhances the performance of downstream tasks. The datasets and code will be made publicly available at https://github.com/Grandzxw/TripleMixer.
