Xiaowu Dai

Alan

Uncertainty-Aware Multimodal Learning via Conformal Shapley Intervals

Jan 30, 2026

Abstract:Multimodal learning combines information from multiple data modalities to improve predictive performance. However, modalities often contribute unequally and in a data-dependent way, making it unclear which data modalities are genuinely informative and to what extent their contributions can be trusted. Quantifying modality-level importance together with uncertainty is therefore central to interpretable and reliable multimodal learning. We introduce conformal Shapley intervals, a framework that combines Shapley values with conformal inference to construct uncertainty-aware importance intervals for each modality. Building on these intervals, we propose a modality selection procedure with a provable optimality guarantee: conditional on the observed features, the selected subset of modalities achieves performance close to that of the optimal subset. We demonstrate the effectiveness of our approach on multiple datasets, showing that it provides meaningful uncertainty quantification and strong predictive performance while relying on only a small number of informative modalities.
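To make the Shapley ingredient of the abstract above concrete, here is a minimal sketch of exact Shapley values computed over a small set of modalities. It is not the paper's procedure: the function name `shapley_values`, the modality names, and the toy `value` function are all assumptions made for illustration, and the conformal step that would turn these point values into intervals is not shown.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet, Sequence


def shapley_values(
    players: Sequence[str],
    value: Callable[[FrozenSet[str]], float],
) -> Dict[str, float]:
    """Exact Shapley value of each player (here: modality) for a set function.

    `value(S)` is a hypothetical performance score of a model that uses only
    the modalities in S. Cost grows exponentially in len(players), so this is
    only practical for a handful of modalities.
    """
    n = len(players)
    phi = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for r in range(n):  # r = size of the coalition that p joins
            weight = factorial(r) * factorial(n - r - 1) / factorial(n)
            for subset in combinations(others, r):
                s = frozenset(subset)
                # Marginal contribution of p when added to coalition s.
                total += weight * (value(s | {p}) - value(s))
        phi[p] = total
    return phi


# Toy additive score (assumed): each modality adds a fixed amount.
gains = {"image": 0.3, "text": 0.5, "audio": 0.0}
phi = shapley_values(list(gains), lambda s: sum(gains[m] for m in s))
```

For an additive score like this toy one, each modality's Shapley value equals its own gain, which makes the example easy to check by hand.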

ALIGN: Aligned Delegation with Performance Guarantees for Multi-Agent LLM Reasoning

Jan 28, 2026

Abstract:LLMs often underperform on complex reasoning tasks when relying on a single generation-and-selection pipeline. Inference-time ensemble methods can improve performance by sampling diverse reasoning paths or aggregating multiple candidate answers, but they typically treat candidates independently and provide no formal guarantees that ensembling improves reasoning quality. We propose a novel method, Aligned Delegation for Multi-Agent LLM Reasoning (ALIGN), which formulates LLM reasoning as an aligned delegation game. In ALIGN, a principal delegates a task to multiple agents that generate candidate solutions under designed incentives, and then selects among their outputs to produce a final answer. This formulation induces structured interaction among agents while preserving alignment between agent and principal objectives. We establish theoretical guarantees showing that, under a fair comparison with equal access to candidate solutions, ALIGN provably improves expected performance over single-agent generation. Our analysis accommodates correlated candidate answers and relaxes independence assumptions that are commonly used in prior work. Empirical results across a broad range of LLM reasoning benchmarks consistently demonstrate that ALIGN outperforms strong single-agent and ensemble baselines.

LightAgent: Production-level Open-source Agentic AI Framework

Sep 11, 2025

Abstract:With the rapid advancement of large language models (LLMs), Multi-agent Systems (MAS) have achieved significant progress in various application scenarios. However, substantial challenges remain in designing versatile, robust, and efficient platforms for agent deployment. To address these limitations, we propose LightAgent, a lightweight yet powerful agentic framework, effectively resolving the trade-off between flexibility and simplicity found in existing frameworks. LightAgent integrates core functionalities such as Memory (mem0), Tools, and Tree of Thought (ToT), while maintaining an extremely lightweight structure. As a fully open-source solution, it seamlessly integrates with mainstream chat platforms, enabling developers to easily build self-learning agents. We have released LightAgent at https://github.com/wxai-space/LightAgent

Effect Decomposition of Functional-Output Computer Experiments via Orthogonal Additive Gaussian Processes

Jun 15, 2025

Abstract:Functional ANOVA (FANOVA) is a widely used variance-based sensitivity analysis tool. However, studies on functional-output FANOVA remain relatively scarce, especially for black-box computer experiments, which often involve complex and nonlinear functional-output relationships with unknown data distribution. Conventional approaches often rely on predefined basis functions or parametric structures that lack the flexibility to capture complex nonlinear relationships. Additionally, strong assumptions about the underlying data distributions further limit their ability to achieve a data-driven orthogonal effect decomposition. To address these challenges, this study proposes a functional-output orthogonal additive Gaussian process (FOAGP) to efficiently perform the data-driven orthogonal effect decomposition. By enforcing a conditional orthogonality constraint on the separable prior process, the proposed functional-output orthogonal additive kernel enables data-driven orthogonality without requiring prior distributional assumptions. The FOAGP framework also provides analytical formulations for local Sobol' indices and expected conditional variance sensitivity indices, enabling comprehensive sensitivity analysis by capturing both global and local effect significance. Validation through two simulation studies and a real case study on fuselage shape control confirms the model's effectiveness in orthogonal effect decomposition and variance decomposition, demonstrating its practical value in engineering applications.

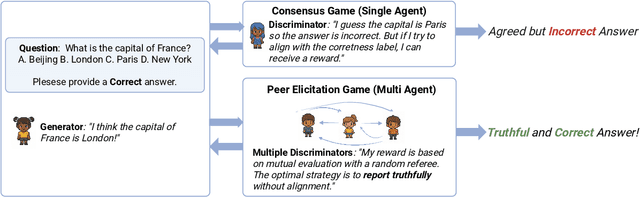

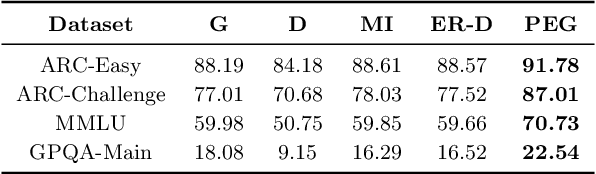

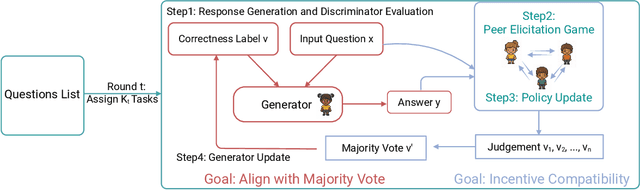

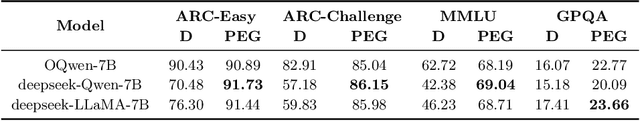

Incentivizing Truthful Language Models via Peer Elicitation Games

May 19, 2025

Abstract:Large Language Models (LLMs) have demonstrated strong generative capabilities but remain prone to inconsistencies and hallucinations. We introduce Peer Elicitation Games (PEG), a training-free, game-theoretic framework for aligning LLMs through a peer elicitation mechanism involving a generator and multiple discriminators instantiated from distinct base models. Discriminators interact in a peer evaluation setting, where rewards are computed using a determinant-based mutual information score that provably incentivizes truthful reporting without requiring ground-truth labels. We establish theoretical guarantees showing that each agent, via online learning, achieves sublinear regret in the sense that their cumulative performance approaches that of the best fixed truthful strategy in hindsight. Moreover, we prove last-iterate convergence to a truthful Nash equilibrium, ensuring that the actual policies used by agents converge to stable and truthful behavior over time. Empirical evaluations across multiple benchmarks demonstrate significant improvements in factual accuracy. These results position PEG as a practical approach for eliciting truthful behavior from LLMs without supervision or fine-tuning.

Learn then Decide: A Learning Approach for Designing Data Marketplaces

Mar 13, 2025

Abstract:As data marketplaces become increasingly central to the digital economy, it is crucial to design efficient pricing mechanisms that optimize revenue while ensuring fair and adaptive pricing. We introduce the Maximum Auction-to-Posted Price (MAPP) mechanism, a novel two-stage approach that first estimates the bidders' value distribution through auctions and then determines the optimal posted price based on the learned distribution. We establish that MAPP is individually rational and incentive-compatible, ensuring truthful bidding while balancing revenue maximization with minimal price discrimination. MAPP achieves a regret of $O_p(n^{-1})$ when incorporating historical bid data, where $n$ is the number of bids in the current round. It outperforms existing methods while imposing weaker distributional assumptions. For sequential dataset sales over $T$ rounds, we propose an online MAPP mechanism that dynamically adjusts pricing across datasets with varying value distributions. Our approach achieves no-regret learning, with the average cumulative regret converging at a rate of $O_p(T^{-1/2}(\log T)^2)$. We validate the effectiveness of MAPP through simulations and real-world data from the FCC AWS-3 spectrum auction.
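The "learn, then price" idea in the abstract above can be illustrated with a small sketch: treat observed bids as samples of buyers' values and pick the posted price that maximizes empirical revenue. This is plain empirical revenue maximization, assumed here for illustration only; it is not the MAPP mechanism itself, and the function name `empirical_posted_price` is hypothetical.

```python
from typing import Sequence


def empirical_posted_price(bids: Sequence[float]) -> float:
    """Return the observed bid p that maximizes p * (share of bids >= p).

    Assumes bids are truthful samples of buyers' values (an assumption of
    this sketch). Only observed bids are tried as candidate prices, since
    the empirical revenue curve peaks at one of them.
    """
    if not bids:
        raise ValueError("need at least one bid")
    sorted_bids = sorted(bids)
    n = len(sorted_bids)
    best_price, best_revenue = sorted_bids[0], -1.0
    for i, price in enumerate(sorted_bids):
        # In sorted order, the n - i bids from index i onward are >= price.
        revenue = price * (n - i) / n
        if revenue > best_revenue:  # strict: ties keep the lower price
            best_price, best_revenue = price, revenue
    return best_price


price = empirical_posted_price([1.0, 5.0, 5.0, 10.0])
```

With the four bids shown, pricing at 5.0 sells to three of four buyers for an average revenue of 3.75, which beats pricing at 1.0 (revenue 1.0) or 10.0 (revenue 2.5).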

Fairness-aware organ exchange and kidney paired donation

Mar 09, 2025

Abstract:The kidney paired donation (KPD) program provides an innovative solution to overcome incompatibility challenges in kidney transplants by matching incompatible donor-patient pairs and facilitating kidney exchanges. To address unequal access to transplant opportunities, there are two widely used fairness criteria: group fairness and individual fairness. However, these criteria do not consider protected patient features, which refer to characteristics legally or ethically recognized as needing protection from discrimination, such as race and gender. Motivated by the calibration principle in machine learning, we introduce a new fairness criterion: the matching outcome should be conditionally independent of the protected feature, given the sensitization level. We integrate this fairness criterion as a constraint within the KPD optimization framework and propose a computationally efficient solution. Theoretically, we analyze the associated price of fairness using random graph models. Empirically, we compare our fairness criterion with group fairness and individual fairness through both simulations and a real-data example.

Dynamic Online Recommendation for Two-Sided Market with Bayesian Incentive Compatibility

Jun 04, 2024

Abstract:Recommender systems play a crucial role in internet economies by connecting users with relevant products or services. However, designing effective recommender systems faces two key challenges: (1) the exploration-exploitation tradeoff in balancing new product exploration against exploiting known preferences, and (2) dynamic incentive compatibility in accounting for users' self-interested behaviors and heterogeneous preferences. This paper formalizes these challenges into a Dynamic Bayesian Incentive-Compatible Recommendation Protocol (DBICRP). To address the DBICRP, we propose a two-stage algorithm (RCB) that integrates incentivized exploration with an efficient offline learning component for exploitation. In the first stage, our algorithm explores available products while maintaining dynamic incentive compatibility to determine sufficient sample sizes. The second stage employs inverse proportional gap sampling integrated with an arbitrary machine learning method to ensure sublinear regret. Theoretically, we prove that RCB achieves $O(\sqrt{KdT})$ regret and satisfies Bayesian incentive compatibility (BIC) under a Gaussian prior assumption. Empirically, we validate RCB's strong incentive gain, sublinear regret, and robustness through simulations and a real-world application on personalized warfarin dosing. Our work provides a principled approach for incentive-aware recommendation in online preference learning settings.

Conformal Online Auction Design

May 11, 2024

Abstract:This paper proposes the conformal online auction design (COAD), a novel mechanism for maximizing revenue in online auctions by quantifying the uncertainty in bidders' values without relying on assumptions about value distributions. COAD incorporates both the bidder and item features and leverages historical data to provide an incentive-compatible mechanism for online auctions. Unlike traditional methods for online auctions, COAD employs a distribution-free, prediction interval-based approach using conformal prediction techniques. This novel approach ensures that the expected revenue from our mechanism can achieve at least a constant fraction of the revenue generated by the optimal mechanism. Additionally, COAD admits the use of a broad array of modern machine-learning methods, including random forests, kernel methods, and deep neural nets, for predicting bidders' values. It ensures revenue performance under any finite sample of historical data. Moreover, COAD introduces bidder-specific reserve prices based on the lower confidence bounds of bidders' valuations, which is different from the uniform reserve prices commonly used in the literature. We validate our theoretical predictions through extensive simulations and a real-data application. All code for using COAD and reproducing results is made available on GitHub.
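As a rough illustration of how a distribution-free lower bound on a bidder's value might be obtained, the sketch below uses generic one-sided split conformal prediction on held-out calibration data. It is an assumption-laden stand-in rather than COAD's actual construction: the function name `conformal_lower_bound`, its arguments, and the idea of using the result directly as a reserve price are all hypothetical.

```python
import math
from typing import Sequence


def conformal_lower_bound(
    pred_cal: Sequence[float],
    val_cal: Sequence[float],
    pred_new: float,
    alpha: float = 0.1,
) -> float:
    """One-sided split conformal lower bound for a new bidder's value.

    pred_cal / val_cal: model predictions and realized values on a held-out
    calibration set (hypothetical inputs). If calibration and new data are
    exchangeable, the true value is >= the returned bound with probability
    at least 1 - alpha, for any predictive model.
    """
    # Score = how much the model over-predicted on each calibration point.
    scores = sorted(p - v for p, v in zip(pred_cal, val_cal))
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))  # finite-sample corrected rank
    if k > n:
        return -math.inf  # too few calibration points for this alpha
    return pred_new - scores[k - 1]


lower = conformal_lower_bound(
    pred_cal=[10.0] * 9,
    val_cal=[9.0, 8.0, 10.0, 11.0, 7.0, 10.0, 9.0, 12.0, 10.0],
    pred_new=10.0,
    alpha=0.5,
)
```

The predictive model is left abstract on purpose: only its predictions enter the calculation, which is what lets this style of bound work with any regression method.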

An ODE Model for Dynamic Matching in Heterogeneous Networks

Mar 08, 2023

Abstract:We study the problem of dynamic matching in heterogeneous networks, where agents are subject to compatibility restrictions and stochastic arrival and departure times. In particular, we consider networks with one type of easy-to-match agents and multiple types of hard-to-match agents, each subject to its own compatibility constraints. Such a setting arises in many real-world applications, including kidney exchange programs and carpooling platforms. We introduce a novel approach to modeling dynamic matching by establishing the ordinary differential equation (ODE) model, which offers a new perspective for evaluating various matching algorithms. We study two algorithms, namely the Greedy and Patient Algorithms, where both algorithms prioritize matching compatible hard-to-match agents over easy-to-match agents in heterogeneous networks. Our results demonstrate the trade-off between the conflicting goals of matching agents quickly and optimally, offering insights into the design of real-world dynamic matching systems. We provide simulations and a real-world case study using data from the Organ Procurement and Transplantation Network to validate theoretical predictions.
