Xiaoming Zhai

Human-AI Collaborative Inductive Thematic Analysis: AI Guided Analysis and Human Interpretive Authority

Jan 17, 2026

Abstract: The increasing use of generative artificial intelligence (GenAI) in qualitative research raises important questions about analytic practice and interpretive authority. This study examines how researchers interact with an Inductive Thematic Analysis GPT (ITA-GPT), a purpose-built AI tool designed to support inductive thematic analysis through structured, semi-automated prompts aligned with reflexive thematic analysis and verbatim coding principles. Guided by a Human-Artificial Intelligence Collaborative Inductive Thematic Analysis (HACITA) framework, the study focuses on analytic process rather than substantive findings. Three experienced qualitative researchers conducted ITA-GPT-assisted analyses of interview transcripts from education research in the Ghanaian teacher education context. The tool supported familiarization, verbatim in vivo coding, gerund-based descriptive coding, and theme development, while enforcing trace-to-text integrity, coverage checks, and auditability. Data sources included interaction logs, AI-generated tables, researcher revisions, deletions, insertions, comments, and reflexive memos. Findings show that ITA-GPT functioned as a procedural scaffold that structured analytic workflow and enhanced transparency. However, interpretive authority remained with human researchers, who exercised judgment through recurrent analytic actions including modification, deletion, rejection, insertion, and commenting. The study demonstrates how inductive thematic analysis is enacted through responsible human-AI collaboration.

Q-realign: Piggybacking Realignment on Quantization for Safe and Efficient LLM Deployment

Jan 13, 2026

Abstract: Public large language models (LLMs) are typically safety-aligned during pretraining, yet task-specific fine-tuning required for deployment often erodes this alignment and introduces safety risks. Existing defenses either embed safety recovery into fine-tuning or rely on fine-tuning-derived priors for post-hoc correction, leaving safety recovery tightly coupled with training and incurring high computational overhead and a complex workflow. To address these challenges, we propose Q-realign, a post-hoc defense method based on post-training quantization, guided by an analysis of representational structure. By reframing quantization as a dual-objective procedure for compression and safety, Q-realign decouples safety alignment from fine-tuning and piggybacks naturally on modern deployment pipelines. Experiments across multiple models and datasets demonstrate that our method substantially reduces unsafe behaviors while preserving task performance, with significant reductions in memory usage and GPU hours. Notably, our approach can recover the safety alignment of a fine-tuned 7B LLM on a single RTX 4090 within 40 minutes. Overall, our work provides a practical, turnkey solution for safety-aware deployment.

AutoSCORE: Enhancing Automated Scoring with Multi-Agent Large Language Models via Structured Component Recognition

Sep 26, 2025

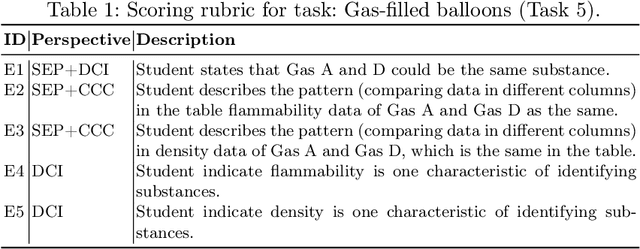

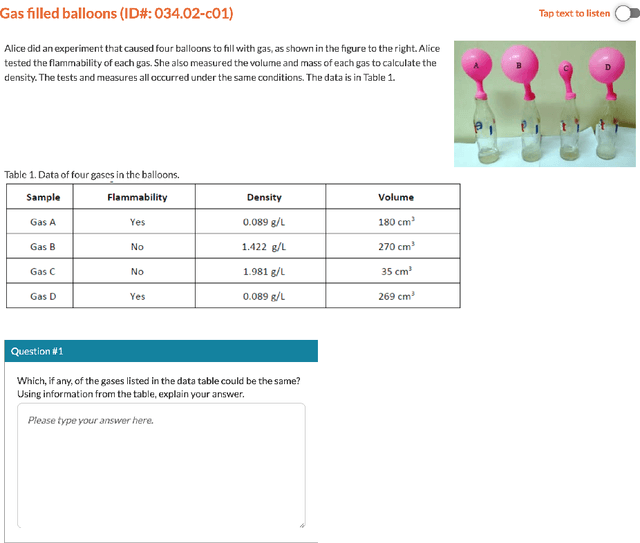

Abstract: Automated scoring plays a crucial role in education by reducing the reliance on human raters, offering scalable and immediate evaluation of student work. While large language models (LLMs) have shown strong potential in this task, their use as end-to-end raters faces challenges such as low accuracy, prompt sensitivity, limited interpretability, and rubric misalignment. These issues hinder the implementation of LLM-based automated scoring in assessment practice. To address these limitations, we propose AutoSCORE, a multi-agent LLM framework enhancing automated scoring via rubric-aligned Structured COmponent REcognition. AutoSCORE uses two agents: the Scoring Rubric Component Extraction Agent first extracts rubric-relevant components from student responses and encodes them into a structured representation, and the Scoring Agent then uses that representation to assign final scores. This design ensures that model reasoning follows a human-like grading process, enhancing interpretability and robustness. We evaluate AutoSCORE on four datasets from the ASAP benchmark, using both proprietary and open-source LLMs (GPT-4o, LLaMA-3.1-8B, and LLaMA-3.1-70B). Across diverse tasks and rubrics, AutoSCORE consistently improves scoring accuracy, human-machine agreement (QWK, correlations), and error metrics (MAE, RMSE) compared to single-agent baselines, with particularly strong benefits on complex, multi-dimensional rubrics, and especially large relative gains on smaller LLMs. These results demonstrate that structured component recognition combined with multi-agent design offers a scalable, reliable, and interpretable solution for automated scoring.
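The abstract above reports human-machine agreement as QWK (quadratic weighted kappa). For readers unfamiliar with the metric, the following is a minimal sketch of the standard QWK computation in plain Python. The function name, argument names, and toy scores are illustrative assumptions and are not taken from the AutoSCORE paper or its code.

```python
def quadratic_weighted_kappa(human, machine, min_score, max_score):
    """Standard quadratic weighted kappa between two lists of integer scores.

    Illustrative sketch only: names and interface are assumptions,
    not the evaluation code used in the paper.
    """
    if len(human) != len(machine) or not human:
        raise ValueError("score lists must be non-empty and of equal length")

    n = max_score - min_score + 1
    # observed[i][j] counts responses scored i by the human and j by the machine
    observed = [[0] * n for _ in range(n)]
    for h, m in zip(human, machine):
        observed[h - min_score][m - min_score] += 1

    total = len(human)
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(observed[i][j] for i in range(n)) for j in range(n)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(n):
        for j in range(n):
            # Quadratic penalty; the usual 1/(n-1)^2 factor cancels in the ratio
            weight = (i - j) ** 2
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * row_totals[i] * col_totals[j] / total

    if weighted_expected == 0:
        # Both raters used a single identical score; treated here as perfect agreement
        return 1.0
    return 1.0 - weighted_observed / weighted_expected


# Hypothetical toy scores on a 0-3 rubric
human_scores = [0, 1, 2, 3, 2]
machine_scores = [0, 1, 2, 2, 2]
qwk = quadratic_weighted_kappa(human_scores, machine_scores, 0, 3)
```

A value of 1 indicates perfect agreement, 0 indicates agreement no better than chance, and negative values indicate systematic disagreement.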

Rethinking the Potential of Layer Freezing for Efficient DNN Training

Aug 20, 2025

Abstract: With the growing size of deep neural networks and datasets, the computational costs of training have significantly increased. The layer-freezing technique has recently attracted great attention as a promising method to effectively reduce the cost of network training. However, in traditional layer-freezing methods, frozen layers are still required for forward propagation to generate feature maps for unfrozen layers, limiting the reduction of computation costs. To overcome this, prior works proposed a hypothetical solution, which caches feature maps from frozen layers as a new dataset, allowing later layers to train directly on stored feature maps. While this approach appears to be straightforward, it presents several major challenges that are largely overlooked by prior literature, such as how to effectively apply augmentations to feature maps and the substantial storage overhead introduced. If these challenges are not addressed, the performance of the caching method will be severely degraded, and the method may even become infeasible. This paper is the first to comprehensively explore these challenges and provides a systematic solution. To improve training accuracy, we propose similarity-aware channel augmentation, which caches channels with high augmentation sensitivity at minimal additional storage cost. To mitigate storage overhead, we incorporate lossy data compression into layer freezing and design a progressive compression strategy, which increases compression rates as more layers are frozen, effectively reducing storage costs. Finally, our solution achieves significant reductions in training cost while maintaining model accuracy, with a minor time overhead. Additionally, we conduct a comprehensive evaluation of freezing and compression strategies, providing insights into optimizing their application for efficient DNN training.

Using Large Language Models to Assess Teachers' Pedagogical Content Knowledge

May 25, 2025

Abstract: Assessing teachers' pedagogical content knowledge (PCK) through performance-based tasks is both time- and effort-intensive. While large language models (LLMs) offer new opportunities for efficient automatic scoring, little is known about whether LLMs introduce construct-irrelevant variance (CIV) in ways similar to or different from traditional machine learning (ML) and human raters. This study examines three sources of CIV (scenario variability, rater severity, and rater sensitivity to scenario) in the context of video-based constructed-response tasks targeting two PCK sub-constructs: analyzing student thinking and evaluating teacher responsiveness. Using generalized linear mixed models (GLMMs), we compared variance components and rater-level scoring patterns across three scoring sources: human raters, supervised ML, and LLM. Results indicate that scenario-level variance was minimal across tasks, while rater-related factors contributed substantially to CIV, especially in the more interpretive Task II. The ML model was the most severe and least sensitive rater, whereas the LLM was the most lenient. These findings suggest that the LLM contributes to scoring efficiency while also introducing CIV as human raters do, yet with varying levels of contribution compared to supervised ML. Implications for rater training, automated scoring design, and future research on model interpretability are discussed.

Artificial Intelligence Bias on English Language Learners in Automatic Scoring

May 15, 2025

Abstract: This study investigated potential scoring biases and disparities toward English Language Learners (ELLs) when using automatic scoring systems for middle school students' written responses to science assessments. We specifically focused on examining how unbalanced training data with ELLs contributes to scoring bias and disparities. We fine-tuned BERT with four datasets: responses from (1) ELLs, (2) non-ELLs, (3) a mixed dataset reflecting the real-world proportion of ELLs and non-ELLs (unbalanced), and (4) a balanced mixed dataset with equal representation of both groups. The study analyzed 21 assessment items: 10 items with about 30,000 ELL responses, five items with about 1,000 ELL responses, and six items with about 200 ELL responses. Scoring accuracy (Acc) was calculated and compared to identify bias using Friedman tests. We measured the Mean Score Gaps (MSGs) between ELLs and non-ELLs and then calculated the differences between the MSGs produced by human scoring and by the AI models to identify scoring disparities. We found no AI bias or distorted disparities between ELLs and non-ELLs when the training dataset was large enough (ELL = 30,000 and ELL = 1,000), but concerns could arise when the sample size is limited (ELL = 200).
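The disparity measure described in this abstract compares the Mean Score Gap (MSG) between ELLs and non-ELLs under human scoring with the same gap under AI scoring. A minimal sketch of that arithmetic follows. The function names, the direction of each subtraction (non-ELL minus ELL, AI minus human), and the toy scores are all assumptions made for illustration; the abstract does not specify them.

```python
from statistics import mean


def mean_score_gap(non_ell_scores, ell_scores):
    """Mean score of non-ELLs minus mean score of ELLs (sign convention assumed)."""
    return mean(non_ell_scores) - mean(ell_scores)


def msg_difference(human_non_ell, human_ell, ai_non_ell, ai_ell):
    """AI-scored MSG minus human-scored MSG (direction assumed).

    A value near zero suggests the AI scorer reproduces the gap seen in
    human scores; a larger magnitude suggests the gap is distorted.
    """
    human_gap = mean_score_gap(human_non_ell, human_ell)
    ai_gap = mean_score_gap(ai_non_ell, ai_ell)
    return ai_gap - human_gap


# Hypothetical toy scores for a single item
human_non_ell = [2, 2, 3, 3]
human_ell = [1, 2, 2, 3]
ai_non_ell = [2, 3, 3, 3]
ai_ell = [1, 2, 2, 2]

disparity = msg_difference(human_non_ell, human_ell, ai_non_ell, ai_ell)
```

In this toy case the AI scores widen the gap relative to the human scores, which is the kind of distortion the study tests for.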

Understanding University Students' Use of Generative AI: The Roles of Demographics and Personality Traits

May 03, 2025

Abstract: The use of generative AI (GAI) among university students is rapidly increasing, yet empirical research on students' GAI use and the factors influencing it remains limited. To address this gap, we surveyed 363 undergraduate and graduate students in the United States, examining their GAI usage and how it relates to demographic variables and personality traits based on the Big Five model (i.e., extraversion, agreeableness, conscientiousness, emotional stability, and intellect/imagination). Our findings reveal: (a) Students in higher academic years are more inclined to use GAI and prefer it over traditional resources. (b) Non-native English speakers use and adopt GAI more readily than native speakers. (c) Compared to White students, Asian students report higher GAI usage, perceive greater academic benefits, and express a stronger preference for it. Similarly, Black students report a more positive impact of GAI on their academic performance. Personality traits also play a significant role in shaping perceptions and usage of GAI. After controlling for demographic factors, we found that personality still significantly predicts GAI use and attitudes: (a) Students with higher conscientiousness use GAI less. (b) Students who are higher in agreeableness perceive a less positive impact of GAI on academic performance and express more ethical concerns about using it for academic work. (c) Students with higher emotional stability report a more positive impact of GAI on learning and fewer concerns about its academic use. (d) Students with higher extraversion show a stronger preference for GAI over traditional resources. (e) Students with higher intellect/imagination tend to prefer traditional resources. These insights highlight the need for universities to provide personalized guidance to ensure students use GAI effectively, ethically, and equitably in their academic pursuits.

Knowledge Distillation and Dataset Distillation of Large Language Models: Emerging Trends, Challenges, and Future Directions

Apr 20, 2025

Abstract: The exponential growth of Large Language Models (LLMs) continues to highlight the need for efficient strategies to meet ever-expanding computational and data demands. This survey provides a comprehensive analysis of two complementary paradigms: Knowledge Distillation (KD) and Dataset Distillation (DD), both aimed at compressing LLMs while preserving their advanced reasoning capabilities and linguistic diversity. We first examine key methodologies in KD, such as task-specific alignment, rationale-based training, and multi-teacher frameworks, alongside DD techniques that synthesize compact, high-impact datasets through optimization-based gradient matching, latent space regularization, and generative synthesis. Building on these foundations, we explore how integrating KD and DD can produce more effective and scalable compression strategies. Together, these approaches address persistent challenges in model scalability, architectural heterogeneity, and the preservation of emergent LLM abilities. We further highlight applications across domains such as healthcare and education, where distillation enables efficient deployment without sacrificing performance. Despite substantial progress, open challenges remain in preserving emergent reasoning and linguistic diversity, enabling efficient adaptation to continually evolving teacher models and datasets, and establishing comprehensive evaluation protocols. By synthesizing methodological innovations, theoretical foundations, and practical insights, our survey charts a path toward sustainable, resource-efficient LLMs through the tighter integration of KD and DD principles.

Efficient Multi-Task Inferencing: Model Merging with Gromov-Wasserstein Feature Alignment

Mar 12, 2025

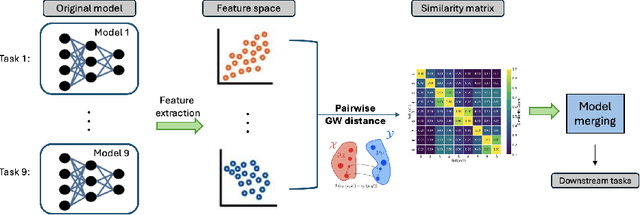

Abstract: Automatic scoring of student responses enhances efficiency in education, but deploying a separate neural network for each task increases storage demands, maintenance efforts, and redundant computations. To address these challenges, this paper introduces the Gromov-Wasserstein Scoring Model Merging (GW-SMM) method, which merges models based on feature distribution similarities measured via the Gromov-Wasserstein distance. Our approach begins by extracting features from student responses using individual models, capturing both item-specific context and unique learned representations. The Gromov-Wasserstein distance then quantifies the similarity between these feature distributions, identifying the most compatible models for merging. Models exhibiting the smallest pairwise distances, typically in pairs or trios, are merged by combining only the shared layers preceding the classification head. This strategy results in a unified feature extractor while preserving separate classification heads for item-specific scoring. We validated our approach against human expert knowledge and a GPT-o1-based merging method. GW-SMM consistently outperformed both, achieving a higher micro F1 score, macro F1 score, exact match accuracy, and per-label accuracy. The improvements in micro F1 and per-label accuracy were statistically significant compared to GPT-o1-based merging (p=0.04, p=0.01). Additionally, GW-SMM reduced storage requirements by half with little loss in accuracy, demonstrating its computational efficiency alongside reliable scoring performance.

Interpreting and Steering LLMs with Mutual Information-based Explanations on Sparse Autoencoders

Feb 21, 2025

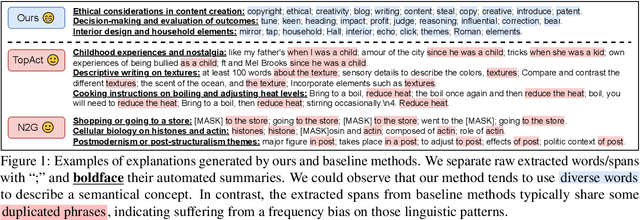

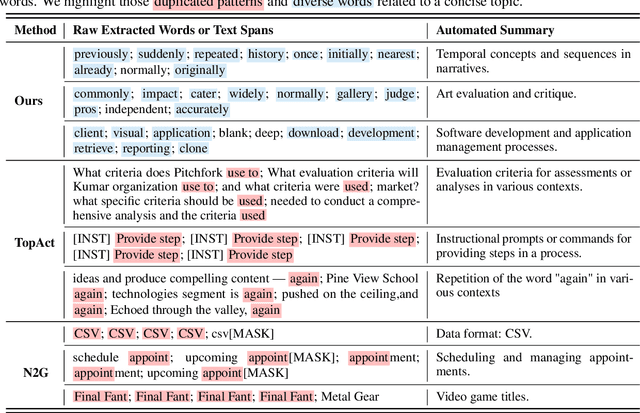

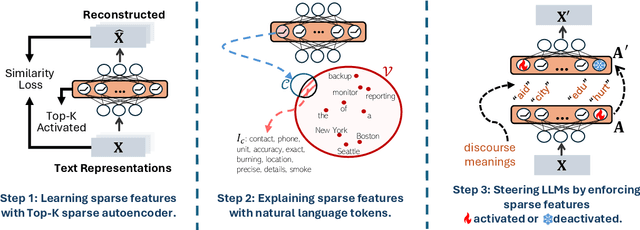

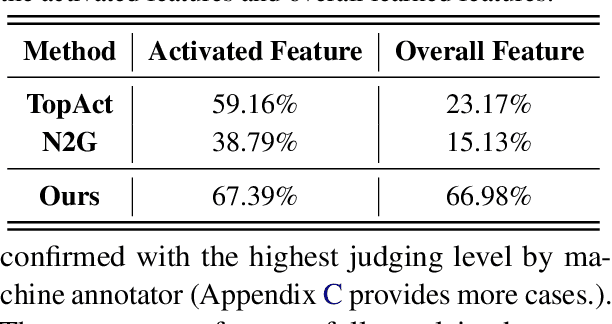

Abstract: Large language models (LLMs) excel at handling human queries, but they can occasionally generate flawed or unexpected responses. Examining their internal states is crucial for understanding their successes, diagnosing their failures, and refining their capabilities. Although sparse autoencoders (SAEs) have shown promise for interpreting LLM internal representations, limited research has explored how to better explain SAE features, i.e., how to understand the semantic meaning of the features learned by an SAE. Our theoretical analysis reveals that existing explanation methods suffer from a frequency bias, emphasizing linguistic patterns over semantic concepts, although the latter are more critical for steering LLM behaviors. To address this, we propose using a fixed vocabulary set for feature interpretations and design a mutual information-based objective, aiming to better capture the semantic meaning behind these features. We further propose two runtime steering strategies that adjust the learned feature activations based on their corresponding explanations. Empirical results show that, compared to baselines, our method provides more discourse-level explanations and effectively steers LLM behaviors to defend against jailbreak attacks. These findings highlight the value of explanations for steering LLM behaviors in downstream applications. We will release our code and data once accepted.
