Xiaoming Yuan

Stability and Generalization of Nonconvex Optimization with Heavy-Tailed Noise

Jan 27, 2026

Abstract:The empirical evidence indicates that stochastic optimization with heavy-tailed gradient noise is more appropriate to characterize the training of machine learning models than that with standard bounded gradient variance noise. Most existing works on this phenomenon focus on the convergence of optimization errors, while the analysis for generalization bounds under the heavy-tailed gradient noise remains limited. In this paper, we develop a general framework for establishing generalization bounds under heavy-tailed noise. Specifically, we introduce a truncation argument to achieve the generalization error bound based on the algorithmic stability under the assumption of bounded $p$th centered moment with $p\in(1,2]$. Building on this framework, we further provide the stability and generalization analysis for several popular stochastic algorithms under heavy-tailed noise, including clipped and normalized stochastic gradient descent, as well as their mini-batch and momentum variants.
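For readers unfamiliar with the two update rules named in the abstract, the following is a minimal sketch of one clipped SGD step and one normalized SGD step in their textbook forms. The function names, the clipping threshold `tau`, and the stabilizer `eps` are illustrative assumptions; the paper's exact variants (mini-batch, momentum) and parameter choices are not reproduced here.

```python
import math


def l2_norm(g):
    """Euclidean norm of a gradient given as a list of floats."""
    return math.sqrt(sum(x * x for x in g))


def clipped_sgd_step(w, g, lr, tau):
    """One clipped-SGD step: rescale g so that its norm is at most tau.

    tau is a hypothetical clipping threshold chosen by the user.
    """
    norm = l2_norm(g)
    scale = min(1.0, tau / norm) if norm > 0 else 1.0
    return [wi - lr * scale * gi for wi, gi in zip(w, g)]


def normalized_sgd_step(w, g, lr, eps=1e-12):
    """One normalized-SGD step: move a distance of about lr along -g/||g||.

    eps is an assumed small constant that avoids division by zero.
    """
    norm = l2_norm(g)
    return [wi - lr * gi / (norm + eps) for wi, gi in zip(w, g)]
```

Both rules bound the length of each update regardless of how large a single stochastic gradient is, which is why they are natural candidates when the gradient noise is heavy-tailed.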

BestServe: Serving Strategies with Optimal Goodput in Collocation and Disaggregation Architectures

Jun 06, 2025

Abstract:Serving large language models (LLMs) to millions of users requires efficient resource allocation and parallelism strategies. Finding such a strategy is a labor-intensive trial-and-error process. We present BestServe, a novel framework for ranking serving strategies by estimating goodput under various operating scenarios. Supporting both collocated and disaggregated architectures, BestServe leverages an inference simulator built on an adapted roofline model and CPU-GPU dispatch dynamics. Our framework determines the optimal strategy in minutes on a single standard CPU, eliminating the need for costly benchmarking, while achieving predictions within a $20\%$ error margin. Its lightweight design and strong extensibility make it practical for rapid deployment planning.
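As background for the roofline model mentioned in the abstract, here is a minimal sketch of the classic (unadapted) roofline estimate: an operation takes at least as long as the slower of its compute time and its memory-traffic time. The function name and all parameters are illustrative assumptions; BestServe's adapted model and its CPU-GPU dispatch dynamics are not described in the abstract and are not reproduced here.

```python
def roofline_time(flops, bytes_moved, peak_flops, mem_bandwidth):
    """Classic roofline lower bound on execution time, in seconds.

    flops         -- floating-point operations the kernel performs
    bytes_moved   -- bytes read from and written to device memory
    peak_flops    -- device peak throughput in FLOP/s (assumed known)
    mem_bandwidth -- device memory bandwidth in bytes/s (assumed known)
    """
    compute_time = flops / peak_flops
    memory_time = bytes_moved / mem_bandwidth
    # The kernel is compute-bound or memory-bound, whichever is slower.
    return max(compute_time, memory_time)
```

A kernel with high arithmetic intensity (many FLOPs per byte) is limited by `peak_flops`, while one with low intensity is limited by `mem_bandwidth`.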

SPAP: Structured Pruning via Alternating Optimization and Penalty Methods

May 06, 2025

Abstract:The deployment of large language models (LLMs) is often constrained by their substantial computational and memory demands. While structured pruning presents a viable approach by eliminating entire network components, existing methods suffer from performance degradation, reliance on heuristic metrics, or expensive finetuning. To address these challenges, we propose SPAP (Structured Pruning via Alternating Optimization and Penalty Methods), a novel and efficient structured pruning framework for LLMs grounded in optimization theory. SPAP formulates the pruning problem through a mixed-integer optimization model, employs a penalty method that effectively makes pruning decisions to minimize pruning errors, and introduces an alternating minimization algorithm tailored to the splittable problem structure for efficient weight updates and performance recovery. Extensive experiments on OPT, LLaMA-3/3.1/3.2, and Qwen2.5 models demonstrate SPAP's superiority over state-of-the-art methods, delivering linear inference speedups (1.29$\times$ at 30% sparsity) and proportional memory reductions. Our work offers a practical, optimization-driven solution for pruning LLMs while preserving model performance.

FASP: Fast and Accurate Structured Pruning of Large Language Models

Jan 16, 2025

Abstract:The rapid increase in the size of large language models (LLMs) has significantly escalated their computational and memory demands, posing challenges for efficient deployment, especially on resource-constrained devices. Structured pruning has emerged as an effective model compression method that can reduce these demands while preserving performance. In this paper, we introduce FASP (Fast and Accurate Structured Pruning), a novel structured pruning framework for LLMs that emphasizes both speed and accuracy. FASP employs a distinctive pruning structure that interlinks sequential layers, allowing for the removal of columns in one layer while simultaneously eliminating corresponding rows in the preceding layer without incurring additional performance loss. The pruning metric, inspired by Wanda, is computationally efficient and effectively selects components to prune. Additionally, we propose a restoration mechanism that enhances model fidelity by adjusting the remaining weights post-pruning. We evaluate FASP on the OPT and LLaMA model families, demonstrating superior performance in terms of perplexity and accuracy on downstream tasks compared to state-of-the-art methods. Our approach achieves significant speed-ups, pruning models such as OPT-125M in 17 seconds and LLaMA-30B in 15 minutes on a single NVIDIA RTX 4090 GPU, making it a highly practical solution for optimizing LLMs.

A Convex-optimization-based Layer-wise Post-training Pruner for Large Language Models

Aug 07, 2024

Abstract:Pruning is a critical strategy for compressing trained large language models (LLMs), aiming at substantial memory conservation and computational acceleration without compromising performance. However, existing pruning methods often necessitate inefficient retraining for billion-scale LLMs or rely on heuristic methods such as the optimal brain surgeon framework, which degrade performance. In this paper, we introduce FISTAPruner, the first post-training pruner based on convex optimization models and algorithms. Specifically, we propose a convex optimization model incorporating $\ell_1$ norm to induce sparsity and utilize the FISTA solver for optimization. FISTAPruner incorporates an intra-layer cumulative error correction mechanism and supports parallel pruning. We comprehensively evaluate FISTAPruner on models such as OPT, LLaMA, LLaMA-2, and LLaMA-3 with 125M to 70B parameters under unstructured and 2:4 semi-structured sparsity, demonstrating superior performance over existing state-of-the-art methods across various language benchmarks.
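To illustrate the solver named in the abstract, the following is a minimal sketch of FISTA applied to a generic $\ell_1$-regularized least-squares problem, $\min_x \tfrac{1}{2}\|Ax-b\|_2^2 + \lambda\|x\|_1$. This problem form, the function names, and the fixed iteration count are assumptions made for illustration; FISTAPruner's actual layer-wise model and its error-correction mechanism are not specified in the abstract and are not reproduced here.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1 (elementwise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def fista_lasso(A, b, lam, n_iter=200):
    """FISTA for min_x 0.5 * ||A x - b||^2 + lam * ||x||_1.

    Assumes A is a nonzero 2-D array so the step size 1/L is finite.
    """
    L = np.linalg.norm(A, 2) ** 2  # Lipschitz constant of the gradient
    x = np.zeros(A.shape[1])
    y = x.copy()
    t = 1.0
    for _ in range(n_iter):
        grad = A.T @ (A @ y - b)                       # gradient of smooth part at y
        x_new = soft_threshold(y - grad / L, lam / L)  # proximal gradient step
        t_new = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        y = x_new + ((t - 1.0) / t_new) * (x_new - x)  # momentum extrapolation
        x, t = x_new, t_new
    return x
```

The soft-thresholding step sets small entries exactly to zero, which is how the $\ell_1$ term induces sparsity in the solution.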

Adapprox: Adaptive Approximation in Adam Optimization via Randomized Low-Rank Matrices

Mar 22, 2024

Abstract:As deep learning models exponentially increase in size, optimizers such as Adam encounter significant memory consumption challenges due to the storage of first and second moment data. Current memory-efficient methods like Adafactor and CAME often compromise accuracy with their matrix factorization techniques. Addressing this, we introduce Adapprox, a novel approach that employs randomized low-rank matrix approximation for a more effective and accurate approximation of Adam's second moment. Adapprox features an adaptive rank selection mechanism, finely balancing accuracy and memory efficiency, and includes an optional cosine similarity guidance strategy to enhance stability and expedite convergence. In GPT-2 training and downstream tasks, Adapprox surpasses AdamW by achieving 34.5% to 49.9% and 33.8% to 49.9% memory savings for the 117M and 345M models, respectively, with the first moment enabled, and further increases these savings without the first moment. Besides, it enhances convergence speed and improves downstream task performance relative to its counterparts.

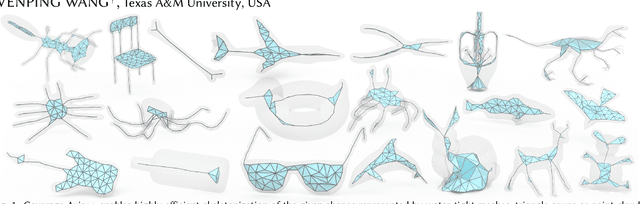

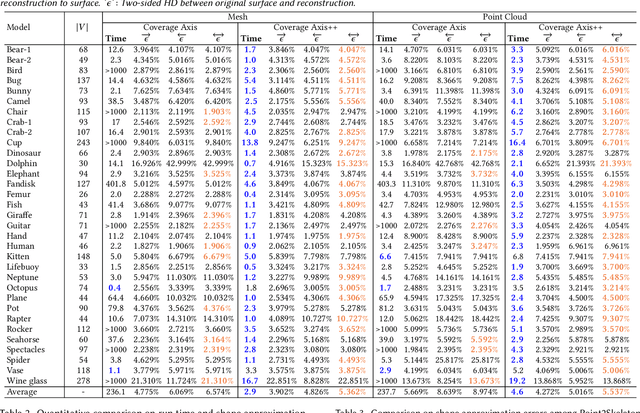

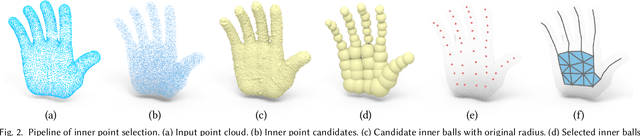

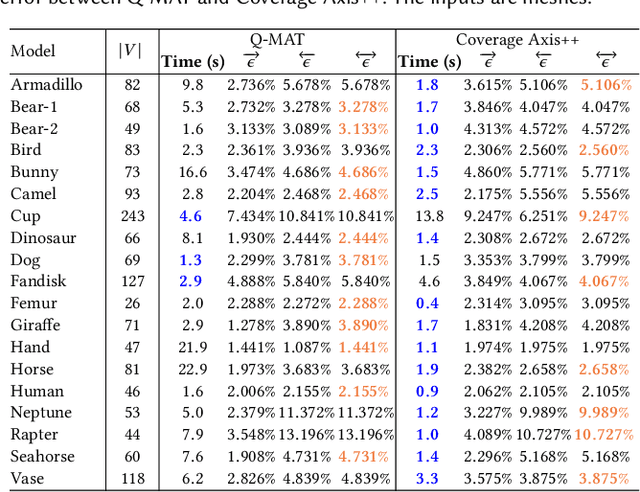

Coverage Axis++: Efficient Inner Point Selection for 3D Shape Skeletonization

Feb 01, 2024

Abstract:We introduce Coverage Axis++, a novel and efficient approach to 3D shape skeletonization. The current state-of-the-art approaches for this task often rely on the watertightness of the input or suffer from substantial computational costs, thereby limiting their practicality. To address this challenge, Coverage Axis++ proposes a heuristic algorithm to select skeletal points, offering a high-accuracy approximation of the Medial Axis Transform (MAT) while significantly mitigating computational intensity for various shape representations. We introduce a simple yet effective strategy that considers both shape coverage and uniformity to derive skeletal points. The selection procedure enforces consistency with the shape structure while favoring the dominant medial balls, which thus introduces a compact underlying shape representation in terms of MAT. As a result, Coverage Axis++ allows for skeletonization for various shape representations (e.g., water-tight meshes, triangle soups, point clouds), specification of the number of skeletal points, few hyperparameters, and highly efficient computation with improved reconstruction accuracy. Extensive experiments across a wide range of 3D shapes validate the efficiency and effectiveness of Coverage Axis++. The code will be publicly available once the paper is published.

The Hard-Constraint PINNs for Interface Optimal Control Problems

Aug 13, 2023

Abstract:We show that the physics-informed neural networks (PINNs), in combination with some recently developed discontinuity capturing neural networks, can be applied to solve optimal control problems subject to partial differential equations (PDEs) with interfaces and some control constraints. The resulting algorithm is mesh-free and scalable to different PDEs, and it ensures the control constraints rigorously. Since the boundary and interface conditions, as well as the PDEs, are all treated as soft constraints by lumping them into a weighted loss function, it is necessary to learn them simultaneously and there is no guarantee that the boundary and interface conditions can be satisfied exactly. This immediately causes difficulties in tuning the weights in the corresponding loss function and training the neural networks. To tackle these difficulties and guarantee the numerical accuracy, we propose to impose the boundary and interface conditions as hard constraints in PINNs by developing a novel neural network architecture. The resulting hard-constraint PINNs approach guarantees that both the boundary and interface conditions can be satisfied exactly and they are decoupled from the learning of the PDEs. Its efficiency is promisingly validated by some elliptic and parabolic interface optimal control problems.

Accelerated primal-dual methods with enlarged step sizes and operator learning for nonsmooth optimal control problems

Jul 01, 2023

Abstract:We consider a general class of nonsmooth optimal control problems with partial differential equation (PDE) constraints, which are very challenging due to their nonsmooth objective functionals and the resulting high-dimensional and ill-conditioned systems after discretization. We focus on the application of a primal-dual method, with which different types of variables can be treated individually and thus its main computation at each iteration only requires solving two PDEs. Our target is to accelerate the primal-dual method with either larger step sizes or operator learning techniques. For the accelerated primal-dual method with larger step sizes, its convergence can still be proved rigorously while it numerically accelerates the original primal-dual method in a simple and universal way. For the operator learning acceleration, we construct deep neural network surrogate models for the involved PDEs. Once a neural operator is learned, solving a PDE requires only a forward pass of the neural network, and the computational cost is thus substantially reduced. The accelerated primal-dual method with operator learning is mesh-free, numerically efficient, and scalable to different types of PDEs. The acceleration effectiveness of these two techniques is promisingly validated by some preliminary numerical results.

Towards Tailored Models on Private AIoT Devices: Federated Direct Neural Architecture Search

Feb 23, 2022

Abstract:Neural networks often encounter various stringent resource constraints when deployed on edge devices. To tackle these problems with less human effort, automated machine learning has become popular for finding neural architectures that fit diverse Artificial Intelligence of Things (AIoT) scenarios. Recently, to prevent the leakage of private information while enabling automated machine intelligence, there is an emerging trend to integrate federated learning and neural architecture search (NAS). Although promising as it may seem, the coupling of difficulties from both tenets makes the algorithm development quite challenging. In particular, how to efficiently search the optimal neural architecture directly from massive non-independent and identically distributed (non-IID) data among AIoT devices in a federated manner is a hard nut to crack. In this paper, to tackle this challenge, by leveraging the advances in ProxylessNAS, we propose a Federated Direct Neural Architecture Search (FDNAS) framework that allows for hardware-friendly NAS from non-IID data across devices. To further adapt to both various data distributions and different types of devices with heterogeneous embedded hardware platforms, inspired by meta-learning, a Cluster Federated Direct Neural Architecture Search (CFDNAS) framework is proposed to achieve device-aware NAS, in the sense that each device can learn a tailored deep learning model for its particular data distribution and hardware constraint. Extensive experiments on non-IID datasets have shown the state-of-the-art accuracy-efficiency trade-offs achieved by the proposed solution in the presence of both data and device heterogeneity.
