Xiao-Yong Wei

MarkovScale: Towards Optimal Sequential Scaling at Inference Time

Feb 01, 2026

Abstract:Sequential scaling is a prominent inference-time scaling paradigm, yet its performance improvements are typically modest and not well understood, largely due to the prevalence of heuristic, non-principled approaches that obscure clear optimality bounds. To address this, we propose a principled framework that models sequential scaling as a two-state Markov process. This approach reveals the underlying properties of sequential scaling and yields closed-form solutions for essential aspects, such as the specific conditions under which accuracy is improved and the theoretical upper, neutral, and lower performance bounds. Leveraging this formulation, we develop MarkovScale, a practical system that applies these optimality criteria to achieve a theoretically grounded balance between accuracy and efficiency. Comprehensive experiments across 3 backbone LLMs, 5 benchmarks, and over 20 configurations show that MarkovScale consistently outperforms state-of-the-art parallel and sequential scaling methods, representing a significant step toward optimal and resource-efficient inference in LLMs. The source code will be open upon acceptance at https://open-upon-acceptance.
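As a rough illustration of what a two-state Markov view of sequential scaling can look like, the sketch below tracks the probability that an answer is correct across refinement rounds. It is not taken from the paper: the state names, the transition probabilities `a` (incorrect to correct) and `b` (correct to incorrect), and both function names are assumptions, and the closed form is simply the textbook solution for a generic two-state chain.

```python
def accuracy_after(p0: float, a: float, b: float, n: int) -> float:
    """Closed-form P(correct) after n rounds of a generic two-state chain.

    p0: initial probability of a correct answer (assumed parameter)
    a:  P(incorrect -> correct) per round (assumed parameter)
    b:  P(correct -> incorrect) per round (assumed parameter)
    """
    if a + b == 0:
        return p0  # no transitions at all, accuracy never changes
    stationary = a / (a + b)  # long-run accuracy of the chain
    return stationary + (p0 - stationary) * (1 - a - b) ** n


def accuracy_by_iteration(p0: float, a: float, b: float, n: int) -> float:
    """Same quantity computed step by step, as a cross-check."""
    p = p0
    for _ in range(n):
        # stay correct with prob (1 - b), or recover from incorrect with prob a
        p = p * (1 - b) + (1 - p) * a
    return p


if __name__ == "__main__":
    for rounds in (0, 1, 5, 50):
        print(rounds, round(accuracy_after(0.5, 0.3, 0.1, rounds), 4))
```

In this generic chain, further rounds raise accuracy only when the starting accuracy lies below the stationary value `a / (a + b)`, and lower it when the starting accuracy lies above; the paper's own conditions and bounds may be stated differently.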

To Retrieve or To Think? An Agentic Approach for Context Evolution

Jan 13, 2026

Abstract:Current context augmentation methods, such as retrieval-augmented generation, are essential for solving knowledge-intensive reasoning tasks. However, they typically adhere to a rigid, brute-force strategy that executes retrieval at every step. This indiscriminate approach not only incurs unnecessary computational costs but also degrades performance by saturating the context with irrelevant noise. To address these limitations, we introduce Agentic Context Evolution (ACE), a framework inspired by human metacognition that dynamically determines whether to seek new evidence or reason with existing knowledge. ACE employs a central orchestrator agent to make decisions strategically via majority voting. It aims to alternate between activating a retriever agent for external retrieval and a reasoner agent for internal analysis and refinement. By eliminating redundant retrieval steps, ACE maintains a concise and evolved context. Extensive experiments on challenging multi-hop QA benchmarks demonstrate that ACE significantly outperforms competitive baselines in accuracy while achieving efficient token consumption. Our work provides valuable insights into advancing context-evolved generation for complex, knowledge-intensive tasks.
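The retrieve-or-think decision described above can be pictured with a toy control loop. This is only a sketch under stated assumptions, not ACE's implementation: the function names, the `"retrieve"`/`"think"` labels, the voter and agent callables, and the fixed step budget are all hypothetical, and the context is simply appended to rather than refined.

```python
from collections import Counter
from typing import Callable, List

Voter = Callable[[str, List[str]], str]   # returns "retrieve" or "think"
Agent = Callable[[str, List[str]], str]   # returns a new piece of context


def majority_vote(votes: List[str]) -> str:
    """Return the most frequent vote; ties go to the vote seen first."""
    return Counter(votes).most_common(1)[0][0]


def evolve_context(question: str, voters: List[Voter],
                   retrieve: Agent, reason: Agent,
                   max_steps: int = 4) -> List[str]:
    """At each step, vote on whether to fetch evidence or reason over the context."""
    context: List[str] = []
    for _ in range(max_steps):
        action = majority_vote([vote(question, context) for vote in voters])
        if action == "retrieve":
            context.append(retrieve(question, context))  # external evidence
        else:
            context.append(reason(question, context))    # internal analysis
    return context


if __name__ == "__main__":
    # Hypothetical voters: two want evidence only when the context is empty,
    # one always prefers to think.
    needs_evidence = lambda q, ctx: "retrieve" if not ctx else "think"
    always_think = lambda q, ctx: "think"
    steps = evolve_context(
        "Who founded the company that built X?",
        [needs_evidence, needs_evidence, always_think],
        retrieve=lambda q, ctx: "doc",
        reason=lambda q, ctx: "note",
        max_steps=3,
    )
    print(steps)
```

With these stand-in voters, retrieval happens once and the remaining steps reason over what is already in the context, which is the kind of skipped retrieval the abstract refers to.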

Multi-agent Undercover Gaming: Hallucination Removal via Counterfactual Test for Multimodal Reasoning

Nov 14, 2025

Abstract:Hallucination continues to pose a major obstacle in the reasoning capabilities of large language models (LLMs). Although the Multi-Agent Debate (MAD) paradigm offers a promising solution by promoting consensus among multiple agents to enhance reliability, it relies on the unrealistic assumption that all debaters are rational and reflective, a condition that may not hold when agents themselves are prone to hallucinations. To address this gap, we introduce the Multi-agent Undercover Gaming (MUG) protocol, inspired by social deduction games like "Who is Undercover?". MUG reframes MAD as a process of detecting "undercover" agents (those suffering from hallucinations) by employing multimodal counterfactual tests. Specifically, we modify reference images to introduce counterfactual evidence and observe whether agents can accurately identify these changes, providing ground-truth for identifying hallucinating agents and enabling robust, crowd-powered multimodal reasoning. MUG advances MAD protocols along three key dimensions: (1) enabling factual verification beyond statistical consensus through counterfactual testing; (2) introducing cross-evidence reasoning via dynamically modified evidence sources instead of relying on static inputs; and (3) fostering active reasoning, where agents engage in probing discussions rather than passively answering questions. Collectively, these innovations offer a more reliable and effective framework for multimodal reasoning in LLMs. The source code can be accessed at https://github.com/YongLD/MUG.git.

Rethinking Domain-Specific LLM Benchmark Construction: A Comprehensiveness-Compactness Approach

Aug 13, 2025

Abstract:Numerous benchmarks have been built to evaluate the domain-specific abilities of large language models (LLMs), highlighting the need for effective and efficient benchmark construction. Existing domain-specific benchmarks primarily focus on the scaling law, relying on massive corpora for supervised fine-tuning or generating extensive question sets for broad coverage. However, the impact of corpus and question-answer (QA) set design on the precision and recall of domain-specific LLMs remains unexplored. In this paper, we address this gap and demonstrate that the scaling law is not always the optimal principle for benchmark construction in specific domains. Instead, we propose Comp-Comp, an iterative benchmarking framework based on a comprehensiveness-compactness principle. Here, comprehensiveness ensures semantic recall of the domain, while compactness enhances precision, guiding both corpus and QA set construction. To validate our framework, we conducted a case study at a renowned university, resulting in the creation of XUBench, a large-scale and comprehensive closed-domain benchmark. Although we use the academic domain as the case in this work, our Comp-Comp framework is designed to be extensible beyond academia, providing valuable insights for benchmark construction across various domains.

Seeing Beyond the Scene: Enhancing Vision-Language Models with Interactional Reasoning

May 14, 2025

Abstract:Traditional scene graphs primarily focus on spatial relationships, limiting vision-language models' (VLMs) ability to reason about complex interactions in visual scenes. This paper addresses two key challenges: (1) conventional detection-to-construction methods produce unfocused, contextually irrelevant relationship sets, and (2) existing approaches fail to form persistent memories for generalizing interaction reasoning to new scenes. We propose Interaction-augmented Scene Graph Reasoning (ISGR), a framework that enhances VLMs' interactional reasoning through three complementary components. First, our dual-stream graph constructor combines SAM-powered spatial relation extraction with interaction-aware captioning to generate functionally salient scene graphs with spatial grounding. Second, we employ targeted interaction queries to activate VLMs' latent knowledge of object functionalities, converting passive recognition into active reasoning about how objects work together. Finally, we introduce a long-term memory reinforcement learning strategy with a specialized interaction-focused reward function that transforms transient patterns into long-term reasoning heuristics. Extensive experiments demonstrate that our approach significantly outperforms baseline methods on interaction-heavy reasoning benchmarks, with particularly strong improvements on complex scene understanding tasks. The source code can be accessed at https://github.com/open_upon_acceptance.

FedConv: A Learning-on-Model Paradigm for Heterogeneous Federated Clients

Feb 28, 2025

Abstract:Federated Learning (FL) facilitates collaborative training of a shared global model without exposing clients' private data. In practical FL systems, clients (e.g., edge servers, smartphones, and wearables) typically have disparate system resources. Conventional FL, however, adopts a one-size-fits-all solution, where a homogeneous large global model is transmitted to and trained on each client, resulting in an overwhelming workload for less capable clients and starvation for other clients. To address this issue, we propose FedConv, a client-friendly FL framework, which minimizes the computation and memory burden on resource-constrained clients by providing heterogeneous customized sub-models. FedConv features a novel learning-on-model paradigm that learns the parameters of the heterogeneous sub-models via convolutional compression. Unlike traditional compression methods, the compressed models in FedConv can be directly trained on clients without decompression. To aggregate the heterogeneous sub-models, we propose transposed convolutional dilation to convert them back to large models with a unified size while retaining personalized information from clients. The compression and dilation processes, transparent to clients, are optimized on the server leveraging a small public dataset. Extensive experiments on six datasets demonstrate that FedConv outperforms state-of-the-art FL systems in terms of model accuracy (by more than 35% on average), computation and communication overhead (with 33% and 25% reduction, respectively).

Cardiac Evidence Backtracking for Eating Behavior Monitoring using Collocative Electrocardiogram Imagining

Feb 20, 2025

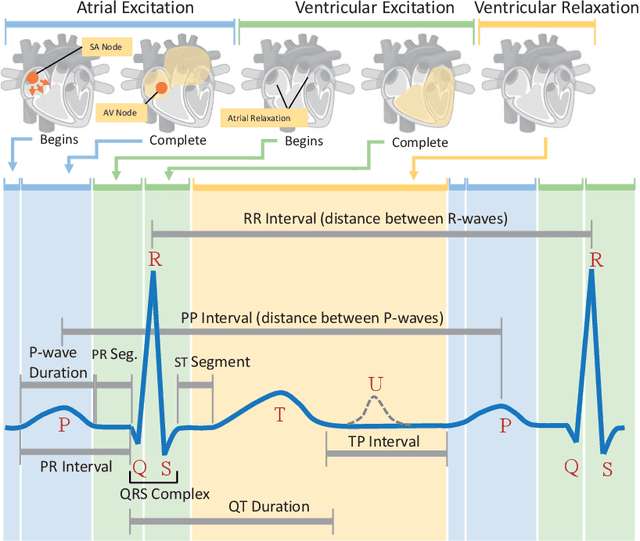

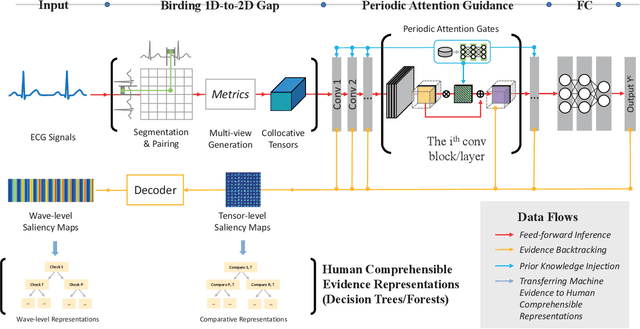

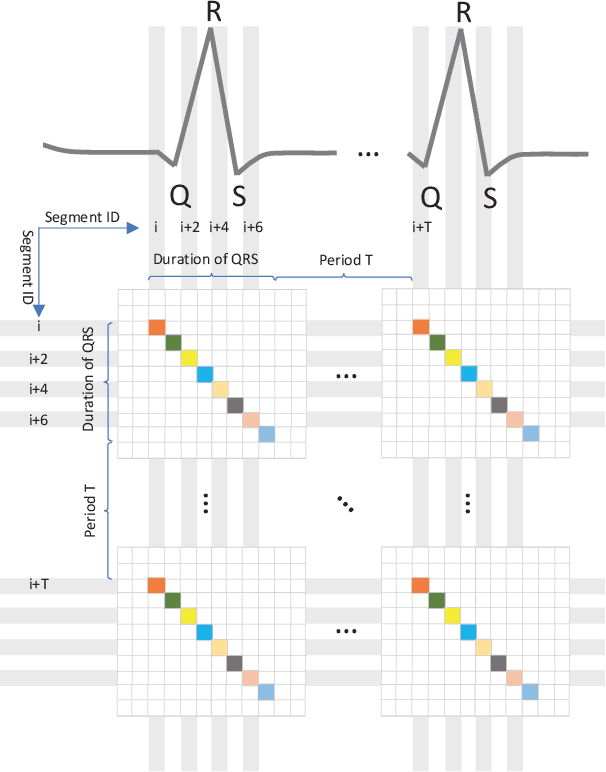

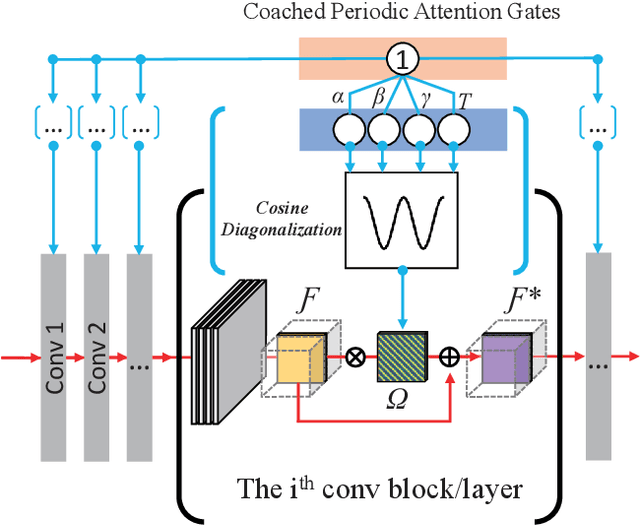

Abstract:Eating monitoring has remained an open challenge in medical research for years due to the lack of non-invasive sensors for continuous monitoring and of reliable methods for automatic behavior detection. In this paper, we present a pilot study that uses wearable 24-hour ECG for sensing and tailors sophisticated deep learning for ad-hoc and interpretable detection. This is accomplished using a collocative learning framework in which 1) we construct collocative tensors as pseudo-images from 1D ECG signals to improve the feasibility of 2D image-based deep models; 2) we formulate the cardiac logic of analyzing the ECG data in a comparative way as periodic attention regulators so as to guide the deep inference to collect evidence in a human-comprehensible manner; and 3) we improve the interpretability of the framework by enabling the backtracking of evidence with a set of methods designed for Class Activation Mapping (CAM) decoding and decision tree/forest generation. The effectiveness of the proposed framework has been validated on the largest ECG dataset of eating behavior with superior performance over conventional models, and its capacity for cardiac evidence mining has also been verified through the consistency between the evidence it backtracked and that of previous medical studies.

Mean of Means: Human Localization with Calibration-free and Unconstrained Camera Settings (extended version)

Feb 18, 2025

Abstract:Accurate human localization is crucial for various applications, especially in the Metaverse era. Existing high precision solutions rely on expensive, tag-dependent hardware, while vision-based methods offer a cheaper, tag-free alternative. However, current vision solutions based on stereo vision face limitations due to rigid perspective transformation principles and error propagation in multi-stage SVD solvers. These solutions also require multiple high-resolution cameras with strict setup constraints. To address these limitations, we propose a probabilistic approach that considers all points on the human body as observations generated by a distribution centered around the body's geometric center. This enables us to improve sampling significantly, increasing the number of samples for each point of interest from hundreds to billions. By modeling the relation between the means of the distributions of world coordinates and pixel coordinates, leveraging the Central Limit Theorem, we ensure normality and facilitate the learning process. Experimental results demonstrate human localization accuracy of 96% within a 0.3 m range and nearly 100% accuracy within a 0.5 m range, achieved at a low cost of only 10 USD using two web cameras with a resolution of 640×480 pixels.

PolySmart @ TRECVid 2024 Video-To-Text

Dec 23, 2024

Abstract:In this paper, we present our methods and results for the Video-To-Text (VTT) task at TRECVid 2024, exploring the capabilities of Vision-Language Models (VLMs) like LLaVA and LLaVA-NeXT-Video in generating natural language descriptions for video content. We investigate the impact of fine-tuning VLMs on VTT datasets to enhance description accuracy, contextual relevance, and linguistic consistency. Our analysis reveals that fine-tuning substantially improves the model's ability to produce more detailed and domain-aligned text, bridging the gap between generic VLM tasks and the specialized needs of VTT. Experimental results demonstrate that our fine-tuned model outperforms baseline VLMs across various evaluation metrics, underscoring the importance of domain-specific tuning for complex VTT tasks.

PolySmart and VIREO @ TRECVid 2024 Ad-hoc Video Search

Dec 20, 2024

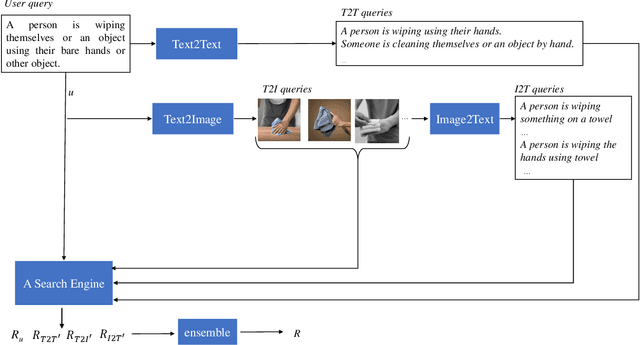

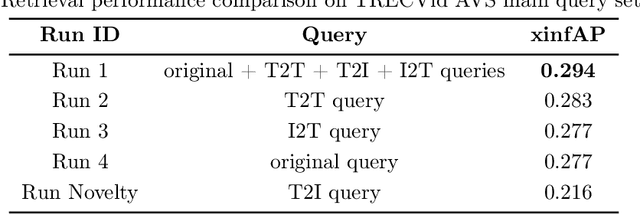

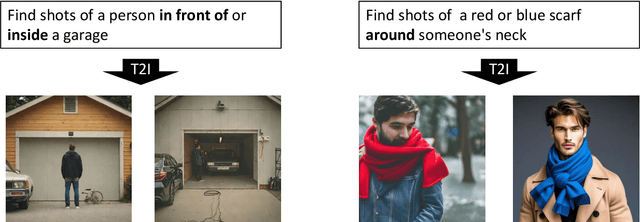

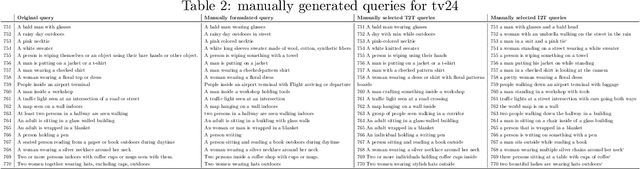

Abstract:This year, we explore generation-augmented retrieval for the TRECVid AVS task. Specifically, the understanding of the textual query is enhanced by three types of generation, namely Text2Text, Text2Image, and Image2Text, to address the out-of-vocabulary problem. Using different combinations of them and the rank list retrieved by the original query, we submitted four automatic runs. For manual runs, we use a large language model (LLM) (i.e., GPT4) to rephrase test queries based on the concept bank of the search engine, and we manually check again to ensure all the concepts used in the rephrased queries are in the bank. The results show that the fusion of the original and generated queries outperforms the original query on TV24 query sets. The generated queries retrieve different rank lists from the original query.
