Ramesh Jain

Personal Care Utility (PCU): Building the Health Infrastructure for Everyday Insight and Guidance

Oct 26, 2025

Abstract:Building on decades of success in digital infrastructure and biomedical innovation, we propose the Personal Care Utility (PCU) - a cybernetic system for lifelong health guidance. PCU is conceived as a global, AI-powered utility that continuously orchestrates multimodal data, knowledge, and services to assist individuals and populations alike. Drawing on multimodal agents, event-centric modeling, and contextual inference, it offers three essential capabilities: (1) trusted health information tailored to the individual, (2) proactive health navigation and behavior guidance, and (3) ongoing interpretation of recovery and treatment response after medical events. Unlike conventional episodic care, PCU functions as an ambient, adaptive companion - observing, interpreting, and guiding health in real time across daily life. By integrating personal sensing, experiential computing, and population-level analytics, PCU promises not only improved outcomes for individuals but also a new substrate for public health and scientific discovery. We describe the architecture, design principles, and implementation challenges of this emerging paradigm.

World Food Atlas Project

Apr 25, 2025

Abstract:A coronavirus pandemic is forcing people all over the world to stay "at home". In a life of hardly ever going out, many of us have realized how the food we eat affects our bodies. What can we do to know our food better and control it better? To give us a clue, we are trying to build a World Food Atlas (WFA) that collects all the knowledge about food in the world. In this paper, we present two of our trials. The first is the Food Knowledge Graph (FKG), which is a graphical representation of knowledge about food and ingredient relationships derived from recipes and food nutrition data. The second is FoodLog Athl and RecipeLog, which are applications for collecting people's detailed records of their food habits. We also discuss several problems that we are trying to solve in order to build the WFA by integrating these two ideas.

Cardiac Evidence Backtracking for Eating Behavior Monitoring using Collocative Electrocardiogram Imagining

Feb 20, 2025

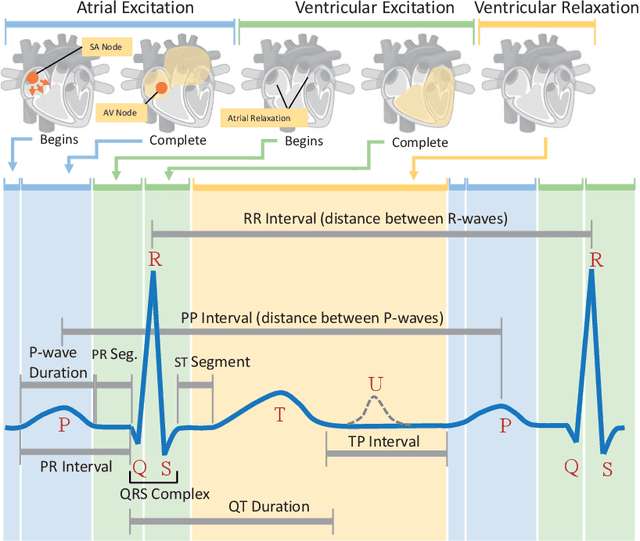

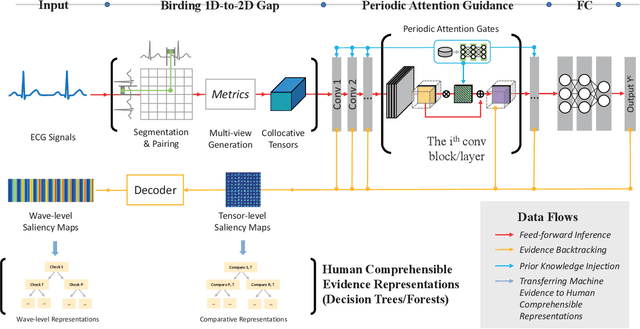

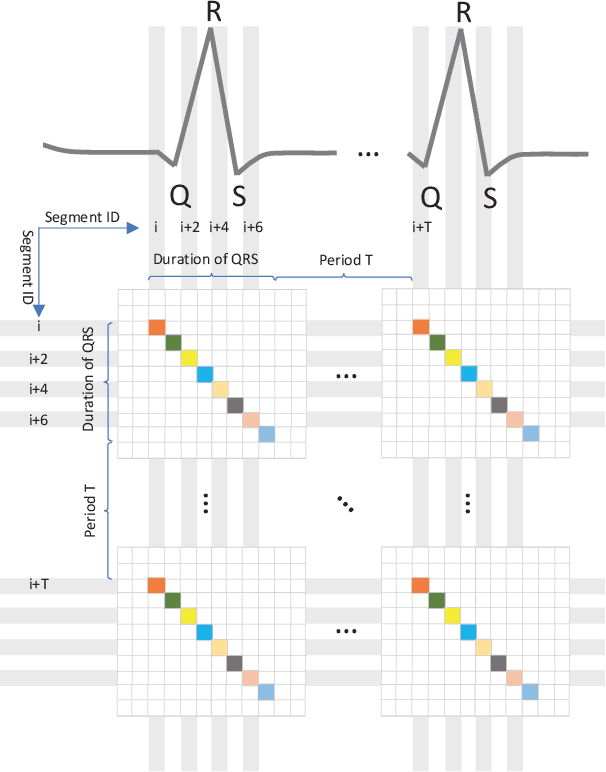

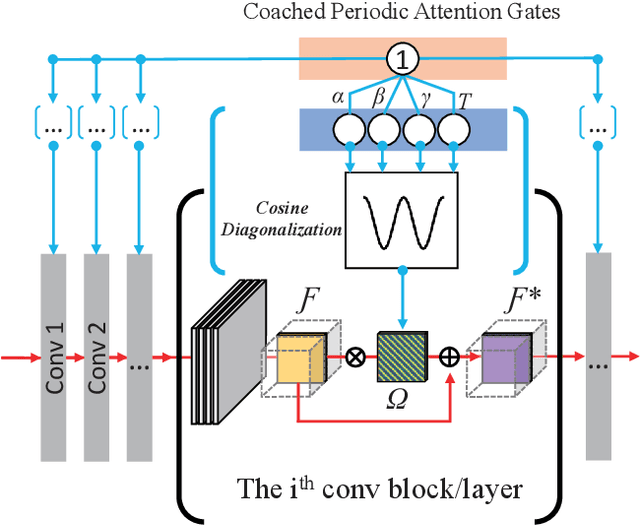

Abstract:Eating monitoring has remained an open challenge in medical research for years due to the lack of non-invasive sensors for continuous monitoring and of reliable methods for automatic behavior detection. In this paper, we present a pilot study that uses a wearable 24-hour ECG for sensing and tailors sophisticated deep learning for ad-hoc and interpretable detection. This is accomplished using a collocative learning framework in which 1) we construct collocative tensors as pseudo-images from 1D ECG signals to improve the feasibility of 2D image-based deep models; 2) we formulate the cardiac logic of analyzing the ECG data in a comparative way as periodic attention regulators so as to guide the deep inference to collect evidence in a human-comprehensible manner; and 3) we improve the interpretability of the framework by enabling the backtracking of evidence with a set of methods designed for Class Activation Mapping (CAM) decoding and decision tree/forest generation. The effectiveness of the proposed framework has been validated on the largest ECG dataset of eating behavior with superior performance over conventional models, and its capacity for cardiac evidence mining has also been verified through the consistency between the evidence it backtracked and that of previous medical studies.

Enhancing FKG.in: automating Indian food composition analysis

Dec 09, 2024

Abstract:This paper presents a novel approach to compute food composition data for Indian recipes using a knowledge graph for Indian food (FKG.in) and LLMs. The primary focus is to provide a broad overview of an automated food composition analysis workflow and describe its core functionalities: nutrition data aggregation, food composition analysis, and LLM-augmented information resolution. This workflow aims to complement FKG.in and iteratively supplement food composition data from verified knowledge bases. Additionally, this paper highlights the challenges of representing Indian food and accessing food composition data digitally. It also reviews three key sources of food composition data: the Indian Food Composition Tables, the Indian Nutrient Databank, and the Nutritionix API. Furthermore, it briefly outlines how users can interact with the workflow to obtain diet-based health recommendations and detailed food composition information for numerous recipes. We then explore the complex challenges of analyzing Indian recipe information across dimensions such as structure, multilingualism, and uncertainty as well as present our ongoing work on LLM-based solutions to address these issues. The methods proposed in this workshop paper for AI-driven knowledge curation and information resolution are application-agnostic, generalizable, and replicable for any domain.

Building FKG.in: a Knowledge Graph for Indian Food

Sep 01, 2024

Abstract:This paper presents an ontology design along with knowledge engineering and multilingual semantic reasoning techniques to build an automated system for assimilating culinary information for Indian food in the form of a knowledge graph. The main focus is on designing intelligent methods to derive ontology designs and capture all-encompassing knowledge about food, recipes, ingredients, cooking characteristics, and most importantly, nutrition, at scale. We present our ongoing work in this workshop paper, describe in some detail the relevant challenges in curating knowledge of Indian food, and propose our high-level ontology design. We also present a novel workflow that uses AI, LLM, and language technology to curate information from recipe blog sites in the public domain to build knowledge graphs for Indian food. The methods for knowledge curation proposed in this paper are generic and can be replicated for any domain. The design is application-agnostic and can be used for AI-driven smart analysis, building recommendation systems for Personalized Digital Health, and complementing the knowledge graph for Indian food with contextual information such as user information, food biochemistry, geographic information, agricultural information, etc.

Empathy Through Multimodality in Conversational Interfaces

May 08, 2024

Abstract:Agents represent one of the most emerging applications of Large Language Models (LLMs) and Generative AI, with their effectiveness hinging on multimodal capabilities to navigate complex user environments. Conversational Health Agents (CHAs), a prime example of this, are redefining healthcare by offering nuanced support that transcends textual analysis to incorporate emotional intelligence. This paper introduces an LLM-based CHA engineered for rich, multimodal dialogue, especially in the realm of mental health support. It adeptly interprets and responds to users' emotional states by analyzing multimodal cues, thus delivering contextually aware and empathetically resonant verbal responses. Our implementation leverages the versatile openCHA framework, and our comprehensive evaluation involves neutral prompts expressed in diverse emotional tones: sadness, anger, and joy. We evaluate the consistency and repeatability of the planning capability of the proposed CHA. Furthermore, human evaluators critique the CHA's empathic delivery, with findings revealing a striking concordance between the CHA's outputs and evaluators' assessments. These results affirm the indispensable role of vocal (soon multimodal) emotion recognition in strengthening the empathetic connection built by CHAs, cementing their place at the forefront of interactive, compassionate digital health solutions.

Knowledge-Infused LLM-Powered Conversational Health Agent: A Case Study for Diabetes Patients

Feb 28, 2024

Abstract:Effective diabetes management is crucial for maintaining health in diabetic patients. Large Language Models (LLMs) have opened new avenues for diabetes management, facilitating their efficacy. However, current LLM-based approaches are limited by their dependence on general sources and lack of integration with domain-specific knowledge, leading to inaccurate responses. In this paper, we propose a knowledge-infused LLM-powered conversational health agent (CHA) for diabetic patients. We customize and leverage the open-source openCHA framework, enhancing our CHA with external knowledge and analytical capabilities. This integration involves two key components: 1) incorporating the American Diabetes Association dietary guidelines and the Nutritionix information and 2) deploying analytical tools that enable nutritional intake calculation and comparison with the guidelines. We compare the proposed CHA with GPT4. Our evaluation includes 100 diabetes-related questions on daily meal choices and on assessing the potential risks associated with the suggested diet. Our findings show that the proposed agent demonstrates superior performance in generating responses to manage essential nutrients.

Food Recommendation as Language Processing (F-RLP): A Personalized and Contextual Paradigm

Feb 14, 2024

Abstract:State-of-the-art rule-based and classification-based food recommendation systems face significant challenges in becoming practical and useful. This difficulty arises primarily because most machine learning models struggle with problems characterized by an almost infinite number of classes and a limited number of samples within an unbalanced dataset. Conversely, the emergence of Large Language Models (LLMs) as recommendation engines offers a promising avenue. However, a general-purpose Recommendation as Language Processing (RLP) approach lacks the critical components necessary for effective food recommendations. To address this gap, we introduce Food Recommendation as Language Processing (F-RLP), a novel framework that offers a food-specific, tailored infrastructure. F-RLP leverages the capabilities of LLMs to maximize their potential, thereby paving the way for more accurate, personalized food recommendations.

Conversational Health Agents: A Personalized LLM-Powered Agent Framework

Oct 03, 2023

Abstract:Conversational Health Agents (CHAs) are interactive systems designed to enhance personal healthcare services by engaging in empathetic conversations and processing multimodal data. While current CHAs, especially those utilizing Large Language Models (LLMs), primarily focus on conversation, they often lack comprehensive agent capabilities. This includes the ability to access personal user health data from wearables, 24/7 data collection sources, and electronic health records, as well as integrating the latest published health insights and connecting with established multimodal data analysis tools. We are developing a framework to empower CHAs by equipping them with critical thinking, knowledge acquisition, and problem-solving abilities. Our CHA platform, powered by LLMs, seamlessly integrates healthcare tools, enables multilingual and multimodal conversations, and interfaces with a variety of user data analysis tools. We illustrate its proficiency in handling complex healthcare tasks, such as stress level estimation, showcasing the agent's cognitive and operational capabilities.

Foundation Metrics: Quantifying Effectiveness of Healthcare Conversations powered by Generative AI

Sep 21, 2023

Abstract:Generative Artificial Intelligence is set to revolutionize healthcare delivery by transforming traditional patient care into a more personalized, efficient, and proactive process. Chatbots, serving as interactive conversational models, will probably drive this patient-centered transformation in healthcare. Through the provision of various services, including diagnosis, personalized lifestyle recommendations, and mental health support, the objective is to substantially augment patient health outcomes, all the while mitigating the workload burden on healthcare providers. The life-critical nature of healthcare applications necessitates establishing a unified and comprehensive set of evaluation metrics for conversational models. Existing evaluation metrics proposed for various generic large language models (LLMs) demonstrate a lack of comprehension regarding medical and health concepts and their significance in promoting patients' well-being. Moreover, these metrics neglect pivotal user-centered aspects, including trust-building, ethics, personalization, empathy, user comprehension, and emotional support. The purpose of this paper is to explore state-of-the-art LLM-based evaluation metrics that are specifically applicable to the assessment of interactive conversational models in healthcare. Subsequently, we present a comprehensive set of evaluation metrics designed to thoroughly assess the performance of healthcare chatbots from an end-user perspective. These metrics encompass an evaluation of language processing abilities, impact on real-world clinical tasks, and effectiveness in user-interactive conversations. Finally, we engage in a discussion concerning the challenges associated with defining and implementing these metrics, with particular emphasis on confounding factors such as the target audience, evaluation methods, and prompt techniques involved in the evaluation process.