Jessica Borelli

Building Trust in Mental Health Chatbots: Safety Metrics and LLM-Based Evaluation Tools

Aug 03, 2024

Abstract: Objective: This study aims to develop and validate an evaluation framework to ensure the safety and reliability of mental health chatbots, which are increasingly popular due to their accessibility, human-like interactions, and context-aware support. Materials and Methods: We created an evaluation framework with 100 benchmark questions and ideal responses, and five guideline questions for chatbot responses. This framework, validated by mental health experts, was tested on a GPT-3.5-turbo-based chatbot. Automated evaluation methods explored included large language model (LLM)-based scoring, an agentic approach using real-time data, and embedding models to compare chatbot responses against ground truth standards. Results: The results highlight the importance of guidelines and ground truth for improving LLM evaluation accuracy. The agentic method, dynamically accessing reliable information, demonstrated the best alignment with human assessments. Adherence to a standardized, expert-validated framework significantly enhanced chatbot response safety and reliability. Discussion: Our findings emphasize the need for comprehensive, expert-tailored safety evaluation metrics for mental health chatbots. While LLMs have significant potential, careful implementation is necessary to mitigate risks. The superior performance of the agentic approach underscores the importance of real-time data access in enhancing chatbot reliability. Conclusion: The study validated an evaluation framework for mental health chatbots, proving its effectiveness in improving safety and reliability. Future work should extend evaluations to accuracy, bias, empathy, and privacy to ensure holistic assessment and responsible integration into healthcare. Standardized evaluations will build trust among users and professionals, facilitating broader adoption and improved mental health support through technology.

Objective Prediction of Tomorrow's Affect Using Multi-Modal Physiological Data and Personal Chronicles: A Study of Monitoring College Student Well-being in 2020

Jan 26, 2022

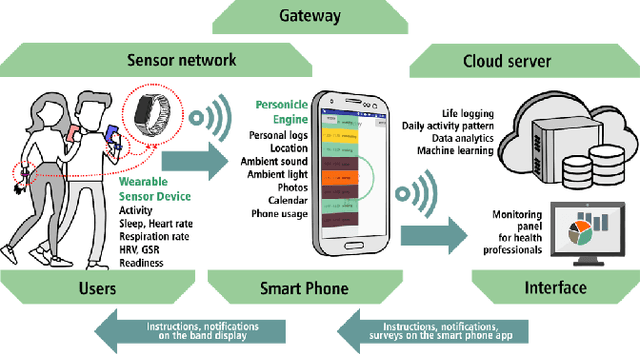

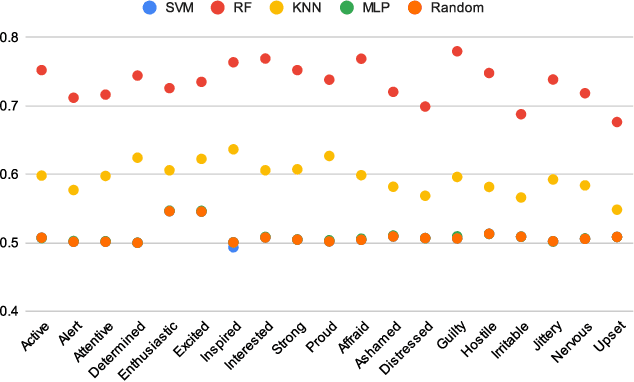

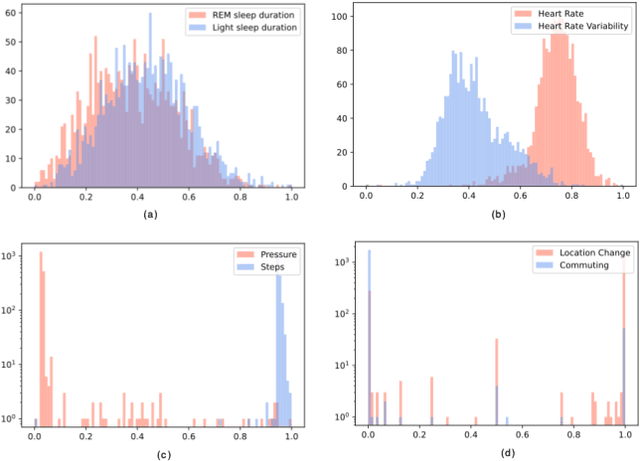

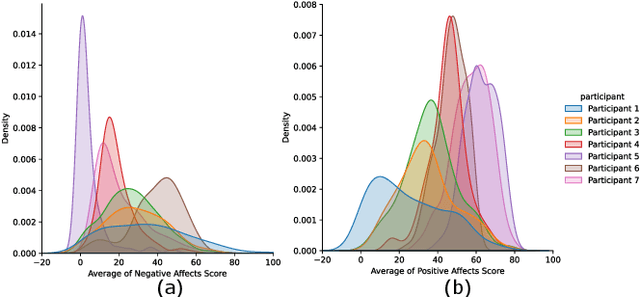

Abstract: Monitoring and understanding affective states are important aspects of healthy functioning and treatment of mood-based disorders. Recent advancements in ubiquitous wearable technologies have increased the reliability of such tools in detecting and accurately estimating mental states (e.g., mood, stress), offering comprehensive and continuous monitoring of individuals over time. Previous attempts to model an individual's mental state were limited to subjective approaches or the inclusion of only a few modalities (e.g., phone, watch). Thus, the goal of our study was to investigate the capacity to more accurately predict affect through a fully automatic and objective approach using multiple commercial devices. Longitudinal physiological data and daily assessments of emotions were collected from a sample of college students using smart wearables and phones for over a year. Results showed that our model was able to predict next-day affect with accuracy comparable to state-of-the-art methods.
