Xia Wang

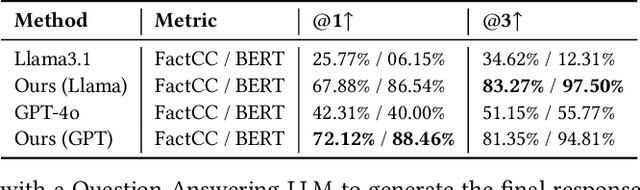

Key Laboratory of Photoelectronic Imaging Technology and System of Ministry of Education of China, School of Optics and Photonics, Beijing Institute of Technology

USIS16K: High-Quality Dataset for Underwater Salient Instance Segmentation

Jun 24, 2025

Abstract: Inspired by the biological visual system that selectively allocates attention to efficiently identify salient objects or regions, underwater salient instance segmentation (USIS) aims to jointly address the problems of where to look (saliency prediction) and what is there (instance segmentation) in underwater scenarios. However, USIS remains an underexplored challenge due to the inaccessibility and dynamic nature of underwater environments, as well as the scarcity of large-scale, high-quality annotated datasets. In this paper, we introduce USIS16K, a large-scale dataset comprising 16,151 high-resolution underwater images collected from diverse environmental settings and covering 158 categories of underwater objects. Each image is annotated with high-quality instance-level salient object masks, representing a significant advance in terms of diversity, complexity, and scalability. Furthermore, we provide benchmark evaluations on underwater object detection and USIS tasks using USIS16K. To facilitate future research in this domain, the dataset and benchmark models are publicly available.

Combining LLMs with Logic-Based Framework to Explain MCTS

May 01, 2025

Abstract: In response to the lack of trust in Artificial Intelligence (AI) for sequential planning, we design a natural language explanation framework for the Monte Carlo Tree Search (MCTS) algorithm that is based on a large language model (LLM) and guided by Computational Tree Logic. MCTS is often considered challenging to interpret due to the complexity of its search trees, but our framework is flexible enough to handle a wide range of free-form post-hoc queries and knowledge-based inquiries centered around MCTS and the Markov Decision Process (MDP) of the application domain. By transforming user queries into logic and variable statements, our framework ensures that the evidence obtained from the search tree remains factually consistent with the underlying environmental dynamics and any constraints in the actual stochastic control process. We evaluate the framework rigorously through quantitative assessments, where it demonstrates strong performance in terms of accuracy and factual consistency.
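As a rough illustration of what evaluating a logic statement against a search tree can look like, the sketch below checks two tree-logic style operators on a tiny hand-built tree. The `Node` fields, the helper names, and the example predicates are all hypothetical and are not taken from the paper; the abstract does not describe the framework's actual query language or tree representation.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Node:
    """Hypothetical search-tree node; the field names are illustrative only."""
    visits: int
    value: float
    children: List["Node"] = field(default_factory=list)


Predicate = Callable[[Node], bool]


def exists_finally(node: Node, p: Predicate) -> bool:
    """EF p on a finite tree: p holds here or somewhere along at least one branch."""
    return p(node) or any(exists_finally(c, p) for c in node.children)


def always_globally(node: Node, p: Predicate) -> bool:
    """AG p on a finite tree: p holds here and at every node below."""
    return p(node) and all(always_globally(c, p) for c in node.children)


# Tiny hand-built tree standing in for a search tree (assumed data).
leaf_a = Node(visits=3, value=0.9)
leaf_b = Node(visits=1, value=-0.2)
inner = Node(visits=4, value=0.6, children=[leaf_a])
root = Node(visits=5, value=0.4, children=[inner, leaf_b])


def high_value(n: Node) -> bool:
    return n.value > 0.8


def visited(n: Node) -> bool:
    return n.visits > 0


def non_negative(n: Node) -> bool:
    return n.value >= 0.0


some_branch_reaches_high_value = exists_finally(root, high_value)
every_node_visited = always_globally(root, visited)
every_node_non_negative = always_globally(root, non_negative)
```

Because the answers are computed from the tree itself, an explanation built on them cannot contradict what the search actually explored, which is the kind of factual consistency the abstract emphasizes.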

SIDME: Self-supervised Image Demoiréing via Masked Encoder-Decoder Reconstruction

Apr 16, 2025

Abstract: Moiré patterns, resulting from aliasing between object light signals and camera sampling frequencies, often degrade image quality during capture. Traditional demoiréing methods have generally treated images as a whole for processing and training, neglecting the unique signal characteristics of different color channels. Moreover, the randomness and variability of moiré pattern generation pose challenges to the robustness of existing methods when applied to real-world data. To address these issues, this paper presents SIDME (Self-supervised Image Demoiréing via Masked Encoder-Decoder Reconstruction), a novel model designed to generate high-quality visual images by effectively processing moiré patterns. SIDME combines a masked encoder-decoder architecture with self-supervised learning, allowing the model to reconstruct images using the inherent properties of camera sampling frequencies. A key innovation is the random masked image reconstructor, which utilizes an encoder-decoder structure to handle the reconstruction task. Furthermore, since the green channel in camera sampling has a higher sampling frequency compared to red and blue channels, a specialized self-supervised loss function is designed to improve the training efficiency and effectiveness. To ensure the generalization ability of the model, a self-supervised moiré image generation method has been developed to produce a dataset that closely mimics real-world conditions. Extensive experiments demonstrate that SIDME outperforms existing methods in processing real moiré pattern data, showing its superior generalization performance and robustness.
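The remark about the green channel's higher sampling frequency can be made concrete with a small count. The sketch below assumes an RGGB Bayer colour filter array, which is a common sensor layout but is not named in the abstract; the function names are illustrative.

```python
from collections import Counter


def bayer_channel(row: int, col: int) -> str:
    """Colour sampled at (row, col) in an RGGB Bayer mosaic (assumed layout)."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def channel_counts(height: int, width: int) -> Counter:
    """Count how many sensor sites sample each colour."""
    return Counter(
        bayer_channel(r, c) for r in range(height) for c in range(width)
    )


counts = channel_counts(4, 4)
green_fraction = counts["G"] / sum(counts.values())
```

Under this assumed layout, half of all sensor sites sample green and a quarter each sample red and blue, which is one way to read the motivation for treating the green channel differently in the loss.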

Cross-Modal Semi-Dense 6-DoF Tracking of an Event Camera in Challenging Conditions

Jan 16, 2024

Abstract: Vision-based localization is a cost-effective and thus attractive solution for many intelligent mobile platforms. However, its accuracy and especially robustness still suffer from low illumination conditions, illumination changes, and aggressive motion. Event-based cameras are bio-inspired visual sensors that perform well in HDR conditions and have high temporal resolution, and thus provide an interesting alternative in such challenging scenarios. While purely event-based solutions currently do not yet produce satisfying mapping results, the present work demonstrates the feasibility of purely event-based tracking if an alternative sensor is permitted for mapping. The method relies on geometric 3D-2D registration of semi-dense maps and events, and achieves highly reliable and accurate cross-modal tracking results. Practically relevant scenarios are given by depth camera-supported tracking or map-based localization with a semi-dense map prior created by a regular image-based visual SLAM or structure-from-motion system. Conventional edge-based 3D-2D alignment is extended by a novel polarity-aware registration that makes use of signed time-surface maps (STSM) obtained from event streams. We furthermore introduce a novel culling strategy for occluded points. Both modifications increase the speed of the tracker and its robustness against occlusions or large view-point variations. The approach is validated on many real datasets covering the above-mentioned challenging conditions, and compared against similar solutions realised with regular cameras.
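A minimal sketch of how a signed time-surface map might be built from an event stream is given below. It uses a common formulation (exponential decay of the time since the latest event at each pixel, multiplied by that event's polarity); the abstract does not give the paper's exact definition, so the decay constant `tau`, the event tuple layout, and the function name are assumptions.

```python
import math
from typing import List, Sequence, Tuple

# Assumed event layout: (timestamp in seconds, x, y, polarity in {+1, -1}).
Event = Tuple[float, int, int, int]


def signed_time_surface(
    events: Sequence[Event],
    width: int,
    height: int,
    t_ref: float,
    tau: float = 0.03,
) -> List[List[float]]:
    """Return a height x width map with values in [-1, 1].

    Each pixel holds polarity * exp(-(t_ref - t_last) / tau), where t_last is
    the time of the most recent event at that pixel no later than t_ref.
    Pixels that never fired stay at 0.
    """
    surface = [[0.0] * width for _ in range(height)]
    last_seen = [[-math.inf] * width for _ in range(height)]
    for t, x, y, polarity in events:
        if t > t_ref or t < last_seen[y][x]:
            continue  # ignore future events and out-of-order older events
        last_seen[y][x] = t
        surface[y][x] = polarity * math.exp(-(t_ref - t) / tau)
    return surface


demo_events = [(0.00, 0, 0, +1), (0.03, 1, 0, -1), (0.06, 1, 1, +1)]
stsm = signed_time_surface(demo_events, width=2, height=2, t_ref=0.06)
```

Recent events produce magnitudes near 1 and older ones fade toward 0, while the sign records whether brightness increased or decreased, which is the extra cue a polarity-aware registration can exploit.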

Robustness Verification for Knowledge-Based Logic of Risky Driving Scenes

Dec 27, 2023

Abstract: Many decision-making scenarios in modern life benefit from the decision support of artificial intelligence algorithms, which focus on a data-driven philosophy and automated programs or systems. However, crucial decision issues related to security, fairness, and privacy should consider more human knowledge and principles to supervise such AI algorithms to reach more proper solutions and to benefit society more effectively. In this work, we extract knowledge-based logic that defines risky driving formats learned from public transportation accident datasets, which, to the best of our knowledge, have not been analyzed in detail. More importantly, this knowledge is critical for recognizing traffic hazards and could supervise and improve AI models in safety-critical systems. We then use automated verification methods to verify the robustness of such logic. More specifically, we gather 72 accident datasets from Data.gov and organize them by state. Further, we train Decision Tree and XGBoost models on each state's dataset, deriving accident judgment logic. Finally, we deploy robustness verification on these tree-based models under multiple parameter combinations.

Passive Non-line-of-sight Imaging for Moving Targets with an Event Camera

Sep 27, 2022

Abstract: Non-line-of-sight (NLOS) imaging is an emerging technique for detecting objects behind obstacles or around corners. Recent studies on passive NLOS imaging mainly focus on steady-state measurement and reconstruction methods, which show limitations in recognizing moving targets. We propose an event-based passive NLOS imaging method that is, to the best of our knowledge, novel. We acquire asynchronous event-based data, which contain detailed dynamic information about the NLOS target and efficiently ease the speckle degradation caused by movement. In addition, we create the first event-based NLOS imaging dataset, NLOS-ES, and extract event-based features using a time-surface representation. We compare reconstructions from event-based data with those from frame-based data. The event-based method performs well on PSNR and LPIPS, scoring 20% and 10% better, respectively, than the frame-based method, while its data volume is only 2% of that of the traditional method.
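For readers unfamiliar with the PSNR metric used in this comparison, the sketch below computes it from the standard definition, 10 · log10(MAX² / MSE). The flat-list image representation, the 8-bit peak value, and the sample pixel values are assumptions chosen for illustration; they are not data from the paper.

```python
import math
from typing import Sequence


def psnr(
    reference: Sequence[float],
    reconstruction: Sequence[float],
    max_value: float = 255.0,
) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Images are given as flat sequences of pixel intensities.
    """
    if len(reference) == 0 or len(reference) != len(reconstruction):
        raise ValueError("images must be non-empty and of equal size")
    mse = sum((a - b) ** 2 for a, b in zip(reference, reconstruction)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Illustrative pixel values only.
ground_truth = [100.0, 100.0]
estimate = [110.0, 90.0]
score_db = psnr(ground_truth, estimate)
```

Higher PSNR means a reconstruction closer to the reference, whereas LPIPS is a learned perceptual distance for which lower is better, so an improvement means opposite directions of change on the two metrics.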

Learning Incrementally to Segment Multiple Organs in a CT Image

Mar 04, 2022

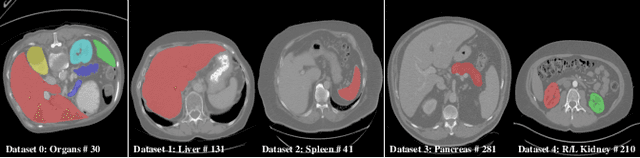

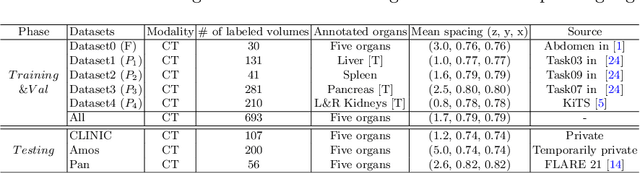

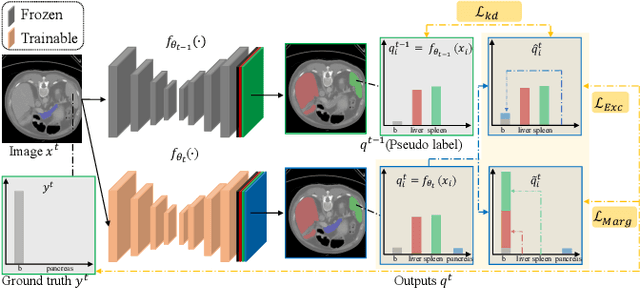

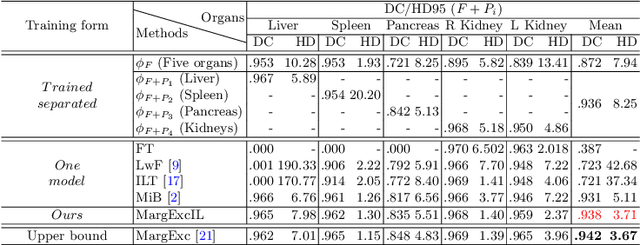

Abstract: There exist a large number of datasets for organ segmentation, which are partially annotated and sequentially constructed. A typical dataset is constructed at a certain time by curating medical images and annotating the organs of interest. In other words, new datasets with annotations of new organ categories are built over time. To unleash the potential behind these partially labeled, sequentially constructed datasets, we propose to incrementally learn a multi-organ segmentation model. In each incremental learning (IL) stage, we lose access to previous data and annotations, whose knowledge is presumably captured by the current model, and gain access to a new dataset with annotations of new organ categories, from which we learn to update the organ segmentation model to include the new organs. While IL is notorious for its 'catastrophic forgetting' weakness in the context of natural image analysis, we experimentally discover that such a weakness mostly disappears for CT multi-organ segmentation. To further stabilize the model performance across the IL stages, we introduce a light memory module and some loss functions to restrain the representation of different categories in feature space, aggregating feature representations of the same class and separating feature representations of different classes. Extensive experiments on five open-sourced datasets are conducted to illustrate the effectiveness of our method.

DEVO: Depth-Event Camera Visual Odometry in Challenging Conditions

Feb 05, 2022

Abstract: We present a novel real-time visual odometry framework for a stereo setup of a depth camera and a high-resolution event camera. Our framework balances accuracy and robustness against computational efficiency towards strong performance in challenging scenarios. We extend conventional edge-based semi-dense visual odometry towards time-surface maps obtained from event streams. Semi-dense depth maps are generated by warping the corresponding depth values of the extrinsically calibrated depth camera. The tracking module updates the camera pose through efficient, geometric semi-dense 3D-2D edge alignment. Our approach is validated on both public and self-collected datasets captured under various conditions. We show that the proposed method performs comparably to state-of-the-art RGB-D camera-based alternatives in regular conditions, and outperforms them in challenging conditions such as high dynamics or low illumination.

Inter-sentence Relation Extraction for Associating Biological Context with Events in Biomedical Texts

Dec 14, 2018

Abstract: We present an analysis of the problem of identifying biological context and associating it with biochemical events in biomedical texts. This constitutes a non-trivial, inter-sentential relation extraction task. We focus on biological context as descriptions of the species, tissue type and cell type that are associated with biochemical events. We describe the properties of an annotated corpus of context-event relations and present and evaluate several classifiers for context-event association trained on syntactic, distance and frequency features.

Mixed-Stationary Gaussian Process for Flexible Non-Stationary Modeling of Spatial Outcomes

Jul 17, 2018

Abstract: Gaussian processes (GPs) are commonplace in spatial statistics. Although many non-stationary models have been developed, there is arguably a lack of flexibility compared to equipping each location with its own parameters. However, the latter suffers from intractable computation and can lead to overfitting. Building on the idea of instantaneous stationarity, we construct a non-stationary GP with the stationarity parameter individually set at each location. We then utilize a non-parametric mixture model to reduce the effective number of unique parameters. Unlike a simple mixture of independent GPs, the mixture in stationarity allows the components to be spatially correlated, leading to improved prediction efficiency. Theoretical properties are examined and a linearly scalable algorithm is provided. The application is shown through several simulated scenarios as well as massive spatiotemporally correlated temperature data.
