Min Feng

SIDME: Self-supervised Image Demoiréing via Masked Encoder-Decoder Reconstruction

Apr 16, 2025

Abstract:Moiré patterns, resulting from aliasing between object light signals and camera sampling frequencies, often degrade image quality during capture. Traditional demoiréing methods have generally treated images as a whole for processing and training, neglecting the unique signal characteristics of different color channels. Moreover, the randomness and variability of moiré pattern generation pose challenges to the robustness of existing methods when applied to real-world data. To address these issues, this paper presents SIDME (Self-supervised Image Demoiréing via Masked Encoder-Decoder Reconstruction), a novel model designed to generate high-quality visual images by effectively processing moiré patterns. SIDME combines a masked encoder-decoder architecture with self-supervised learning, allowing the model to reconstruct images using the inherent properties of camera sampling frequencies. A key innovation is the random masked image reconstructor, which utilizes an encoder-decoder structure to handle the reconstruction task. Furthermore, since the green channel in camera sampling has a higher sampling frequency compared to red and blue channels, a specialized self-supervised loss function is designed to improve the training efficiency and effectiveness. To ensure the generalization ability of the model, a self-supervised moiré image generation method has been developed to produce a dataset that closely mimics real-world conditions. Extensive experiments demonstrate that SIDME outperforms existing methods in processing real moiré pattern data, showing its superior generalization performance and robustness.
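The remark about the green channel being sampled more densely can be illustrated with a small sketch. It assumes a standard RGGB Bayer color filter array, which the abstract does not name; the function names `bayer_channel` and `channel_counts` are hypothetical and are not taken from the paper.

```python
def bayer_channel(row, col):
    """Color sampled at (row, col), assuming an RGGB Bayer mosaic."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def channel_counts(height, width):
    """Count how many sensor sites sample each color."""
    counts = {"R": 0, "G": 0, "B": 0}
    for r in range(height):
        for c in range(width):
            counts[bayer_channel(r, c)] += 1
    return counts


if __name__ == "__main__":
    # On an even-sized sensor, green occupies half of all sites,
    # red and blue one quarter each.
    print(channel_counts(4, 4))
```

Under this assumption green has twice as many samples as red or blue, which is one plausible reading of why a loss might treat the green channel differently.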

F3S: Free Flow Fever Screening

Sep 03, 2021

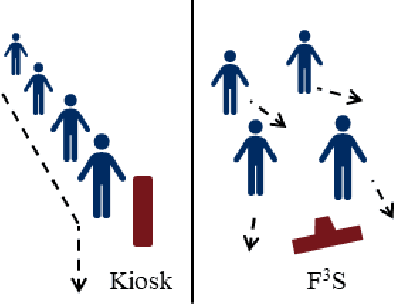

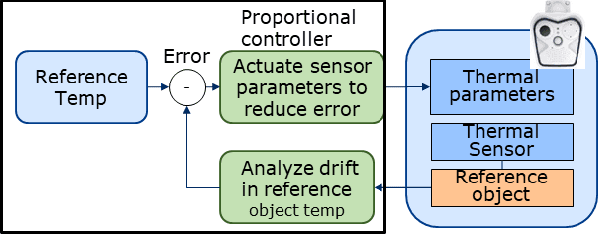

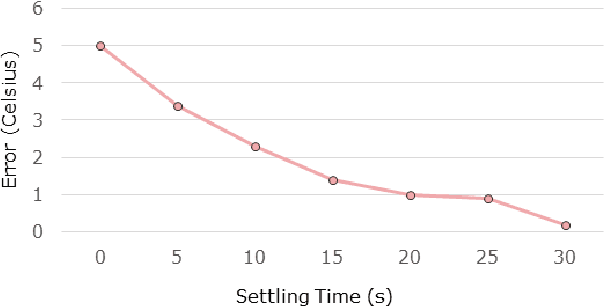

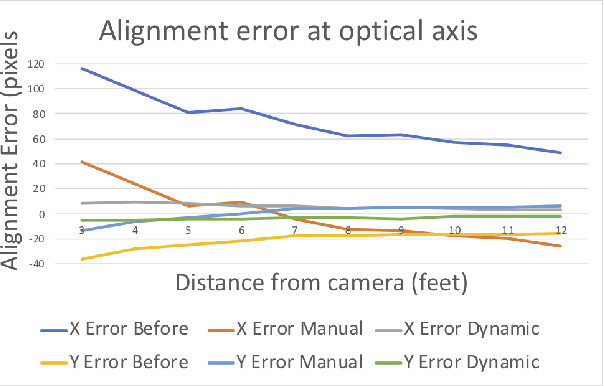

Abstract:Identification of people with elevated body temperature can reduce or dramatically slow down the spread of infectious diseases like COVID-19. We present a novel fever-screening system, F3S, that uses edge machine learning techniques to accurately measure core body temperatures of multiple individuals in a free-flow setting. F3S performs real-time sensor fusion of visual camera and thermal camera data streams to detect elevated body temperature, and it has several unique features: (a) visual and thermal streams represent very different modalities, and we dynamically associate semantically equivalent regions across visual and thermal frames by using a new, dynamic alignment technique that analyzes content and context in real time, (b) we track people through occlusions, identify the eye (inner canthus), forehead, face and head regions where possible, and provide an accurate temperature reading by using a prioritized refinement algorithm, and (c) we robustly detect elevated body temperature even in the presence of personal protective equipment like masks, or of sunglasses or hats, all of which can be affected by hot weather and lead to spurious temperature readings. F3S has been deployed at over a dozen large commercial establishments, providing contactless, free-flow, real-time fever screening for thousands of employees and customers in indoor and outdoor settings.

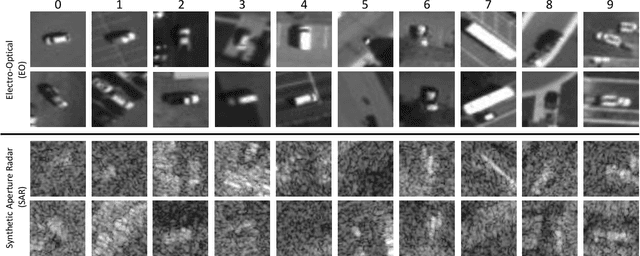

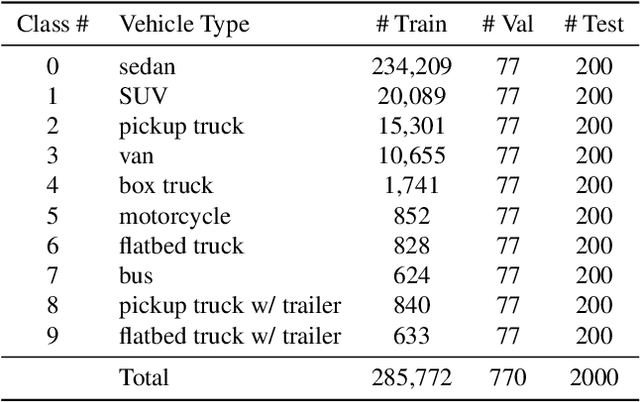

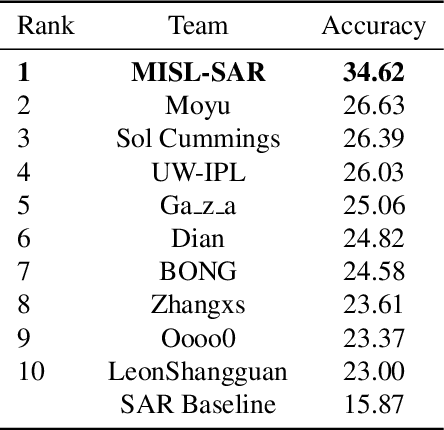

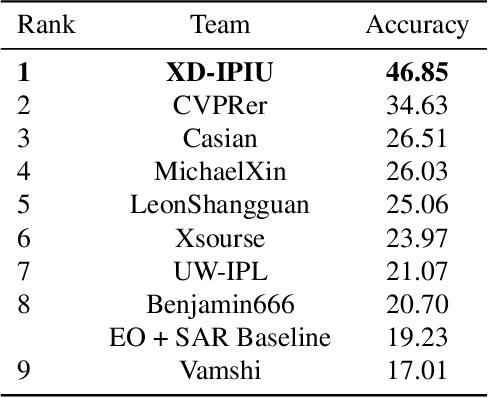

NTIRE 2021 Multi-modal Aerial View Object Classification Challenge

Jul 02, 2021

Abstract:In this paper, we introduce the first Challenge on Multi-modal Aerial View Object Classification (MAVOC) in conjunction with the NTIRE 2021 workshop at CVPR. This challenge is composed of two different tracks using EO and SAR imagery. EO and SAR sensors each have different advantages and drawbacks. The purpose of this competition is to analyze how to use both sets of sensory information in complementary ways. We discuss the top methods submitted for this competition and evaluate their results on our blind test set. Our challenge results show a significant improvement of more than 15% accuracy over our current baselines for each track of the competition.

* 10 pages, 1 figure. Conference on Computer Vision and Pattern Recognition

Hyperspectral and LiDAR data classification based on linear self-attention

Apr 06, 2021

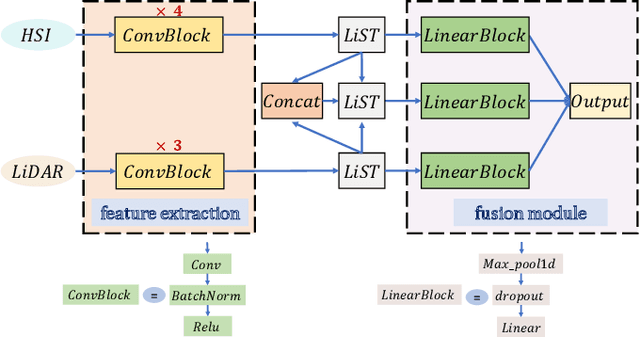

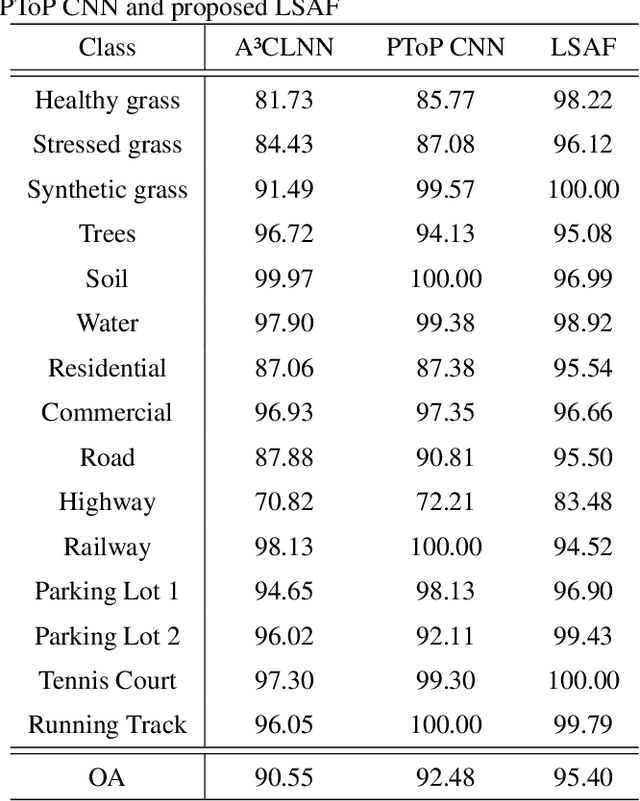

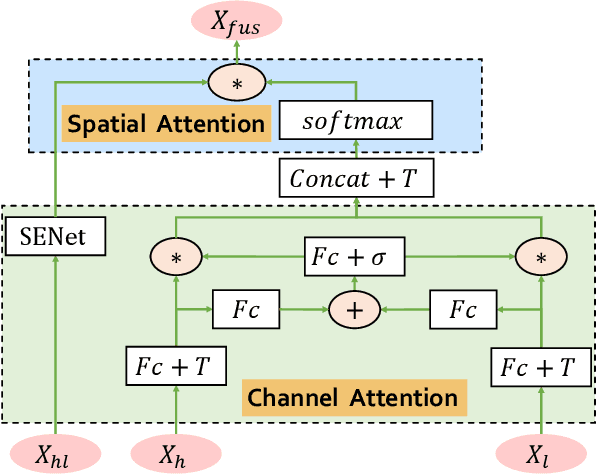

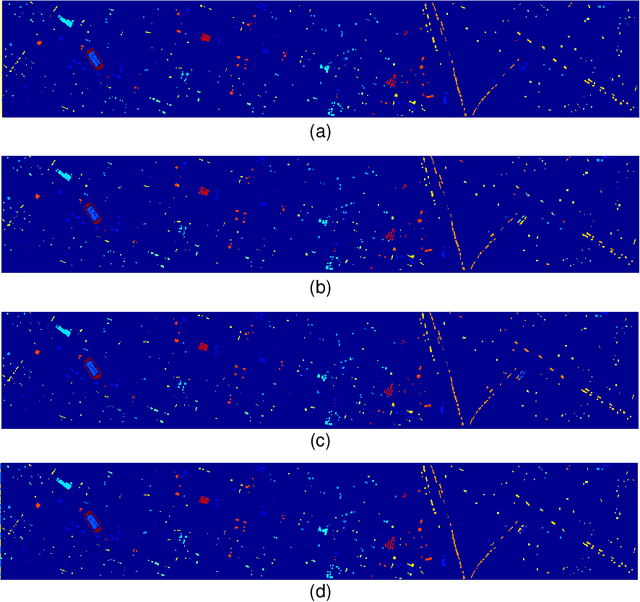

Abstract:An efficient linear self-attention fusion model is proposed in this paper for the task of hyperspectral image (HSI) and LiDAR data joint classification. The proposed method comprises a feature extraction module, an attention module, and a fusion module. The attention module is a plug-and-play linear self-attention module that can be used in any model. The proposed model achieves an overall accuracy of 95.40% on the Houston dataset. The experimental results demonstrate the superiority of the proposed method over other state-of-the-art models.
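The abstract does not specify which linear self-attention formulation the paper uses. As a minimal sketch of the general idea, the code below implements one common kernel-based variant that uses the feature map elu(x) + 1; this choice, and the names `feature_map` and `linear_attention`, are assumptions for illustration and should not be read as the paper's module.

```python
import math


def feature_map(x):
    """elu(x) + 1, which is strictly positive for every real x."""
    return x + 1.0 if x > 0 else math.exp(x)


def linear_attention(Q, K, V):
    """Kernelized attention without forming the n x n score matrix.

    Q and K are n x d nested lists, V is n x m. Returns an n x m list.
    """
    d, m = len(Q[0]), len(V[0])
    phi_q = [[feature_map(x) for x in row] for row in Q]
    phi_k = [[feature_map(x) for x in row] for row in K]

    # Summaries over all positions, computed once: a d x m matrix and a
    # length-d vector. Their size does not depend on sequence length n.
    kv = [[sum(k[i] * v[j] for k, v in zip(phi_k, V)) for j in range(m)]
          for i in range(d)]
    k_sum = [sum(k[i] for k in phi_k) for i in range(d)]

    out = []
    for q in phi_q:
        norm = sum(q[i] * k_sum[i] for i in range(d))
        out.append([sum(q[i] * kv[i][j] for i in range(d)) / norm
                    for j in range(m)])
    return out
```

Because the key-value summary is built once and reused for every query, the cost grows linearly with the number of positions rather than quadratically, which is the property the term "linear" refers to in this variant.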

Optimizing Memory Efficiency for Deep Convolutional Neural Networks on GPUs

Oct 12, 2016

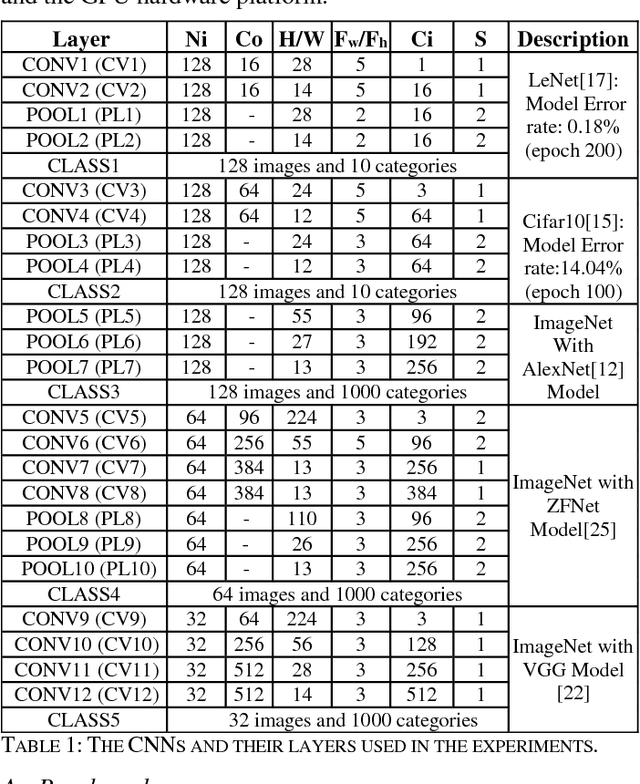

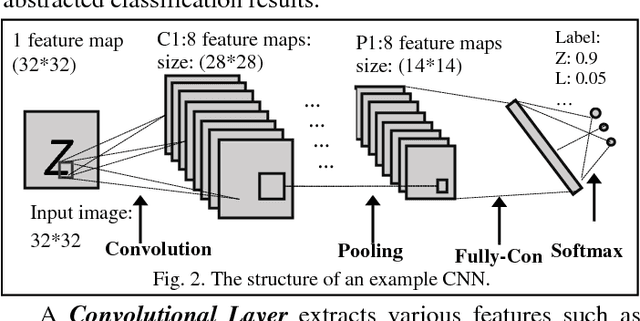

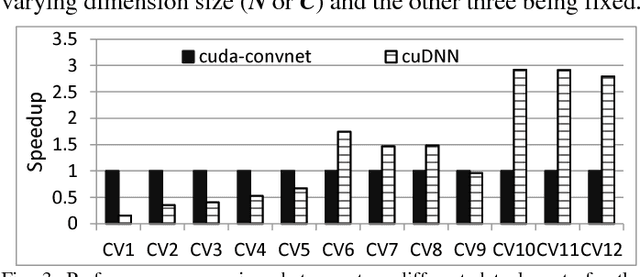

Abstract:Leveraging large data sets, deep Convolutional Neural Networks (CNNs) achieve state-of-the-art recognition accuracy. Due to the substantial compute and memory operations, however, they require significant execution time. The massive parallel computing capability of GPUs makes them one of the ideal platforms to accelerate CNNs, and a number of GPU-based CNN libraries have been developed. While existing works mainly focus on the computational efficiency of CNNs, the memory efficiency of CNNs has been largely overlooked. Yet CNNs have intricate data structures and their memory behavior can have a significant impact on performance. In this work, we study the memory efficiency of various CNN layers and reveal the performance implications of both data layouts and memory access patterns. Experiments show the universal effect of our proposed optimizations on both single layers and various networks, with speedups of up to 27.9x for a single layer and up to 5.6x on whole networks.
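To make the link between data layout and memory access pattern concrete, the sketch below computes flat element offsets for a 4-D activation tensor under two layouts, NCHW and CHWN. The abstract does not say which layouts the paper compares, so these two are assumed here purely as examples, and the helper names are hypothetical.

```python
def offset_nchw(n, c, h, w, shape):
    """Flat offset when width is the fastest-varying dimension."""
    N, C, H, W = shape
    return ((n * C + c) * H + h) * W + w


def offset_chwn(n, c, h, w, shape):
    """Flat offset when the batch index is the fastest-varying dimension."""
    N, C, H, W = shape
    return ((c * H + h) * W + w) * N + n


def stride(offset_fn, axis, shape):
    """Element distance between index 0 and index 1 along one axis.

    Axis numbering follows the argument order: 0=n, 1=c, 2=h, 3=w.
    """
    idx = [0, 0, 0, 0]
    base = offset_fn(*idx, shape)
    idx[axis] = 1
    return offset_fn(*idx, shape) - base


if __name__ == "__main__":
    shape = (64, 3, 32, 32)  # batch, channels, height, width
    for name, fn in (("NCHW", offset_nchw), ("CHWN", offset_chwn)):
        print(name, "stride along w:", stride(fn, 3, shape),
              "stride along n:", stride(fn, 0, shape))
```

Neighboring threads that read consecutive addresses get coalesced accesses on a GPU, so which axis has stride 1 determines which parallelization of a layer reads memory efficiently.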
