Wenpin Jiao

KOCO-BENCH: Can Large Language Models Leverage Domain Knowledge in Software Development?

Jan 19, 2026

Abstract:Large language models (LLMs) excel at general programming but struggle with domain-specific software development, necessitating domain specialization methods for LLMs to learn and utilize domain knowledge and data. However, existing domain-specific code benchmarks cannot evaluate the effectiveness of domain specialization methods: they focus on assessing what knowledge LLMs possess rather than how they acquire and apply new knowledge, and they lack explicit knowledge corpora for developing domain specialization methods. To this end, we present KOCO-BENCH, a novel benchmark designed for evaluating domain specialization methods in real-world software development. KOCO-BENCH contains 6 emerging domains with 11 software frameworks and 25 projects, featuring curated knowledge corpora alongside multi-granularity evaluation tasks including domain code generation (from function-level to project-level with rigorous test suites) and domain knowledge understanding (via multiple-choice Q&A). Unlike previous benchmarks that only provide test sets for direct evaluation, KOCO-BENCH requires acquiring and applying diverse domain knowledge (APIs, rules, constraints, etc.) from knowledge corpora to solve evaluation tasks. Our evaluations reveal that KOCO-BENCH poses significant challenges to state-of-the-art LLMs. Even with domain specialization methods (e.g., SFT, RAG, kNN-LM) applied, improvements remain marginal. The best-performing coding agent, Claude Code, achieves only 34.2%, highlighting the urgent need for more effective domain specialization methods. We release KOCO-BENCH, evaluation code, and baselines to advance further research at https://github.com/jiangxxxue/KOCO-bench.

Mutual Evidential Deep Learning for Medical Image Segmentation

May 18, 2025

Abstract:Existing semi-supervised medical segmentation co-learning frameworks have realized that model performance can be diminished by the biases in model recognition caused by low-quality pseudo-labels. Due to the averaging nature of their pseudo-label integration strategy, they fail to explore the reliability of pseudo-labels from different sources. In this paper, we propose a mutual evidential deep learning (MEDL) framework that offers a potentially viable solution for pseudo-label generation in semi-supervised learning from two perspectives. First, we introduce networks with different architectures to generate complementary evidence for unlabeled samples and adopt an improved class-aware evidential fusion to guide the confident synthesis of evidential predictions sourced from diverse architectural networks. Second, utilizing the uncertainty in the fused evidence, we design an asymptotic Fisher information-based evidential learning strategy. This strategy enables the model to initially focus on unlabeled samples with more reliable pseudo-labels, gradually shifting attention to samples with lower-quality pseudo-labels while avoiding over-penalization of mislabeled classes in high data uncertainty samples. Additionally, for labeled data, we continue to adopt an uncertainty-driven asymptotic learning strategy, gradually guiding the model to focus on challenging voxels. Extensive experiments on five mainstream datasets have demonstrated that MEDL achieves state-of-the-art performance.

Enhancing LLM Generation with Knowledge Hypergraph for Evidence-Based Medicine

Mar 18, 2025

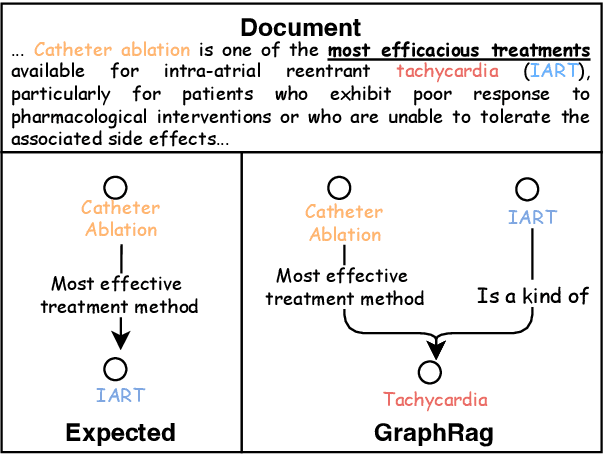

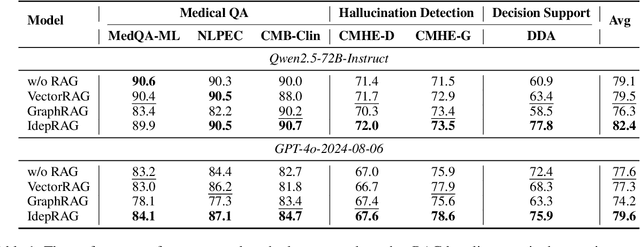

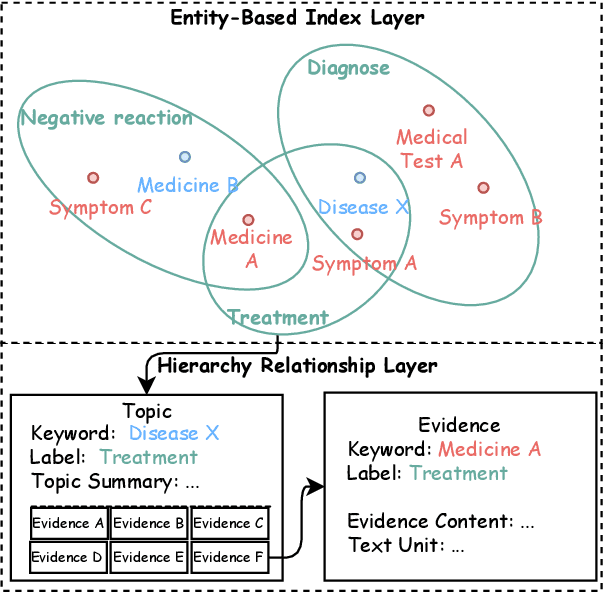

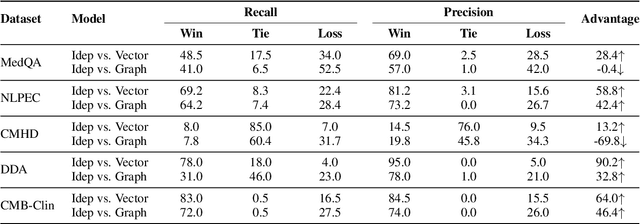

Abstract:Evidence-based medicine (EBM) plays a crucial role in the application of large language models (LLMs) in healthcare, as it provides reliable support for medical decision-making processes. Although it benefits from current retrieval-augmented generation (RAG) technologies, it still faces two significant challenges: the collection of dispersed evidence and the efficient organization of this evidence to support the complex queries necessary for EBM. To tackle these issues, we propose using LLMs to gather scattered evidence from multiple sources and present a knowledge hypergraph-based evidence management model to integrate this evidence while capturing intricate relationships. Furthermore, to better support complex queries, we have developed an Importance-Driven Evidence Prioritization (IDEP) algorithm that utilizes the LLM to generate multiple evidence features, each with an associated importance score, which are then used to rank the evidence and produce the final retrieval results. Experimental results from six datasets demonstrate that our approach outperforms existing RAG techniques in application domains of interest to EBM, such as medical quizzing, hallucination detection, and decision support. Test sets and the constructed knowledge graph can be accessed at https://drive.google.com/file/d/1WJ9QTokK3MdkjEmwuFQxwH96j_Byawj_/view?usp=drive_link.

UniTrans: A Unified Vertical Federated Knowledge Transfer Framework for Enhancing Cross-Hospital Collaboration

Jan 20, 2025

Abstract:Cross-hospital collaboration has the potential to address disparities in medical resources across different regions. However, strict privacy regulations prohibit the direct sharing of sensitive patient information between hospitals. Vertical federated learning (VFL) offers a novel privacy-preserving machine learning paradigm that maximizes data utility across multiple hospitals. Traditional VFL methods, however, primarily benefit patients with overlapping data, leaving vulnerable non-overlapping patients without guaranteed improvements in medical prediction services. While some knowledge transfer techniques can enhance the prediction performance for non-overlapping patients, they fall short in addressing scenarios where overlapping and non-overlapping patients belong to different domains, resulting in challenges such as feature heterogeneity and label heterogeneity. To address these issues, we propose a novel unified vertical federated knowledge transfer framework (UniTrans). Our framework consists of three key steps. First, we extract the federated representation of overlapping patients by employing an effective vertical federated representation learning method to model multi-party joint features online. Next, each hospital learns a local knowledge transfer module offline, enabling the transfer of knowledge from the federated representation of overlapping patients to the enriched representation of local non-overlapping patients in a domain-adaptive manner. Finally, hospitals utilize these enriched local representations to enhance performance across various downstream medical prediction tasks. Experiments on real-world medical datasets validate the framework's dual effectiveness in both intra-domain and cross-domain knowledge transfer. The code of UniTrans is available at https://github.com/Chung-ju/Unitrans.

Residual Feature-Reutilization Inception Network for Image Classification

Dec 27, 2024

Abstract:Capturing feature information effectively is of great importance in the field of computer vision. With the development of convolutional neural networks (CNNs), concepts like residual connection and multiple scales promote continual performance gains in diverse deep learning vision tasks. In this paper, we propose a novel CNN architecture that consists of residual feature-reutilization inceptions (ResFRI) or split-residual feature-reutilization inceptions (Split-ResFRI). Each inception is composed of four convolutional combinations of different structures connected by specially designed information interaction passages, which are utilized to extract multi-scale feature information and effectively increase the receptive field of the model. Moreover, according to the network structure designed above, Split-ResFRI can adjust the segmentation ratio of the input information, thereby reducing the number of parameters and guaranteeing the model performance. Specifically, in experiments based on popular vision datasets, such as CIFAR10 (97.94%), CIFAR100 (85.91%) and Tiny Imagenet (70.54%), we obtain state-of-the-art results compared with other modern models under the premise that the model size is approximate and no additional data is used.

Generalized Uncertainty-Based Evidential Fusion with Hybrid Multi-Head Attention for Weak-Supervised Temporal Action Localization

Dec 27, 2024

Abstract:Weakly supervised temporal action localization (WS-TAL) aims to localize complete action instances and categorize them with video-level labels. Action-background ambiguity, primarily caused by background noise resulting from aggregation and intra-action variation, is a significant challenge for existing WS-TAL methods. In this paper, we introduce a hybrid multi-head attention (HMHA) module and a generalized uncertainty-based evidential fusion (GUEF) module to address the problem. The proposed HMHA effectively enhances RGB and optical flow features by filtering redundant information and adjusting their feature distribution to better align with the WS-TAL task. Additionally, the proposed GUEF adaptively eliminates the interference of background noise by fusing snippet-level evidence to refine uncertainty measurement and select superior foreground feature information, which enables the model to concentrate on integral action instances to achieve better action localization and classification performance. Experimental results conducted on the THUMOS14 dataset demonstrate that our method outperforms state-of-the-art methods. Our code is available at https://github.com/heyuanpengpku/GUEF/tree/main.

Why language models collapse when trained on recursively generated text

Dec 19, 2024

Abstract:Language models (LMs) have been widely used to generate text on the Internet. The generated text is often collected into the training corpus of the next generations of LMs. Previous work has experimentally found that LMs collapse when trained on recursively generated text. This paper contributes to existing knowledge from two aspects. We present a theoretical proof of LM collapse. Our proof reveals the cause of LM collapse and proves that all auto-regressive LMs will definitely collapse. We present a new finding: the performance of LMs gradually declines when trained on recursively generated text until they perform no better than a randomly initialized LM. The trained LMs produce large amounts of repetitive text and perform poorly across a wide range of natural language tasks. The above proof and new findings deepen our understanding of LM collapse and offer valuable insights that may inspire new training techniques to mitigate this threat.

Exploring LLM-based Data Annotation Strategies for Medical Dialogue Preference Alignment

Oct 05, 2024

Abstract:This research examines the use of Reinforcement Learning from AI Feedback (RLAIF) techniques to improve healthcare dialogue models, with the aim of tackling the challenges of preference-aligned data annotation while reducing the reliance on medical experts. We argue that the primary challenges in current RLAIF research for healthcare are the limitations of automated evaluation methods and the difficulties in accurately representing physician preferences. To address these challenges, we present a new evaluation framework based on standardized patient examinations. This framework is designed to objectively assess the effectiveness of large language models (LLMs) in guiding users and following instructions, enabling a comprehensive comparison across different models. Furthermore, our investigation of effective ways to express physician preferences using Constitutional AI algorithms highlighted the particular effectiveness of flowcharts. Utilizing this finding, we introduce an innovative agent-based approach for annotating preference data. This approach autonomously creates medical dialogue flows tailored to the patient's condition, demonstrates strong generalization abilities, and reduces the need for expert involvement. Our results show that the agent-based approach outperforms existing RLAIF annotation methods in standardized patient examinations and surpasses current open source medical dialogue LLMs in various test scenarios.

Integrating Physician Diagnostic Logic into Large Language Models: Preference Learning from Process Feedback

Jan 11, 2024

Abstract:The use of large language models in medical dialogue generation has garnered significant attention, with a focus on improving response quality and fluency. While previous studies have made progress in optimizing model performance for single-round medical Q&A tasks, there is a need to enhance the model's capability for multi-round conversations to avoid logical inconsistencies. To address this, we propose an approach called preference learning from process feedback (PLPF), which integrates the doctor's diagnostic logic into LLMs. PLPF involves rule modeling, preference data generation, and preference alignment to train the model to adhere to the diagnostic process. Experimental results using Standardized Patient Testing show that PLPF enhances the diagnostic accuracy of the baseline model in medical conversations by 17.6%, outperforming traditional reinforcement learning from human feedback. Additionally, PLPF demonstrates effectiveness in both multi-round and single-round dialogue tasks, showcasing its potential for improving medical dialogue generation.

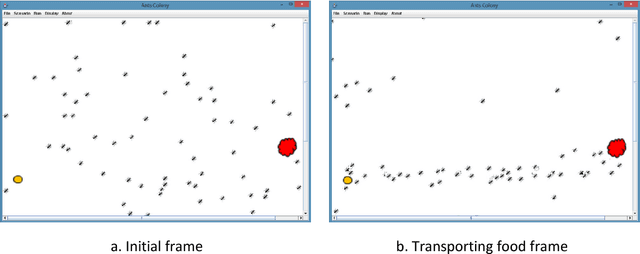

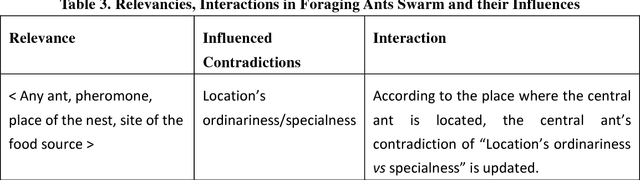

Contradiction-Centricity: A Uniform Model for Formation of Swarm Intelligence and its Simulations

Dec 12, 2017

Abstract:It is a grand challenge to model the emergence of swarm intelligence, and many principles and models have been proposed. However, existing models do not capture the nature of swarm intelligence, and they are not generic enough to describe various types of emergence phenomena. In this work, we propose a contradiction-centric model for the emergence of swarm intelligence, in which individuals' contradictions dominate their appearances whilst they are associated and interacting to update their contradictions. This model hypothesizes that 1) the emergence of swarm intelligence is rooted in the development of contradictions of individuals and the interactions among associated individuals and 2) swarm intelligence is essentially a combinative reflection of the configurations of contradictions inside individuals and the distributions of contradictions among individuals. To verify the feasibility of the model, we simulate four types of swarm intelligence. As the simulations show, our model is truly generic and can describe the emergence of a variety of swarm intelligence, and it is also very simple and can be easily applied to demonstrate the emergence of swarm intelligence without needing complicated computations.
