Yuanpeng He

Learning to Factorize and Adapt: A Versatile Approach Toward Universal Spatio-Temporal Foundation Models

Jan 17, 2026

Abstract:Spatio-Temporal (ST) Foundation Models (STFMs) promise cross-dataset generalization, yet joint ST pretraining is computationally expensive and grapples with the heterogeneity of domain-specific spatial patterns. Substantially extending our preliminary conference version, we present FactoST-v2, an enhanced factorized framework redesigned for full weight transfer and arbitrary-length generalization. FactoST-v2 decouples universal temporal learning from domain-specific spatial adaptation. The first stage pretrains a minimalist encoder-only backbone using randomized sequence masking to capture invariant temporal dynamics, enabling probabilistic quantile prediction across variable horizons. The second stage employs a streamlined adapter to rapidly inject spatial awareness via meta adaptive learning and prompting. Comprehensive evaluations across diverse domains demonstrate that FactoST-v2 achieves state-of-the-art accuracy with linear efficiency, significantly outperforming existing foundation models in zero-shot and few-shot scenarios while rivaling domain-specific expert baselines. This factorized paradigm offers a practical, scalable path toward truly universal STFMs. Code is available at https://github.com/CityMind-Lab/FactoST.
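The abstract mentions probabilistic quantile prediction without stating the training objective. A common objective for quantile forecasts is the pinball loss; the sketch below illustrates that general technique only and is not taken from the FactoST repository. The function name `pinball_loss` and its arguments are hypothetical.

```python
def pinball_loss(y_true, y_pred, q):
    """Average pinball (quantile) loss for a quantile level q in (0, 1).

    Illustrative only: the abstract does not say which loss FactoST-v2 uses.
    """
    if not 0.0 < q < 1.0:
        raise ValueError("q must lie strictly between 0 and 1")
    total = 0.0
    for y, p in zip(y_true, y_pred):
        diff = y - p
        # Under-prediction (diff >= 0) is weighted by q,
        # over-prediction (diff < 0) by 1 - q.
        total += q * diff if diff >= 0 else (q - 1.0) * diff
    return total / len(y_true)
```

With a high quantile level such as `q=0.9`, under-predicting by one unit costs nine times as much as over-predicting by one unit, which pushes the forecast toward the upper tail.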

Rethinking Regularization Methods for Knowledge Graph Completion

May 29, 2025

Abstract:Knowledge graph completion (KGC) has attracted considerable attention in recent years because it is critical to improving the quality of knowledge graphs. Researchers have continuously explored various models. However, most previous efforts have neglected to examine regularization from a deeper perspective, so regularization has not been used to its full potential. This paper rethinks the application of regularization methods in KGC. Through extensive empirical studies on various KGC models, we find that carefully designed regularization not only alleviates overfitting and reduces variance but also enables these models to break through the upper bounds of their original performance. Furthermore, we introduce a novel sparse-regularization method (SPR) that embeds the concept of rank-based selective sparsity into the KGC regularizer. The core idea is to selectively penalize the components of the embedding vector that carry significant features, thus effectively ignoring the many components that contribute little and may only represent noise. Comparative experiments on multiple datasets and multiple models show that SPR outperforms other regularization methods and enables KGC models to further exceed their previous performance limits.
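The idea of rank-based selective sparsity can be illustrated with a small sketch: rank the embedding components by magnitude and penalize only the largest ones. The exact form of the SPR regularizer is not given in the abstract, so the function name `selective_sparse_penalty`, the cutoff `k`, and the exponent `power` below are all assumptions made for illustration.

```python
def selective_sparse_penalty(embedding, k, power=3):
    """Penalize only the k largest-magnitude components of an embedding.

    Hypothetical sketch of rank-based selective sparsity; the published
    SPR regularizer may differ in ranking rule, exponent, and weighting.
    """
    # Rank components by absolute value, largest first.
    magnitudes = sorted((abs(x) for x in embedding), reverse=True)
    # Components ranked below k contribute nothing to the penalty.
    return sum(m ** power for m in magnitudes[:k])
```

For example, `selective_sparse_penalty([0.1, -2.0, 1.0, 0.01], k=2, power=2)` only involves the components `-2.0` and `1.0`; the two small components are ignored.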

Efficient Prototype Consistency Learning in Medical Image Segmentation via Joint Uncertainty and Data Augmentation

May 22, 2025

Abstract:Recently, prototype learning has emerged in semi-supervised medical image segmentation and achieved remarkable performance. However, the scarcity of labeled data limits the expressiveness of prototypes in previous methods, potentially hindering the complete representation of prototypes for class embedding. To overcome this issue, we propose efficient prototype consistency learning via joint uncertainty quantification and data augmentation (EPCL-JUDA), which enhances the semantic expression of prototypes within the Mean-Teacher framework. The concatenation of original and augmented labeled data is fed into the student network to generate expressive prototypes. Then, a joint uncertainty quantification method is devised to optimize pseudo-labels and generate reliable prototypes for original and augmented unlabeled data separately. High-quality global prototypes for each class are formed by fusing labeled and unlabeled prototypes, which are utilized to generate prototype-to-features to conduct consistency learning. Notably, a prototype network is proposed to reduce the high memory requirements brought by the introduction of augmented data. Extensive experiments on the Left Atrium, Pancreas-NIH, and Type B Aortic Dissection datasets demonstrate EPCL-JUDA's superiority over previous state-of-the-art approaches, confirming the effectiveness of our framework. The code will be released soon.
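The Mean-Teacher framework referenced in the abstract keeps a teacher network whose weights are an exponential moving average (EMA) of the student's weights. The sketch below shows that standard update on flat lists of floats; it is not EPCL-JUDA's code, and the name `ema_update` and the default `decay` value are assumptions.

```python
def ema_update(teacher_weights, student_weights, decay=0.99):
    """Return teacher weights after one Mean-Teacher EMA step.

    Weights are plain lists of floats here for illustration; a real
    implementation would iterate over framework tensors instead.
    """
    return [
        decay * t + (1.0 - decay) * s
        for t, s in zip(teacher_weights, student_weights)
    ]
```

A decay close to 1 makes the teacher change slowly, which is what gives its predictions the stability used for consistency targets.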

Mutual Evidential Deep Learning for Medical Image Segmentation

May 18, 2025

Abstract:Existing semi-supervised medical segmentation co-learning frameworks have realized that model performance can be diminished by the biases in model recognition caused by low-quality pseudo-labels. Due to the averaging nature of their pseudo-label integration strategy, they fail to explore the reliability of pseudo-labels from different sources. In this paper, we propose a mutual evidential deep learning (MEDL) framework that offers a potentially viable solution for pseudo-label generation in semi-supervised learning from two perspectives. First, we introduce networks with different architectures to generate complementary evidence for unlabeled samples and adopt an improved class-aware evidential fusion to guide the confident synthesis of evidential predictions sourced from diverse architectural networks. Second, utilizing the uncertainty in the fused evidence, we design an asymptotic Fisher information-based evidential learning strategy. This strategy enables the model to initially focus on unlabeled samples with more reliable pseudo-labels, gradually shifting attention to samples with lower-quality pseudo-labels while avoiding over-penalization of mislabeled classes in high data uncertainty samples. Additionally, for labeled data, we continue to adopt an uncertainty-driven asymptotic learning strategy, gradually guiding the model to focus on challenging voxels. Extensive experiments on five mainstream datasets have demonstrated that MEDL achieves state-of-the-art performance.
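For readers unfamiliar with evidential deep learning, the sketch below shows the standard subjective-logic mapping from non-negative per-class evidence to belief masses and an uncertainty mass via a Dirichlet distribution. It illustrates the general formulation only; MEDL's class-aware fusion and Fisher information-based weighting are not reproduced here, and the function name is hypothetical.

```python
def evidence_to_opinion(evidence):
    """Map non-negative per-class evidence to (beliefs, uncertainty).

    Standard evidential formulation: alpha_k = e_k + 1, S = sum(alpha),
    belief b_k = e_k / S, uncertainty u = K / S, so sum(b) + u = 1.
    """
    if any(e < 0 for e in evidence):
        raise ValueError("evidence must be non-negative")
    num_classes = len(evidence)
    strength = sum(e + 1.0 for e in evidence)  # Dirichlet strength S
    beliefs = [e / strength for e in evidence]
    uncertainty = num_classes / strength
    return beliefs, uncertainty
```

With no evidence at all the uncertainty is 1, and it shrinks toward 0 as evidence accumulates for any class.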

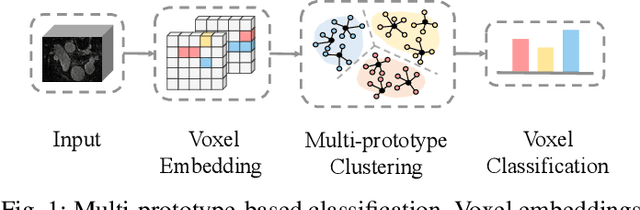

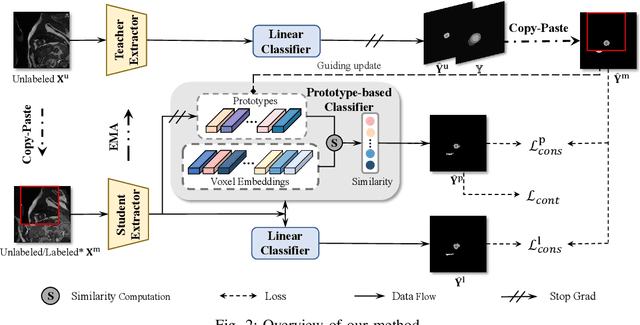

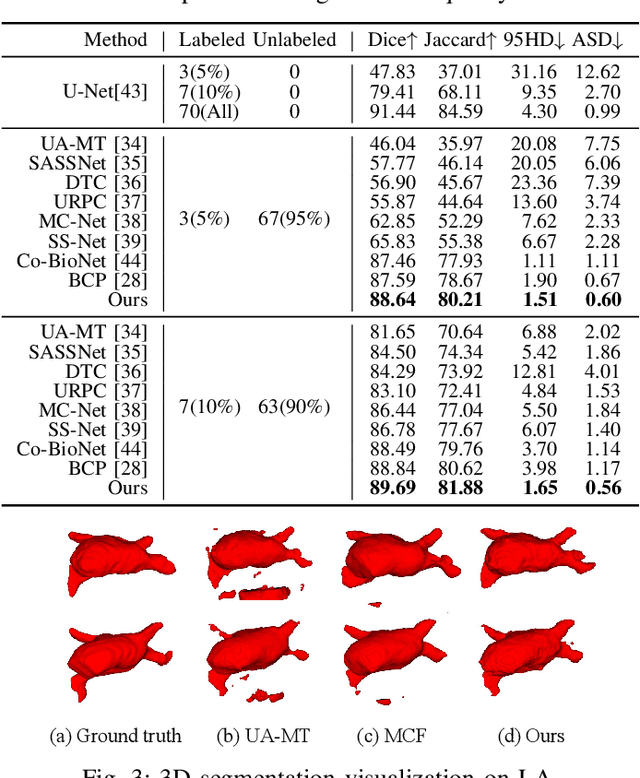

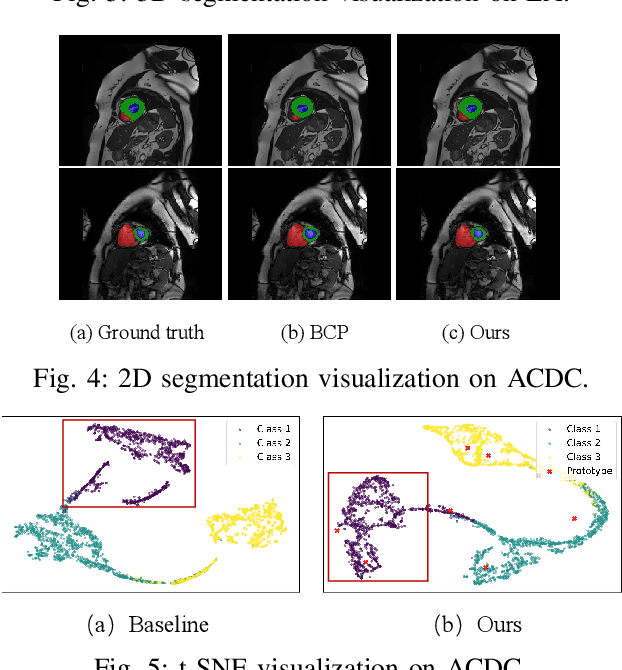

Multi-Prototype Embedding Refinement for Semi-Supervised Medical Image Segmentation

Mar 18, 2025

Abstract:Medical image segmentation aims to identify anatomical structures at the voxel level. Segmentation accuracy relies on distinguishing voxel differences. Compared with the advances achieved in studies of inter-class variance, intra-class variance has received less attention. Moreover, traditional linear classifiers, limited by a single learnable weight per class, struggle to capture this finer distinction. To address these challenges, we propose a Multi-Prototype-based Embedding Refinement method for semi-supervised medical image segmentation. Specifically, we design a multi-prototype-based classification strategy, rethinking segmentation from the perspective of structural relationships between voxel embeddings. The intra-class variations are explored by clustering voxels along the distribution of multiple prototypes in each class. Next, we introduce a consistency constraint to alleviate the limitation of linear classifiers. This constraint integrates different classification granularities from a linear classifier and the proposed prototype-based classifier. In a thorough evaluation on two popular benchmarks, our method achieves superior performance compared with state-of-the-art methods. Code is available at https://github.com/Briley-byl123/MPER.
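A multi-prototype classifier can be pictured as nearest-prototype assignment where each class owns several prototypes rather than one weight vector. The following sketch uses squared Euclidean distance on plain Python lists; the distance measure, the function name `classify_by_prototypes`, and the data layout are assumptions, not details from the MPER code.

```python
def classify_by_prototypes(embedding, prototypes):
    """Return the class whose closest prototype is nearest to the embedding.

    `prototypes` maps a class label to a list of prototype vectors, so a
    class with high intra-class variance can be covered by several modes.
    """
    best_label, best_dist = None, float("inf")
    for label, class_prototypes in prototypes.items():
        for proto in class_prototypes:
            # Squared Euclidean distance (an illustrative choice).
            dist = sum((e - p) ** 2 for e, p in zip(embedding, proto))
            if dist < best_dist:
                best_label, best_dist = label, dist
    return best_label
```

Because the decision only needs one prototype of a class to be close, a voxel embedding that sits far from the class mean can still be assigned correctly.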

UniTrans: A Unified Vertical Federated Knowledge Transfer Framework for Enhancing Cross-Hospital Collaboration

Jan 20, 2025

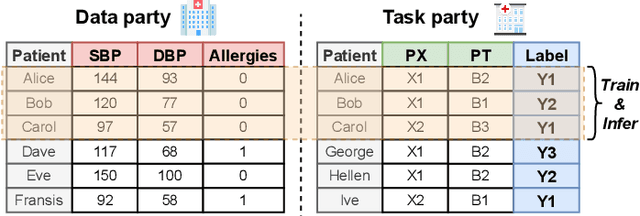

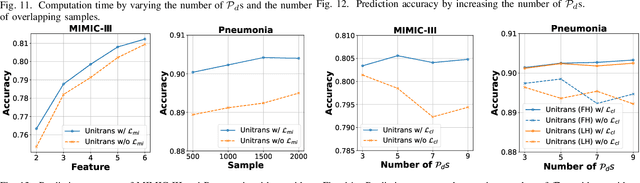

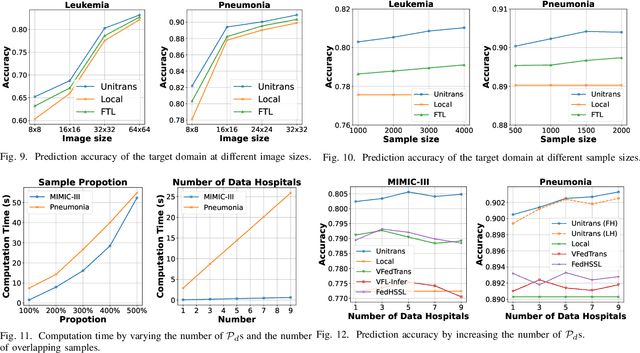

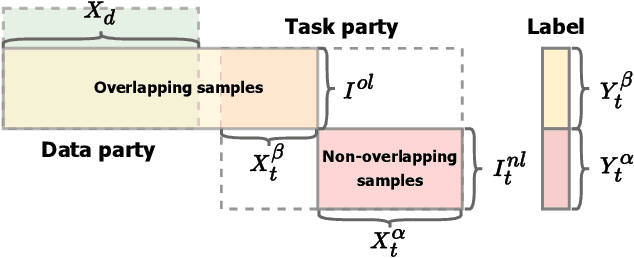

Abstract:Cross-hospital collaboration has the potential to address disparities in medical resources across different regions. However, strict privacy regulations prohibit the direct sharing of sensitive patient information between hospitals. Vertical federated learning (VFL) offers a novel privacy-preserving machine learning paradigm that maximizes data utility across multiple hospitals. Traditional VFL methods, however, primarily benefit patients with overlapping data, leaving vulnerable non-overlapping patients without guaranteed improvements in medical prediction services. While some knowledge transfer techniques can enhance the prediction performance for non-overlapping patients, they fall short in addressing scenarios where overlapping and non-overlapping patients belong to different domains, resulting in challenges such as feature heterogeneity and label heterogeneity. To address these issues, we propose a novel unified vertical federated knowledge transfer framework (UniTrans). Our framework consists of three key steps. First, we extract the federated representation of overlapping patients by employing an effective vertical federated representation learning method to model multi-party joint features online. Next, each hospital learns a local knowledge transfer module offline, enabling the transfer of knowledge from the federated representation of overlapping patients to the enriched representation of local non-overlapping patients in a domain-adaptive manner. Finally, hospitals utilize these enriched local representations to enhance performance across various downstream medical prediction tasks. Experiments on real-world medical datasets validate the framework's dual effectiveness in both intra-domain and cross-domain knowledge transfer. The code of UniTrans is available at https://github.com/Chung-ju/Unitrans.

Generalized Uncertainty-Based Evidential Fusion with Hybrid Multi-Head Attention for Weak-Supervised Temporal Action Localization

Dec 27, 2024

Abstract:Weakly supervised temporal action localization (WS-TAL) aims to localize complete action instances and categorize them using only video-level labels. Action-background ambiguity, primarily caused by background noise resulting from aggregation and intra-action variation, is a significant challenge for existing WS-TAL methods. In this paper, we introduce a hybrid multi-head attention (HMHA) module and a generalized uncertainty-based evidential fusion (GUEF) module to address the problem. The proposed HMHA effectively enhances RGB and optical flow features by filtering redundant information and adjusting their feature distribution to better align with the WS-TAL task. Additionally, the proposed GUEF adaptively eliminates the interference of background noise by fusing snippet-level evidence to refine uncertainty measurement and select superior foreground feature information, which enables the model to concentrate on integral action instances and achieve better action localization and classification performance. Experimental results on the THUMOS14 dataset demonstrate that our method outperforms state-of-the-art methods. Our code is available at https://github.com/heyuanpengpku/GUEF/tree/main.

Residual Feature-Reutilization Inception Network for Image Classification

Dec 27, 2024

Abstract:Capturing feature information effectively is of great importance in the field of computer vision. With the development of convolutional neural networks (CNNs), concepts like residual connections and multiple scales have promoted continual performance gains in diverse deep learning vision tasks. In this paper, we propose a novel CNN architecture that consists of residual feature-reutilization inceptions (ResFRI) or split-residual feature-reutilization inceptions (Split-ResFRI). It is composed of four convolutional combinations of different structures connected by specially designed information interaction passages, which are utilized to extract multi-scale feature information and effectively increase the receptive field of the model. Moreover, based on this network structure, Split-ResFRI can adjust the segmentation ratio of the input information, thereby reducing the number of parameters while maintaining model performance. Specifically, in experiments on popular vision datasets such as CIFAR10 (97.94%), CIFAR100 (85.91%), and Tiny ImageNet (70.54%), we obtain state-of-the-art results compared with other modern models of comparable size, without using additional data.

A novel framework for MCDM based on Z numbers and soft likelihood function

Dec 26, 2024

Abstract:Optimizing the structure of information management processes in uncertain environments has attracted considerable attention from researchers around the world. Nevertheless, how to obtain accurate and rational evaluations from assessments produced by experts remains an open problem. In particular, intuitionistic fuzzy sets provide an effective way to handle indeterminate information, and Yager recently proposed the soft likelihood function, a method for fusing probabilistic evidence to handle uncertain and conflicting information. This paper devises a novel framework of soft likelihood function based on the information volume of fuzzy membership and a credibility measure, in order to extract truly useful and valuable information from uncertainty. An application is provided to verify the validity and correctness of the proposed framework. In addition, comparisons with other existing methods further demonstrate the superiority of the proposed framework.

Towards Realistic Long-tailed Semi-supervised Learning in an Open World

May 23, 2024

Abstract:Open-world long-tailed semi-supervised learning (OLSSL) has increasingly attracted attention. However, existing OLSSL algorithms generally assume that the distributions of known and novel categories are nearly identical. Against this backdrop, we construct a more Realistic Open-world Long-tailed Semi-supervised Learning (ROLSSL) setting in which no assumption is made about the distribution relationships between known and novel categories. Furthermore, even within the known categories, the number of labeled samples is significantly smaller than that of the unlabeled samples, as acquiring valid annotations is often prohibitively costly in the real world. Under the proposed ROLSSL setting, we propose a simple yet potentially effective solution called dual-stage post-hoc logit adjustments. The proposed approach revisits the logit adjustment strategy by considering the relationships among the frequency of samples, the total number of categories, and the overall size of data. Then, it estimates the distribution of unlabeled data for both known and novel categories to dynamically readjust the corresponding predictive probabilities, effectively mitigating category bias during the learning of known and novel classes with more selective utilization of imbalanced unlabeled data. Extensive experiments on datasets such as CIFAR100 and ImageNet100 have demonstrated performance improvements of up to 50.1%, validating the superiority of our proposed method and establishing a strong baseline for this task. For further research, the anonymous link to the experimental code is https://github.com/heyuanpengpku/ROLSSL.
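The logit adjustment strategy that the abstract revisits can be sketched in its basic post-hoc form: subtract a scaled log class prior from each logit so that frequent classes lose their built-in advantage. This is the generic single-stage technique, not the paper's dual-stage variant; the name `adjust_logits` and the temperature `tau` are assumptions.

```python
import math


def adjust_logits(logits, class_priors, tau=1.0):
    """Apply basic post-hoc logit adjustment: z_k - tau * log(prior_k).

    `class_priors` are estimated class frequencies in (0, 1]. How the
    paper estimates them for novel classes is not reproduced here.
    """
    if any(p <= 0.0 for p in class_priors):
        raise ValueError("class priors must be positive")
    return [z - tau * math.log(p) for z, p in zip(logits, class_priors)]
```

For instance, with logits `[2.0, 1.9]` and priors `[0.9, 0.1]`, the raw prediction favors the frequent first class, while the adjusted logits favor the rare second class.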
