Weirui Kuang

KIMAs: A Configurable Knowledge Integrated Multi-Agent System

Feb 13, 2025

Abstract:Knowledge-intensive conversations supported by large language models (LLMs) have become one of the most popular and helpful applications that can assist people in different aspects. Many current knowledge-intensive applications are centered on retrieval-augmented generation (RAG) techniques. While many open-source RAG frameworks facilitate the development of RAG-based applications, they often fall short in handling practical scenarios complicated by heterogeneous data in topics and formats, conversational context management, and the requirement of low-latency response times. This technical report presents a configurable knowledge integrated multi-agent system, KIMAs, to address these challenges. KIMAs features a flexible and configurable system for integrating diverse knowledge sources with 1) context management and query rewrite mechanisms to improve retrieval accuracy and multi-turn conversational coherency, 2) efficient knowledge routing and retrieval, 3) simple but effective filter and reference generation mechanisms, and 4) optimized parallelizable multi-agent pipeline execution. Our work provides a scalable framework for advancing the deployment of LLMs in real-world settings. To show how KIMAs can help developers build knowledge-intensive applications with different scales and emphases, we demonstrate how we configure the system to three applications already running in practice with reliable performance.

AgentScope: A Flexible yet Robust Multi-Agent Platform

Feb 21, 2024

Abstract:With the rapid advancement of Large Language Models (LLMs), significant progress has been made in multi-agent applications. However, the complexities in coordinating agents' cooperation and LLMs' erratic performance pose notable challenges in developing robust and efficient multi-agent applications. To tackle these challenges, we propose AgentScope, a developer-centric multi-agent platform with message exchange as its core communication mechanism. Together with abundant syntactic tools, built-in resources, and user-friendly interactions, our communication mechanism significantly reduces the barriers to both development and understanding. Towards robust and flexible multi-agent application, AgentScope provides both built-in and customizable fault tolerance mechanisms while it is also armed with system-level supports for multi-modal data generation, storage and transmission. Additionally, we design an actor-based distribution framework, enabling easy conversion between local and distributed deployments and automatic parallel optimization without extra effort. With these features, AgentScope empowers developers to build applications that fully realize the potential of intelligent agents. We have released AgentScope at https://github.com/modelscope/agentscope, and hope AgentScope invites wider participation and innovation in this fast-moving field.

FederatedScope-LLM: A Comprehensive Package for Fine-tuning Large Language Models in Federated Learning

Sep 01, 2023

Abstract:LLMs have demonstrated great capabilities in various NLP tasks. Different entities can further improve the performance of those LLMs on their specific downstream tasks by fine-tuning LLMs. When several entities have similar tasks of interest, but their data cannot be shared because of privacy concerns or regulations, federated learning (FL) is a mainstream solution to leverage the data of different entities. However, fine-tuning LLMs in federated learning settings still lacks adequate support from existing FL frameworks because it has to deal with optimizing the consumption of significant communication and computational resources, data preparation for different tasks, and distinct information protection demands. This paper first discusses these challenges of federated fine-tuning LLMs, and introduces our package FS-LLM as a main contribution, which consists of the following components: (1) we build an end-to-end benchmarking pipeline, automating the processes of dataset preprocessing, federated fine-tuning execution, and performance evaluation on federated LLM fine-tuning; (2) we provide comprehensive federated parameter-efficient fine-tuning algorithm implementations and versatile programming interfaces for future extension in FL scenarios with low communication and computation costs, even without accessing the full model; (3) we adopt several accelerating and resource-efficient operators for fine-tuning LLMs with limited resources and the flexible pluggable sub-routines for interdisciplinary study. We conduct extensive experiments to validate the effectiveness of FS-LLM and benchmark advanced LLMs with state-of-the-art parameter-efficient fine-tuning algorithms in FL settings, which also yields valuable insights into federated fine-tuning LLMs for the research community. To facilitate further research and adoption, we release FS-LLM at https://github.com/alibaba/FederatedScope/tree/llm.

A Benchmark for Federated Hetero-Task Learning

Jun 21, 2022

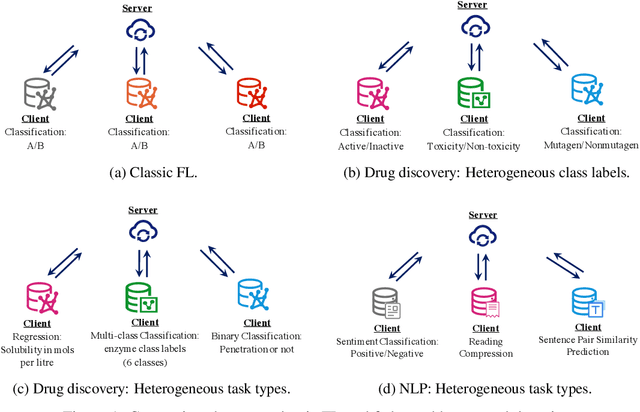

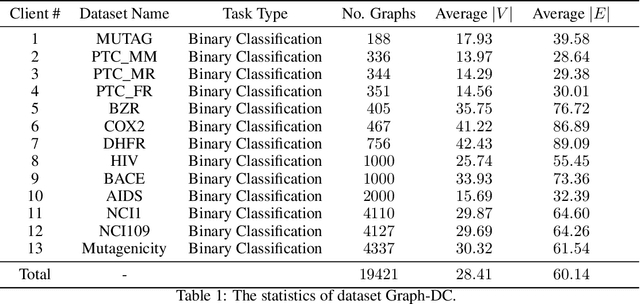

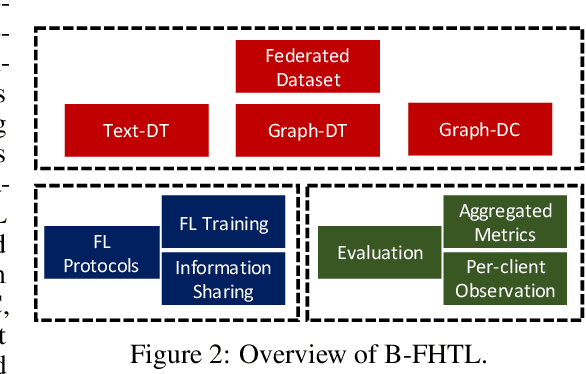

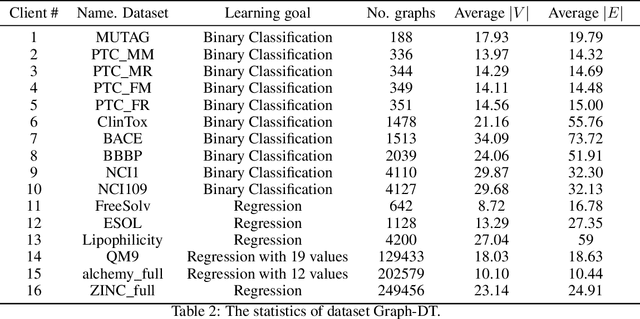

Abstract:To investigate the heterogeneity in federated learning in real-world scenarios, we generalize the classic federated learning to federated hetero-task learning, which emphasizes the inconsistency across the participants in federated learning in terms of both data distribution and learning tasks. We also present B-FHTL, a federated hetero-task learning benchmark consisting of a simulation dataset, FL protocols and a unified evaluation mechanism. The B-FHTL dataset contains three well-designed federated learning tasks with increasing heterogeneity. Each task simulates the clients with different non-IID data and learning tasks. To ensure fair comparison among different FL algorithms, B-FHTL builds in a full suite of FL protocols by providing high-level APIs to avoid privacy leakage, and presets the most common evaluation metrics spanning different learning tasks, such as regression, classification, text generation, etc. Furthermore, we compare the FL algorithms in the fields of federated multi-task learning, federated personalization and federated meta learning within B-FHTL, and highlight the influence of heterogeneity and the difficulties of federated hetero-task learning. Our benchmark, including the federated dataset, protocols, the evaluation mechanism and the preliminary experiment, is open-sourced at https://github.com/alibaba/FederatedScope/tree/master/benchmark/B-FHTL

FedHPO-B: A Benchmark Suite for Federated Hyperparameter Optimization

Jun 20, 2022

Abstract:Hyperparameter optimization (HPO) is crucial for machine learning algorithms to achieve satisfactory performance, whose progress has been boosted by related benchmarks. Nonetheless, existing efforts in benchmarking all focus on HPO for traditional centralized learning while ignoring federated learning (FL), a promising paradigm for collaboratively learning models from dispersed data. In this paper, we first identify some uniqueness of HPO for FL algorithms from various aspects. Due to this uniqueness, existing HPO benchmarks no longer satisfy the need to compare HPO methods in the FL setting. To facilitate the research of HPO in the FL setting, we propose and implement a benchmark suite FedHPO-B that incorporates comprehensive FL tasks, enables efficient function evaluations, and eases continuing extensions. We also conduct extensive experiments based on FedHPO-B to benchmark a few HPO methods. We open-source FedHPO-B at https://github.com/alibaba/FederatedScope/tree/master/benchmark/FedHPOB.

pFL-Bench: A Comprehensive Benchmark for Personalized Federated Learning

Jun 17, 2022

Abstract:Personalized Federated Learning (pFL), which utilizes and deploys distinct local models, has gained increasing attention in recent years due to its success in handling the statistical heterogeneity of FL clients. However, standardized evaluation and systematic analysis of diverse pFL methods remain a challenge. Firstly, the highly varied datasets, FL simulation settings and pFL implementations prevent fast and fair comparisons of pFL methods. Secondly, the effectiveness and robustness of pFL methods are under-explored in various practical scenarios, such as generalization to new clients and participation of resource-limited clients. Finally, the current pFL literature diverges in the adopted evaluation and ablation protocols. To tackle these challenges, we propose the first comprehensive pFL benchmark, pFL-Bench, for facilitating rapid, reproducible, standardized and thorough pFL evaluation. The proposed benchmark contains more than 10 datasets in diverse application domains with unified data partition and realistic heterogeneous settings; a modular and easy-to-extend pFL codebase with more than 20 competitive pFL baseline implementations; and systematic evaluations under containerized environments in terms of generalization, fairness, system overhead, and convergence. We highlight the benefits and potential of state-of-the-art pFL methods and hope pFL-Bench enables further pFL research and broad applications that would otherwise be difficult owing to the absence of a dedicated benchmark. The code is released at https://github.com/alibaba/FederatedScope/tree/master/benchmark/pFL-Bench.

FederatedScope-GNN: Towards a Unified, Comprehensive and Efficient Package for Federated Graph Learning

Apr 14, 2022

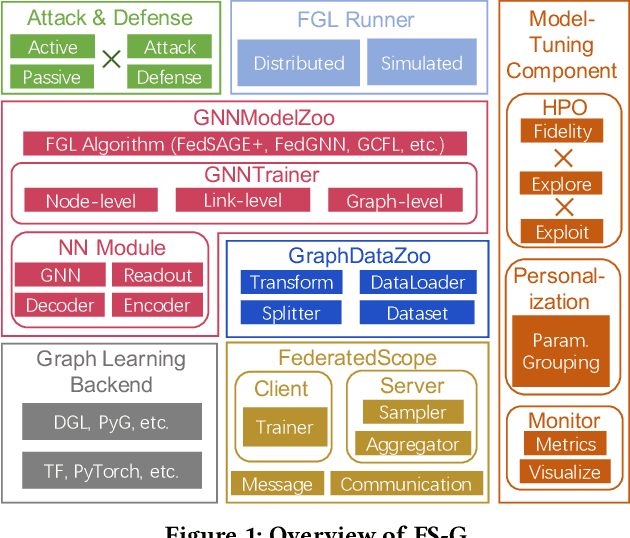

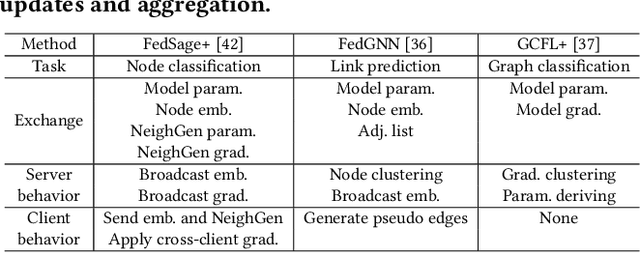

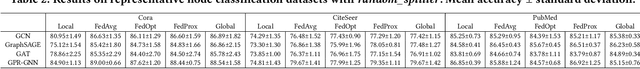

Abstract:The incredible development of federated learning (FL) has benefited various tasks in the domains of computer vision and natural language processing, and existing frameworks such as TFF and FATE have made deployment easy in real-world applications. However, federated graph learning (FGL), even though graph data are prevalent, has not been well supported due to its unique characteristics and requirements. The lack of an FGL-related framework increases the effort required for accomplishing reproducible research and deploying in real-world applications. Motivated by such strong demand, in this paper, we first discuss the challenges in creating an easy-to-use FGL package and accordingly present our implemented package FederatedScope-GNN (FS-G), which provides (1) a unified view for modularizing and expressing FGL algorithms; (2) comprehensive DataZoo and ModelZoo for out-of-the-box FGL capability; (3) an efficient model auto-tuning component; and (4) off-the-shelf privacy attack and defense abilities. We validate the effectiveness of FS-G by conducting extensive experiments, which simultaneously yield many valuable insights about FGL for the community. Moreover, we employ FS-G to serve the FGL application in real-world E-commerce scenarios, where the attained improvements indicate great potential business benefits. We publicly release FS-G, as submodules of FederatedScope, at https://github.com/alibaba/FederatedScope to promote FGL's research and enable broad applications that would otherwise be infeasible due to the lack of a dedicated package.

FederatedScope: A Comprehensive and Flexible Federated Learning Platform via Message Passing

Apr 11, 2022

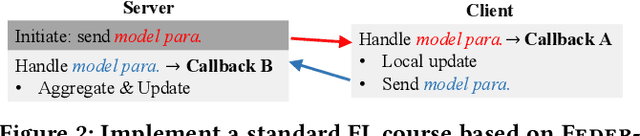

Abstract:Although remarkable progress has been made by the existing federated learning (FL) platforms to provide fundamental functionalities for development, these FL platforms cannot adequately satisfy burgeoning demands from rapidly growing FL tasks in both academia and industry. To fill this gap, in this paper, we propose a novel and comprehensive federated learning platform, named FederatedScope, which is based on a message-oriented framework. Towards more convenient and flexible support for various FL tasks, FederatedScope frames an FL course into several rounds of message passing among participants, and allows developers to customize new types of exchanged messages and the corresponding handlers for various FL applications. Compared to the procedural framework, the proposed message-oriented framework is more flexible in expressing heterogeneous message exchange and the rich behaviors of participants, and provides a unified view for both simulation and deployment. Besides, we also include several functional components in FederatedScope, such as personalization, auto-tuning, and privacy protection, to satisfy the requirements of frontier studies in FL. We conduct a series of experiments on the provided easy-to-use and comprehensive FL benchmarks to validate the correctness and efficiency of FederatedScope. We have released FederatedScope for users at https://github.com/alibaba/FederatedScope to promote research and industrial deployment of federated learning in a variety of real-world applications.
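
The message-oriented idea in this abstract (an FL course as rounds of message passing, with developer-defined message types and handlers) can be illustrated with a small, self-contained sketch. This is not FederatedScope's actual API: the names `Message`, `Participant`, `register_handler`, `make_client`, `make_server`, and `run_round` are hypothetical, and the "model" is a single float averaged across clients purely for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Message:
    """Hypothetical message exchanged between participants."""
    msg_type: str
    sender: str
    content: Any


Handler = Callable[[Message], List[Message]]


class Participant:
    """A participant whose behavior is defined by per-message-type handlers."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._handlers: Dict[str, Handler] = {}

    def register_handler(self, msg_type: str, handler: Handler) -> None:
        # Developers add new behaviors by registering handlers for new types.
        self._handlers[msg_type] = handler

    def handle(self, msg: Message) -> List[Message]:
        # Unknown message types are ignored in this sketch.
        handler = self._handlers.get(msg.msg_type)
        return handler(msg) if handler else []


def make_client(name: str, local_delta: float) -> Participant:
    """Client that replies to a model broadcast with a toy 'update'."""
    client = Participant(name)

    def on_model(msg: Message) -> List[Message]:
        return [Message("update", name, msg.content + local_delta)]

    client.register_handler("model_para", on_model)
    return client


def make_server(num_clients: int) -> Participant:
    """Server that averages updates once all clients have replied."""
    server = Participant("server")
    buffer: List[float] = []

    def on_update(msg: Message) -> List[Message]:
        buffer.append(msg.content)
        if len(buffer) < num_clients:
            return []
        avg = sum(buffer) / len(buffer)
        buffer.clear()
        return [Message("model_para", "server", avg)]

    server.register_handler("update", on_update)
    return server


def run_round(server: Participant, clients: List[Participant],
              global_value: float) -> List[Message]:
    """One round: broadcast the model, collect updates, aggregate."""
    replies: List[Message] = []
    for client in clients:
        replies.extend(
            client.handle(Message("model_para", server.name, global_value)))
    outgoing: List[Message] = []
    for reply in replies:
        outgoing.extend(server.handle(reply))
    return outgoing
```

In this sketch, supporting a new kind of exchange means registering one more handler on the relevant participants, while the round loop stays unchanged; that is the flexibility the abstract attributes to a message-oriented design over a procedural one.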
