Weipeng Huang

Correcting Noisy Multilabel Predictions: Modeling Label Noise through Latent Space Shifts

Feb 20, 2025

Abstract: Noise in data appears to be inevitable in most real-world machine learning applications and can cause severe overfitting. Not only can data features contain noise, but labels are also prone to noise due to human input. In this paper, rather than noisy label learning in multiclass classification, we focus on the less explored area of noisy label learning for multilabel classification. Specifically, we investigate the post-correction of predictions generated by classifiers learned with noisy labels. The reasons are two-fold. Firstly, this approach can work directly with the trained models, saving computational resources. Secondly, it can be applied on top of other noisy label correction techniques to achieve further improvements. To handle this problem, we appeal to deep generative approaches that support uncertainty estimation. Our model posits that label noise arises from a stochastic shift in the latent variable, providing a more robust and beneficial means for noisy learning. We develop both unsupervised and semi-supervised learning methods for our model. The extensive empirical study presents solid evidence that our approach consistently improves the independent models and performs better than a number of existing methods across various noisy label settings. Moreover, a comprehensive empirical analysis of the proposed method is carried out to validate its robustness, including a sensitivity analysis and an ablation study, among other elements.

The Utility of "Even if..." Semifactual Explanation to Optimise Positive Outcomes

Oct 29, 2023

Abstract: When users receive either a positive or negative outcome from an automated system, Explainable AI (XAI) has almost exclusively focused on how to mutate negative outcomes into positive ones by crossing a decision boundary using counterfactuals (e.g., "If you earn 2k more, we will accept your loan application"). Here, we instead focus on positive outcomes, and take the novel step of using XAI to optimise them (e.g., "Even if you wish to halve your down-payment, we will still accept your loan application"). Explanations such as these, which employ "even if..." reasoning and do not cross a decision boundary, are known as semifactuals. To instantiate semifactuals in this context, we introduce the concept of Gain (i.e., how much a user stands to benefit from the explanation), and consider the first causal formalisation of semifactuals. Tests on benchmark datasets show our algorithms are better at maximising gain compared to prior work, and that causality is important in the process. Most importantly, however, a user study supports our main hypothesis by showing people find semifactual explanations more useful than counterfactuals when they receive the positive outcome of a loan acceptance.

Rényi Divergence Deep Mutual Learning

Sep 15, 2022

Abstract: This paper revisits an incredibly simple yet exceedingly effective computing paradigm, Deep Mutual Learning (DML). We observe that its effectiveness correlates highly with its excellent generalization quality. In the paper, we interpret the performance improvement with DML from a novel perspective: it is roughly an approximate Bayesian posterior sampling procedure. This also establishes the foundation for applying the Rényi divergence to improve the original DML, as it brings in variance control of the prior (in the context of DML). Therefore, we propose Rényi Divergence Deep Mutual Learning (RDML). Our empirical results demonstrate the advantage of the marriage of DML and the Rényi divergence. The flexible control imposed by the Rényi divergence is able to further improve DML to learn better generalized models.
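
For reference, the Rényi divergence mentioned in this abstract is usually defined as follows for discrete distributions $P$ and $Q$ with probabilities $p(x)$ and $q(x)$. This is the standard textbook definition, given here as background only; the symbols $P$, $Q$, and $\alpha$ are assumptions of this note, and the exact form used in RDML is not stated in the abstract.

$$
D_\alpha(P \,\|\, Q) = \frac{1}{\alpha - 1} \log \sum_{x} p(x)^{\alpha}\, q(x)^{1-\alpha}, \qquad \alpha > 0,\ \alpha \neq 1 .
$$

In the limit $\alpha \to 1$ this recovers the Kullback-Leibler divergence $\sum_{x} p(x) \log \frac{p(x)}{q(x)}$, so the order $\alpha$ acts as an extra tuning knob relative to a purely KL-based objective.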

Posterior Regularisation on Bayesian Hierarchical Mixture Clustering

May 17, 2021

Abstract: We study a recent inferential framework, named posterior regularisation, on the Bayesian hierarchical mixture clustering (BHMC) model. This framework provides a simple way to impose extra constraints on a Bayesian model to overcome some weaknesses of the original model. It narrows the search space of the parameters of the Bayesian model through a formalism that imposes certain constraints on the features of the found solutions. In this paper, in order to enhance the separation of clusters, we apply posterior regularisation to impose max-margin constraints on the nodes at every level of the hierarchy. This paper shows how the framework integrates with BHMC and achieves the expected improvements over the original Bayesian model.

Evaluating Hierarchies through A Partially Observable Markov Decision Processes Methodology

Aug 27, 2019

Abstract: Hierarchical clustering has been shown to be valuable in many scenarios, e.g. catalogues, biology research, image processing, and so on. Despite its usefulness in many situations, there is no agreed methodology for properly evaluating the hierarchies produced by different techniques, particularly when ground-truth labels are unavailable. This motivates us to propose a framework for assessing the quality of hierarchical clustering allocations which covers the case of no ground-truth information. Such a quality measurement is useful, for example, to assess the hierarchical structures used by online retailer websites to display their product catalogues. Unlike all previous measures and metrics, our framework tackles the evaluation from a decision-theoretic perspective. We model the process as a bot searching stochastically for items in the hierarchy and establish a measure representing the degree to which the hierarchy supports this search. We employ the concept of Partially Observable Markov Decision Processes (POMDP) to model the uncertainty, the decision making, and the cognitive return for searchers in such a scenario. In this paper, we fully discuss the modeling details and demonstrate its application on some datasets.

BERT-Based Multi-Head Selection for Joint Entity-Relation Extraction

Aug 16, 2019

Abstract: In this paper, we report our method for the Information Extraction task in the 2019 Language and Intelligence Challenge. We incorporate BERT into the multi-head selection framework for joint entity-relation extraction. This model extends existing approaches from three perspectives. First, BERT is adopted as a feature extraction layer at the bottom of the multi-head selection framework. We further optimize BERT by introducing a semantic-enhanced task during BERT pre-training. Second, we introduce a large-scale Baidu Baike corpus for entity recognition pre-training, which is a form of weakly supervised learning since there is no actual named entity label. Third, soft label embedding is proposed to effectively transmit information between entity recognition and relation extraction. Combining these three contributions, we enhance the information extraction ability of the multi-head selection model and achieve an F1 score of 0.876 on testset-1 with a single model. By ensembling four variants of our model, we finally achieve an F1 score of 0.892 (1st place) on testset-1 and an F1 score of 0.8924 (2nd place) on testset-2.

$t$-$k$-means: A $k$-means Variant with Robustness and Stability

Jul 17, 2019

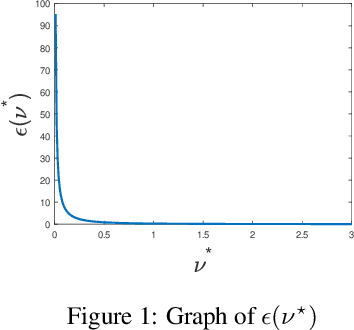

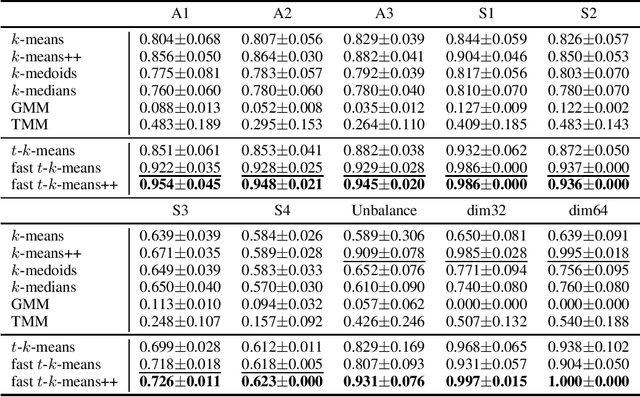

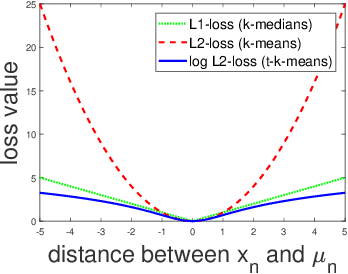

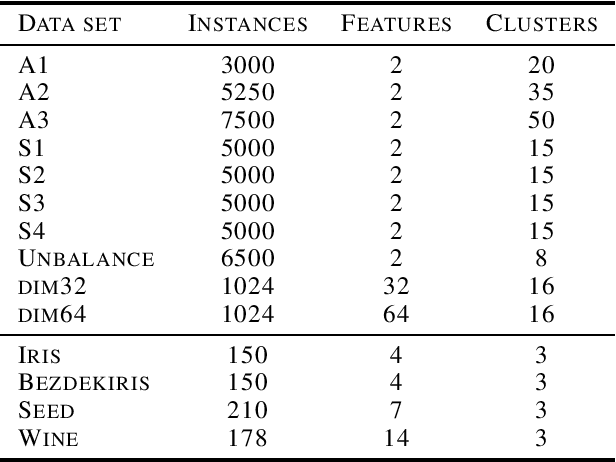

Abstract: Lloyd's $k$-means algorithm is one of the most classical clustering methods and is widely used in data mining or as a data pre-processing procedure. However, due to the thin-tailed property of the Gaussian distribution, $k$-means suffers from relatively poor performance on heavy-tailed data or data with outliers. In addition, $k$-means has relatively weak stability, i.e., its result has a large variance, which reduces the credibility of the model. In this paper, we propose a robust and stable $k$-means variant, the $t$-$k$-means, as well as its fast version, for solving the flat clustering problem. Theoretically, we detail the derivations of $t$-$k$-means and analyze its robustness and stability from the aspects of the loss function, the influence function, and the expression of the clustering center. A large number of experiments are conducted, which demonstrate the empirical soundness of our method while preserving running efficiency.

Bayesian Hierarchical Mixture Clustering using Multilevel Hierarchical Dirichlet Processes

Jun 05, 2019

Abstract: This paper focuses on the problem of hierarchical non-overlapping clustering of a dataset. In such a clustering, each data item is associated with exactly one leaf node and each internal node is associated with all the data items stored in the sub-tree beneath it, so that each level of the hierarchy corresponds to a partition of the dataset. We develop a novel Bayesian nonparametric method combining the nested Chinese Restaurant Process (nCRP) and the Hierarchical Dirichlet Process (HDP). Compared with other existing Bayesian approaches, our solution tackles data with complex latent mixture features, which has not been previously explored in the literature. We discuss the details of the model and the inference procedure. Furthermore, experiments on three datasets show that our method achieves solid empirical results in comparison with existing algorithms.
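
The structural property described in this abstract (each item in exactly one leaf, each internal node covering its sub-tree, each level forming a partition) can be illustrated with a small Python sketch. It is only a toy data structure: `Node`, `level_clusters`, `is_partition`, and the example tree are hypothetical names invented for this illustration, and the sketch does not implement the nCRP/HDP model or its inference.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A node in a toy hierarchy; only leaves store items directly."""
    name: str
    children: list["Node"] = field(default_factory=list)
    items: list[str] = field(default_factory=list)

    def all_items(self) -> set[str]:
        # A leaf returns its own items; an internal node returns
        # the union of the items in the sub-tree beneath it.
        if not self.children:
            return set(self.items)
        result: set[str] = set()
        for child in self.children:
            result |= child.all_items()
        return result


def level_clusters(root: Node, depth: int) -> list[set[str]]:
    """Clusters at `depth`; leaves shallower than `depth` are carried down unchanged."""
    frontier = [root]
    for _ in range(depth):
        frontier = [c for n in frontier for c in (n.children or [n])]
    return [n.all_items() for n in frontier]


def is_partition(clusters: list[set[str]], universe: set[str]) -> bool:
    # Clusters cover the universe, and no item is counted twice.
    covered = set().union(*clusters)
    return covered == universe and sum(len(c) for c in clusters) == len(universe)


# Hypothetical example tree: every item sits in exactly one leaf.
root = Node("root", children=[
    Node("animals", children=[
        Node("cats", items=["a", "b"]),
        Node("dogs", items=["c"]),
    ]),
    Node("plants", items=["d", "e"]),
])

universe = root.all_items()
for depth in range(3):
    print(depth, level_clusters(root, depth), is_partition(level_clusters(root, depth), universe))
```

Because items live only in leaves and each internal node is defined as the union over its sub-tree, every level passes the `is_partition` check in this example.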

Toward Fast and Accurate Neural Chinese Word Segmentation with Multi-Criteria Learning

Mar 11, 2019

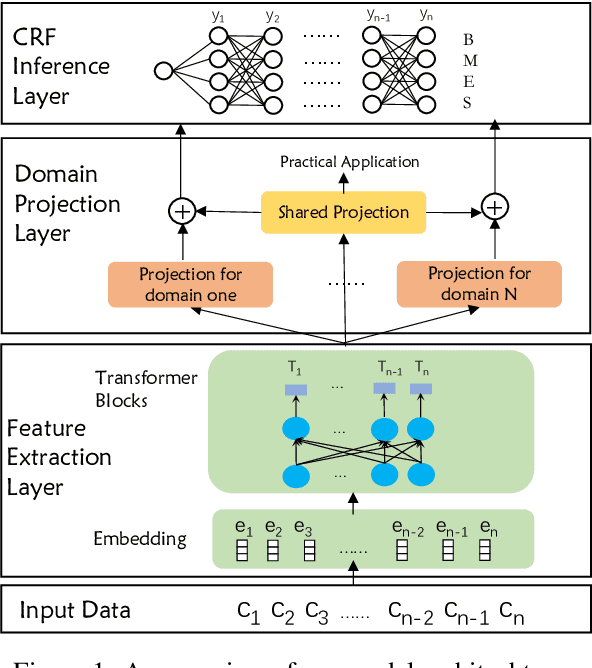

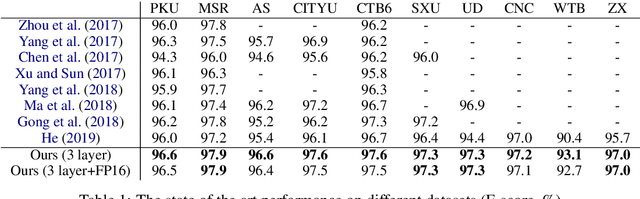

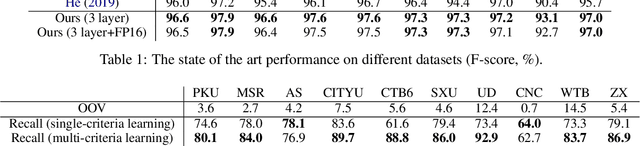

Abstract: Ambiguous annotation criteria lead to divergence among Chinese Word Segmentation (CWS) datasets of various granularities. Multi-criteria learning leverages the annotation style of individual datasets and mines their common basic knowledge. In this paper, we propose a domain-adaptive segmenter to capture the diverse criteria of datasets. Our model is based on Bidirectional Encoder Representations from Transformers (BERT), which is responsible for introducing external knowledge. We also optimize its computational efficiency via model pruning, quantization, and compiler optimization. Experiments show that our segmenter outperforms the previous results on 10 CWS datasets and is faster than the previous state-of-the-art Bi-LSTM-CRF model.
