Wadii Boulila

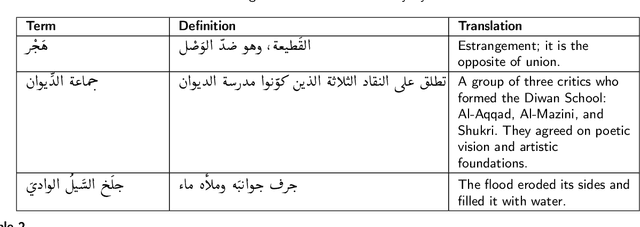

MURAD: A Large-Scale Multi-Domain Unified Reverse Arabic Dictionary Dataset

Jan 29, 2026

Abstract: Arabic is a linguistically and culturally rich language with a vast vocabulary that spans scientific, religious, and literary domains. Yet, large-scale lexical datasets linking Arabic words to precise definitions remain limited. We present MURAD (Multi-domain Unified Reverse Arabic Dictionary), an open lexical dataset with 96,243 word-definition pairs. The data come from trusted reference works and educational sources. Extraction used a hybrid pipeline integrating direct text parsing, optical character recognition, and automated reconstruction. This ensures accuracy and clarity. Each record aligns a target word with its standardized Arabic definition and metadata that identifies the source domain. The dataset covers terms from linguistics, Islamic studies, mathematics, physics, psychology, and engineering. It supports computational linguistics and lexicographic research. Applications include reverse dictionary modeling, semantic retrieval, and educational tools. By releasing this resource, we aim to advance Arabic natural language processing and promote reproducible research on Arabic lexical semantics.

The Path Ahead for Agentic AI: Challenges and Opportunities

Jan 06, 2026

Abstract: The evolution of Large Language Models (LLMs) from passive text generators to autonomous, goal-driven systems represents a fundamental shift in artificial intelligence. This chapter examines the emergence of agentic AI systems that integrate planning, memory, tool use, and iterative reasoning to operate autonomously in complex environments. We trace the architectural progression from statistical models to transformer-based systems, identifying capabilities that enable agentic behavior: long-range reasoning, contextual awareness, and adaptive decision-making. The chapter provides three contributions: (1) a synthesis of how LLM capabilities extend toward agency through reasoning-action-reflection loops; (2) an integrative framework describing the core components (perception, memory, planning, and tool execution) that bridge LLMs with autonomous behavior; (3) a critical assessment of applications and persistent challenges in safety, alignment, reliability, and sustainability. Unlike existing surveys, we focus on the architectural transition from language understanding to autonomous action, emphasizing the technical gaps that must be resolved before deployment. We identify critical research priorities, including verifiable planning, scalable multi-agent coordination, persistent memory architectures, and governance frameworks. Responsible advancement requires simultaneous progress in technical robustness, interpretability, and ethical safeguards to realize the potential of agentic AI while mitigating risks of misalignment and unintended consequences.

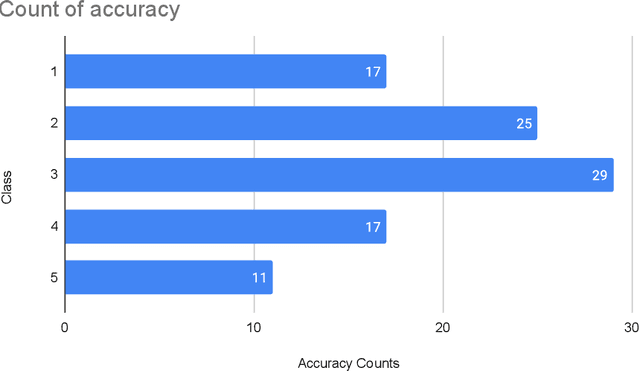

ARCADE: A City-Scale Corpus for Fine-Grained Arabic Dialect Tagging

Jan 05, 2026

Abstract: The Arabic language is characterized by a rich tapestry of regional dialects that differ substantially in phonetics and lexicon, reflecting the geographic and cultural diversity of its speakers. Despite the availability of many multi-dialect datasets, mapping speech to fine-grained dialect sources, such as cities, remains underexplored. We present ARCADE (Arabic Radio Corpus for Audio Dialect Evaluation), the first Arabic speech dataset designed explicitly with city-level dialect granularity. The corpus comprises Arabic radio speech collected from streaming services across the Arab world. Our data pipeline captures 30-second segments from verified radio streams, encompassing both Modern Standard Arabic (MSA) and diverse dialectal speech. To ensure reliability, each clip was annotated by one to three native Arabic reviewers who assigned rich metadata, including emotion, speech type, dialect category, and a validity flag for dialect identification tasks. The resulting corpus comprises 6,907 annotations and 3,790 unique audio segments spanning 58 cities across 19 countries. These fine-grained annotations enable robust multi-task learning, serving as a benchmark for city-level dialect tagging. We detail the data collection methodology, assess audio quality, and provide a comprehensive analysis of label distributions. The dataset is available at: https://huggingface.co/datasets/riotu-lab/ARCADE-full

Enhancing Early Alzheimer Disease Detection through Big Data and Ensemble Few-Shot Learning

Oct 22, 2025

Abstract: Alzheimer disease is a severe brain disorder that causes harm in various brain areas and leads to memory damage. The limited availability of labeled medical data poses a significant challenge for accurate Alzheimer disease detection. There is a critical need for effective methods to improve the accuracy of Alzheimer disease detection, considering the scarcity of labeled data, the complexity of the disease, and the constraints related to data privacy. To address this challenge, our study leverages the power of big data in the form of pre-trained Convolutional Neural Networks (CNNs) within the framework of Few-Shot Learning (FSL) and ensemble learning. We propose an ensemble approach based on a Prototypical Network (ProtoNet), a powerful method in FSL, integrating various pre-trained CNNs as encoders. This integration enhances the richness of features extracted from medical images. Our approach also includes a combination of class-aware loss and entropy loss to ensure a more precise classification of Alzheimer disease progression levels. The effectiveness of our method was evaluated using two datasets, the Kaggle Alzheimer dataset and the ADNI dataset, achieving an accuracy of 99.72% and 99.86%, respectively. The comparison of our results with relevant state-of-the-art studies demonstrated that our approach achieved superior accuracy and highlighted its validity and potential for real-world applications in early Alzheimer disease detection.
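
The prototype step of a Prototypical Network can be illustrated with a minimal sketch. It assumes the embeddings have already been produced by some encoder; the toy 2-D vectors, the label names, and the helper functions below are hypothetical and not taken from the paper. The abstract does not specify how the ensemble combines encoders or how the class-aware and entropy losses are defined, so neither is modeled here.

```python
from collections import defaultdict


def class_prototypes(support):
    """Return a mapping label -> mean embedding over that label's support examples."""
    grouped = defaultdict(list)
    for embedding, label in support:
        grouped[label].append(embedding)
    return {
        label: [sum(column) / len(embeddings) for column in zip(*embeddings)]
        for label, embeddings in grouped.items()
    }


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(query, prototypes):
    """Assign the query embedding to the label of its nearest prototype."""
    return min(prototypes, key=lambda label: squared_distance(query, prototypes[label]))


# Hypothetical support set: (embedding, label) pairs with made-up labels.
support = [
    ([0.0, 0.1], "non_demented"),
    ([0.2, 0.0], "non_demented"),
    ([1.0, 0.9], "mild"),
    ([0.8, 1.1], "mild"),
]
prototypes = class_prototypes(support)
predicted = classify([0.9, 1.0], prototypes)
```

Here each class is summarized by the mean of its few labeled examples, which is what lets the method work when labeled data are scarce.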

NTIRE 2025 Image Shadow Removal Challenge Report

Jun 18, 2025

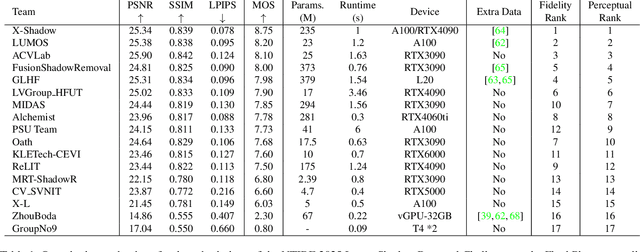

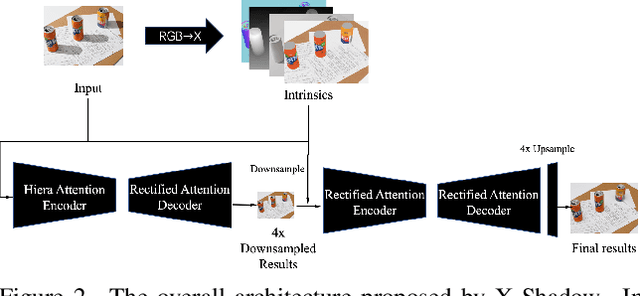

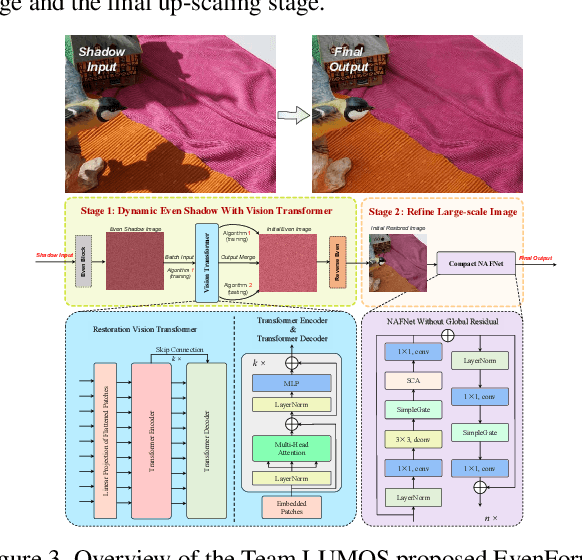

Abstract: This work examines the findings of the NTIRE 2025 Shadow Removal Challenge. A total of 306 participants registered, with 17 teams successfully submitting their solutions during the final evaluation phase. Following the last two editions, this challenge had two evaluation tracks: one focusing on reconstruction fidelity and the other on visual perception through a user study. Both tracks were evaluated with images from the WSRD+ dataset, simulating interactions between self- and cast-shadows with a large number of diverse objects, textures, and materials.

SARD: A Large-Scale Synthetic Arabic OCR Dataset for Book-Style Text Recognition

May 30, 2025

Abstract: Arabic Optical Character Recognition (OCR) is essential for converting vast amounts of Arabic print media into digital formats. However, training modern OCR models, especially powerful vision-language models, is hampered by the lack of large, diverse, and well-structured datasets that mimic real-world book layouts. Existing Arabic OCR datasets often focus on isolated words or lines, or lack the scale, typographic variety, or structural complexity found in books. To address this significant gap, we introduce SARD (Large-Scale Synthetic Arabic OCR Dataset). SARD is a massive, synthetically generated dataset specifically designed to simulate book-style documents. It comprises 843,622 document images containing 690 million words, rendered across ten distinct Arabic fonts to ensure broad typographic coverage. Unlike datasets derived from scanned documents, SARD is free from real-world noise and distortions, offering a clean and controlled environment for model training. Its synthetic nature provides unparalleled scalability and allows for precise control over layout and content variation. We detail the dataset's composition and generation process and provide benchmark results for several OCR models, including traditional and deep learning approaches, highlighting the challenges and opportunities presented by this dataset. SARD serves as a valuable resource for developing and evaluating robust OCR and vision-language models capable of processing diverse Arabic book-style texts.

GATE: General Arabic Text Embedding for Enhanced Semantic Textual Similarity with Matryoshka Representation Learning and Hybrid Loss Training

May 30, 2025

Abstract: Semantic textual similarity (STS) is a critical task in natural language processing (NLP), enabling applications in retrieval, clustering, and understanding semantic relationships between texts. However, research in this area for the Arabic language remains limited due to the lack of high-quality datasets and pre-trained models. This scarcity of resources has restricted the accurate evaluation and advancement of semantic similarity in Arabic text. This paper introduces General Arabic Text Embedding (GATE) models that achieve state-of-the-art performance on the Semantic Textual Similarity task within the MTEB benchmark. GATE leverages Matryoshka Representation Learning and a hybrid loss training approach with Arabic triplet datasets for Natural Language Inference, which are essential for enhancing model performance in tasks that demand fine-grained semantic understanding. GATE outperforms larger models, including those from OpenAI, with a 20-25% performance improvement on STS benchmarks, effectively capturing the unique semantic nuances of Arabic.
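
A practical consequence of Matryoshka Representation Learning is that a prefix of the embedding can be used as a smaller embedding in its own right. The sketch below shows only that inference-time use: truncate two vectors to their first `dim` coordinates, re-normalize, and compare with cosine similarity. The vectors and function names are hypothetical, and the hybrid training loss from the abstract is not modeled.

```python
import math


def truncate_and_normalize(embedding, dim):
    """Keep the first `dim` coordinates and rescale the prefix to unit length."""
    prefix = embedding[:dim]
    norm = math.sqrt(sum(x * x for x in prefix))
    if norm == 0.0:
        raise ValueError("cannot normalize an all-zero prefix")
    return [x / norm for x in prefix]


def cosine_at_dim(a, b, dim):
    """Cosine similarity between the first `dim` coordinates of a and b."""
    unit_a = truncate_and_normalize(a, dim)
    unit_b = truncate_and_normalize(b, dim)
    return sum(x * y for x, y in zip(unit_a, unit_b))


# Hypothetical 4-dimensional embeddings; real models use hundreds of dimensions.
emb_a = [0.6, 0.8, 0.1, -0.2]
emb_b = [0.6, 0.8, -0.3, 0.4]

sim_2 = cosine_at_dim(emb_a, emb_b, 2)  # similarity using the 2-dim prefix
sim_4 = cosine_at_dim(emb_a, emb_b, 4)  # similarity using all 4 dimensions
```

In this toy case the two similarities differ because the vectors were written by hand; a Matryoshka-trained model is optimized so that similarities at reduced dimensions stay close to the full-dimension ones.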

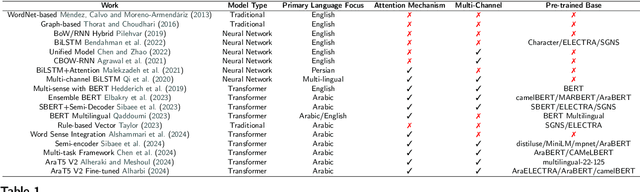

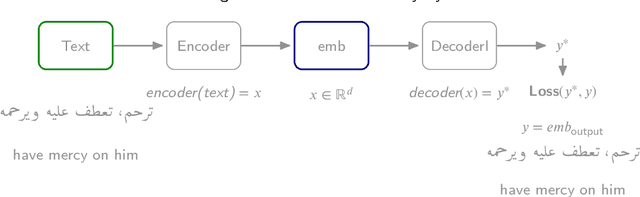

Advancing Arabic Reverse Dictionary Systems: A Transformer-Based Approach with Dataset Construction Guidelines

Apr 30, 2025

Abstract: This study addresses the critical gap in Arabic natural language processing by developing an effective Arabic Reverse Dictionary (RD) system that enables users to find words based on their descriptions or meanings. We present a novel transformer-based approach with a semi-encoder neural network architecture featuring geometrically decreasing layers that achieves state-of-the-art results for Arabic RD tasks. Our methodology incorporates a comprehensive dataset construction process and establishes formal quality standards for Arabic lexicographic definitions. Experiments with various pre-trained models demonstrate that Arabic-specific models significantly outperform general multilingual embeddings, with ARBERTv2 achieving the best ranking score (0.0644). Additionally, we provide a formal abstraction of the reverse dictionary task that enhances theoretical understanding and develop a modular, extensible Python library (RDTL) with configurable training pipelines. Our analysis of dataset quality reveals important insights for improving Arabic definition construction, leading to eight specific standards for building high-quality reverse dictionary resources. This work contributes significantly to Arabic computational linguistics and provides valuable tools for language learning, academic writing, and professional communication in Arabic.

NTIRE 2025 Challenge on Image Super-Resolution ($\times$4): Methods and Results

Apr 20, 2025

Abstract: This paper presents the NTIRE 2025 image super-resolution ($\times$4) challenge, one of the associated competitions of the 10th NTIRE Workshop at CVPR 2025. The challenge aims to recover high-resolution (HR) images from low-resolution (LR) counterparts generated through bicubic downsampling with a $\times$4 scaling factor. The objective is to develop effective network designs or solutions that achieve state-of-the-art SR performance. To reflect the dual objectives of image SR research, the challenge includes two sub-tracks: (1) a restoration track, which emphasizes pixel-wise accuracy and ranks submissions based on PSNR; (2) a perceptual track, which focuses on visual realism and ranks results by a perceptual score. A total of 286 participants registered for the competition, with 25 teams submitting valid entries. This report summarizes the challenge design, datasets, evaluation protocol, main results, and methods of each team. The challenge serves as a benchmark to advance the state of the art and foster progress in image SR.

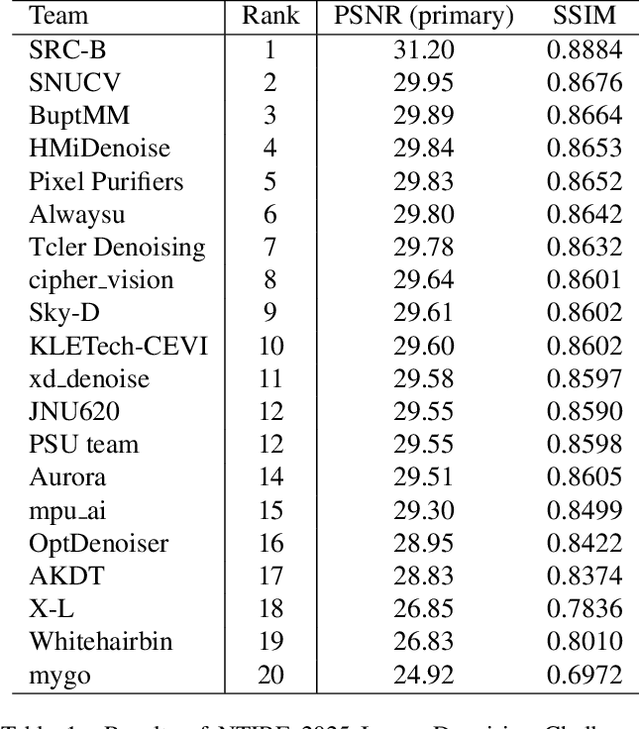

The Tenth NTIRE 2025 Image Denoising Challenge Report

Apr 16, 2025

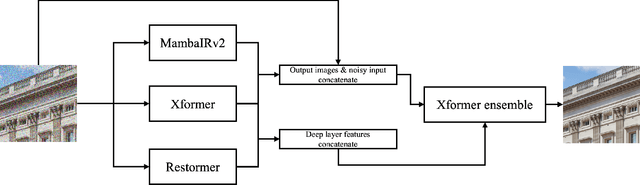

Abstract: This paper presents an overview of the NTIRE 2025 Image Denoising Challenge ($\sigma = 50$), highlighting the proposed methodologies and corresponding results. The primary objective is to develop a network architecture capable of achieving high-quality denoising performance, quantitatively evaluated using PSNR, without constraints on computational complexity or model size. The task assumes independent additive white Gaussian noise (AWGN) with a fixed noise level of 50. A total of 290 participants registered for the challenge, with 20 teams successfully submitting valid results, providing insights into the current state of the art in image denoising.
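
The noise model and the metric named in the abstract can be sketched as follows. This is an illustration only, assuming 8-bit intensities with a peak value of 255 and no clipping after noise is added; the challenge's actual evaluation code may differ, and the function names and the flat synthetic "image" are hypothetical.

```python
import math
import random


def add_awgn(pixels, sigma=50.0, seed=0):
    """Add independent zero-mean Gaussian noise to each pixel (no clipping)."""
    rng = random.Random(seed)
    return [p + rng.gauss(0.0, sigma) for p in pixels]


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if len(reference) != len(estimate):
        raise ValueError("inputs must have the same length")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0.0:
        return math.inf  # identical signals
    return 10.0 * math.log10(peak ** 2 / mse)


# Hypothetical flat gray "image" flattened to a list of 10,000 pixels.
clean = [128.0] * 10000
noisy = add_awgn(clean, sigma=50.0)
noisy_psnr = psnr(clean, noisy)
```

Under these assumptions the mean squared error of the noisy input is close to $\sigma^2 = 2500$, so its PSNR is roughly $10 \log_{10}(255^2 / 2500) \approx 14.15$ dB. A denoiser is judged by how far it raises the PSNR above that starting point.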
