Tong Hua

A Multi-view Landmark Representation Approach with Application to GNSS-Visual-Inertial Odometry

Aug 07, 2025

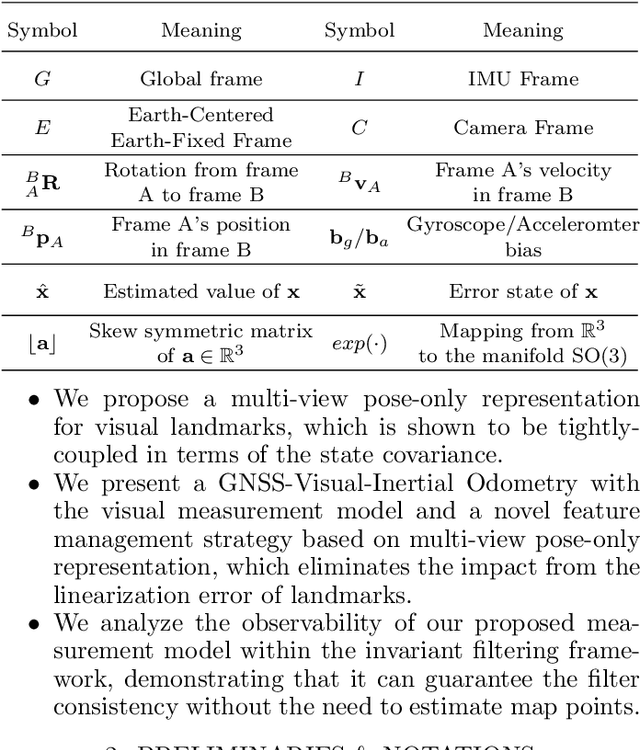

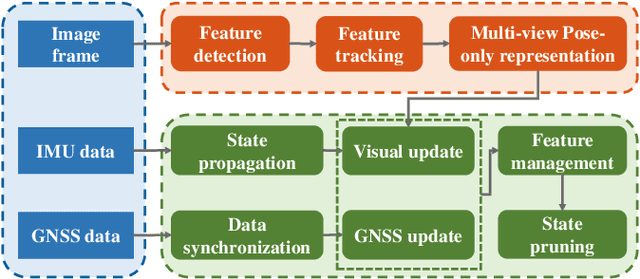

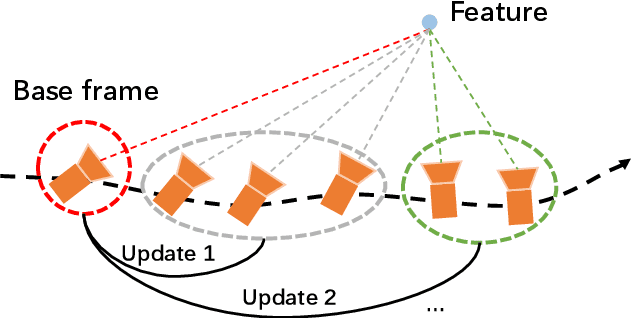

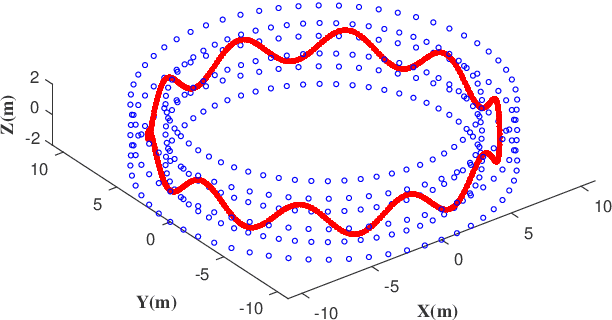

Abstract: The Invariant Extended Kalman Filter (IEKF) has been a significant technique in vision-aided sensor fusion. However, it usually suffers from a high computational burden when jointly optimizing camera poses and landmarks. To improve its efficiency and applicability for multi-sensor fusion, we present a multi-view pose-only estimation approach and apply it to GNSS-Visual-Inertial Odometry (GVIO) in this paper. Our main contribution is deriving a visual measurement model which directly associates landmark representation with multiple camera poses and observations. Such a pose-only measurement is proven to be tightly coupled between landmarks and poses, and to maintain a perfect null space that is independent of estimated poses. Finally, we apply the proposed approach to a filter-based GVIO with a novel feature management strategy. Both simulation tests and real-world experiments are conducted to demonstrate the superiority of the proposed method in terms of efficiency and accuracy.

FAST-LIVO2: Fast, Direct LiDAR-Inertial-Visual Odometry

Aug 26, 2024

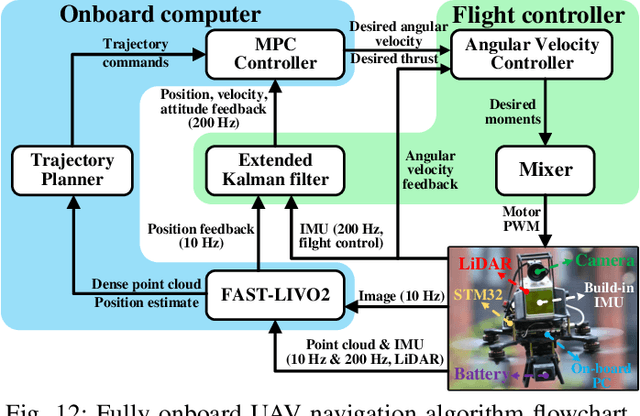

Abstract: This paper proposes FAST-LIVO2: a fast, direct LiDAR-inertial-visual odometry framework to achieve accurate and robust state estimation in SLAM tasks and provide great potential in real-time, onboard robotic applications. FAST-LIVO2 fuses the IMU, LiDAR and image measurements efficiently through an ESIKF. To address the dimension mismatch between the heterogeneous LiDAR and image measurements, we use a sequential update strategy in the Kalman filter. To enhance the efficiency, we use direct methods for both the visual and LiDAR fusion, where the LiDAR module registers raw points without extracting edge or plane features and the visual module minimizes direct photometric errors without extracting ORB or FAST corner features. The fusion of both visual and LiDAR measurements is based on a single unified voxel map where the LiDAR module constructs the geometric structure for registering new LiDAR scans and the visual module attaches image patches to the LiDAR points. To enhance the accuracy of image alignment, we use plane priors from the LiDAR points in the voxel map (and even refine the plane prior) and update the reference patch dynamically after new images are aligned. Furthermore, to enhance the robustness of image alignment, FAST-LIVO2 employs an on-demanding raycast operation and estimates the image exposure time in real time. Lastly, we detail three applications of FAST-LIVO2: UAV onboard navigation demonstrating the system's computation efficiency for real-time onboard navigation, airborne mapping showcasing the system's mapping accuracy, and 3D model rendering (mesh-based and NeRF-based) underscoring the suitability of our reconstructed dense map for subsequent rendering tasks. We open source our code, dataset and application on GitHub to benefit the robotics community.
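
The sequential update idea mentioned in the abstract can be illustrated with a toy linear Kalman filter. The sketch below is not the FAST-LIVO2 implementation (which uses an error-state iterated filter on real LiDAR and image residuals); it only shows, under the assumptions of a linear model and mutually independent noise, that applying two measurement blocks of different dimensions one after the other gives the same posterior as a single stacked update. The names `kf_update`, `H_a`, `H_b` and all numeric values are hypothetical.

```python
import numpy as np

def kf_update(x, P, z, H, R):
    """Standard linear Kalman measurement update (illustrative helper)."""
    S = H @ P @ H.T + R                  # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)       # Kalman gain
    x_new = x + K @ (z - H @ x)          # corrected state
    P_new = (np.eye(len(x)) - K @ H) @ P # corrected covariance
    return x_new, P_new

rng = np.random.default_rng(0)
n = 4                                    # toy state dimension (assumption)
x0 = rng.normal(size=n)
A = rng.normal(size=(n, n))
P0 = A @ A.T + np.eye(n)                 # symmetric positive-definite prior

# Two measurement blocks with different dimensions (3 and 2), standing in
# for heterogeneous sensors; their noises are assumed independent.
H_a, R_a, z_a = rng.normal(size=(3, n)), 0.1 * np.eye(3), rng.normal(size=3)
H_b, R_b, z_b = rng.normal(size=(2, n)), 0.5 * np.eye(2), rng.normal(size=2)

# Sequential update: block a first, then block b on the updated state.
x_seq, P_seq = kf_update(x0, P0, z_a, H_a, R_a)
x_seq, P_seq = kf_update(x_seq, P_seq, z_b, H_b, R_b)

# Joint update: stack both blocks into one measurement.
H = np.vstack([H_a, H_b])
R = np.block([[R_a, np.zeros((3, 2))], [np.zeros((2, 3)), R_b]])
z = np.concatenate([z_a, z_b])
x_joint, P_joint = kf_update(x0, P0, z, H, R)

print(np.allclose(x_seq, x_joint), np.allclose(P_seq, P_joint))
```

In the nonlinear, iterated setting the two orderings are no longer exactly equivalent, so this equivalence should be read only as motivation for processing each sensor's residuals in its own update step.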

TON-VIO: Online Time Offset Modeling Networks for Robust Temporal Alignment in High Dynamic Motion VIO

Mar 19, 2024

Abstract: Temporal misalignment (time offset) between sensors is common in low-cost visual-inertial odometry (VIO) systems. Such temporal misalignment introduces inconsistent constraints for state estimation, leading to significant positioning drift, especially in high dynamic motion scenarios. In this article, we focus on online temporal calibration to reduce the positioning drift caused by the time offset for high dynamic motion VIO. For the time offset observation model, most existing methods rely on accurate state estimation or stable visual tracking. For the prediction model, current methods oversimplify the time offset as a constant value with white Gaussian noise. However, these ideal conditions are seldom satisfied in real high dynamic scenarios, resulting in poor performance. In this paper, we introduce online time offset modeling networks (TON) to enhance real-time temporal calibration. TON improves the accuracy of time offset observation and prediction modeling. Specifically, for observation modeling, we propose feature velocity observation networks to enhance velocity computation for features in unstable visual tracking conditions. For prediction modeling, we present time offset prediction networks to learn its evolution pattern. To highlight the effectiveness of our method, we integrate the proposed TON into both optimization-based and filter-based VIO systems. Simulation and real-world experiments are conducted to demonstrate the enhanced performance of our approach. Additionally, to contribute to the VIO community, we will open-source the code of our method at https://github.com/Franky-X/FVON-TPN.
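
For context, the baseline that the abstract criticizes (a constant time offset driven by white Gaussian noise) can be sketched as a scalar Kalman filter. This is a toy illustration and not the TON method: it assumes a simplified observation in which a feature's pixel shift equals its image-plane velocity times the time offset, and the function name `estimate_time_offset` and every numeric value below are hypothetical.

```python
import numpy as np

def estimate_time_offset(velocities, shifts, q=1e-10, r=0.25, td0=0.0, p0=1e-2):
    """Scalar Kalman filter for a time offset td (seconds).

    Prediction model (baseline assumption): td stays constant and only its
    variance grows by q per step. Observation model (toy assumption):
    shift_px = velocity_px_per_s * td + noise with variance r.
    """
    td, p = td0, p0
    for v, z in zip(velocities, shifts):
        p = p + q                   # predict: mean unchanged, variance grows
        s = v * p * v + r           # innovation variance
        k = p * v / s               # Kalman gain
        td = td + k * (z - v * td)  # correct with the pixel-shift residual
        p = (1.0 - k * v) * p
    return td, p

# Simulated data: true offset of 20 ms, feature speeds of 50 to 200 px/s,
# and pixel noise with 0.5 px standard deviation (all assumed values).
rng = np.random.default_rng(1)
td_true = 0.02
vel = rng.uniform(50.0, 200.0, size=200)
obs = vel * td_true + rng.normal(scale=0.5, size=200)

td_hat, var_hat = estimate_time_offset(vel, obs)
print(f"estimated offset: {td_hat:.4f} s")
```

The filter relies on accurate feature velocities and on the offset really being constant; those are the two assumptions that the abstract says break down under high dynamic motion and unstable visual tracking.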

PLV-IEKF: Consistent Visual-Inertial Odometry using Points, Lines, and Vanishing Points

Nov 08, 2023

Abstract: In this paper, we propose an Invariant Extended Kalman Filter (IEKF) based Visual-Inertial Odometry (VIO) using multiple features in man-made environments. Conventional EKF-based VIO usually suffers from system inconsistency and angular drift that naturally occurs in feature-based methods. However, in man-made environments, notable structural regularities, such as lines and vanishing points, offer valuable cues for localization. To exploit these structural features effectively and maintain system consistency, we design a right invariant filter-based VIO scheme incorporating point, line, and vanishing point features. We demonstrate that the conventional additive error definition for point features can also preserve system consistency like the invariant error definition by proving a mathematically equivalent measurement model. A similar conclusion is established for line features. Additionally, we conduct an invariant filter-based observability analysis proving that the vanishing point measurement maintains unobservable directions naturally. Both simulation and real-world tests are conducted to validate our method's pose accuracy and consistency. The experimental results validate the competitive performance of our method, highlighting its ability to deliver accurate and consistent pose estimation in man-made environments.

PIEKF-VIWO: Visual-Inertial-Wheel Odometry using Partial Invariant Extended Kalman Filter

Mar 14, 2023

Abstract: The Invariant Extended Kalman Filter (IEKF) has been successfully applied in Visual-Inertial Odometry (VIO) as an advanced variant of the Kalman filter, showing great potential in sensor fusion. In this paper, we propose a partial IEKF (PIEKF), which incorporates only the rotation-velocity state into the Lie group structure, and apply it to Visual-Inertial-Wheel Odometry (VIWO) to improve positioning accuracy and consistency. Specifically, we derive the rotation-velocity measurement model, which combines wheel measurements with kinematic constraints. The model circumvents the wheel odometer's 3D integration and covariance propagation, which is essential for filter consistency. A plane constraint is also introduced to enhance the position accuracy. A dynamic outlier detection method is adopted, leveraging the velocity state output. Through simulation and real-world tests, we validate the effectiveness of our approach, which outperforms the standard Multi-State Constraint Kalman Filter (MSCKF) based VIWO in consistency and accuracy.
