Dongjiao He

FAST-LIVO2: Fast, Direct LiDAR-Inertial-Visual Odometry

Aug 26, 2024

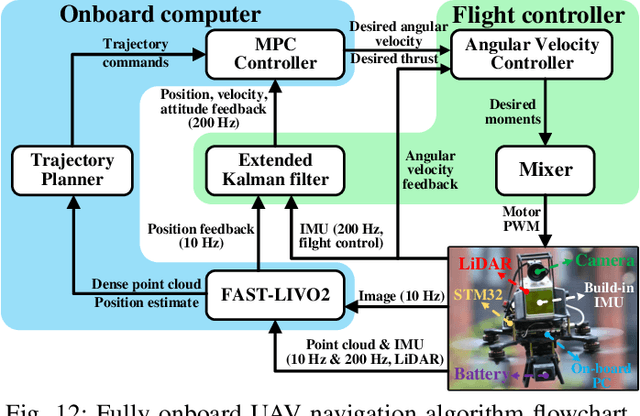

Abstract: This paper proposes FAST-LIVO2, a fast, direct LiDAR-inertial-visual odometry framework that achieves accurate and robust state estimation in SLAM tasks and offers great potential for real-time, onboard robotic applications. FAST-LIVO2 fuses the IMU, LiDAR and image measurements efficiently through an error-state iterated Kalman filter (ESIKF). To address the dimension mismatch between the heterogeneous LiDAR and image measurements, we use a sequential update strategy in the Kalman filter. To enhance the efficiency, we use direct methods for both the visual and LiDAR fusion, where the LiDAR module registers raw points without extracting edge or plane features and the visual module minimizes direct photometric errors without extracting ORB or FAST corner features. The fusion of both visual and LiDAR measurements is based on a single unified voxel map where the LiDAR module constructs the geometric structure for registering new LiDAR scans and the visual module attaches image patches to the LiDAR points. To enhance the accuracy of image alignment, we use plane priors from the LiDAR points in the voxel map (and even refine the plane prior) and update the reference patch dynamically after new images are aligned. Furthermore, to enhance the robustness of image alignment, FAST-LIVO2 employs an on-demand raycast operation and estimates the image exposure time in real time. Lastly, we detail three applications of FAST-LIVO2: UAV onboard navigation, demonstrating the system's computational efficiency for real-time onboard navigation; airborne mapping, showcasing the system's mapping accuracy; and 3D model rendering (mesh-based and NeRF-based), underscoring the suitability of our reconstructed dense map for subsequent rendering tasks. We open-source our code, dataset and application on GitHub to benefit the robotics community.
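
The sequential update strategy mentioned in the abstract can be illustrated with a toy linear example. The sketch below is not the FAST-LIVO2 implementation: it assumes a scalar state, two scalar measurements with independent noise (standing in for the LiDAR and image residuals), and hypothetical names and values throughout. It only shows why processing one measurement source after the other can give the same posterior as a joint update in the linear case.

```python
def kalman_update(x, P, z, h, r):
    """Standard scalar Kalman update for the measurement z = h * x + noise (variance r)."""
    S = h * P * h + r          # innovation variance
    K = P * h / S              # Kalman gain
    x_new = x + K * (z - h * x)
    P_new = (1.0 - K * h) * P
    return x_new, P_new

# Hypothetical prior and measurements (illustrative values only).
x0, P0 = 0.0, 4.0
z1, h1, r1 = 2.0, 1.0, 1.0     # stands in for the first sensor (e.g. LiDAR)
z2, h2, r2 = 3.0, 2.0, 0.5     # stands in for the second sensor (e.g. camera)

# Sequential update: the posterior of the first update is the prior of the second.
x_mid, P_mid = kalman_update(x0, P0, z1, h1, r1)
x_seq, P_seq = kalman_update(x_mid, P_mid, z2, h2, r2)

# Joint (batch) update in information form, assuming independent measurement noise.
info = 1.0 / P0 + h1 * h1 / r1 + h2 * h2 / r2
P_batch = 1.0 / info
x_batch = P_batch * (x0 / P0 + h1 * z1 / r1 + h2 * z2 / r2)

print(x_seq, P_seq)
print(x_batch, P_batch)
```

In this linear toy case the two printed pairs agree up to floating-point error. With nonlinear, iterated updates such as those in an ESIKF, the equivalence is only approximate.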

Joint Intrinsic and Extrinsic LiDAR-Camera Calibration in Targetless Environments Using Plane-Constrained Bundle Adjustment

Aug 24, 2023

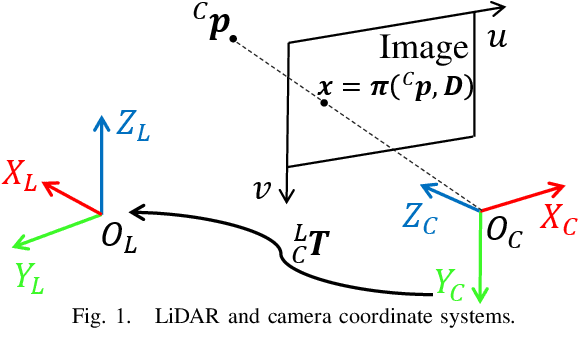

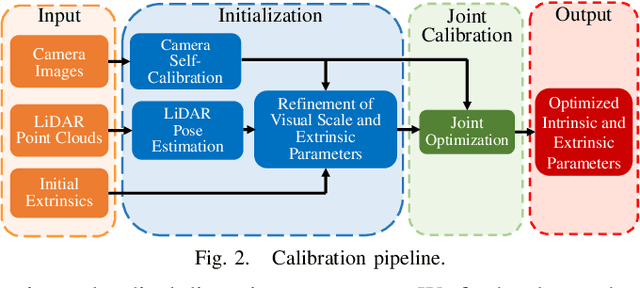

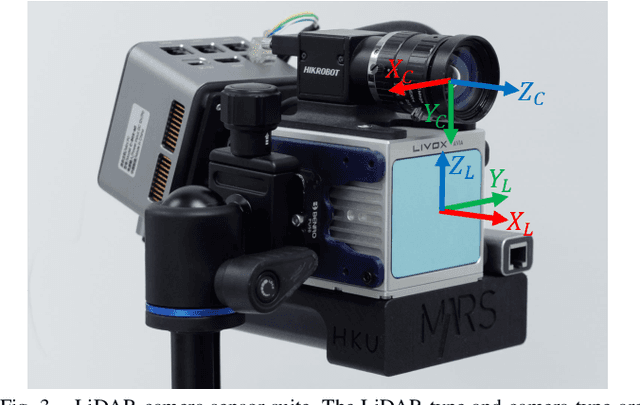

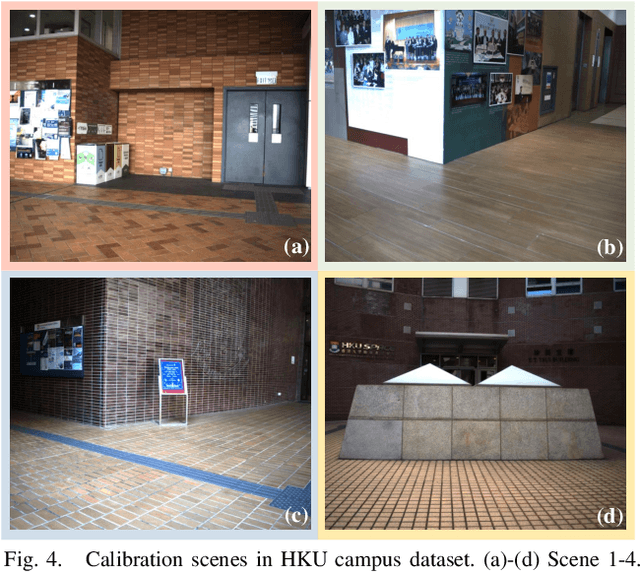

Abstract: This paper introduces a novel targetless method for joint intrinsic and extrinsic calibration of LiDAR-camera systems using plane-constrained bundle adjustment (BA). Our method leverages LiDAR point cloud measurements from planes in the scene, alongside visual points derived from those planes. The core novelty of our method lies in the integration of visual BA with the registration between visual points and LiDAR point cloud planes, which is formulated as a unified optimization problem. This formulation achieves concurrent intrinsic and extrinsic calibration, while also imparting depth constraints to the visual points to enhance the accuracy of intrinsic calibration. Experiments are conducted on both public data sequences and a self-collected dataset. The results show that our approach not only surpasses other state-of-the-art (SOTA) methods but also maintains remarkable calibration accuracy even in challenging environments. For the benefit of the robotics community, we have open-sourced our code.

FAST-LIO2: Fast Direct LiDAR-inertial Odometry

Jul 14, 2021

Abstract: This paper presents FAST-LIO2: a fast, robust, and versatile LiDAR-inertial odometry framework. Building on a highly efficient tightly-coupled iterated Kalman filter, FAST-LIO2 has two key novelties that allow fast, robust, and accurate LiDAR navigation (and mapping). The first is directly registering raw points to the map (and subsequently updating the map, i.e., mapping) without extracting features. This enables the exploitation of subtle features in the environment and hence increases the accuracy. The elimination of a hand-engineered feature extraction module also makes it naturally adaptable to emerging LiDARs with different scanning patterns. The second main novelty is maintaining the map with an incremental k-d tree data structure, ikd-Tree, that enables incremental updates (i.e., point insertion and deletion) and dynamic re-balancing. Compared with existing dynamic data structures (octree, R*-tree, nanoflann k-d tree), ikd-Tree achieves superior overall performance while naturally supporting downsampling on the tree. We conduct an exhaustive benchmark comparison on 19 sequences from a variety of open LiDAR datasets. FAST-LIO2 achieves consistently higher accuracy at a much lower computation load than other state-of-the-art LiDAR-inertial navigation systems. Various real-world experiments on solid-state LiDARs with small FoV are also conducted. Overall, FAST-LIO2 is computationally efficient (e.g., up to 100 Hz odometry and mapping in large outdoor environments), robust (e.g., reliable pose estimation in cluttered indoor environments with rotation up to 1000 deg/s), and versatile (i.e., applicable to both multi-line spinning and solid-state LiDARs, UAV and handheld platforms, and Intel and ARM-based processors), while still achieving higher accuracy than existing methods. Our implementation of the system, FAST-LIO2, and the data structure, ikd-Tree, are both open-sourced on GitHub.

Embedding manifold structures into Kalman filters

Feb 07, 2021

Abstract: The error-state Kalman filter is an elegant and effective filtering technique for robotic systems operating on manifolds. To avoid the tedious and repetitive derivations needed to implement an error-state Kalman filter for a given system, this paper proposes a generic symbolic representation for error-state Kalman filters on manifolds. Utilizing the $\boxplus\backslash\boxminus$ operations and further defining a $\oplus$ operation on the respective manifold, we propose a canonical representation of the robotic system, which enables us to separate the manifold structures from the system descriptions in each step of the Kalman filter, ultimately leading to a generic, symbolic and manifold-embedding Kalman filter framework. The proposed Kalman filter framework can be used simply by casting the system model into the canonical form, without going through the cumbersome hand-derivation of the on-manifold Kalman filter. This is particularly useful when the robotic system is of high dimension. Furthermore, the manifold-embedding Kalman filter is implemented as a toolkit in C++, with which a user needs only to define the system and call the respective filter steps (e.g., propagation, update) according to the events (e.g., reception of input, reception of measurement). The existing implementation supports full iterated Kalman filtering for systems on the manifold $\mathcal{S} = \mathbb{R}^m \times SO(3) \times \cdots \times SO(3) \times \mathbb{S}^2 \times \cdots \times \mathbb{S}^2$ or any of its sub-manifolds, and is extendable to other types of manifold when necessary. The proposed symbolic Kalman filter and the developed toolkit are verified by implementing a tightly-coupled lidar-inertial navigation system. Results show superior filtering performance and computation efficiency comparable to hand-engineered counterparts. Finally, the toolkit is open-sourced at https://github.com/hku-mars/IKFoM.
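
To make the $\boxplus\backslash\boxminus$ operations concrete, here is a minimal sketch on $SO(3)$ alone. It is not the IKFoM toolkit's API: the function names are hypothetical, and the right-multiplication convention $R \boxplus \delta = R\,\mathrm{Exp}(\delta)$ is an assumption chosen for illustration. The point is only that a 3-vector perturbation can be added to, and recovered from, a rotation matrix.

```python
import math

def hat(w):
    """Skew-symmetric matrix of a 3-vector."""
    x, y, z = w
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def transpose(A):
    return [[A[j][i] for j in range(3)] for i in range(3)]

def so3_exp(w):
    """Rodrigues' formula: rotation vector -> rotation matrix."""
    theta = math.sqrt(sum(c * c for c in w))
    K = hat(w)
    K2 = matmul(K, K)
    if theta < 1e-9:                      # small-angle limits of the coefficients
        a, b = 1.0, 0.5
    else:
        a = math.sin(theta) / theta
        b = (1.0 - math.cos(theta)) / theta ** 2
    return [[(1.0 if i == j else 0.0) + a * K[i][j] + b * K2[i][j]
             for j in range(3)] for i in range(3)]

def so3_log(R):
    """Rotation matrix -> rotation vector (valid for angles below pi)."""
    c = max(-1.0, min(1.0, (R[0][0] + R[1][1] + R[2][2] - 1.0) / 2.0))
    theta = math.acos(c)
    v = [R[2][1] - R[1][2], R[0][2] - R[2][0], R[1][0] - R[0][1]]
    s = 0.5 if theta < 1e-9 else theta / (2.0 * math.sin(theta))
    return [s * x for x in v]

def boxplus(R, delta):
    """Assumed convention: R [+] delta = R * Exp(delta)."""
    return matmul(R, so3_exp(delta))

def boxminus(R1, R2):
    """Returns delta such that boxplus(R2, delta) equals R1."""
    return so3_log(matmul(transpose(R2), R1))

R = so3_exp([0.1, -0.2, 0.3])
delta = [0.05, 0.02, -0.04]
recovered = boxminus(boxplus(R, delta), R)
print(recovered)
```

The recovered vector matches `delta` up to floating-point error, which is the round-trip property an error-state filter relies on when it keeps the state on the manifold and the error in a local vector space.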

Robots State Estimation and Observability Analysis Based on Statistical Motion Models

Oct 12, 2020

Abstract: This paper presents a generic motion model to capture mobile robots' dynamic behaviors (translation and rotation). The model is based on statistical models driven by white random processes and is formulated into a full state estimation algorithm based on the error-state extended Kalman filtering framework (ESEKF). Major benefits of this method are its versatility, being applicable to different robotic systems without accurately modeling the robots' specific dynamics, and its ability to estimate the robot's (angular) acceleration, jerk, or higher-order dynamic states with low delay. Mathematical analysis with numerical simulations is presented to show the properties of the statistical model-based estimation framework and to reveal its connection to existing low-pass filters. Furthermore, a new paradigm is developed for robot observability analysis by developing Lie derivatives and associated partial differentiation directly on manifolds. It is shown that this new paradigm is much simpler and more natural than existing methods based on quaternion parameterizations. It is also scalable to high-dimensional systems. A novel "thin set" concept is introduced to characterize the unobservable subset of the system states, providing the theoretical foundation for observability analysis of robotic systems operating on manifolds and in high dimension. Finally, extensive experiments including full state estimation and extrinsic calibration (both POS-IMU and IMU-IMU) on a quadrotor UAV, a handheld platform and a ground vehicle are conducted. Comparisons with existing methods show that the proposed method can effectively estimate all extrinsic parameters, the robot's translational/angular acceleration and other state variables (e.g., position, velocity, attitude) with high accuracy and low delay.
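
As a rough illustration of a motion model driven by a white random process, the sketch below propagates a one-dimensional position-velocity-acceleration state whose jerk is modeled as white noise. This is a textbook white-noise-jerk model used here as a stand-in; the paper's actual model, its order, and its treatment of rotation may differ, and all names and values are hypothetical.

```python
def transition(dt):
    """State transition for [position, velocity, acceleration] over a step dt."""
    return [[1.0, dt, 0.5 * dt * dt],
            [0.0, 1.0, dt],
            [0.0, 0.0, 1.0]]

def process_noise(dt, q):
    """Discrete process noise for continuous white-noise jerk of intensity q."""
    return [[q * dt**5 / 20, q * dt**4 / 8, q * dt**3 / 6],
            [q * dt**4 / 8,  q * dt**3 / 3, q * dt**2 / 2],
            [q * dt**3 / 6,  q * dt**2 / 2, q * dt]]

def propagate(x, P, dt, q):
    """Kalman prediction step: x <- F x, P <- F P F^T + Q."""
    F = transition(dt)
    Q = process_noise(dt, q)
    x_new = [sum(F[i][k] * x[k] for k in range(3)) for i in range(3)]
    FP = [[sum(F[i][k] * P[k][j] for k in range(3)) for j in range(3)]
          for i in range(3)]
    P_new = [[sum(FP[i][k] * F[j][k] for k in range(3)) + Q[i][j]
              for j in range(3)] for i in range(3)]
    return x_new, P_new

# Hypothetical initial state and covariance (illustrative values only).
x = [0.0, 1.0, 2.0]
P = [[0.1, 0.0, 0.0], [0.0, 0.1, 0.0], [0.0, 0.0, 0.1]]
x_pred, P_pred = propagate(x, P, dt=0.5, q=1.0)
print(x_pred)
```

No robot-specific dynamics appear in this model: the acceleration simply evolves as integrated white noise, and measurements (not shown) would correct it in the filter's update step.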
