Stefano Pasquali

FINCH: Financial Intelligence using Natural language for Contextualized SQL Handling

Oct 02, 2025

Abstract:Text-to-SQL, the task of translating natural language questions into SQL queries, has long been a central challenge in NLP. While progress has been significant, applying it to the financial domain remains especially difficult due to complex schema, domain-specific terminology, and high stakes of error. Despite this, there is no dedicated large-scale financial dataset to advance research, creating a critical gap. To address this, we introduce a curated financial dataset (FINCH) comprising 292 tables and 75,725 natural language-SQL pairs, enabling both fine-tuning and rigorous evaluation. Building on this resource, we benchmark reasoning models and language models of varying scales, providing a systematic analysis of their strengths and limitations in financial Text-to-SQL tasks. Finally, we propose a finance-oriented evaluation metric (FINCH Score) that captures nuances overlooked by existing measures, offering a more faithful assessment of model performance.
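For readers unfamiliar with how Text-to-SQL output is usually scored, the sketch below shows plain execution matching: run the reference query and the model's query against the same database and compare the returned rows. This is a generic baseline, not the FINCH Score, whose definition the abstract does not give. The `trades` table, its columns and the sample queries are hypothetical.

```python
import sqlite3


def execution_match(conn, gold_sql, predicted_sql):
    """Return True when both queries yield the same multiset of rows.

    Row order is ignored; a query that fails to execute counts as a miss.
    """
    try:
        gold_rows = conn.execute(gold_sql).fetchall()
        pred_rows = conn.execute(predicted_sql).fetchall()
    except sqlite3.Error:
        return False
    return sorted(gold_rows, key=repr) == sorted(pred_rows, key=repr)


# Hypothetical toy schema and data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE trades (ticker TEXT, qty INTEGER, price REAL)")
conn.executemany(
    "INSERT INTO trades VALUES (?, ?, ?)",
    [("AAA", 10, 101.5), ("BBB", 5, 99.0), ("AAA", 3, 102.0)],
)

gold = "SELECT ticker, SUM(qty) FROM trades GROUP BY ticker"
pred_ok = "SELECT ticker, SUM(qty) AS total FROM trades GROUP BY ticker ORDER BY ticker DESC"
pred_bad = "SELECT ticker, COUNT(qty) FROM trades GROUP BY ticker"

print(execution_match(conn, gold, pred_ok))
print(execution_match(conn, gold, pred_bad))
```

Execution matching treats differently written but equivalent queries as correct, which exact string matching would not. It gives no partial credit for a nearly right query.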

Supervised Similarity for High-Yield Corporate Bonds with Quantum Cognition Machine Learning

Feb 03, 2025

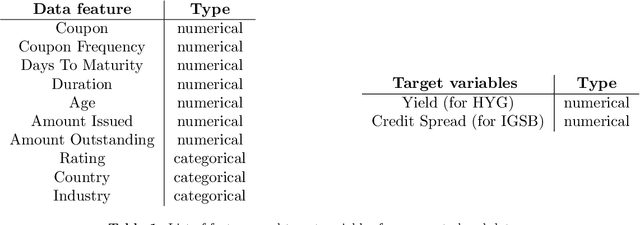

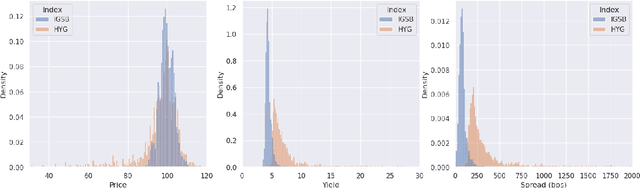

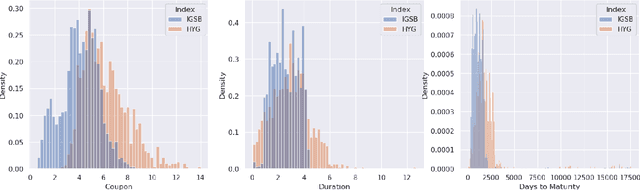

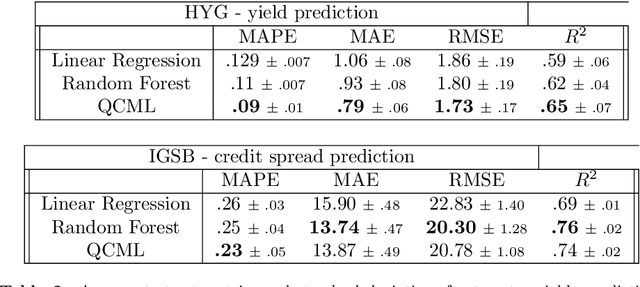

Abstract:We investigate the application of quantum cognition machine learning (QCML), a novel paradigm for both supervised and unsupervised learning tasks rooted in the mathematical formalism of quantum theory, to distance metric learning in corporate bond markets. Compared to equities, corporate bonds are relatively illiquid and both trade and quote data in these securities are relatively sparse. Thus, a measure of distance/similarity among corporate bonds is particularly useful for a variety of practical applications in the trading of illiquid bonds, including the identification of similar tradable alternatives, pricing securities with relatively few recent quotes or trades, and explaining the predictions and performance of ML models based on their training data. Previous research has explored supervised similarity learning based on classical tree-based models in this context; here, we explore the application of the QCML paradigm for supervised distance metric learning in the same context, showing that it outperforms classical tree-based models in high-yield (HY) markets, while giving comparable or better performance (depending on the evaluation metric) in investment grade (IG) markets.

A Comparative Study of DSPy Teleprompter Algorithms for Aligning Large Language Models Evaluation Metrics to Human Evaluation

Dec 19, 2024

Abstract:We argue that the Declarative Self-improving Python (DSPy) optimizers are a way to align the large language model (LLM) prompts and their evaluations to the human annotations. We present a comparative analysis of five teleprompter algorithms, namely, Cooperative Prompt Optimization (COPRO), Multi-Stage Instruction Prompt Optimization (MIPRO), BootstrapFewShot, BootstrapFewShot with Optuna, and K-Nearest Neighbor Few Shot, within the DSPy framework with respect to their ability to align with human evaluations. As a concrete example, we focus on optimizing the prompt to align hallucination detection (using LLM as a judge) to human annotated ground truth labels for a publicly available benchmark dataset. Our experiments demonstrate that optimized prompts can outperform various benchmark methods to detect hallucination, and certain teleprompters outperform the others in at least these experiments.

How to Choose a Threshold for an Evaluation Metric for Large Language Models

Dec 10, 2024

Abstract:To ensure and monitor large language models (LLMs) reliably, various evaluation metrics have been proposed in the literature. However, there is little research on prescribing a methodology to identify a robust threshold on these metrics even though there are many serious implications of an incorrect choice of the thresholds during deployment of the LLMs. Translating the traditional model risk management (MRM) guidelines within regulated industries such as the financial industry, we propose a step-by-step recipe for picking a threshold for a given LLM evaluation metric. We emphasize that such a methodology should start with identifying the risks of the LLM application under consideration and risk tolerance of the stakeholders. We then propose concrete and statistically rigorous procedures to determine a threshold for the given LLM evaluation metric using available ground-truth data. As a concrete example to demonstrate the proposed methodology at work, we employ it on the Faithfulness metric, as implemented in various publicly available libraries, using the publicly available HaluBench dataset. We also lay a foundation for creating systematic approaches to select thresholds, not only for LLMs but for any GenAI applications.
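As a rough illustration of turning a risk tolerance into a threshold using ground-truth data, the sketch below picks the smallest score cutoff whose precision on labeled examples meets a target. It is a simplified stand-in, not the procedure proposed in the paper: the function name `pick_threshold`, the scores and the labels are all hypothetical, and a statistically rigorous version would also quantify uncertainty (for example with confidence intervals) rather than rely on a point estimate.

```python
def pick_threshold(scores, labels, min_precision):
    """Return the smallest threshold t such that, among examples with
    score >= t, the fraction labeled 1 (e.g. "faithful") is at least
    min_precision. Return None when no observed score qualifies.
    """
    for t in sorted(set(scores)):
        accepted = [y for s, y in zip(scores, labels) if s >= t]
        precision = sum(accepted) / len(accepted)  # never empty: t is an observed score
        if precision >= min_precision:
            return t
    return None


# Hypothetical metric scores with human ground-truth labels (1 = faithful).
scores = [0.15, 0.30, 0.45, 0.55, 0.60, 0.72, 0.80, 0.91]
labels = [0, 0, 1, 0, 1, 1, 1, 1]

print(pick_threshold(scores, labels, min_precision=0.8))
print(pick_threshold(scores, labels, min_precision=0.9))
```

Choosing the smallest qualifying cutoff keeps as many outputs as possible while respecting the stated tolerance. A stricter tolerance moves the cutoff up and rejects more outputs.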

Can an unsupervised clustering algorithm reproduce a categorization system?

Aug 19, 2024

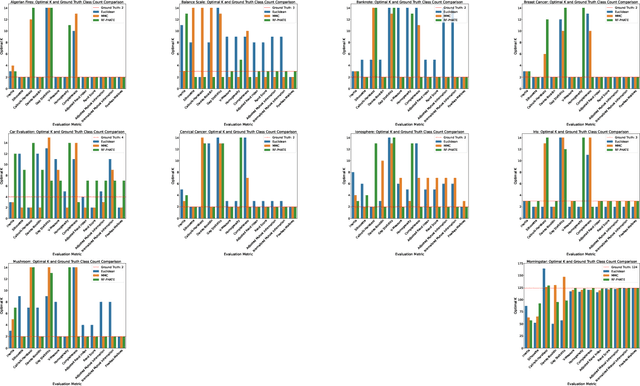

Abstract:Peer analysis is a critical component of investment management, often relying on expert-provided categorization systems. These systems' consistency is questioned when they do not align with cohorts from unsupervised clustering algorithms optimized for various metrics. We investigate whether unsupervised clustering can reproduce ground truth classes in a labeled dataset, showing that success depends on feature selection and the chosen distance metric. Using toy datasets and fund categorization as real-world examples we demonstrate that accurately reproducing ground truth classes is challenging. We also highlight the limitations of standard clustering evaluation metrics in identifying the optimal number of clusters relative to the ground truth classes. We then show that if appropriate features are available in the dataset, and a proper distance metric is known (e.g., using a supervised Random Forest-based distance metric learning method), then an unsupervised clustering can indeed reproduce the ground truth classes as distinct clusters.

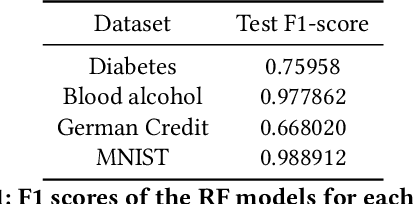

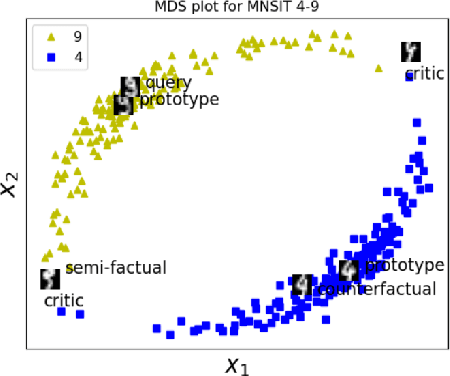

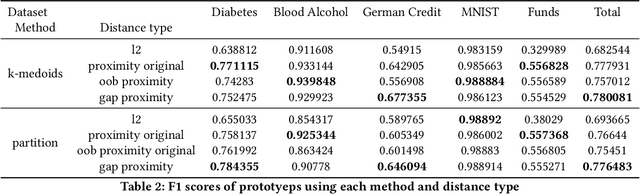

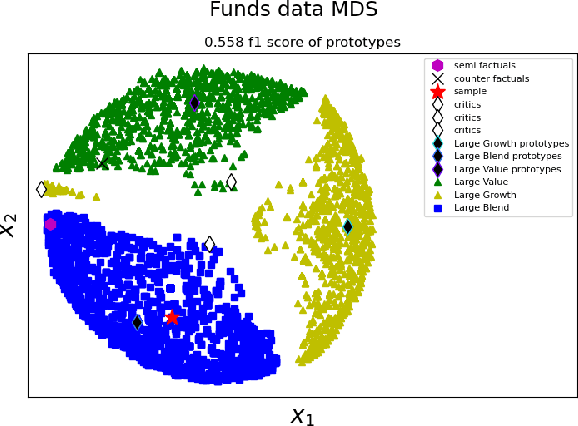

Case-based Explainability for Random Forest: Prototypes, Critics, Counter-factuals and Semi-factuals

Aug 13, 2024

Abstract:The explainability of black-box machine learning algorithms, commonly known as Explainable Artificial Intelligence (XAI), has become crucial for financial and other regulated industrial applications due to regulatory requirements and the need for transparency in business practices. Among the various paradigms of XAI, Explainable Case-Based Reasoning (XCBR) stands out as a pragmatic approach that elucidates the output of a model by referencing actual examples from the data used to train or test the model. Despite its potential, XCBR has been relatively underexplored for many algorithms such as tree-based models until recently. We start by observing that most XCBR methods are defined based on the distance metric learned by the algorithm. By utilizing a recently proposed technique to extract the distance metric learned by Random Forests (RFs), which is both geometry- and accuracy-preserving, we investigate various XCBR methods. These methods amount to identifying special points from the training datasets, such as prototypes, critics, counter-factuals, and semi-factuals, to explain the predictions for a given query of the RF. We evaluate these special points using various evaluation metrics to assess their explanatory power and effectiveness.

HybridRAG: Integrating Knowledge Graphs and Vector Retrieval Augmented Generation for Efficient Information Extraction

Aug 09, 2024

Abstract:Extraction and interpretation of intricate information from unstructured text data arising in financial applications, such as earnings call transcripts, present substantial challenges to large language models (LLMs), even when using current best practices for Retrieval Augmented Generation (RAG) (referred to as VectorRAG techniques, which utilize vector databases for information retrieval), due to challenges such as domain-specific terminology and complex document formats. We introduce a novel approach, called HybridRAG, that combines Knowledge Graph (KG) based RAG techniques (called GraphRAG) with VectorRAG techniques to enhance question-answer (Q&A) systems for information extraction from financial documents, and that is shown to be capable of generating accurate and contextually relevant answers. Using experiments on a set of financial earnings call transcript documents, which come in Q&A format and hence provide a natural set of ground-truth Q&A pairs, we show that HybridRAG, which retrieves context from both the vector database and the KG, outperforms both traditional VectorRAG and GraphRAG individually when evaluated at both the retrieval and generation stages in terms of retrieval accuracy and answer generation. The proposed technique has applications beyond the financial domain.
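The idea of retrieving context from both a vector store and a knowledge graph can be sketched in a few lines. Everything below is a toy under stated assumptions: word overlap stands in for embedding similarity, a list of (subject, relation, object) triples stands in for the KG, and the function names, passages and triples are hypothetical. It is not the authors' implementation.

```python
def overlap(a, b):
    """Jaccard overlap of lowercase word sets; a toy stand-in for embedding similarity."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    return len(wa & wb) / (len(wa | wb) or 1)


def vector_context(question, passages, k=1):
    """Top-k passages by similarity to the question (the VectorRAG side)."""
    ranked = sorted(passages, key=lambda p: overlap(question, p), reverse=True)
    return ranked[:k]


def graph_context(question, triples):
    """Triples whose subject is mentioned in the question (the GraphRAG side)."""
    words = set(question.lower().split())
    return [f"{s} {r} {o}" for s, r, o in triples if s.lower() in words]


def hybrid_context(question, passages, triples, k=1):
    """Concatenate both contexts; the result would be passed to an LLM prompt."""
    return vector_context(question, passages, k) + graph_context(question, triples)


# Hypothetical transcript snippets and KG triples.
passages = [
    "Revenue grew eight percent on strong cloud demand",
    "The board approved a new buyback program",
]
triples = [("Acme", "acquired", "Beta Corp"), ("Acme", "appointed", "a new CFO")]

ctx = hybrid_context("What did Acme say about revenue", passages, triples)
print(ctx)
```

The two retrievers fail in different ways: similarity search can miss facts stated far from the matching wording, while graph lookup only returns relations that were extracted in advance. Supplying both contexts gives the generator a chance to use whichever one contains the answer.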

Open Set Recognition for Random Forest

Aug 01, 2024

Abstract:In many real-world classification or recognition tasks, it is often difficult to collect training examples that exhaust all possible classes due to, for example, incomplete knowledge during training or ever changing regimes. Therefore, samples from unknown/novel classes may be encountered in testing/deployment. In such scenarios, the classifiers should be able to i) perform classification on known classes, and at the same time, ii) identify samples from unknown classes. This is known as open-set recognition. Although random forest has been an extremely successful framework as a general-purpose classification (and regression) method, in practice, it usually operates under the closed-set assumption and is not able to identify samples from new classes when run out of the box. In this work, we propose a novel approach to enabling open-set recognition capability for random forest classifiers by incorporating distance metric learning and distance-based open-set recognition. The proposed method is validated on both synthetic and real-world datasets. The experimental results indicate that the proposed approach outperforms state-of-the-art distance-based open-set recognition methods.
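Distance-based open-set recognition can be illustrated with a minimal sketch: classify a query by its nearest training point, but reject it as unknown when even the nearest point is too far away. The sketch assumes plain Euclidean distance as a stand-in for the metric learned by the random forest, and the function names, data and `max_distance` value are hypothetical. The paper's actual method is not reproduced here.

```python
import math


def nearest_known(x, train_points, train_labels):
    """Return (label, distance) of the training point closest to x."""
    dists = [math.dist(x, p) for p in train_points]
    i = min(range(len(dists)), key=dists.__getitem__)
    return train_labels[i], dists[i]


def open_set_predict(x, train_points, train_labels, max_distance):
    """Predict a known class, or "unknown" when x is far from all training data."""
    label, d = nearest_known(x, train_points, train_labels)
    return label if d <= max_distance else "unknown"


# Hypothetical two-class training set.
train_points = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (5.0, 6.0)]
train_labels = ["A", "A", "B", "B"]

print(open_set_predict((0.2, 0.4), train_points, train_labels, max_distance=1.5))
print(open_set_predict((20.0, -3.0), train_points, train_labels, max_distance=1.5))
```

A closed-set classifier would assign the second query to class A or B regardless of how far it lies from both; the distance check is what allows a rejection.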

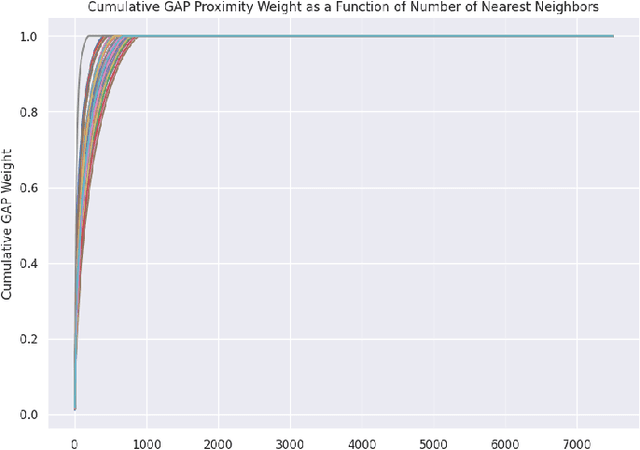

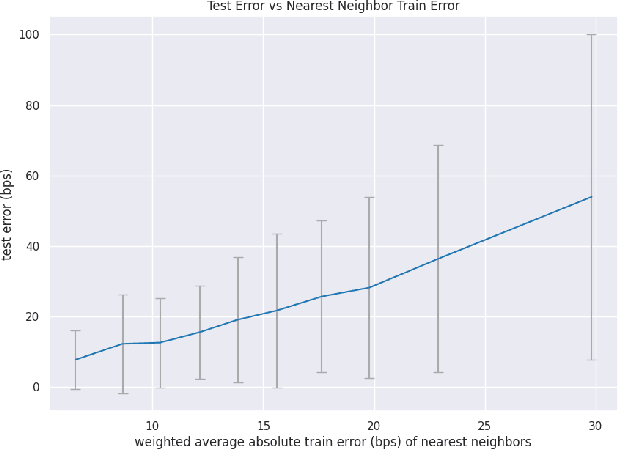

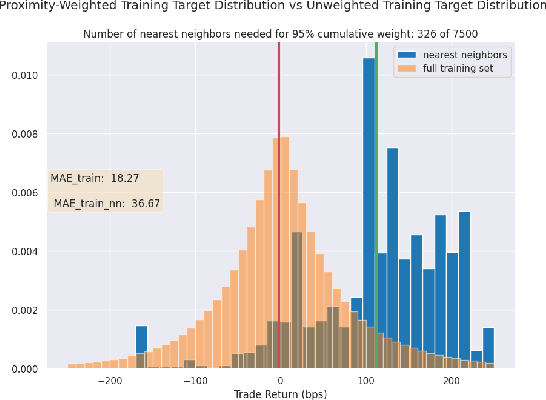

Towards Enhanced Local Explainability of Random Forests: a Proximity-Based Approach

Oct 19, 2023

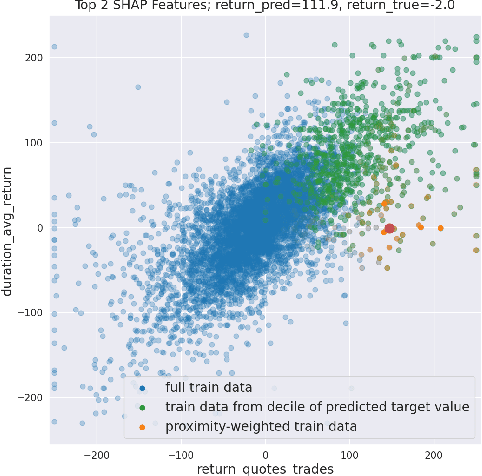

Abstract:We initiate a novel approach to explain the out of sample performance of random forest (RF) models by exploiting the fact that any RF can be formulated as an adaptive weighted K nearest-neighbors model. Specifically, we use the proximity between points in the feature space learned by the RF to re-write random forest predictions exactly as a weighted average of the target labels of training data points. This linearity facilitates a local notion of explainability of RF predictions that generates attributions for any model prediction across observations in the training set, and thereby complements established methods like SHAP, which instead generates attributions for a model prediction across dimensions of the feature space. We demonstrate this approach in the context of a bond pricing model trained on US corporate bond trades, and compare our approach to various existing approaches to model explainability.
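The weighted-average form mentioned in the abstract can be written out for a regression forest whose leaves predict the mean of their in-bag training targets. The notation is an assumption made here for illustration and may differ from the paper's: $T$ is the number of trees, $L_t(x)$ is the leaf of tree $t$ that contains $x$, and $c_{t,i}$ is the number of times training point $i$ appears in the bootstrap sample of tree $t$.

```latex
\hat{y}(x) = \sum_{i=1}^{N} w_i(x)\, y_i,
\qquad
w_i(x) = \frac{1}{T} \sum_{t=1}^{T}
  \frac{c_{t,i}\, \mathbf{1}\!\left[x_i \in L_t(x)\right]}
       {\sum_{j=1}^{N} c_{t,j}\, \mathbf{1}\!\left[x_j \in L_t(x)\right]},
\qquad
w_i(x) \ge 0, \quad \sum_{i=1}^{N} w_i(x) = 1 .
```

Because the weights are non-negative and sum to one, each $w_i(x)\, y_i$ can be read as the contribution of training observation $i$ to the prediction at $x$, which is the per-observation attribution the abstract contrasts with per-feature attributions such as SHAP.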

Towards reducing hallucination in extracting information from financial reports using Large Language Models

Oct 16, 2023

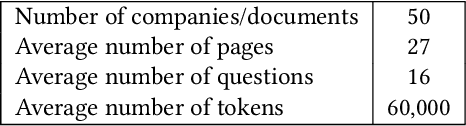

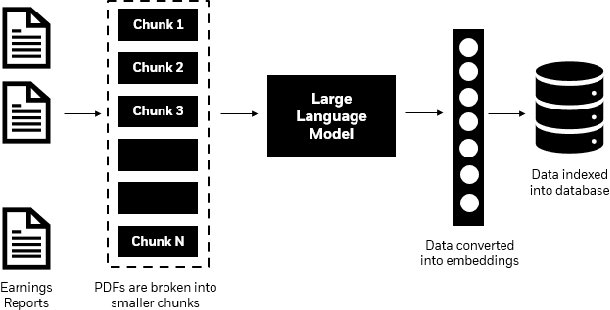

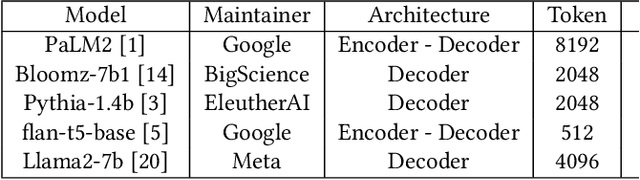

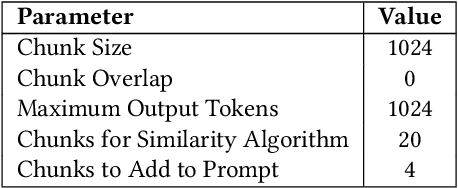

Abstract:For a financial analyst, the question and answer (Q&A) segment of the company financial report is a crucial piece of information for various analysis and investment decisions. However, extracting valuable insights from the Q&A section has posed considerable challenges: conventional methods such as detailed reading and note-taking lack scalability and are susceptible to human errors, while Optical Character Recognition (OCR) and similar techniques encounter difficulties in accurately processing unstructured transcript text, often missing subtle linguistic nuances that drive investor decisions. Here, we demonstrate the utilization of Large Language Models (LLMs) to efficiently and rapidly extract information from earnings report transcripts while ensuring high accuracy, transforming the extraction process and reducing hallucination by combining the retrieval-augmented generation technique with metadata. We evaluate the outcomes of various LLMs with and without using our proposed approach based on various objective metrics for evaluating Q&A systems, and empirically demonstrate superiority of our method.
