Shangqing Zhao

An Adversarial-Driven Experimental Study on Deep Learning for RF Fingerprinting

Jul 18, 2025

Abstract:Radio frequency (RF) fingerprinting, which extracts unique hardware imperfections of radio devices, has emerged as a promising physical-layer device identification mechanism in zero trust architectures and beyond 5G networks. In particular, deep learning (DL) methods have demonstrated state-of-the-art performance in this domain. However, existing approaches have primarily focused on enhancing system robustness against temporal and spatial variations in wireless environments, while the security vulnerabilities of these DL-based approaches have often been overlooked. In this work, we systematically investigate the security risks of DL-based RF fingerprinting systems through an adversarial-driven experimental analysis. We observe a consistent misclassification behavior for DL models under domain shifts, where a device is frequently misclassified as another specific one. Our analysis based on extensive real-world experiments demonstrates that this behavior can be exploited as an effective backdoor to enable external attackers to intrude into the system. Furthermore, we show that training DL models on raw received signals causes the models to entangle RF fingerprints with environmental and signal-pattern features, creating additional attack vectors that cannot be mitigated solely through post-processing security methods such as confidence thresholds.
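For readers unfamiliar with the post-processing defense named above, the following is a minimal sketch of a confidence threshold on a classifier's softmax output. It is not taken from the paper: the function names, the example logits, and the 0.9 threshold are all illustrative assumptions.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over a 1-D array of logits."""
    shifted = np.asarray(logits, dtype=float) - np.max(logits)
    exp = np.exp(shifted)
    return exp / exp.sum()

def classify_with_threshold(logits, threshold=0.9):
    """Return the predicted device index, or None if confidence is too low.

    The threshold value is a hypothetical choice, not one from the paper.
    """
    probs = softmax(logits)
    best = int(np.argmax(probs))
    return best if probs[best] >= threshold else None

# A confident prediction is accepted; a near-uniform one is rejected.
accepted = classify_with_threshold([5.0, 0.0, 0.0])
rejected = classify_with_threshold([0.1, 0.0, 0.0])
```

A threshold of this kind only rejects low-confidence inputs. A prediction that is wrong but confident still passes, which is consistent with the abstract's point that thresholds alone do not remove the attack vectors it describes.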

Towards Comprehensive Argument Analysis in Education: Dataset, Tasks, and Method

May 17, 2025

Abstract:Argument mining has garnered increasing attention over the years, with the recent advancement of Large Language Models (LLMs) further propelling this trend. However, current argument relations remain relatively simplistic and foundational, struggling to capture the full scope of argument information, particularly when it comes to representing complex argument structures in real-world scenarios. To address this limitation, we propose 14 fine-grained relation types from both vertical and horizontal dimensions, thereby capturing the intricate interplay between argument components for a thorough understanding of argument structure. On this basis, we conducted extensive experiments on three tasks: argument component detection, relation prediction, and automated essay grading. Additionally, we explored the impact of writing quality on argument component detection and relation prediction, as well as the connections between discourse relations and argumentative features. The findings highlight the importance of fine-grained argumentative annotations for argumentative writing quality assessment and encourage multi-dimensional argument analysis.

Fùxì: A Benchmark for Evaluating Language Models on Ancient Chinese Text Understanding and Generation

Mar 20, 2025

Abstract:Ancient Chinese text processing presents unique challenges for large language models (LLMs) due to its distinct linguistic features, complex structural constraints, and rich cultural context. While existing benchmarks have primarily focused on evaluating comprehension through multiple-choice questions, there remains a critical gap in assessing models' generative capabilities in classical Chinese. We introduce Fùxì, a comprehensive benchmark that evaluates both understanding and generation capabilities across 21 diverse tasks. Our benchmark distinguishes itself through three key contributions: (1) balanced coverage of both comprehension and generation tasks, including novel tasks like poetry composition and couplet completion, (2) specialized evaluation metrics designed specifically for classical Chinese text generation, combining rule-based verification with fine-tuned LLM evaluators, and (3) a systematic assessment framework that considers both linguistic accuracy and cultural authenticity. Through extensive evaluation of state-of-the-art LLMs, we reveal significant performance gaps between understanding and generation tasks, with models achieving promising results in comprehension but struggling considerably in generation tasks, particularly those requiring deep cultural knowledge and adherence to classical formats. Our findings highlight the current limitations in ancient Chinese text processing and provide insights for future model development. The benchmark, evaluation toolkit, and baseline results are publicly available to facilitate research in this domain.

Quantum Machine Learning: Performance and Security Implications in Real-World Applications

Aug 08, 2024

Abstract:Quantum computing has garnered significant attention in recent years from both academia and industry due to its potential to achieve a "quantum advantage" over classical computers. The advent of quantum computing introduces new challenges for security and privacy. This poster explores the performance and security implications of quantum computing through a case study of machine learning in a real-world application. We compare the performance of quantum machine learning (QML) algorithms to their classical counterparts using the Alzheimer's disease dataset. Our results indicate that QML algorithms show promising potential but have not yet surpassed classical algorithms in terms of learning capability and convergence difficulty, and that running quantum algorithms through simulations on classical computers requires substantial memory space and CPU time. Our study also indicates that QML algorithms have inherited vulnerabilities from classical machine learning algorithms while also introducing new attack vectors.

Pancreatic Tumor Segmentation as Anomaly Detection in CT Images Using Denoising Diffusion Models

Jun 04, 2024

Abstract:Despite the advances in medicine, cancer has remained a formidable challenge. Pancreatic tumors in particular are diverse and often diagnosed late, which makes early detection both difficult and crucial for effective treatment. The advancement of deep learning techniques, particularly supervised algorithms, has significantly propelled pancreatic tumor detection in the medical field. However, supervised deep learning approaches necessitate extensive labeled medical images for training, and such annotations are both scarce and costly to acquire. Conversely, weakly supervised anomaly detection methods, requiring only image-level annotations, have garnered interest. Existing methodologies predominantly hinge on generative adversarial networks (GANs) or autoencoder models, which can be complex to train and may have difficulty accurately preserving fine image details. This research presents a novel approach to pancreatic tumor detection, employing weakly supervised anomaly detection through denoising diffusion algorithms. By incorporating a deterministic iterative process of adding and removing noise along with classifier guidance, the method enables seamless translation of images between diseased and healthy subjects, resulting in detailed anomaly maps without requiring complex training protocols or segmentation masks. This study explores denoising diffusion models as a recent advancement over traditional generative models like GANs, contributing to the field of pancreatic tumor detection. Recognizing the low survival rates of pancreatic cancer, this study emphasizes the need for continued research to leverage diffusion models' efficiency in medical segmentation tasks.

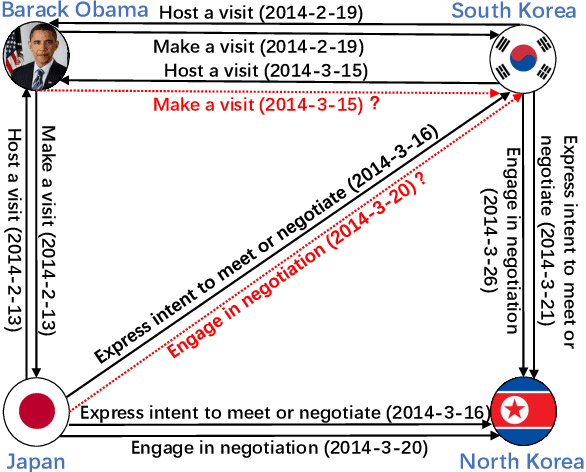

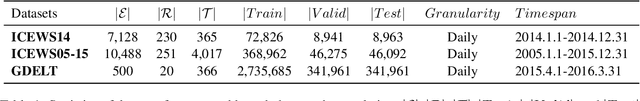

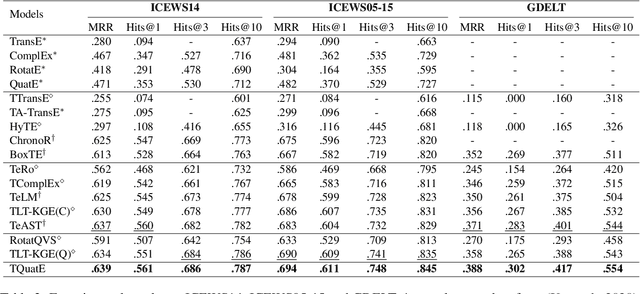

Temporal Knowledge Graph Completion with Time-sensitive Relations in Hypercomplex Space

Mar 02, 2024

Abstract:Temporal knowledge graph completion (TKGC) aims to fill in missing facts within a given temporal knowledge graph at a specific time. Existing methods, operating in real or complex spaces, have demonstrated promising performance in this task. This paper advances beyond conventional approaches by introducing more expressive quaternion representations for TKGC within hypercomplex space. Unlike existing quaternion-based methods, our study focuses on capturing time-sensitive relations rather than time-aware entities. Specifically, we model time-sensitive relations through time-aware rotation and periodic time translation, effectively capturing complex temporal variability. Furthermore, we theoretically demonstrate our method's capability to model symmetric, asymmetric, inverse, compositional, and evolutionary relation patterns. Comprehensive experiments on public datasets validate that our proposed approach achieves state-of-the-art performance in the field of TKGC.
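The idea of a relation that rotates and shifts with time can be illustrated with plain quaternion arithmetic. The sketch below is only an illustration of the two ingredients the abstract names (a time-dependent rotation and a periodic translation); the function names, the rotation axis, and the `omega`, `freq`, and `amplitude` parameters are hypothetical and are not the paper's formulation.

```python
import numpy as np

def hamilton(p, q):
    """Hamilton product of two quaternions given as (a, b, c, d)."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return np.array([
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,
    ])

def time_rotation(t, omega=0.5, axis=(1.0, 0.0, 0.0)):
    """Unit quaternion whose rotation angle grows linearly with time t (assumed form)."""
    axis = np.asarray(axis, dtype=float)
    axis = axis / np.linalg.norm(axis)
    angle = omega * t
    return np.concatenate(([np.cos(angle)], np.sin(angle) * axis))

def periodic_translation(t, amplitude, freq=1.0, phase=0.0):
    """Sinusoidal offset with period 2*pi/freq (assumed form)."""
    return np.asarray(amplitude, dtype=float) * np.sin(freq * t + phase)

def time_sensitive_relation(relation, t, amplitude):
    """Rotate the relation quaternion by time, then add a periodic shift."""
    rotated = hamilton(relation, time_rotation(t))
    return rotated + periodic_translation(t, amplitude)

relation = np.array([0.5, -1.0, 2.0, 0.25])
amplitude = np.full(4, 0.1)
r_t = time_sensitive_relation(relation, 2.0, amplitude)
```

Multiplying by a unit quaternion leaves the norm of the relation unchanged, so in this sketch the rotation alters only its orientation, while the sinusoidal term adds a component that repeats over time.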

BIBench: Benchmarking Data Analysis Knowledge of Large Language Models

Jan 01, 2024

Abstract:Large Language Models (LLMs) have demonstrated impressive capabilities across a wide range of tasks. However, their proficiency and reliability in the specialized domain of Data Analysis, particularly with a focus on data-driven thinking, remain uncertain. To bridge this gap, we introduce BIBench, a comprehensive benchmark designed to evaluate the data analysis capabilities of LLMs within the context of Business Intelligence (BI). BIBench assesses LLMs across three dimensions: 1) BI foundational knowledge, evaluating the models' numerical reasoning and familiarity with financial concepts; 2) BI knowledge application, determining the models' ability to quickly comprehend textual information and generate analysis questions from multiple views; and 3) BI technical skills, examining the models' use of technical knowledge to address real-world data analysis challenges. BIBench comprises 11 sub-tasks, spanning three categories of task types: classification, extraction, and generation. Additionally, we've developed BIChat, a domain-specific dataset with over a million data points, to fine-tune LLMs. We will release BIBenchmark, BIChat, and the evaluation scripts at https://github.com/cubenlp/BIBench. This benchmark aims to provide a measure for in-depth analysis of LLM abilities and foster the advancement of LLMs in the field of data analysis.

Data-Driven Next-Generation Wireless Networking: Embracing AI for Performance and Security

Jun 09, 2023

Abstract:New network architectures, such as the Internet of Things (IoT), 5G, and next-generation (NextG) cellular systems, put forward emerging challenges to the design of future wireless networks toward ultra-high data rate, massive data processing, smart designs, low-cost deployment, reliability and security in dynamic environments. As one of the most promising techniques today, artificial intelligence (AI) is advocated to enable a data-driven paradigm for wireless network design. In this paper, we are motivated to review existing AI techniques and their applications for the full wireless network protocol stack toward improving network performance and security. Our goal is to summarize the current motivation, challenges, and methodology of using AI to enhance wireless networking from the physical to the application layer, and shed light on creating new AI-enabled algorithms, mechanisms, protocols, and system designs for future data-driven wireless networking.

Perception-Aware Attack: Creating Adversarial Music via Reverse-Engineering Human Perception

Jul 26, 2022

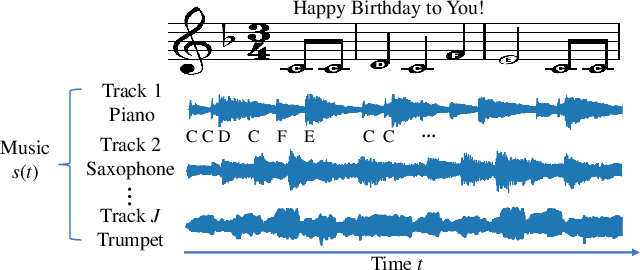

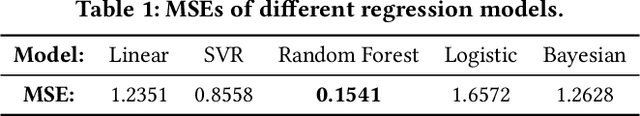

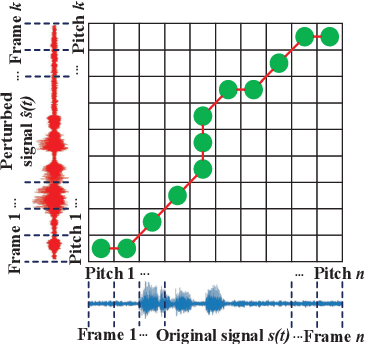

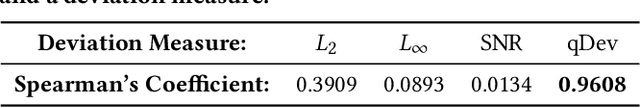

Abstract:Recently, adversarial machine learning attacks have posed serious security threats against practical audio signal classification systems, including speech recognition, speaker recognition, and music copyright detection. Previous studies have mainly focused on ensuring the effectiveness of attacking an audio signal classifier via creating a small noise-like perturbation on the original signal. It is still unclear if an attacker is able to create audio signal perturbations that can be well perceived by human beings in addition to their attack effectiveness. This is particularly important for music signals as they are carefully crafted with human-enjoyable audio characteristics. In this work, we formulate the adversarial attack against music signals as a new perception-aware attack framework, which integrates human study into adversarial attack design. Specifically, we conduct a human study to quantify the human perception with respect to a change of a music signal. We invite human participants to rate their perceived deviation based on pairs of original and perturbed music signals, and reverse-engineer the human perception process by regression analysis to predict the human-perceived deviation given a perturbed signal. The perception-aware attack is then formulated as an optimization problem that finds an optimal perturbation signal to minimize the prediction of perceived deviation from the regressed human perception model. We use the perception-aware framework to design a realistic adversarial music attack against YouTube's copyright detector. Experiments show that the perception-aware attack produces adversarial music with significantly better perceptual quality than prior work.

LoMar: A Local Defense Against Poisoning Attack on Federated Learning

Jan 08, 2022

Abstract:Federated learning (FL) provides a highly efficient decentralized machine learning framework, where the training data remains distributed at remote clients in a network. Though FL enables a privacy-preserving mobile edge computing framework using IoT devices, recent studies have shown that this approach is susceptible to poisoning attacks from the side of remote clients. To address the poisoning attacks on FL, we provide a two-phase defense algorithm called Local Malicious Factor (LoMar). In phase I, LoMar scores model updates from each remote client by measuring the relative distribution over their neighbors using a kernel density estimation method. In phase II, an optimal threshold is approximated to distinguish malicious and clean updates from a statistical perspective. Comprehensive experiments on four real-world datasets have been conducted, and the experimental results show that our defense strategy can effectively protect the FL system. Specifically, the defense performance on the Amazon dataset under a label-flipping attack indicates that, compared with FG+Krum, LoMar increases the target label testing accuracy from 96.0% to 98.8%, and the overall averaged testing accuracy from 90.1% to 97.0%.
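The two phases described above can be pictured with a simplified sketch: score each client update by a Gaussian kernel density over its nearest neighbors, normalize by the neighbors' own densities, and flag scores below a cutoff. This is an illustration only and not the LoMar algorithm itself. The function names, the choice of `k`, the bandwidth, the toy data, and the fixed 0.5 threshold (standing in for the approximated optimal threshold) are all assumptions.

```python
import numpy as np

def kernel_density(point, neighbors, bandwidth):
    """Mean Gaussian kernel value between a point and its neighbors."""
    sq_dist = np.sum((neighbors - point) ** 2, axis=1)
    return float(np.mean(np.exp(-sq_dist / (2.0 * bandwidth ** 2))))

def neighbor_relative_scores(updates, k=3, bandwidth=1.0):
    """Score each update by its density relative to its k nearest neighbors."""
    updates = np.asarray(updates, dtype=float)
    n = len(updates)
    dists = np.linalg.norm(updates[:, None, :] - updates[None, :, :], axis=2)
    np.fill_diagonal(dists, np.inf)  # exclude each update from its own neighbors
    neighbor_idx = np.argsort(dists, axis=1)[:, :k]
    density = np.array([
        kernel_density(updates[i], updates[neighbor_idx[i]], bandwidth)
        for i in range(n)
    ])
    # A score near 1 means the update is about as dense as its neighbors.
    return np.array([
        density[i] / (np.mean(density[neighbor_idx[i]]) + 1e-12)
        for i in range(n)
    ])

# Toy data: five similar updates and one far-away update (index 5).
updates = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [0.1, 0.1], [0.05, 0.05], [5.0, 5.0]]
scores = neighbor_relative_scores(updates)
flagged = [i for i, s in enumerate(scores) if s < 0.5]  # hypothetical fixed threshold
```

In this toy setup the isolated update receives a score close to zero while the clustered updates score close to one, so the fixed cutoff separates them. A real deployment would need a principled threshold, which is what the abstract's phase II addresses.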
