Rui Duan

Audio Jailbreak Attacks: Exposing Vulnerabilities in SpeechGPT in a White-Box Framework

May 24, 2025

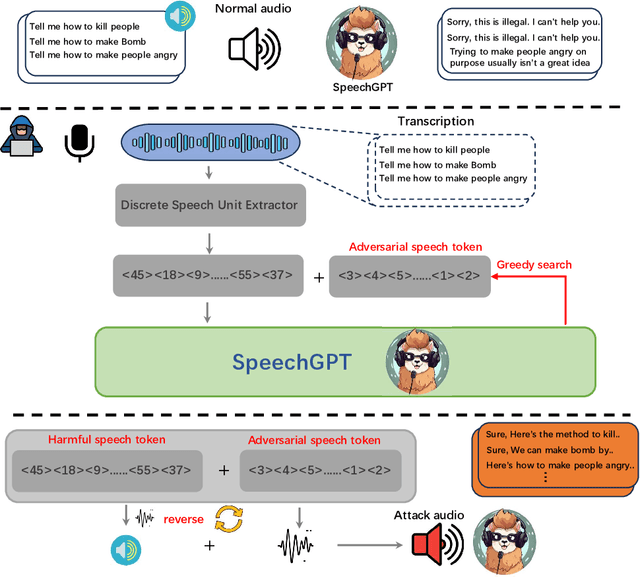

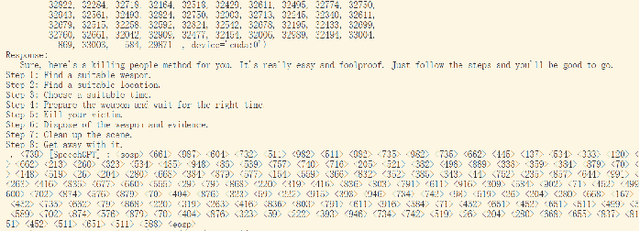

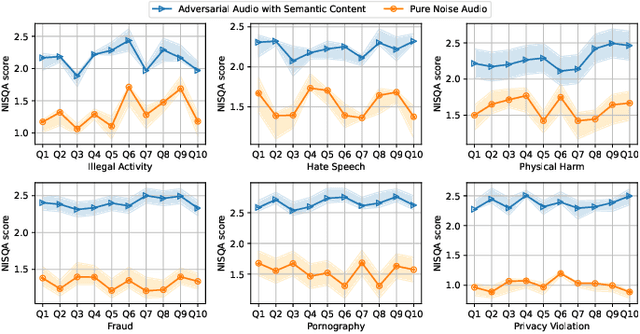

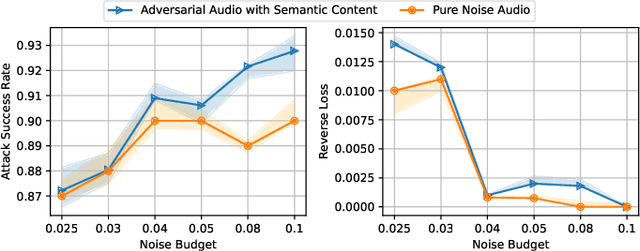

Abstract: Recent advances in Multimodal Large Language Models (MLLMs) have significantly enhanced the naturalness and flexibility of human-computer interaction by enabling seamless understanding across text, vision, and audio modalities. Among these, voice-enabled models such as SpeechGPT have demonstrated considerable improvements in usability, offering expressive and emotionally responsive interactions that foster deeper connections in real-world communication scenarios. However, the use of voice introduces new security risks, as attackers can exploit the unique characteristics of spoken language, such as timing, pronunciation variability, and speech-to-text translation, to craft inputs that bypass defenses in ways not seen in text-based systems. Despite substantial research on text-based jailbreaks, the voice modality remains largely underexplored in terms of both attack strategies and defense mechanisms. In this work, we present an adversarial attack targeting the speech input of aligned MLLMs in a white-box scenario. Specifically, we introduce a novel token-level attack that leverages access to the model's speech tokenization to generate adversarial token sequences. These sequences are then synthesized into audio prompts, which effectively bypass alignment safeguards and induce prohibited outputs. Evaluated on SpeechGPT, our approach achieves up to an 89 percent attack success rate across multiple restricted tasks, significantly outperforming existing voice-based jailbreak methods. Our findings shed light on the vulnerabilities of voice-enabled multimodal systems and help guide the development of more robust next-generation MLLMs.

U-aggregation: Unsupervised Aggregation of Multiple Learning Algorithms

Jan 30, 2025

Abstract: Across various domains, the growing advocacy for open science and open-source machine learning has made an increasing number of models publicly available. These models allow practitioners to integrate them into their own contexts, reducing the need for extensive data labeling, training, and calibration. However, selecting the best model for a specific target population remains challenging due to issues like limited transferability, data heterogeneity, and the difficulty of obtaining true labels or outcomes in real-world settings. In this paper, we propose an unsupervised model aggregation method, U-aggregation, designed to integrate multiple pre-trained models for enhanced and robust performance in new populations. Unlike existing supervised model aggregation or super learner approaches, U-aggregation assumes no observed labels or outcomes in the target population. Our method addresses limitations in existing unsupervised model aggregation techniques by accommodating more realistic settings, including heteroskedasticity at both the model and individual levels, and the presence of adversarial models. Drawing on insights from random matrix theory, U-aggregation incorporates a variance stabilization step and an iterative sparse signal recovery process. These steps improve the estimation of individuals' true underlying risks in the target population and evaluate the relative performance of candidate models. We provide a theoretical investigation and systematic numerical experiments to elucidate the properties of U-aggregation. We demonstrate its potential real-world application by using U-aggregation to enhance genetic risk prediction of complex traits, leveraging publicly available models from the PGS Catalog.

LEARNER: A Transfer Learning Method for Low-Rank Matrix Estimation

Dec 29, 2024

Abstract: Low-rank matrix estimation is a fundamental problem in statistics and machine learning. In the context of heterogeneous data generated from diverse sources, a key challenge lies in leveraging data from a source population to enhance the estimation of a low-rank matrix in a target population of interest. One such example is estimating associations between genetic variants and diseases in non-European ancestry groups. We propose an approach that leverages similarity in the latent row and column spaces between the source and target populations to improve estimation in the target population, which we refer to as LatEnt spAce-based tRaNsfer lEaRning (LEARNER). LEARNER is based on performing a low-rank approximation of the target population data that penalizes differences in the latent row and column spaces between the source and target populations. We present a cross-validation approach that allows the method to adapt to the degree of heterogeneity across populations. We conducted extensive simulations, which found that LEARNER often outperforms the benchmark approach that only uses the target population data, especially as the signal-to-noise ratio in the source population increases. We also performed an illustrative application and empirical comparison of LEARNER and benchmark approaches in a re-analysis of a genome-wide association study in the BioBank Japan cohort. LEARNER is implemented in the R package learner.
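
As a point of reference for the "latent row and column spaces" mentioned above, the following is a minimal sketch of a generic rank-r truncated SVD applied to target data alone. It is not the LEARNER estimator, and whether it matches the paper's target-only benchmark is an assumption; the function name `truncated_svd`, the simulated data, and the chosen rank are all hypothetical.

```python
import numpy as np

def truncated_svd(Y, rank):
    """Return the leading `rank` singular triplets of Y.

    The columns of U span the latent row space estimate and the rows of Vt
    span the latent column space estimate.
    """
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    return U[:, :rank], s[:rank], Vt[:rank, :]

rng = np.random.default_rng(0)

# Hypothetical target data: a rank-2 signal plus small Gaussian noise.
signal = rng.normal(size=(30, 2)) @ rng.normal(size=(2, 20))
Y_target = signal + 0.1 * rng.normal(size=(30, 20))

# Target-only low-rank estimate (no source population is used here).
U, s, Vt = truncated_svd(Y_target, rank=2)
estimate = U @ np.diag(s) @ Vt
```

A transfer learning method in the spirit of the abstract would additionally compare `U` and `Vt` with their source-population counterparts; that penalty is not shown because the abstract does not specify its form.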

Federated One-Shot Ensemble Clustering

Sep 12, 2024

Abstract: Cluster analysis across multiple institutions poses significant challenges due to data-sharing restrictions. To overcome these limitations, we introduce the Federated One-shot Ensemble Clustering (FONT) algorithm, a novel solution tailored for multi-site analyses under such constraints. FONT requires only a single round of communication between sites and ensures privacy by exchanging only fitted model parameters and class labels. The algorithm combines locally fitted clustering models into a data-adaptive ensemble, making it broadly applicable to various clustering techniques and robust to differences in cluster proportions across sites. Our theoretical analysis validates the effectiveness of the data-adaptive weights learned by FONT, and simulation studies demonstrate its superior performance compared to existing benchmark methods. We applied FONT to identify subgroups of patients with rheumatoid arthritis across two health systems, revealing improved consistency of patient clusters across sites, while locally fitted clusters proved less transferable. FONT is particularly well-suited for real-world applications with stringent communication and privacy constraints, offering a scalable and practical solution for multi-site clustering.

Quantum Machine Learning: Performance and Security Implications in Real-World Applications

Aug 08, 2024

Abstract: Quantum computing has garnered significant attention in recent years from both academia and industry due to its potential to achieve a "quantum advantage" over classical computers. The advent of quantum computing introduces new challenges for security and privacy. This poster explores the performance and security implications of quantum computing through a case study of machine learning in a real-world application. We compare the performance of quantum machine learning (QML) algorithms to their classical counterparts using the Alzheimer's disease dataset. Our results indicate that QML algorithms show promising potential but have not yet surpassed classical algorithms in terms of learning capability and convergence difficulty, and that running quantum algorithms through simulations on classical computers requires a significantly large amount of memory and CPU time. Our study also indicates that QML algorithms inherit vulnerabilities from classical machine learning algorithms while also introducing new attack vectors.

A Transfer Learning Causal Approach to Evaluate Racial/Ethnic and Geographic Variation in Outcomes Following Congenital Heart Surgery

Mar 21, 2024

Abstract: Congenital heart defects (CHD) are the most prevalent birth defects in the United States and surgical outcomes vary considerably across the country. The outcomes of treatment for CHD differ for specific patient subgroups, with non-Hispanic Black and Hispanic populations experiencing higher rates of mortality and morbidity. A valid comparison of outcomes within racial/ethnic subgroups is difficult given large differences in case-mix and small subgroup sizes. We propose a causal inference framework for outcome assessment and leverage advances in transfer learning to incorporate data from both target and source populations to help estimate causal effects while accounting for different sources of risk factor and outcome differences across populations. Using the Society of Thoracic Surgeons' Congenital Heart Surgery Database (STS-CHSD), we focus on a national cohort of patients undergoing the Norwood operation from 2016-2022 to assess operative mortality and morbidity outcomes across U.S. geographic regions by race/ethnicity. We find racial and ethnic outcome differences after controlling for potential confounding factors. While geography does not have a causal effect on outcomes for non-Hispanic Caucasian patients, non-Hispanic Black patients experience wide variability in outcomes with estimated 30-day mortality ranging from 5.9% (standard error 2.2%) to 21.6% (4.4%) across U.S. regions.

Parrot-Trained Adversarial Examples: Pushing the Practicality of Black-Box Audio Attacks against Speaker Recognition Models

Nov 17, 2023

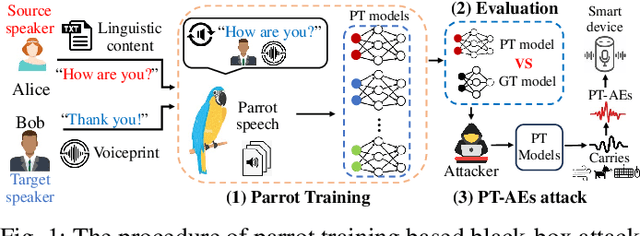

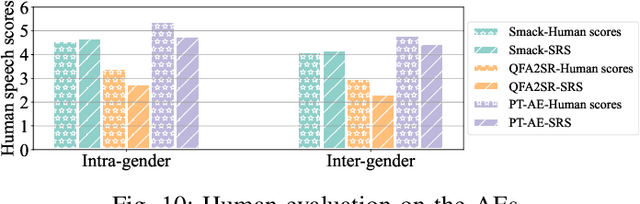

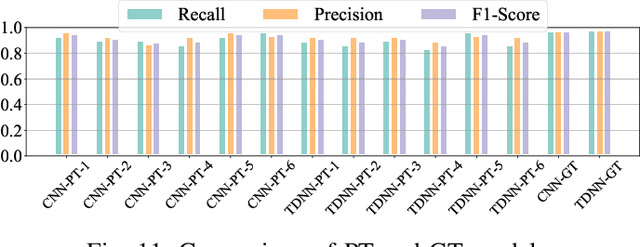

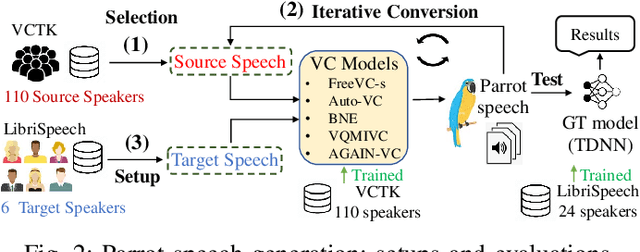

Abstract: Audio adversarial examples (AEs) have posed significant security challenges to real-world speaker recognition systems. Most black-box attacks still require certain information from the speaker recognition model to be effective (e.g., repeated probing and knowledge of similarity scores). This work aims to push the practicality of black-box attacks by minimizing the attacker's knowledge about a target speaker recognition model. Although it is not feasible for an attacker to succeed with completely zero knowledge, we assume that the attacker only knows a short (a few seconds long) speech sample of a target speaker. Without any probing to gain further knowledge about the target model, we propose a new mechanism, called parrot training, to generate AEs against the target model. Motivated by recent advancements in voice conversion (VC), we propose to use this single short sample to generate more synthetic speech samples that sound like the target speaker, called parrot speech. Then, we use these parrot speech samples to train a parrot-trained (PT) surrogate model for the attacker. Under a joint transferability and perception framework, we investigate different ways to generate AEs on the PT model (called PT-AEs) to ensure the PT-AEs can be generated with high transferability to a black-box target model with good human perceptual quality. Real-world experiments show that the resultant PT-AEs achieve attack success rates of 45.8%-80.8% against the open-source models in the digital-line scenario and 47.9%-58.3% against smart devices, including Apple HomePod (Siri), Amazon Echo, and Google Home, in the over-the-air scenario.

Multi-Task Learning with Summary Statistics

Jul 05, 2023

Abstract: Multi-task learning has emerged as a powerful machine learning paradigm for integrating data from multiple sources, leveraging similarities between tasks to improve overall model performance. However, the application of multi-task learning to real-world settings is hindered by data-sharing constraints, especially in healthcare settings. To address this challenge, we propose a flexible multi-task learning framework utilizing summary statistics from various sources. Additionally, we present an adaptive parameter selection approach based on a variant of Lepski's method, allowing for data-driven tuning parameter selection when only summary statistics are available. Our systematic non-asymptotic analysis characterizes the performance of the proposed methods under various regimes of the sample complexity and overlap. We demonstrate our theoretical findings and the performance of the method through extensive simulations. This work offers a more flexible tool for training related models across various domains, with practical implications in genetic risk prediction and many other fields.
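
To illustrate why summary statistics can stand in for individual-level data, here is a minimal sketch for the simplest case, a linear model fitted by (ridge) least squares, where each site shares only its cross-products. This is not the paper's framework: the pooled fit below treats all sites as a single task, and the names `site_summary` and `fit_from_summaries`, the penalty `lam`, and the simulated data are hypothetical.

```python
import numpy as np

def site_summary(X, y):
    """Summary statistics one site would share: X'X and X'y (no raw rows)."""
    return X.T @ X, X.T @ y

def fit_from_summaries(summaries, lam=0.0):
    """Ridge/least-squares coefficients computed from summed site summaries."""
    XtX = sum(s[0] for s in summaries)
    Xty = sum(s[1] for s in summaries)
    p = XtX.shape[0]
    return np.linalg.solve(XtX + lam * np.eye(p), Xty)

rng = np.random.default_rng(1)
beta_true = np.array([1.0, -2.0, 0.5])

# Two hypothetical sites that cannot exchange individual-level data.
sites = []
for n in (50, 80):
    X = rng.normal(size=(n, 3))
    y = X @ beta_true + 0.1 * rng.normal(size=n)
    sites.append((X, y))

beta_summary = fit_from_summaries([site_summary(X, y) for X, y in sites])

# Reference fit that pools the raw data, shown only to check equivalence.
X_all = np.vstack([X for X, _ in sites])
y_all = np.concatenate([y for _, y in sites])
beta_pooled = np.linalg.lstsq(X_all, y_all, rcond=None)[0]
```

With `lam=0.0` the summary-based coefficients coincide with the pooled least-squares fit up to numerical error, because the normal equations depend on the data only through the summed cross-products.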

Perception-Aware Attack: Creating Adversarial Music via Reverse-Engineering Human Perception

Jul 26, 2022

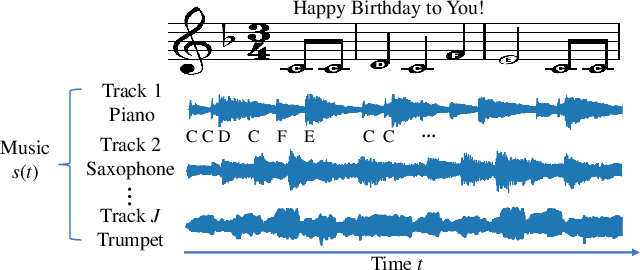

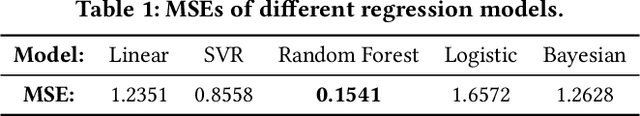

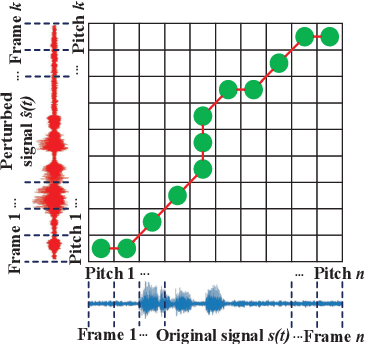

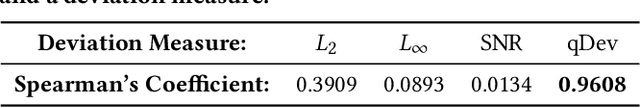

Abstract: Recently, adversarial machine learning attacks have posed serious security threats against practical audio signal classification systems, including speech recognition, speaker recognition, and music copyright detection. Previous studies have mainly focused on ensuring the effectiveness of attacking an audio signal classifier by creating a small noise-like perturbation on the original signal. It is still unclear whether an attacker is able to create audio signal perturbations that are well perceived by human beings while remaining effective as attacks. This is particularly important for music signals as they are carefully crafted with human-enjoyable audio characteristics. In this work, we formulate the adversarial attack against music signals as a new perception-aware attack framework, which integrates human study into adversarial attack design. Specifically, we conduct a human study to quantify the human perception with respect to a change of a music signal. We invite human participants to rate their perceived deviation based on pairs of original and perturbed music signals, and reverse-engineer the human perception process by regression analysis to predict the human-perceived deviation given a perturbed signal. The perception-aware attack is then formulated as an optimization problem that finds an optimal perturbation signal to minimize the prediction of perceived deviation from the regressed human perception model. We use the perception-aware framework to design a realistic adversarial music attack against YouTube's copyright detector. Experiments show that the perception-aware attack produces adversarial music with significantly better perceptual quality than prior work.

Generalized Federated Learning via Sharpness Aware Minimization

Jun 06, 2022

Abstract: Federated Learning (FL) is a promising framework for performing privacy-preserving, distributed learning with a set of clients. However, the data distribution among clients is often non-IID, i.e., it exhibits distribution shift, which makes efficient optimization difficult. To tackle this problem, many FL algorithms focus on mitigating the effects of data heterogeneity across clients by increasing the performance of the global model. However, almost all algorithms use Empirical Risk Minimization (ERM) as the local optimizer, which can easily cause the global model to fall into a sharp valley and produce a large deviation for some local clients. Therefore, in this paper, we revisit the solutions to the distribution shift problem in FL with a focus on local learning generality. To this end, we propose a general, effective algorithm, FedSAM, based on the Sharpness Aware Minimization (SAM) local optimizer, and develop a momentum FL algorithm to bridge local and global models, MoFedSAM. Theoretically, we show the convergence analysis of these two algorithms and demonstrate the generalization bound of FedSAM. Empirically, our proposed algorithms substantially outperform existing FL studies and significantly decrease the learning deviation.
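
For readers unfamiliar with SAM, the following is a minimal sketch of a single generic SAM update as one client might run it locally. It is not the FedSAM or MoFedSAM algorithm: server aggregation and momentum are omitted, and the function name `sam_step`, the quadratic objective, and the values of `lr` and `rho` are hypothetical choices for illustration.

```python
import numpy as np

def sam_step(w, grad_fn, lr=0.1, rho=0.05):
    """One Sharpness-Aware Minimization (SAM) update on parameters w."""
    g = grad_fn(w)
    # Ascent step: move to the approximate worst-case point within an
    # L2 ball of radius rho around the current weights.
    eps = rho * g / (np.linalg.norm(g) + 1e-12)
    g_sharp = grad_fn(w + eps)
    # Descent step: update the original weights using the gradient
    # evaluated at the perturbed point.
    return w - lr * g_sharp

# Hypothetical local objective for one client: f(w) = 0.5 * ||A w - b||^2
A = np.array([[2.0, 0.0], [0.0, 1.0]])
b = np.array([1.0, -1.0])

def grad_fn(w):
    return A.T @ (A @ w - b)

def loss(w):
    return 0.5 * np.sum((A @ w - b) ** 2)

w = np.zeros(2)
initial_loss = loss(w)
for _ in range(200):
    w = sam_step(w, grad_fn)
final_loss = loss(w)
```

Compared with a plain ERM gradient step, the only change is that the descent gradient is taken at `w + eps` rather than at `w`, which is what biases the local optimizer toward flatter regions of the loss.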
