Zhuo Lu

Parrot-Trained Adversarial Examples: Pushing the Practicality of Black-Box Audio Attacks against Speaker Recognition Models

Nov 17, 2023

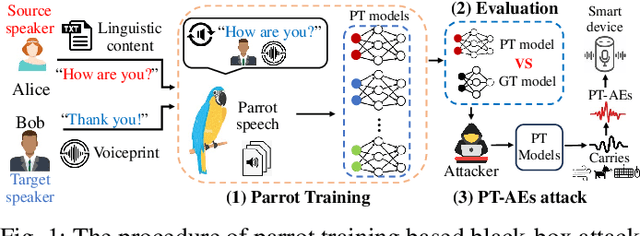

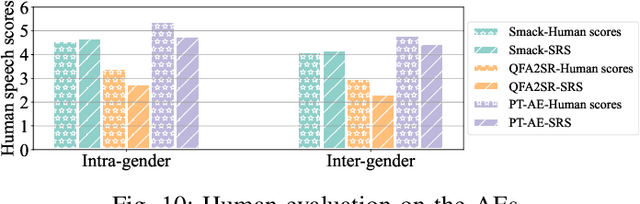

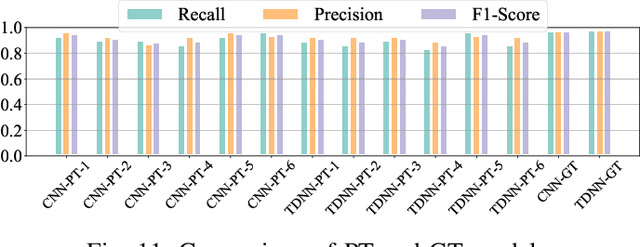

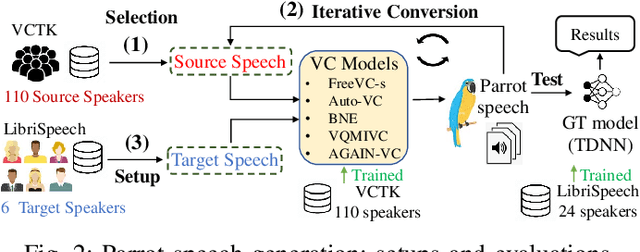

Abstract: Audio adversarial examples (AEs) have posed significant security challenges to real-world speaker recognition systems. Most black-box attacks still require certain information from the speaker recognition model to be effective (e.g., repeated probing and knowledge of similarity scores). This work aims to push the practicality of black-box attacks by minimizing the attacker's knowledge about a target speaker recognition model. Although it is not feasible for an attacker to succeed with completely zero knowledge, we assume that the attacker knows only a short (a few seconds long) speech sample of a target speaker. Without any probing to gain further knowledge about the target model, we propose a new mechanism, called parrot training, to generate AEs against the target model. Motivated by recent advancements in voice conversion (VC), we propose to use this single short speech sample to generate more synthetic speech samples that sound like the target speaker, called parrot speech. Then, we use these parrot speech samples to train a parrot-trained (PT) surrogate model for the attacker. Under a joint transferability and perception framework, we investigate different ways to generate AEs on the PT model (called PT-AEs) to ensure that they transfer well to a black-box target model while retaining good human perceptual quality. Real-world experiments show that the resultant PT-AEs achieve attack success rates of 45.8% - 80.8% against the open-source models in the digital-line scenario and 47.9% - 58.3% against smart devices, including Apple HomePod (Siri), Amazon Echo, and Google Home, in the over-the-air scenario.

Data-Driven Next-Generation Wireless Networking: Embracing AI for Performance and Security

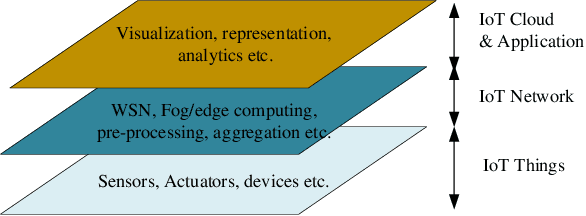

Jun 09, 2023

Abstract: New network architectures, such as the Internet of Things (IoT), 5G, and next-generation (NextG) cellular systems, put forward emerging challenges to the design of future wireless networks toward ultra-high data rate, massive data processing, smart designs, low-cost deployment, reliability and security in dynamic environments. As one of the most promising techniques today, artificial intelligence (AI) is advocated to enable a data-driven paradigm for wireless network design. In this paper, we are motivated to review existing AI techniques and their applications for the full wireless network protocol stack toward improving network performance and security. Our goal is to summarize the current motivation, challenges, and methodology of using AI to enhance wireless networking from the physical to the application layer, and shed light on creating new AI-enabled algorithms, mechanisms, protocols, and system designs for future data-driven wireless networking.

Perception-Aware Attack: Creating Adversarial Music via Reverse-Engineering Human Perception

Jul 26, 2022

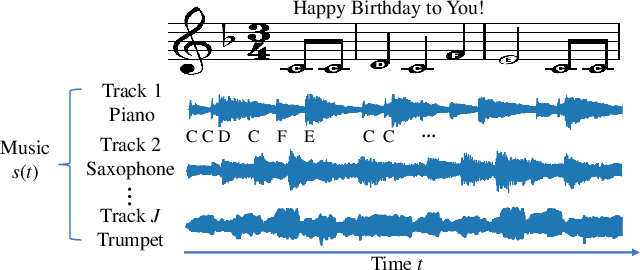

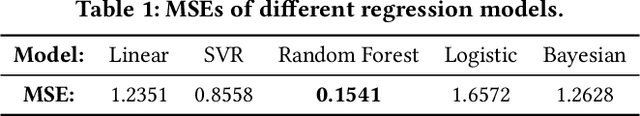

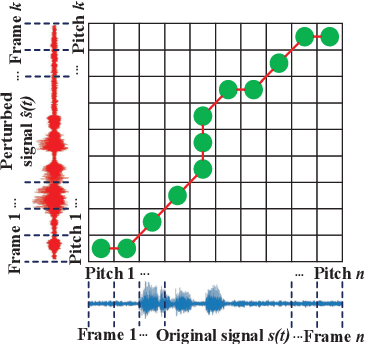

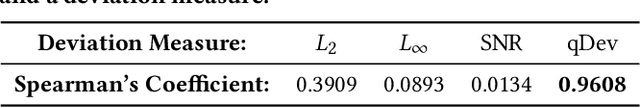

Abstract: Recently, adversarial machine learning attacks have posed serious security threats against practical audio signal classification systems, including speech recognition, speaker recognition, and music copyright detection. Previous studies have mainly focused on ensuring the effectiveness of attacking an audio signal classifier by creating a small noise-like perturbation on the original signal. It is still unclear whether an attacker can create audio signal perturbations that are both effective as attacks and perceived well by human listeners. This is particularly important for music signals as they are carefully crafted with human-enjoyable audio characteristics. In this work, we formulate the adversarial attack against music signals as a new perception-aware attack framework, which integrates human study into adversarial attack design. Specifically, we conduct a human study to quantify human perception with respect to a change in a music signal. We invite human participants to rate their perceived deviation based on pairs of original and perturbed music signals, and reverse-engineer the human perception process by regression analysis to predict the human-perceived deviation given a perturbed signal. The perception-aware attack is then formulated as an optimization problem that finds an optimal perturbation signal to minimize the perceived deviation predicted by the regressed human perception model. We use the perception-aware framework to design a realistic adversarial music attack against YouTube's copyright detector. Experiments show that the perception-aware attack produces adversarial music with significantly better perceptual quality than prior work.

Generalized Federated Learning via Sharpness Aware Minimization

Jun 06, 2022

Abstract: Federated Learning (FL) is a promising framework for performing privacy-preserving, distributed learning with a set of clients. However, the data distribution among clients is often non-IID, i.e., it exhibits distribution shift, which makes efficient optimization difficult. To tackle this problem, many FL algorithms focus on mitigating the effects of data heterogeneity across clients by increasing the performance of the global model. However, almost all algorithms use Empirical Risk Minimization (ERM) as the local optimizer, which can easily drive the global model into a sharp valley and cause a large deviation for some local clients. Therefore, in this paper, we revisit the solutions to the distribution shift problem in FL with a focus on local learning generality. To this end, we propose a general, effective algorithm, FedSAM, based on a Sharpness Aware Minimization (SAM) local optimizer, and develop a momentum FL algorithm to bridge local and global models, MoFedSAM. Theoretically, we show the convergence analysis of these two algorithms and demonstrate the generalization bound of FedSAM. Empirically, our proposed algorithms substantially outperform existing FL studies and significantly decrease the learning deviation.
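To illustrate the kind of local optimizer the abstract refers to, here is a minimal sketch of a single generic SAM update in NumPy. It is not the FedSAM or MoFedSAM algorithm itself: the function names, the toy quadratic loss, and the values of `lr` and `rho` are assumptions chosen for illustration, and the federated aggregation and momentum steps are omitted.

```python
import numpy as np

def sam_step(w, grad_fn, lr=0.1, rho=0.05):
    """One generic Sharpness Aware Minimization step on parameters w.

    grad_fn, lr, and rho are illustrative assumptions, not values from the paper.
    """
    g = grad_fn(w)
    norm = np.linalg.norm(g)
    if norm == 0.0:
        # Already at a stationary point; nothing to perturb.
        return w.copy()
    # Ascent step: move to the approximate worst-case point within radius rho.
    w_adv = w + rho * g / norm
    # Descent step: update the original weights with the gradient taken at w_adv.
    return w - lr * grad_fn(w_adv)

def quadratic_grad(w):
    # Gradient of the toy loss 0.5 * ||w||^2, standing in for a client's local loss.
    return w

w = np.array([3.0, 4.0])
w_next = sam_step(w, quadratic_grad, lr=0.1, rho=0.5)
```

In a federated setting, each client would presumably run several such steps on its own data before the server averages the resulting weights; that outer loop is left out here.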

IoTGAN: GAN Powered Camouflage Against Machine Learning Based IoT Device Identification

Jan 10, 2022

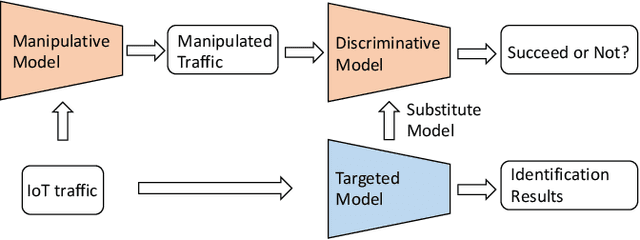

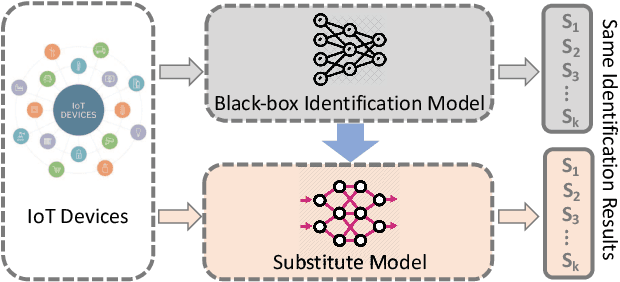

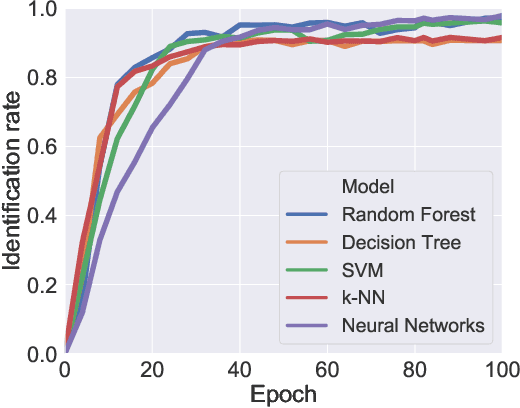

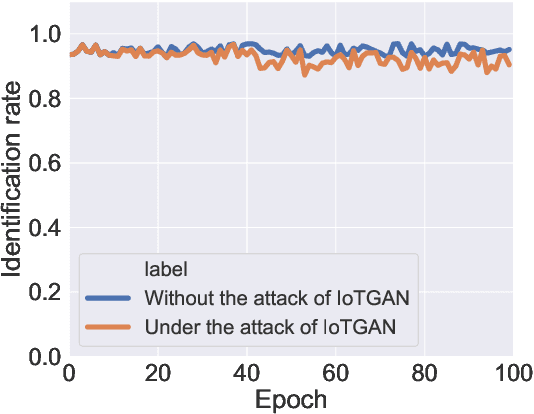

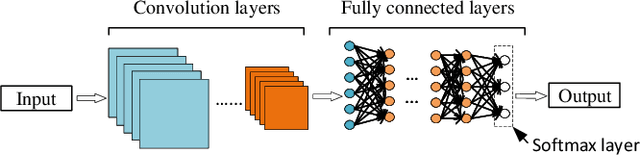

Abstract: With the proliferation of IoT devices, researchers have developed a variety of IoT device identification methods with the assistance of machine learning. Nevertheless, the security of these identification methods mostly depends on collected training data. In this research, we propose a novel attack strategy named IoTGAN to manipulate an IoT device's traffic such that it can evade machine learning based IoT device identification. In the development of IoTGAN, we face two major technical challenges: (i) how to obtain the discriminative model in a black-box setting, and (ii) how to add perturbations to IoT traffic through the manipulative model so as to evade identification without affecting the functionality of IoT devices. To address these challenges, a neural network based substitute model is used to fit the target model in black-box settings; it works as the discriminative model in IoTGAN. A manipulative model is trained to add adversarial perturbations to the IoT device's traffic to evade the substitute model. Experimental results show that IoTGAN can successfully achieve the attack goals. We also develop efficient countermeasures to protect machine learning based IoT device identification from being undermined by IoTGAN.

LoMar: A Local Defense Against Poisoning Attack on Federated Learning

Jan 08, 2022

Abstract: Federated learning (FL) provides a highly efficient decentralized machine learning framework, where the training data remains distributed at remote clients in a network. Though FL enables a privacy-preserving mobile edge computing framework using IoT devices, recent studies have shown that this approach is susceptible to poisoning attacks from the side of remote clients. To address poisoning attacks on FL, we provide a two-phase defense algorithm called Local Malicious Factor (LoMar). In phase I, LoMar scores model updates from each remote client by measuring the relative distribution over their neighbors using a kernel density estimation method. In phase II, an optimal threshold is approximated to distinguish malicious and clean updates from a statistical perspective. Comprehensive experiments on four real-world datasets have been conducted, and the experimental results show that our defense strategy can effectively protect the FL system. Specifically, the defense performance on the Amazon dataset under a label-flipping attack indicates that, compared with FG+Krum, LoMar increases the target label testing accuracy from 96.0% to 98.8%, and the overall averaged testing accuracy from 90.1% to 97.0%.
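The two phases described above can be sketched roughly as follows. This is a simplified stand-in and not LoMar's actual scoring rule: the Gaussian kernel, the ratio-based score, the neighbor count `k`, the `bandwidth`, and the fixed `threshold` of 0.5 are all assumptions made for illustration (the paper approximates an optimal threshold rather than fixing one).

```python
import numpy as np

def kde_density(points, query, bandwidth):
    # Gaussian kernel density of `query` estimated from `points` (unnormalized).
    d2 = np.sum((points - query) ** 2, axis=1)
    return np.mean(np.exp(-d2 / (2.0 * bandwidth ** 2)))

def relative_density_scores(updates, k=3, bandwidth=1.0):
    """Score each update by its density relative to its k nearest neighbors.

    Scores near 1 mean the update sits in a region as dense as its neighbors';
    scores near 0 mean it is isolated. This is a hypothetical simplification.
    """
    n = len(updates)
    dists = np.linalg.norm(updates[:, None, :] - updates[None, :, :], axis=2)
    np.fill_diagonal(dists, np.inf)  # exclude each update from its own neighbor set
    neighbors = np.argsort(dists, axis=1)[:, :k]
    density = np.array(
        [kde_density(updates[neighbors[i]], updates[i], bandwidth) for i in range(n)]
    )
    return np.array(
        [density[i] / (np.mean(density[neighbors[i]]) + 1e-12) for i in range(n)]
    )

# Five similar (clean-looking) updates and one far-away update.
updates = np.array(
    [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [0.1, 0.1], [0.05, 0.05], [5.0, 5.0]]
)
scores = relative_density_scores(updates, k=3, bandwidth=1.0)

threshold = 0.5  # assumed fixed cut-off, standing in for the approximated threshold
flagged = scores < threshold
```

In this toy example only the last update falls below the cut-off; real model updates are high-dimensional, so the bandwidth and neighbor count would need care.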

FedLGA: Towards System-Heterogeneity of Federated Learning via Local Gradient Approximation

Dec 22, 2021

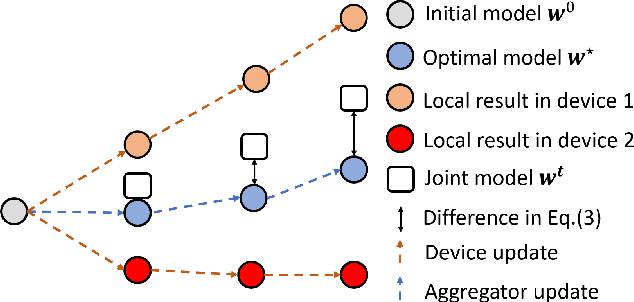

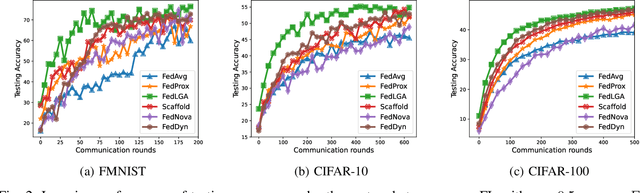

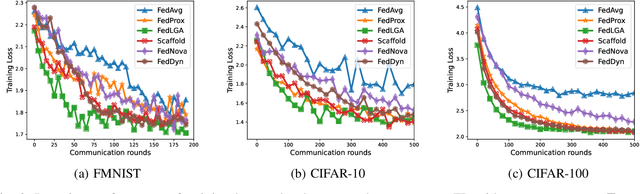

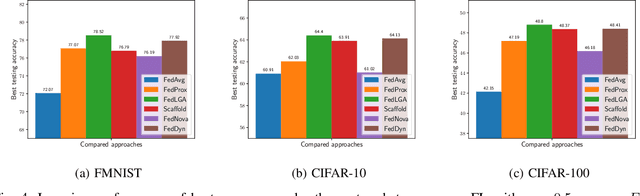

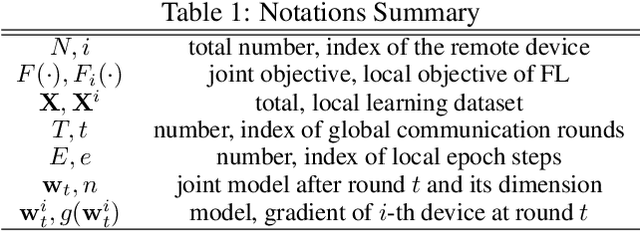

Abstract: Federated Learning (FL) is a decentralized machine learning architecture, which leverages a large number of remote devices to learn a joint model with distributed training data. However, system-heterogeneity is one major challenge for achieving robust distributed learning performance in an FL network, and it has two aspects: i) device-heterogeneity due to the diverse computational capacity among devices; ii) data-heterogeneity due to the non-identically distributed data across the network. Though there have been benchmarks against heterogeneous FL, e.g., FedProx, the prior studies lack formalization and it remains an open problem. In this work, we formalize the system-heterogeneous FL problem and propose a new algorithm, called FedLGA, which addresses this problem by bridging the divergence of local model updates via gradient approximation. To achieve this, FedLGA provides an alternated Hessian estimation method, which only requires extra linear complexity on the aggregator. Theoretically, we show that with a device-heterogeneous ratio $\rho$, FedLGA achieves convergence rates on non-i.i.d. distributed FL training data against non-convex optimization problems of $\mathcal{O} \left( \frac{(1+\rho)}{\sqrt{ENT}} + \frac{1}{T} \right)$ and $\mathcal{O} \left( \frac{(1+\rho)\sqrt{E}}{\sqrt{TK}} + \frac{1}{T} \right)$ for full and partial device participation respectively, where $E$ is the number of local learning epochs, $T$ is the total number of communication rounds, $N$ is the total number of devices, and $K$ is the number of devices selected in one communication round under the partial participation scheme. The results of comprehensive experiments on multiple datasets show that FedLGA outperforms current FL benchmarks against the system-heterogeneity.
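To get a feel for how the two rates scale, the expressions inside the $\mathcal{O}(\cdot)$ terms can be evaluated directly. The sketch below drops all hidden constants, so the numbers are only meaningful relative to one another; the function names and the sample values of $\rho$, $E$, $N$, $K$, and $T$ are assumptions, not settings from the paper.

```python
import math

def full_participation_term(rho, E, N, T):
    # (1 + rho) / sqrt(E * N * T) + 1 / T, with hidden constants omitted.
    return (1.0 + rho) / math.sqrt(E * N * T) + 1.0 / T

def partial_participation_term(rho, E, K, T):
    # (1 + rho) * sqrt(E) / sqrt(T * K) + 1 / T, with hidden constants omitted.
    return (1.0 + rho) * math.sqrt(E) / math.sqrt(T * K) + 1.0 / T

# Hypothetical setting: 100 devices, 25 selected per round, 4 local epochs.
full = full_participation_term(rho=0.5, E=4, N=100, T=10_000)
partial = partial_participation_term(rho=0.5, E=4, K=25, T=10_000)
```

Under these assumed values the partial-participation term is the larger of the two, and both grow linearly in $(1+\rho)$ through their first summand.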

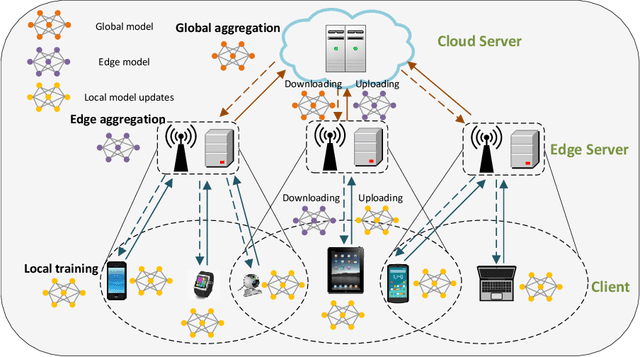

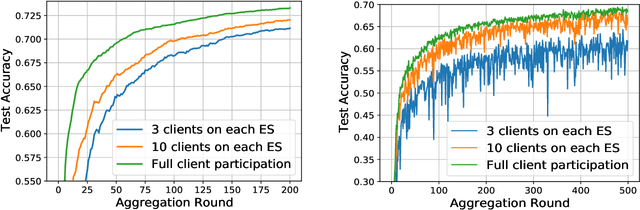

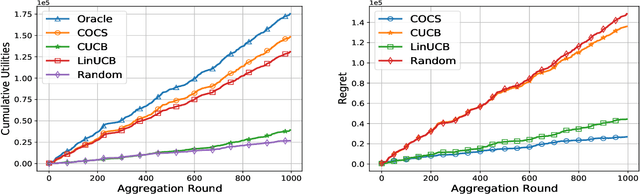

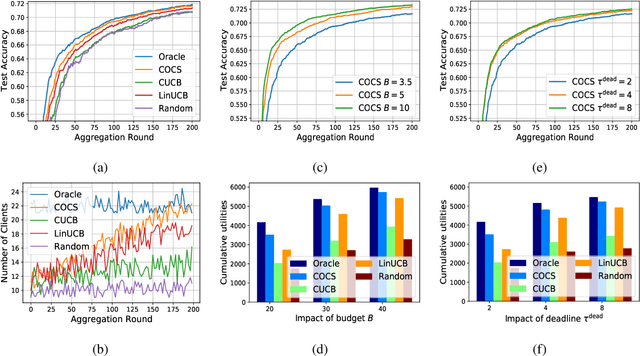

Context-Aware Online Client Selection for Hierarchical Federated Learning

Dec 03, 2021

Abstract: Federated Learning (FL) has been considered an appealing framework for tackling the data privacy issues of mobile devices compared to conventional Machine Learning (ML). Using Edge Servers (ESs) as intermediaries to perform model aggregation in proximity can reduce the transmission overhead, and it offers great potential for low-latency FL, where the hierarchical architecture of FL (HFL) has attracted more attention. Designing a proper client selection policy can significantly improve training performance, and it has been extensively used in FL studies. However, to the best of our knowledge, there are no studies focusing on HFL. In addition, client selection for HFL faces more challenges than conventional FL, e.g., the time-varying connection of client-ES pairs and the limited budget of the Network Operator (NO). In this paper, we investigate a client selection problem for HFL, where the NO learns the number of successful participating clients to improve the training performance (i.e., to select as many clients as possible in each round) under the limited budget on each ES. An online policy, called Context-aware Online Client Selection (COCS), is developed based on Contextual Combinatorial Multi-Armed Bandit (CC-MAB). COCS observes the side-information (context) of local computing and transmission of client-ES pairs and makes client selection decisions to maximize the NO's utility given a limited budget. Theoretically, COCS achieves a sublinear regret compared to an Oracle policy on both strongly convex and non-convex HFL. Simulation results also support the efficiency of the proposed COCS policy on real-world datasets.

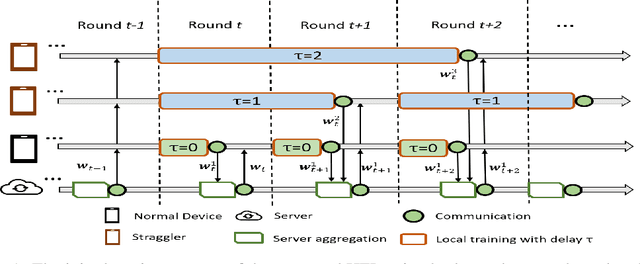

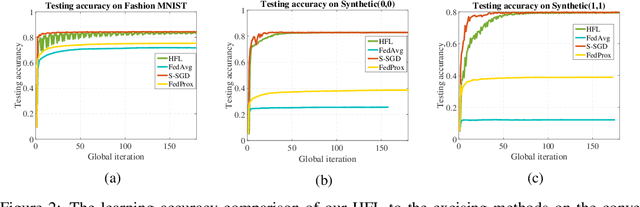

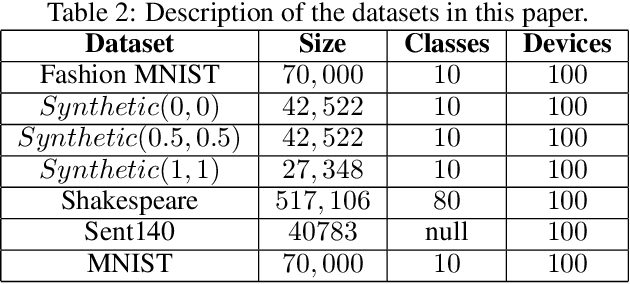

Stragglers Are Not Disaster: A Hybrid Federated Learning Algorithm with Delayed Gradients

Feb 12, 2021

Abstract: Federated learning (FL) is a new machine learning framework which trains a joint model across a large number of decentralized computing devices. Existing methods, e.g., Federated Averaging (FedAvg), are able to provide an optimization guarantee by synchronously training the joint model, but usually suffer from stragglers, i.e., IoT devices with low computing power or communication bandwidth, especially on heterogeneous optimization problems. To mitigate the influence of stragglers, this paper presents a novel FL algorithm, namely Hybrid Federated Learning (HFL), to balance learning efficiency and effectiveness. It consists of two major components: a synchronous kernel and an asynchronous updater. Unlike traditional synchronous FL methods, our HFL introduces the asynchronous updater, which actively pulls unsynchronized and delayed local weights from stragglers. An adaptive approximation method, Adaptive Delayed-SGD (AD-SGD), is proposed to merge the delayed local updates into the joint model. The theoretical analysis of HFL shows that the convergence rate of the proposed algorithm is $\mathcal{O}(\frac{1}{t+\tau})$ for both convex and non-convex optimization problems.

Adversarial Machine Learning based Partial-model Attack in IoT

Jul 10, 2020

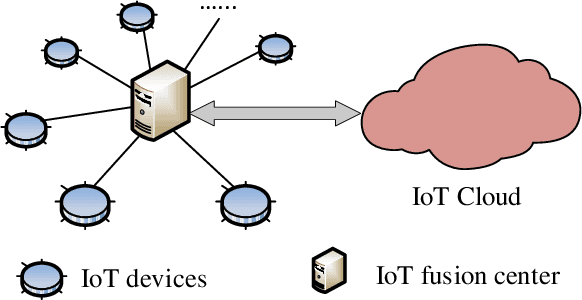

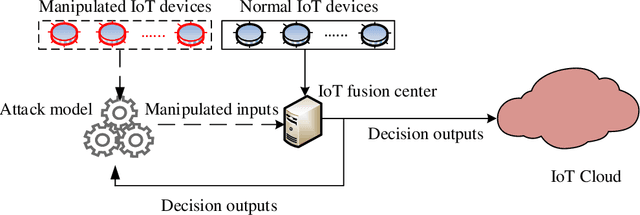

Abstract: As the Internet of Things (IoT) has emerged as the next logical stage of the Internet, it has become imperative to understand the vulnerabilities of IoT systems when supporting diverse applications. Because machine learning has been applied in many IoT systems, the security implications of machine learning need to be studied following an adversarial machine learning approach. In this paper, we propose an adversarial machine learning based partial-model attack in the data fusion/aggregation process of IoT that controls only a small part of the sensing devices. Our numerical results demonstrate the feasibility of this attack to disrupt the decision making in data fusion with limited control of IoT devices, e.g., the attack success rate reaches 83% when the adversary tampers with only 8 out of 20 IoT devices. These results show that the machine learning engine of an IoT system is highly vulnerable to attacks even when the adversary manipulates a small portion of IoT devices, and the outcome of these attacks severely disrupts IoT system operations.
