Stragglers Are Not Disaster: A Hybrid Federated Learning Algorithm with Delayed Gradients

Paper and Code

Feb 12, 2021

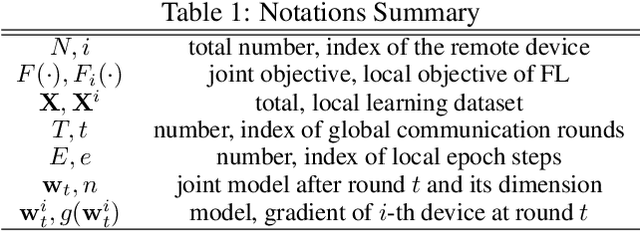

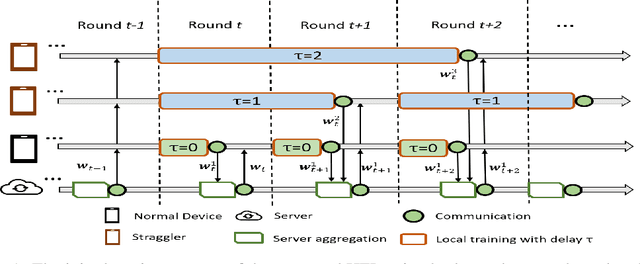

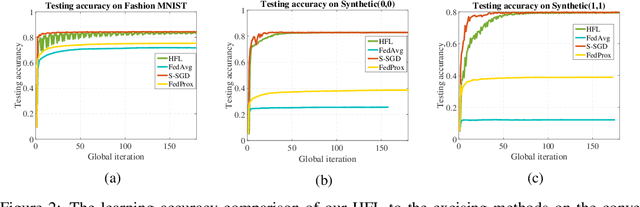

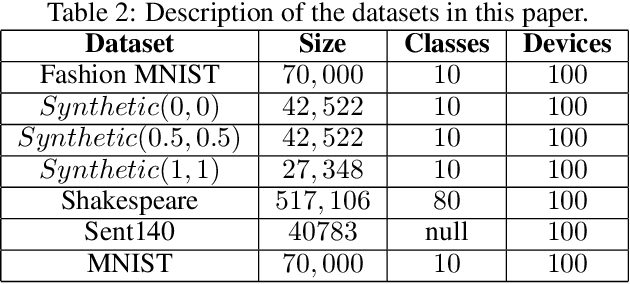

Federated learning (FL) is a new machine learning framework which trains a joint model across a large amount of decentralized computing devices. Existing methods, e.g., Federated Averaging (FedAvg), are able to provide an optimization guarantee by synchronously training the joint model, but usually suffer from stragglers, i.e., IoT devices with low computing power or communication bandwidth, especially on heterogeneous optimization problems. To mitigate the influence of stragglers, this paper presents a novel FL algorithm, namely Hybrid Federated Learning (HFL), to achieve a learning balance in efficiency and effectiveness. It consists of two major components: synchronous kernel and asynchronous updater. Unlike traditional synchronous FL methods, our HFL introduces the asynchronous updater which actively pulls unsynchronized and delayed local weights from stragglers. An adaptive approximation method, Adaptive Delayed-SGD (AD-SGD), is proposed to merge the delayed local updates into the joint model. The theoretical analysis of HFL shows that the convergence rate of the proposed algorithm is $\mathcal{O}(\frac{1}{t+\tau})$ for both convex and non-convex optimization problems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge