Sepehr Samavi

Deploying SICNav in the Field: Safe and Interactive Crowd Navigation using MPC and Bilevel Optimization

Jun 10, 2025

Abstract:Safe and efficient navigation in crowded environments remains a critical challenge for robots that provide a variety of service tasks such as food delivery or autonomous wheelchair mobility. Classical robot crowd navigation methods decouple human motion prediction from robot motion planning, which neglects the closed-loop interactions between humans and robots. This lack of a model for human reactions to the robot plan (e.g. moving out of the way) can cause the robot to get stuck. Our proposed Safe and Interactive Crowd Navigation (SICNav) method is a bilevel Model Predictive Control (MPC) framework that combines prediction and planning into one optimization problem, explicitly modeling interactions among agents. In this paper, we present a systems overview of the crowd navigation platform we use to deploy SICNav in previously unseen indoor and outdoor environments. We provide a preliminary analysis of the system's operation over the course of nearly 7 km of autonomous navigation over two hours in both indoor and outdoor environments.

SICNav-Diffusion: Safe and Interactive Crowd Navigation with Diffusion Trajectory Predictions

Mar 11, 2025

Abstract:To navigate crowds without collisions, robots must interact with humans by forecasting their future motion and reacting accordingly. While learning-based prediction models have shown success in generating likely human trajectory predictions, integrating these stochastic models into a robot controller presents several challenges. The controller needs to account for interactive coupling between planned robot motion and human predictions while ensuring both predictions and robot actions are safe (i.e. collision-free). To address these challenges, we present a receding horizon crowd navigation method for single-robot multi-human environments. We first propose a diffusion model to generate joint trajectory predictions for all humans in the scene. We then incorporate these multi-modal predictions into a SICNav Bilevel MPC problem that simultaneously solves for a robot plan (upper-level) and acts as a safety filter to refine the predictions for non-collision (lower-level). Combining planning and prediction refinement into one bilevel problem ensures that the robot plan and human predictions are coupled. We validate the open-loop trajectory prediction performance of our diffusion model on the commonly used ETH/UCY benchmark and evaluate the closed-loop performance of our robot navigation method in simulation and extensive real-robot experiments demonstrating safe, efficient, and reactive robot motion.

SICNav: Safe and Interactive Crowd Navigation using Model Predictive Control and Bilevel Optimization

Oct 17, 2023

Abstract:Robots need to predict and react to human motions to navigate through a crowd without collisions. Many existing methods decouple prediction from planning, which does not account for the interaction between robot and human motions and can lead to the robot getting stuck. We propose SICNav, a Model Predictive Control (MPC) method that jointly solves for robot motion and predicted crowd motion in closed-loop. We model each human in the crowd to be following an Optimal Reciprocal Collision Avoidance (ORCA) scheme and embed that model as a constraint in the robot's local planner, resulting in a bilevel nonlinear MPC optimization problem. We use a KKT-reformulation to cast the bilevel problem as a single level and use a nonlinear solver to optimize. Our MPC method can influence pedestrian motion while explicitly satisfying safety constraints in a single-robot multi-human environment. We analyze the performance of SICNav in a simulation environment to demonstrate safe robot motion that can influence the surrounding humans. We also validate the trajectory forecasting performance of ORCA on a human trajectory dataset.

Does Unpredictability Influence Driving Behavior?

Jul 28, 2023

Abstract:In this paper we investigate the effect of the unpredictability of surrounding cars on an ego-car performing a driving maneuver. We use Maximum Entropy Inverse Reinforcement Learning to model reward functions for an ego-car conducting a lane change in a highway setting. We define a new feature based on the unpredictability of surrounding cars and use it in the reward function. We learn two reward functions from human data: a baseline and one that incorporates our defined unpredictability feature, then compare their performance with a quantitative and qualitative evaluation. Our evaluation demonstrates that incorporating the unpredictability feature leads to a better fit of human-generated test data. These results encourage further investigation of the effect of unpredictability on driving behavior.

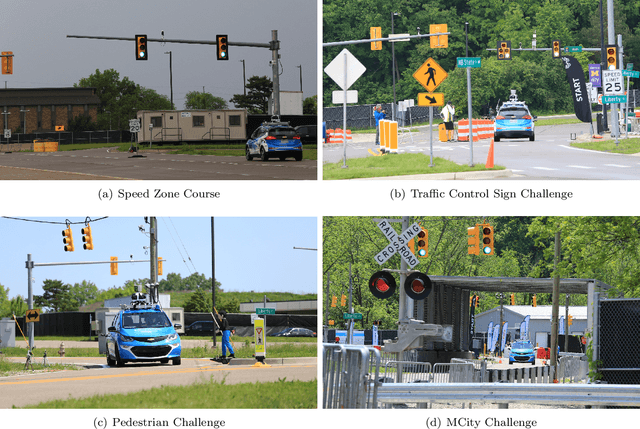

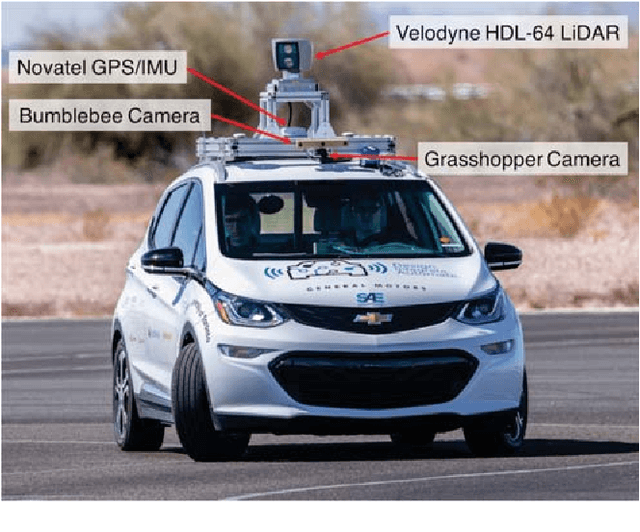

Zeus: A System Description of the Two-Time Winner of the Collegiate SAE AutoDrive Competition

Apr 19, 2020

Abstract:The SAE AutoDrive Challenge is a three-year collegiate competition to develop a self-driving car by 2020. The second year of the competition was held in June 2019 at MCity, a mock town built for self-driving car testing at the University of Michigan. Teams were required to autonomously navigate a series of intersections while handling pedestrians, traffic lights, and traffic signs. Zeus is aUToronto's winning entry in the AutoDrive Challenge. This article describes the system design and development of Zeus as well as many of the lessons learned along the way. This includes details on the team's organizational structure, sensor suite, software components, and performance at the Year 2 competition. With a team of mostly undergraduates and minimal resources, aUToronto has made progress towards a functioning self-driving vehicle, in just two years. This article may prove valuable to researchers looking to develop their own self-driving platform.

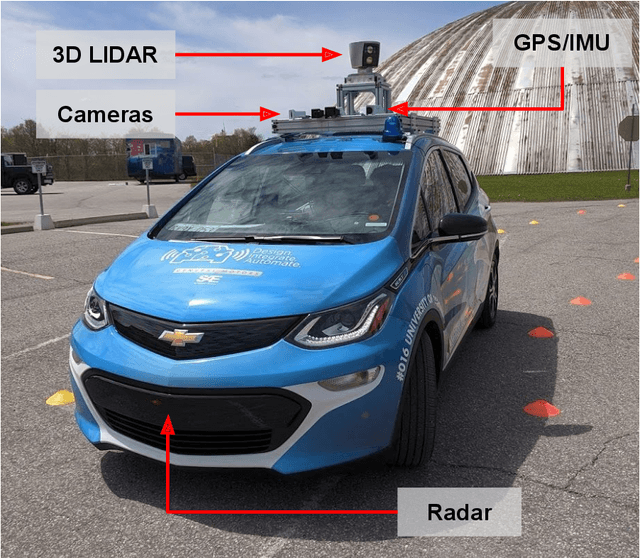

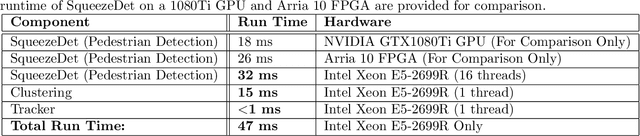

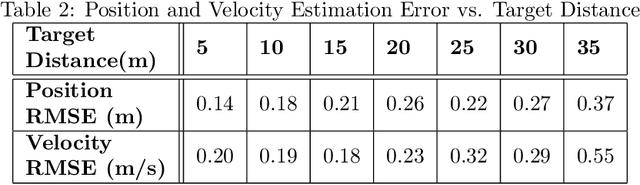

aUToTrack: A Lightweight Object Detection and Tracking System for the SAE AutoDrive Challenge

May 21, 2019

Abstract:The University of Toronto is one of eight teams competing in the SAE AutoDrive Challenge -- a competition to develop a self-driving car by 2020. After placing first at the Year 1 challenge, we are headed to MCity in June 2019 for the second challenge. There, we will interact with pedestrians, cyclists, and cars. For safe operation, it is critical to have an accurate estimate of the position of all objects surrounding the vehicle. The contributions of this work are twofold: First, we present a new object detection and tracking dataset (UofTPed50), which uses GPS to ground truth the position and velocity of a pedestrian. To our knowledge, a dataset of this type for pedestrians has not been shown in the literature before. Second, we present a lightweight object detection and tracking system (aUToTrack) that uses vision, LIDAR, and GPS/IMU positioning to achieve state-of-the-art performance on the KITTI Object Tracking benchmark. We show that aUToTrack accurately estimates the position and velocity of pedestrians, in real-time, using CPUs only. aUToTrack has been tested in closed-loop experiments on a real self-driving car, and we demonstrate its performance on our dataset.

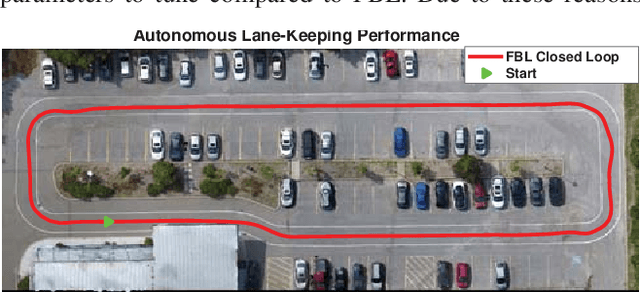

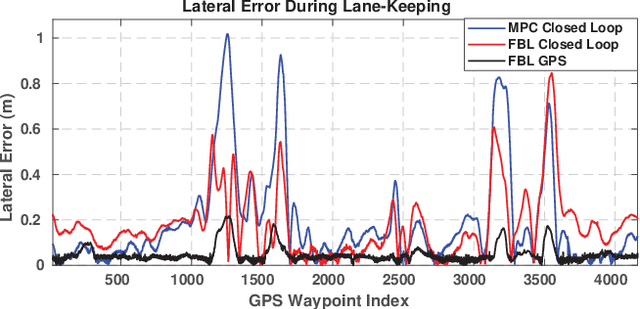

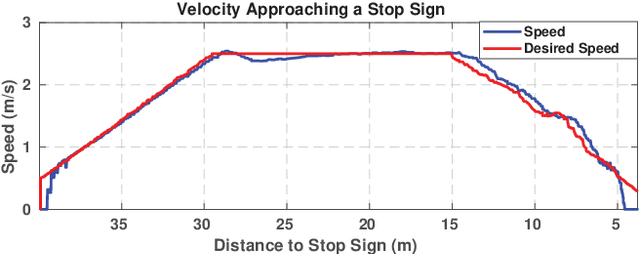

Building a Winning Self-Driving Car in Six Months

Nov 03, 2018

Abstract:The SAE AutoDrive Challenge is a three-year competition to develop a Level 4 autonomous vehicle by 2020. The first set of challenges were held in April of 2018 in Yuma, Arizona. Our team (aUToronto/Zeus) placed first. In this paper, we describe our complete system architecture and specialized algorithms that enabled us to win. We show that it is possible to develop a vehicle with basic autonomy features in just six months relying on simple, robust algorithms. We do not make use of a prior map. Instead, we have developed a multi-sensor visual localization solution. All of our algorithms run in real-time using CPUs only. We also highlight the closed-loop performance of our system in detail in several experiments.
