Sen Chen

Large Language Model Supply Chain: Open Problems From the Security Perspective

Nov 03, 2024

Abstract:Large Language Model (LLM) is changing the software development paradigm and has gained huge attention from both academia and industry. Researchers and developers collaboratively explore how to leverage the powerful problem-solving ability of LLMs for specific domain tasks. Due to the wide usage of LLM-based applications, e.g., ChatGPT, multiple works have been proposed to ensure the security of LLM systems. However, a comprehensive understanding of the entire process of LLM system construction (the LLM supply chain) is crucial, but relevant works are limited. More importantly, the security issues hidden in the LLM SC, which could highly impact the reliable usage of LLMs, lack exploration. Existing works mainly focus on assuring the quality of LLMs at the model level; security assurance for the entire LLM SC is ignored. In this work, we take the first step to discuss the potential security risks in each component as well as the integration between components of LLM SC. We summarize 12 security-related risks and provide promising guidance to help build safer LLM systems. We hope our work can facilitate the evolution of artificial general intelligence with secure LLM ecosystems.

PatchFinder: A Two-Phase Approach to Security Patch Tracing for Disclosed Vulnerabilities in Open-Source Software

Jul 24, 2024

Abstract:Open-source software (OSS) vulnerabilities are increasingly prevalent, emphasizing the importance of security patches. However, in widely used security platforms like NVD, a substantial number of CVE records still lack trace links to patches. Although rank-based approaches have been proposed for security patch tracing, they heavily rely on handcrafted features in a single-step framework, which limits their effectiveness. In this paper, we propose PatchFinder, a two-phase framework with end-to-end correlation learning for better-tracing security patches. In the **initial retrieval** phase, we employ a hybrid patch retriever to account for both lexical and semantic matching based on the code changes and the description of a CVE, to narrow down the search space by extracting those commits as candidates that are similar to the CVE descriptions. Afterwards, in the **re-ranking** phase, we design an end-to-end architecture under the supervised fine-tuning paradigm for learning the semantic correlations between CVE descriptions and commits. In this way, we can automatically rank the candidates based on their correlation scores while maintaining low computation overhead. We evaluated our system against 4,789 CVEs from 532 OSS projects. The results are highly promising: PatchFinder achieves a Recall@10 of 80.63% and a Mean Reciprocal Rank (MRR) of 0.7951. Moreover, the Manual Effort@10 required is curtailed to 2.77, marking a 1.94 times improvement over current leading methods. When applying PatchFinder in practice, we initially identified 533 patch commits and submitted them to the official, 482 of which have been confirmed by CVE Numbering Authorities.
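The two ranking metrics quoted in this abstract, Recall@10 and Mean Reciprocal Rank (MRR), can be illustrated with a minimal sketch. It assumes each CVE has a single correct patch commit and that we know its 1-based rank in the returned list (or `None` if it was not retrieved); the paper's exact definitions may differ, for example when a CVE has several patches. The function names and the sample ranks are hypothetical and are not taken from PatchFinder.

```python
from typing import Optional, Sequence


def recall_at_k(ranks: Sequence[Optional[int]], k: int) -> float:
    """Fraction of queries whose correct item is ranked within the top k.

    Assumption: one correct patch per CVE; `ranks` holds its 1-based rank,
    or None when the patch was not retrieved at all.
    """
    hits = sum(1 for r in ranks if r is not None and r <= k)
    return hits / len(ranks)


def mean_reciprocal_rank(ranks: Sequence[Optional[int]]) -> float:
    """Average of 1/rank over all queries; a missing item contributes 0."""
    total = sum(1.0 / r for r in ranks if r is not None)
    return total / len(ranks)


# Hypothetical ranks of the true patch for five CVEs (illustrative only).
example_ranks = [1, 3, None, 2, 12]
recall10 = recall_at_k(example_ranks, 10)
mrr = mean_reciprocal_rank(example_ranks)
print(f"Recall@10 = {recall10:.2f}, MRR = {mrr:.4f}")
```

In this toy input, three of the five patches fall inside the top 10, and the MRR rewards the patches ranked first or second far more than the one ranked twelfth.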

An Empirical Study of Bugs in Open-Source Federated Learning Framework

Aug 09, 2023

Abstract:Federated learning (FL), as a decentralized machine learning solution to the protection of users' private data, has become an important learning paradigm in recent years, especially since the enforcement of stricter laws and regulations in most countries. Therefore, a variety of FL frameworks are released to facilitate the development and application of federated learning. Despite the considerable amount of research on the security and privacy of FL models and systems, the security issues in FL frameworks have not been systematically studied yet. In this paper, we conduct the first empirical study on 1,112 FL framework bugs to investigate their characteristics. These bugs are manually collected, classified, and labeled from 12 open-source FL frameworks on GitHub. In detail, we construct taxonomies of 15 symptoms, 12 root causes, and 20 fix patterns of these bugs and investigate their correlations and distributions on 23 logical components and two main application scenarios. From the results of our study, we present nine findings, discuss their implications, and propound several suggestions to FL framework developers and security researchers on the FL frameworks.

Towards Understanding and Mitigating Audio Adversarial Examples for Speaker Recognition

Jun 07, 2022

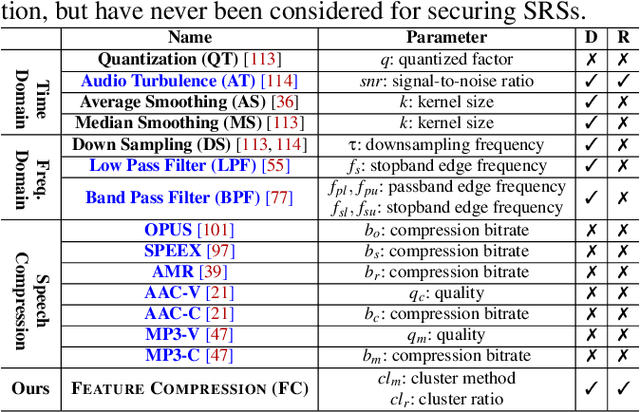

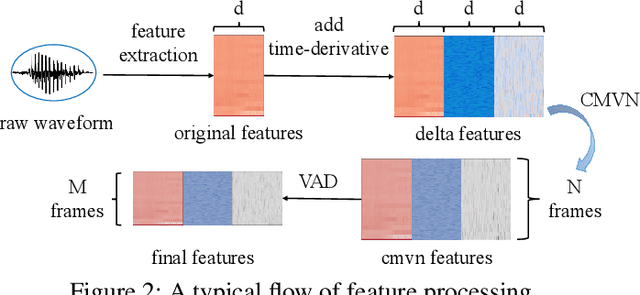

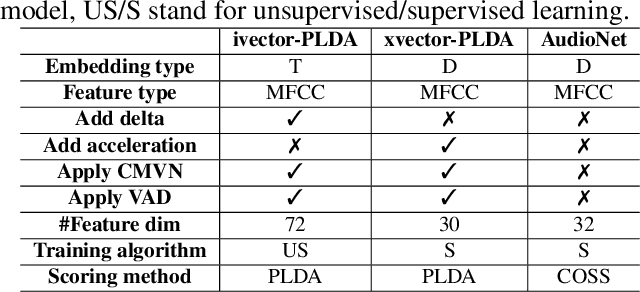

Abstract:Speaker recognition systems (SRSs) have recently been shown to be vulnerable to adversarial attacks, raising significant security concerns. In this work, we systematically investigate transformation and adversarial training based defenses for securing SRSs. According to the characteristic of SRSs, we present 22 diverse transformations and thoroughly evaluate them using 7 recent promising adversarial attacks (4 white-box and 3 black-box) on speaker recognition. With careful regard for best practices in defense evaluations, we analyze the strength of transformations to withstand adaptive attacks. We also evaluate and understand their effectiveness against adaptive attacks when combined with adversarial training. Our study provides lots of useful insights and findings, many of them are new or inconsistent with the conclusions in the image and speech recognition domains, e.g., variable and constant bit rate speech compressions have different performance, and some non-differentiable transformations remain effective against current promising evasion techniques which often work well in the image domain. We demonstrate that the proposed novel feature-level transformation combined with adversarial training is rather effective compared to the sole adversarial training in a complete white-box setting, e.g., increasing the accuracy by 13.62% and attack cost by two orders of magnitude, while other transformations do not necessarily improve the overall defense capability. This work sheds further light on the research directions in this field. We also release our evaluation platform SPEAKERGUARD to foster further research.

AS2T: Arbitrary Source-To-Target Adversarial Attack on Speaker Recognition Systems

Jun 07, 2022

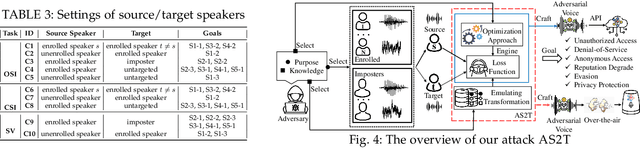

Abstract:Recent work has illuminated the vulnerability of speaker recognition systems (SRSs) against adversarial attacks, raising significant security concerns in deploying SRSs. However, they considered only a few settings (e.g., some combinations of source and target speakers), leaving many interesting and important settings in real-world attack scenarios alone. In this work, we present AS2T, the first attack in this domain which covers all the settings, thus allows the adversary to craft adversarial voices using arbitrary source and target speakers for any of three main recognition tasks. Since none of the existing loss functions can be applied to all the settings, we explore many candidate loss functions for each setting including the existing and newly designed ones. We thoroughly evaluate their efficacy and find that some existing loss functions are suboptimal. Then, to improve the robustness of AS2T towards practical over-the-air attack, we study the possible distortions occurred in over-the-air transmission, utilize different transformation functions with different parameters to model those distortions, and incorporate them into the generation of adversarial voices. Our simulated over-the-air evaluation validates the effectiveness of our solution in producing robust adversarial voices which remain effective under various hardware devices and various acoustic environments with different reverberation, ambient noises, and noise levels. Finally, we leverage AS2T to perform thus far the largest-scale evaluation to understand transferability among 14 diverse SRSs. The transferability analysis provides many interesting and useful insights which challenge several findings and conclusion drawn in previous works in the image domain. Our study also sheds light on future directions of adversarial attacks in the speaker recognition domain.

Talking Head Generation Driven by Speech-Related Facial Action Units and Audio- Based on Multimodal Representation Fusion

Apr 27, 2022

Abstract:Talking head generation is to synthesize a lip-synchronized talking head video by inputting an arbitrary face image and corresponding audio clips. Existing methods ignore not only the interaction and relationship of cross-modal information, but also the local driving information of the mouth muscles. In this study, we propose a novel generative framework that contains a dilated non-causal temporal convolutional self-attention network as a multimodal fusion module to promote the relationship learning of cross-modal features. In addition, our proposed method uses both audio- and speech-related facial action units (AUs) as driving information. Speech-related AU information can guide mouth movements more accurately. Because speech is highly correlated with speech-related AUs, we propose an audio-to-AU module to predict speech-related AU information. We utilize pre-trained AU classifier to ensure that the generated images contain correct AU information. We verify the effectiveness of the proposed model on the GRID and TCD-TIMIT datasets. An ablation study is also conducted to verify the contribution of each component. The results of quantitative and qualitative experiments demonstrate that our method outperforms existing methods in terms of both image quality and lip-sync accuracy.

Talking Head Generation with Audio and Speech Related Facial Action Units

Oct 19, 2021

Abstract:The task of talking head generation is to synthesize a lip synchronized talking head video by inputting an arbitrary face image and audio clips. Most existing methods ignore the local driving information of the mouth muscles. In this paper, we propose a novel recurrent generative network that uses both audio and speech-related facial action units (AUs) as the driving information. AU information related to the mouth can guide the movement of the mouth more accurately. Since speech is highly correlated with speech-related AUs, we propose an Audio-to-AU module in our system to predict the speech-related AU information from speech. In addition, we use AU classifier to ensure that the generated images contain correct AU information. Frame discriminator is also constructed for adversarial training to improve the realism of the generated face. We verify the effectiveness of our model on the GRID dataset and TCD-TIMIT dataset. We also conduct an ablation study to verify the contribution of each component in our model. Quantitative and qualitative experiments demonstrate that our method outperforms existing methods in both image quality and lip-sync accuracy.

SEC4SR: A Security Analysis Platform for Speaker Recognition

Sep 04, 2021

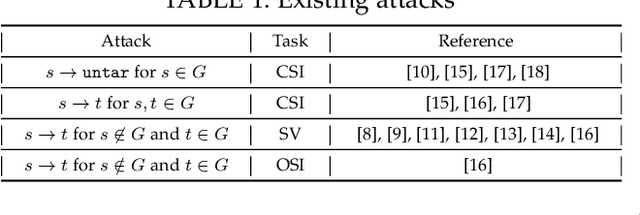

Abstract:Adversarial attacks have been expanded to speaker recognition (SR). However, existing attacks are often assessed using different SR models, recognition tasks and datasets, and only few adversarial defenses borrowed from computer vision are considered. Yet, these defenses have not been thoroughly evaluated against adaptive attacks. Thus, there is still a lack of quantitative understanding about the strengths and limitations of adversarial attacks and defenses. More effective defenses are also required for securing SR systems. To bridge this gap, we present SEC4SR, the first platform enabling researchers to systematically and comprehensively evaluate adversarial attacks and defenses in SR. SEC4SR incorporates 4 white-box and 2 black-box attacks, 24 defenses including our novel feature-level transformations. It also contains techniques for mounting adaptive attacks. Using SEC4SR, we conduct thus far the largest-scale empirical study on adversarial attacks and defenses in SR, involving 23 defenses, 15 attacks and 4 attack settings. Our study provides lots of useful findings that may advance future research: such as (1) all the transformations slightly degrade accuracy on benign examples and their effectiveness vary with attacks; (2) most transformations become less effective under adaptive attacks, but some transformations become more effective; (3) few transformations combined with adversarial training yield stronger defenses over some but not all attacks, while our feature-level transformation combined with adversarial training yields the strongest defense over all the attacks. Extensive experiments demonstrate capabilities and advantages of SEC4SR which can benefit future research in SR.

Why an Android App is Classified as Malware? Towards Malware Classification Interpretation

Apr 24, 2020

Abstract:Machine learning (ML) based approach is considered as one of the most promising techniques for Android malware detection and has achieved high accuracy by leveraging commonly-used features. In practice, most of the ML classifications only provide a binary label to mobile users and app security analysts. However, stakeholders are more interested in the reason why apps are classified as malicious in both academia and industry. This belongs to the research area of interpretable ML but in a specific research domain (i.e., mobile malware detection). Although several interpretable ML methods have been exhibited to explain the final classification results in many cutting-edge Artificial Intelligent (AI) based research fields, till now, there is no study interpreting why an app is classified as malware or unveiling the domain-specific challenges. In this paper, to fill this gap, we propose a novel and interpretable ML-based approach (named XMal) to classify malware with high accuracy and explain the classification result meanwhile. (1) The first classification phase of XMal hinges multi-layer perceptron (MLP) and attention mechanism, and also pinpoints the key features most related to the classification result. (2) The second interpreting phase aims at automatically producing neural language descriptions to interpret the core malicious behaviors within apps. We evaluate the behavior description results by comparing with the existing interpretable ML-based methods (i.e., Drebin and LIME) to demonstrate the effectiveness of XMal. We find that XMal is able to reveal the malicious behaviors more accurately. Additionally, our experiments show that XMal can also interpret the reason why some samples are misclassified by ML classifiers. Our study peeks into the interpretable ML through the research of Android malware detection and analysis.

Advanced Evasion Attacks and Mitigations on Practical ML-Based Phishing Website Classifiers

Apr 15, 2020

Abstract:Machine learning (ML) based approaches have been the mainstream solution for anti-phishing detection. When they are deployed on the client-side, ML-based classifiers are vulnerable to evasion attacks. However, such potential threats have received relatively little attention because existing attacks destruct the functionalities or appearance of webpages and are conducted in the white-box scenario, making it less practical. Consequently, it becomes imperative to understand whether it is possible to launch evasion attacks with limited knowledge of the classifier, while preserving the functionalities and appearance. In this work, we show that even in the grey-, and black-box scenarios, evasion attacks are not only effective on practical ML-based classifiers, but can also be efficiently launched without destructing the functionalities and appearance. For this purpose, we propose three mutation-based attacks, differing in the knowledge of the target classifier, addressing a key technical challenge: automatically crafting an adversarial sample from a known phishing website in a way that can mislead classifiers. To launch attacks in the white- and grey-box scenarios, we also propose a sample-based collision attack to gain the knowledge of the target classifier. We demonstrate the effectiveness and efficiency of our evasion attacks on the state-of-the-art, Google's phishing page filter, achieved 100% attack success rate in less than one second per website. Moreover, the transferability attack on BitDefender's industrial phishing page classifier, TrafficLight, achieved up to 81.25% attack success rate. We further propose a similarity-based method to mitigate such evasion attacks, Pelican. We demonstrate that Pelican can effectively detect evasion attacks. Our findings contribute to design more robust phishing website classifiers in practice.