Sebastian Schelter

Cost-Efficient RAG for Entity Matching with LLMs: A Blocking-based Exploration

Feb 05, 2026

Abstract:Retrieval-augmented generation (RAG) enhances LLM reasoning in knowledge-intensive tasks, but existing RAG pipelines incur substantial retrieval and generation overhead when applied to large-scale entity matching. To address this limitation, we introduce CE-RAG4EM, a cost-efficient RAG architecture that reduces computation through blocking-based batch retrieval and generation. We also present a unified framework for analyzing and evaluating RAG systems for entity matching, focusing on blocking-aware optimizations and retrieval granularity. Extensive experiments suggest that CE-RAG4EM can achieve comparable or improved matching quality while substantially reducing end-to-end runtime relative to strong baselines. Our analysis further reveals that key configuration parameters introduce an inherent trade-off between performance and overhead, offering practical guidance for designing efficient and scalable RAG systems for entity matching and data integration.
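
The idea of blocking-based batch retrieval mentioned in the abstract above can be illustrated with a minimal sketch. This is not the CE-RAG4EM implementation: the blocking key (first token of a `title` field), the record layout, and the `fake_retrieve` stand-in are all assumptions made for illustration. The sketch only shows why grouping candidate pairs into blocks lets one retrieval call serve many pairs.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Record = Dict[str, str]
Pair = Tuple[Record, Record]


def blocking_key(record: Record) -> str:
    """Hypothetical blocking key: the first lowercased token of the title."""
    tokens = record.get("title", "").lower().split()
    return tokens[0] if tokens else ""


def group_pairs_by_block(pairs: List[Pair]) -> Dict[str, List[Pair]]:
    """Group candidate pairs by the blocking key of their left record."""
    blocks: Dict[str, List[Pair]] = defaultdict(list)
    for left, right in pairs:
        blocks[blocking_key(left)].append((left, right))
    return dict(blocks)


def retrieve_per_block(
    blocks: Dict[str, List[Pair]], retrieve: Callable[[str], str]
) -> Dict[str, str]:
    """Call the retriever once per block so all pairs in a block share one context."""
    return {key: retrieve(key) for key in blocks}


# Stand-in retriever (an assumption): it only records how often it is called.
calls: List[str] = []


def fake_retrieve(query: str) -> str:
    calls.append(query)
    return f"context for '{query}'"


pairs = [
    ({"title": "Canon EOS 90D"}, {"title": "canon eos 90d body"}),
    ({"title": "Canon EOS R5"}, {"title": "Canon R5 camera"}),
    ({"title": "Nikon Z6 II"}, {"title": "nikon z6ii"}),
    ({"title": "Nikon D850"}, {"title": "Nikon D850 DSLR"}),
]

blocks = group_pairs_by_block(pairs)
contexts = retrieve_per_block(blocks, fake_retrieve)
print(len(pairs), "pairs,", len(calls), "retrieval calls")
```

In this toy input the four candidate pairs fall into two blocks, so the retriever is called twice rather than once per pair. How coarse the blocks are is the kind of configuration choice that trades matching quality against overhead.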

SemPipes -- Optimizable Semantic Data Operators for Tabular Machine Learning Pipelines

Feb 04, 2026

Abstract:Real-world machine learning on tabular data relies on complex data preparation pipelines for prediction, data integration, augmentation, and debugging. Designing these pipelines requires substantial domain expertise and engineering effort, motivating the question of how large language models (LLMs) can support tabular ML through code synthesis. We introduce SemPipes, a novel declarative programming model that integrates LLM-powered semantic data operators into tabular ML pipelines. Semantic operators specify data transformations in natural language while delegating execution to a runtime system. During training, SemPipes synthesizes custom operator implementations based on data characteristics, operator instructions, and pipeline context. This design enables the automatic optimization of data operations in a pipeline via LLM-based code synthesis guided by evolutionary search. We evaluate SemPipes across diverse tabular ML tasks and show that semantic operators substantially improve end-to-end predictive performance for both expert-designed and agent-generated pipelines, while reducing pipeline complexity. We implement SemPipes in Python and release it at https://github.com/deem-data/sempipes/tree/v1.

Towards Cross-Modal Error Detection with Tables and Images

Oct 14, 2025

Abstract:Ensuring data quality at scale remains a persistent challenge for large organizations. Despite recent advances, maintaining accurate and consistent data is still complex, especially when dealing with multiple data modalities. Traditional error detection and correction methods tend to focus on a single modality, typically a table, and often miss cross-modal errors that are common in domains like e-Commerce and healthcare, where image, tabular, and text data co-exist. To address this gap, we take an initial step towards cross-modal error detection in tabular data, by benchmarking several methods. Our evaluation spans four datasets and five baseline approaches. Among them, Cleanlab, a label error detection framework, and DataScope, a data valuation method, perform the best when paired with a strong AutoML framework, achieving the highest F1 scores. Our findings indicate that current methods remain limited, particularly when applied to heavy-tailed real-world data, motivating further research in this area.

Towards a Real-World Aligned Benchmark for Unlearning in Recommender Systems

Aug 23, 2025

Abstract:Modern recommender systems heavily leverage user interaction data to deliver personalized experiences. However, relying on personal data presents challenges in adhering to privacy regulations, such as the GDPR's "right to be forgotten". Machine unlearning (MU) aims to address these challenges by enabling the efficient removal of specific training data from models post-training, without compromising model utility or leaving residual information. However, current benchmarks for unlearning in recommender systems -- most notably CURE4Rec -- fail to reflect real-world operational demands. They focus narrowly on collaborative filtering, overlook tasks like session-based and next-basket recommendation, simulate unrealistically large unlearning requests, and ignore critical efficiency constraints. In this paper, we propose a set of design desiderata and research questions to guide the development of a more realistic benchmark for unlearning in recommender systems, with the goal of gathering feedback from the research community. Our benchmark proposal spans multiple recommendation tasks, includes domain-specific unlearning scenarios, and several unlearning algorithms -- including ones adapted from a recent NeurIPS unlearning competition. Furthermore, we argue for an unlearning setup that reflects the sequential, time-sensitive nature of real-world deletion requests. We also present a preliminary experiment in a next-basket recommendation setting based on our proposed desiderata and find that unlearning also works for sequential recommendation models exposed to many small unlearning requests. In this case, we observe that a modification of a custom-designed unlearning algorithm for recommender systems outperforms general unlearning algorithms significantly, and that unlearning can be executed with a latency of only several seconds.

scSSL-Bench: Benchmarking Self-Supervised Learning for Single-Cell Data

Jun 10, 2025

Abstract:Self-supervised learning (SSL) has proven to be a powerful approach for extracting biologically meaningful representations from single-cell data. To advance our understanding of SSL methods applied to single-cell data, we present scSSL-Bench, a comprehensive benchmark that evaluates nineteen SSL methods. Our evaluation spans nine datasets and focuses on three common downstream tasks: batch correction, cell type annotation, and missing modality prediction. Furthermore, we systematically assess various data augmentation strategies. Our analysis reveals task-specific trade-offs: the specialized single-cell frameworks scVI, CLAIRE, and the fine-tuned scGPT excel at uni-modal batch correction, while generic SSL methods, such as VICReg and SimCLR, demonstrate superior performance in cell typing and multi-modal data integration. Random masking emerges as the most effective augmentation technique across all tasks, surpassing domain-specific augmentations. Notably, our results indicate the need for a specialized single-cell multi-modal data integration framework. scSSL-Bench provides a standardized evaluation platform and concrete recommendations for applying SSL to single-cell analysis, advancing the convergence of deep learning and single-cell genomics.
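
As a rough illustration of the random masking augmentation named in the abstract above, the sketch below zeroes out a random fraction of entries in a cell-by-gene expression matrix to produce two different views of the same cells. The function name `random_mask`, the 20% mask fraction, and the matrix shape are assumptions for illustration and are not taken from scSSL-Bench.

```python
import numpy as np


def random_mask(expression, mask_fraction=0.2, rng=None):
    """Return a copy of `expression` with a random fraction of entries set to zero.

    `mask_fraction` is an illustrative default, not a value from the benchmark.
    """
    rng = np.random.default_rng() if rng is None else rng
    # Each entry is kept independently with probability 1 - mask_fraction.
    keep = rng.random(expression.shape) >= mask_fraction
    return expression * keep


# Toy expression matrix: 100 cells by 50 genes, all ones so masked entries are easy to see.
cells = np.ones((100, 50))
rng = np.random.default_rng(0)

# Two independently masked views of the same cells, as used in contrastive SSL setups.
view_a = random_mask(cells, rng=rng)
view_b = random_mask(cells, rng=rng)
print("masked share in view_a:", float((view_a == 0).mean()))
```

Each call draws a fresh mask, so the two views differ while describing the same underlying cells.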

Understanding Visual Saliency of Outlier Items in Product Search

Mar 30, 2025

Abstract:In two-sided marketplaces, items compete for user attention, which translates to revenue for suppliers. Item exposure, indicated by the amount of attention items receive in a ranking, can be influenced by factors like position bias. Recent work suggests that inter-item dependencies, such as outlier items in a ranking, also affect item exposure. Outlier items are items that observably deviate from the other items in a ranked list. Understanding outlier items is crucial for determining an item's exposure distribution. In our previous work, we investigated the impact of different presentational features on users' perception of outliers in search results. In this work, we focus on two key questions left unanswered by our previous work: (i) What is the effect of isolated bottom-up visual factors on item outlierness in product lists? (ii) How do top-down factors influence users' perception of item outlierness in a realistic online shopping scenario? We start with bottom-up factors and employ visual saliency models to evaluate their ability to detect outlier items in product lists purely based on visual attributes. Then, to examine top-down factors, we conduct eye-tracking experiments on an online shopping task. Moreover, we employ eye-tracking to not only be closer to the real-world case but also to address the accuracy problem of reaction time in the visual search task. Our experiments show the ability of visual saliency models to detect bottom-up factors, consistently highlighting areas with strong visual contrasts. The results of our eye-tracking experiment for lists without outliers show that despite being less visually attractive, product descriptions captured attention the fastest, indicating the importance of top-down factors. In our eye-tracking experiments, we observed that outlier items engaged users for longer durations compared to non-outlier items.

AnyMatch -- Efficient Zero-Shot Entity Matching with a Small Language Model

Sep 09, 2024

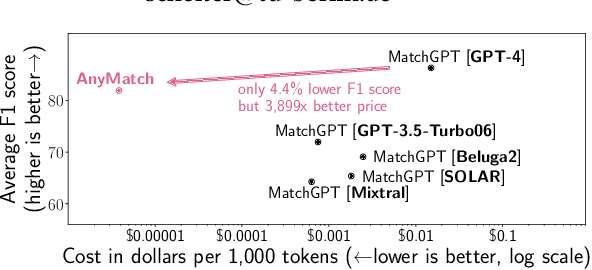

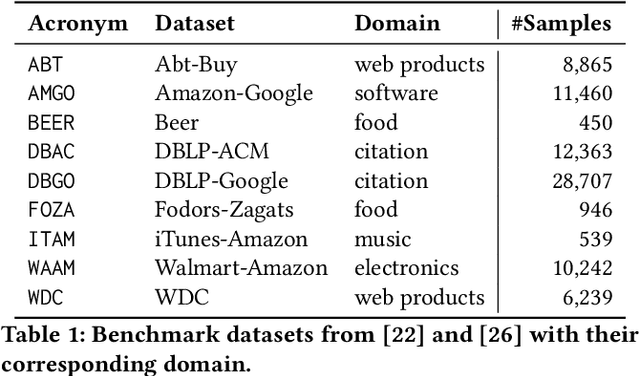

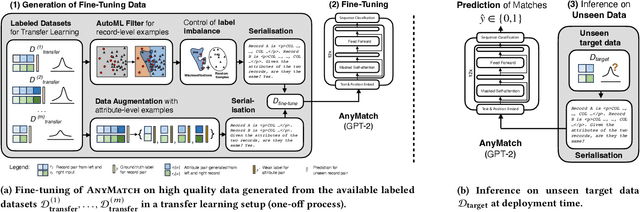

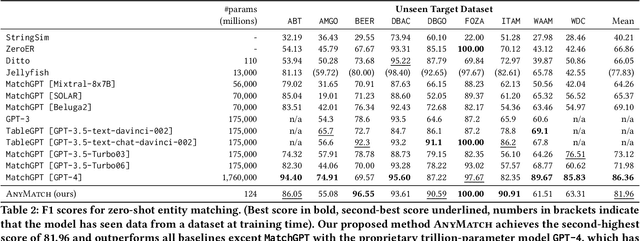

Abstract:Entity matching (EM) is the problem of determining whether two records refer to the same real-world entity, which is crucial in data integration, e.g., for product catalogs or address databases. A major drawback of many EM approaches is their dependence on labelled examples. We thus focus on the challenging setting of zero-shot entity matching where no labelled examples are available for an unseen target dataset. Recently, large language models (LLMs) have shown promising results for zero-shot EM, but their low throughput and high deployment cost limit their applicability and scalability. We revisit the zero-shot EM problem with AnyMatch, a small language model fine-tuned in a transfer learning setup. We propose several novel data selection techniques to generate fine-tuning data for our model, e.g., by selecting difficult pairs to match via an AutoML filter, by generating additional attribute-level examples, and by controlling label imbalance in the data. We conduct an extensive evaluation of the prediction quality and deployment cost of our model, in a comparison to thirteen baselines on nine benchmark datasets. We find that AnyMatch provides competitive prediction quality despite its small parameter size: it achieves the second-highest F1 score overall, and outperforms several other approaches that employ models with hundreds of billions of parameters. Furthermore, our approach exhibits major cost benefits: the average prediction quality of AnyMatch is within 4.4% of the state-of-the-art method MatchGPT with the proprietary trillion-parameter model GPT-4, yet AnyMatch requires four orders of magnitude fewer parameters and incurs a 3,899 times lower inference cost (in dollars per 1,000 tokens).

Towards Interactively Improving ML Data Preparation Code via "Shadow Pipelines"

Apr 30, 2024

Abstract:Data scientists develop ML pipelines in an iterative manner: they repeatedly screen a pipeline for potential issues, debug it, and then revise and improve its code according to their findings. However, this manual process is tedious and error-prone. Therefore, we propose to support data scientists during this development cycle with automatically derived interactive suggestions for pipeline improvements. We discuss our vision to generate these suggestions with so-called shadow pipelines, hidden variants of the original pipeline that modify it to auto-detect potential issues, try out modifications for improvements, and suggest and explain these modifications to the user. We envision applying incremental view maintenance-based optimisations to ensure low-latency computation and maintenance of the shadow pipelines. We conduct preliminary experiments to showcase the feasibility of our envisioned approach and the potential benefits of our proposed optimisations.

Hierarchical Forecasting at Scale

Oct 19, 2023

Abstract:Existing hierarchical forecasting techniques scale poorly when the number of time series increases. We propose to learn a coherent forecast for millions of time series with a single bottom-level forecast model by using a sparse loss function that directly optimizes the hierarchical product and/or temporal structure. The benefit of our sparse hierarchical loss function is that it provides practitioners with a method of producing bottom-level forecasts that are coherent to any chosen cross-sectional or temporal hierarchy. In addition, removing the need for a post-processing step as required in traditional hierarchical forecasting techniques reduces the computational cost of the prediction phase in the forecasting pipeline. On the public M5 dataset, our sparse hierarchical loss function performs up to 10% (RMSE) better compared to the baseline loss function. We implement our sparse hierarchical loss function within an existing forecasting model at bol, a large European e-commerce platform, resulting in an improved forecasting performance of 2% at the product level. Finally, we found an increase in forecasting performance of about 5-10% when evaluating the forecasting performance across the cross-sectional hierarchies that we defined. These results demonstrate the usefulness of our sparse hierarchical loss applied to a production forecasting system at a major e-commerce platform.
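
To make the notion of a loss over a hierarchy more concrete, here is a minimal sketch, assuming a two-level cross-sectional hierarchy (total, groups, bottom series) and a plain squared error. It is not the loss function from the paper: the names `summing_matrix` and `hierarchical_squared_error` are hypothetical, and the aggregation matrix is dense here for readability, whereas the paper describes a sparse formulation.

```python
import numpy as np


def summing_matrix(groups: np.ndarray) -> np.ndarray:
    """Build an aggregation matrix with one row for the total, one row per group,
    and one row per bottom-level series.

    `groups[i]` is the (0-based) group index of bottom-level series i.
    """
    n = groups.shape[0]
    n_groups = int(groups.max()) + 1
    total_row = np.ones((1, n))
    group_rows = np.zeros((n_groups, n))
    group_rows[groups, np.arange(n)] = 1.0
    return np.vstack([total_row, group_rows, np.eye(n)])


def hierarchical_squared_error(y_true, y_pred, S) -> float:
    """Mean squared error of bottom-level forecasts after aggregating to all levels."""
    residual = S @ y_true - S @ y_pred
    return float(np.mean(residual ** 2))


# Three bottom-level series: the first two belong to group 0, the third to group 1.
groups = np.array([0, 0, 1])
S = summing_matrix(groups)

y_true = np.array([1.0, 2.0, 3.0])
y_pred = np.array([2.0, 1.0, 3.0])  # errors cancel within group 0

loss = hierarchical_squared_error(y_true, y_pred, S)
print(S.shape, loss)
```

Because every aggregate is computed from the bottom-level forecasts themselves, the forecasts are coherent by construction and no reconciliation step is needed afterwards. In the toy input the two bottom-level errors cancel at the group and total levels, so only the bottom-level rows contribute to the loss.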

Improving Retrieval-Augmented Large Language Models via Data Importance Learning

Jul 06, 2023

Abstract:Retrieval augmentation enables large language models to take advantage of external knowledge, for example on tasks like question answering and data imputation. However, the performance of such retrieval-augmented models is limited by the data quality of their underlying retrieval corpus. In this paper, we propose an algorithm based on multilinear extension for evaluating the data importance of retrieved data points. There are exponentially many terms in the multilinear extension, and one key contribution of this paper is a polynomial time algorithm that computes exactly, given a retrieval-augmented model with an additive utility function and a validation set, the data importance of data points in the retrieval corpus using the multilinear extension of the model's utility function. We further propose an even more efficient (ε, δ)-approximation algorithm. Our experimental results illustrate that we can enhance the performance of large language models by only pruning or reweighting the retrieval corpus, without requiring further training. For some tasks, this even allows a small model (e.g., GPT-JT), augmented with a search engine API, to outperform GPT-3.5 (without retrieval augmentation). Moreover, we show that weights based on multilinear extension can be computed efficiently in practice (e.g., in less than ten minutes for a corpus with 100 million elements).
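
The remark above that the multilinear extension has exponentially many terms can be seen in a brute-force sketch. The code below evaluates the standard definition, the expected utility when each item i is included independently with probability w_i, by enumerating all 2^n subsets. It is not the polynomial-time algorithm from the paper; the function name and the toy utility (a plain sum of per-item values, chosen only because its extension has a known closed form) are assumptions for illustration.

```python
from itertools import combinations
from typing import Callable, FrozenSet, Sequence


def multilinear_extension(
    utility: Callable[[FrozenSet[int]], float], weights: Sequence[float]
) -> float:
    """Evaluate sum over subsets S of U(S) * prod_{i in S} w_i * prod_{i not in S} (1 - w_i).

    Enumerates all 2^n subsets, so it is only feasible for very small n.
    """
    n = len(weights)
    total = 0.0
    for size in range(n + 1):
        for subset in combinations(range(n), size):
            chosen = frozenset(subset)
            # Probability that exactly this subset is selected.
            prob = 1.0
            for i, w in enumerate(weights):
                prob *= w if i in chosen else (1.0 - w)
            total += prob * utility(chosen)
    return total


# Toy utility (an assumption): the value of a subset is the sum of its item values.
values = [1.0, 2.0, 4.0]


def toy_utility(subset: FrozenSet[int]) -> float:
    return sum(values[i] for i in subset)


weights = [0.5, 0.25, 1.0]
result = multilinear_extension(toy_utility, weights)

# For this sum-of-values utility the extension reduces to sum_i w_i * values[i].
closed_form = sum(w * v for w, v in zip(weights, values))
print(result, closed_form)
```

The brute-force loop visits 8 subsets for 3 items and would visit 2^n in general, which is why an exact polynomial-time algorithm matters for corpora of realistic size.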
