Ruiqian Li

MARMOT: Masked Autoencoder for Modeling Transient Imaging

Jun 10, 2025

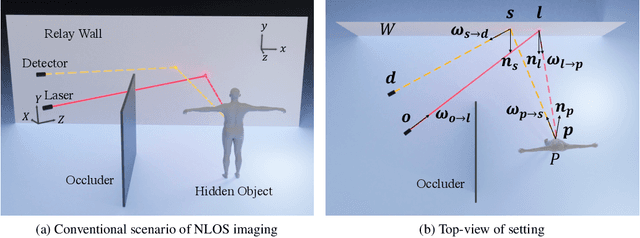

Abstract: Pretrained models have demonstrated impressive success in many modalities such as language and vision. Recent works have brought the pretraining paradigm to imaging research. Transients are a novel modality: photon counts versus arrival times, captured for an object using a precisely time-resolved sensor. In non-line-of-sight (NLOS) scenarios in particular, transients of hidden objects are measured beyond the sensor's direct line of sight. Given NLOS transients, most previous works optimize volume density or surfaces to reconstruct the hidden objects and do not transfer priors learned from datasets. In this work, we present a masked autoencoder for modeling transient imaging, or MARMOT, to facilitate NLOS applications. MARMOT is a self-supervised model pretrained on massive and diverse NLOS transient datasets. Using a Transformer-based encoder-decoder, MARMOT learns features from partially masked transients via a scanning pattern mask (SPM), where the unmasked subset is functionally equivalent to arbitrary sampling, and predicts the full measurements. Pretrained on TransVerse, a synthesized transient dataset of 500K 3D models, MARMOT adapts to downstream imaging tasks through direct feature transfer or decoder finetuning. Comprehensive experiments compare MARMOT with state-of-the-art methods, and quantitative and qualitative results demonstrate its efficiency.
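
The masking idea behind MARMOT can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the MARMOT implementation. The data layout (one time histogram per scan point on an H x W grid), the stride-based pattern in the hypothetical `scanning_pattern_mask`, the random alternative, and the MAE-style loss restricted to masked points are all illustrative choices. The abstract does not specify the actual SPM patterns or training loss.

```python
import random


def scanning_pattern_mask(height, width, stride, offset=(0, 0)):
    """Hypothetical SPM: keep only scan points on a regular grid with the given stride.

    Returns a height x width grid of booleans, where True means visible (unmasked).
    """
    oy, ox = offset
    return [[(y % stride == oy) and (x % stride == ox) for x in range(width)]
            for y in range(height)]


def random_pattern_mask(height, width, keep_ratio, seed=0):
    """Hypothetical alternative pattern: keep a random subset of scan points."""
    rng = random.Random(seed)
    n_keep = round(height * width * keep_ratio)
    kept = set(rng.sample(range(height * width), n_keep))
    return [[(y * width + x) in kept for x in range(width)] for y in range(height)]


def apply_mask(transients, mask):
    """Zero the histogram at every masked scan point; transients[y][x] is a list of time bins."""
    return [[list(hist) if mask[y][x] else [0.0] * len(hist)
             for x, hist in enumerate(row)]
            for y, row in enumerate(transients)]


def masked_mse(pred, target, mask):
    """MAE-style loss: mean squared error over the masked (hidden) scan points only."""
    total, count = 0.0, 0
    for y, row in enumerate(target):
        for x, hist in enumerate(row):
            if not mask[y][x]:
                total += sum((p - t) ** 2 for p, t in zip(pred[y][x], hist))
                count += len(hist)
    return total / count if count else 0.0
```

For example, `scanning_pattern_mask(8, 8, 4)` keeps 4 of 64 scan points. An encoder would see only those points, and a decoder would be trained to predict the other 60.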

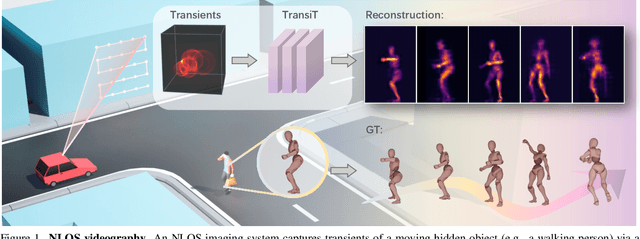

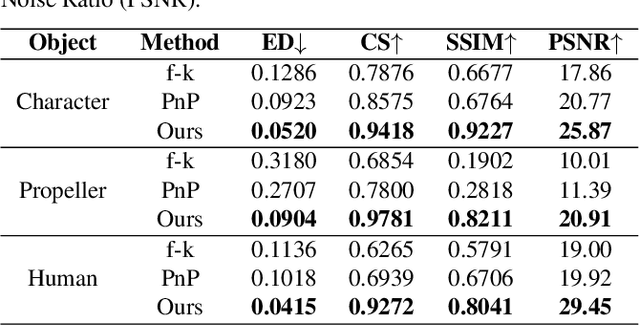

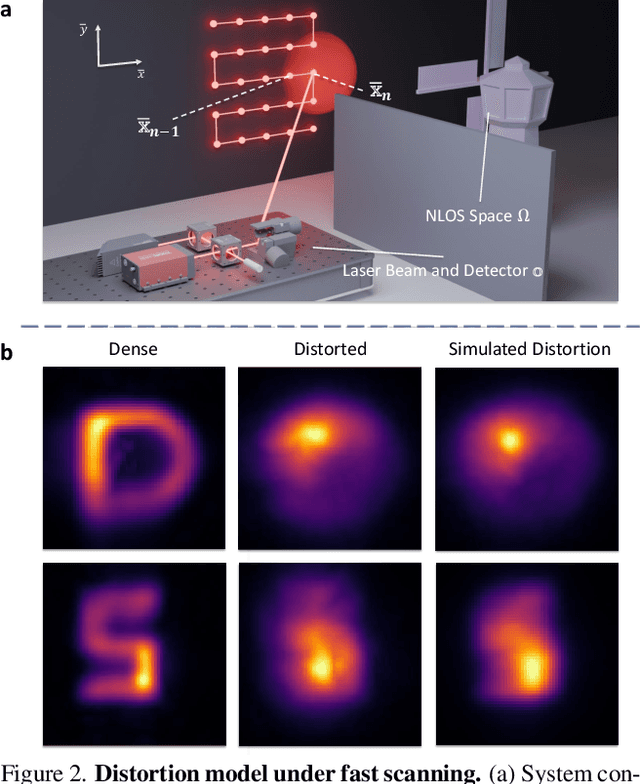

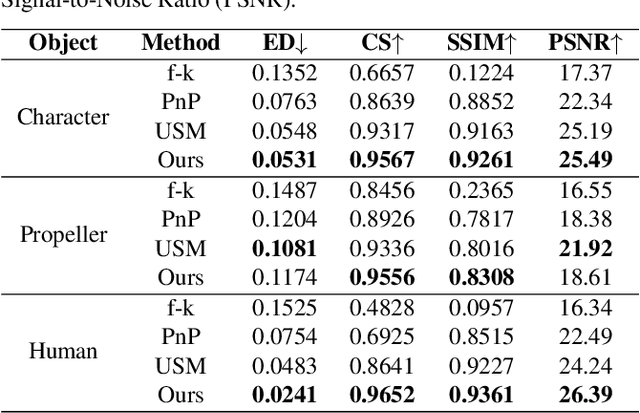

TransiT: Transient Transformer for Non-line-of-sight Videography

Mar 14, 2025

Abstract: High-quality, high-speed videography using non-line-of-sight (NLOS) imaging benefits autonomous navigation, collision prevention, and post-disaster search and rescue. Current solutions must trade off frame rate against image quality. High frame rates, for example, can be achieved by reducing either the per-point scanning time or the scanning density, but at the cost of lowering the information density of individual frames. Fast scanning further reduces the signal-to-noise ratio, and different scanning systems exhibit different distortion characteristics. In this work, we design and employ a new Transient Transformer architecture called TransiT to achieve real-time NLOS recovery under fast scans. TransiT directly compresses the temporal dimension of input transients to extract features, reducing computation costs and meeting high frame rate requirements. It further adopts a feature fusion mechanism and employs a spatial-temporal Transformer to help capture features of NLOS transient videos. Moreover, TransiT applies transfer learning to bridge the gap between synthetic and real-measured data. In real experiments, TransiT reconstructs NLOS videos at $64 \times 64$ resolution and 10 frames per second from sparse $16 \times 16$ transients measured with an exposure time of 0.4 ms per point. We will make our code and dataset available to the community.
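
Two quantities from the TransiT abstract can be made concrete. At the reported setting, one frame needs about $16 \times 16 \times 0.4 = 102.4$ ms of exposure, which is just under 10 frames per second. If scanner overhead is ignored, this roughly matches the reported rate. The second helper, `compress_temporal`, is a hypothetical fixed stand-in for temporal compression that sums groups of adjacent time bins. TransiT's compression is part of its learned architecture and is not reproduced here.

```python
def frame_scan_time_ms(grid_h, grid_w, exposure_ms_per_point):
    """Exposure time for one frame, ignoring galvo settling and readout overhead."""
    return grid_h * grid_w * exposure_ms_per_point


def compress_temporal(histogram, factor):
    """Hypothetical fixed stand-in for learned temporal compression.

    Sums each run of `factor` adjacent time bins, shrinking the temporal axis by
    that factor. Trailing bins that do not fill a complete group are dropped.
    """
    n_groups = len(histogram) // factor
    return [sum(histogram[i * factor:(i + 1) * factor]) for i in range(n_groups)]


frame_ms = frame_scan_time_ms(16, 16, 0.4)
fps_upper_bound = 1000.0 / frame_ms  # about 9.77 frames per second
```

Shrinking the temporal axis early reduces the size of every later feature tensor. This is the cost argument the abstract makes, although a learned compression can keep more information than plain binning.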

IMUSIC: IMU-based Facial Expression Capture

Feb 03, 2024

Abstract: For facial motion capture and analysis, the dominant solutions are generally based on visual cues, which cannot protect privacy and are vulnerable to occlusions. Inertial measurement units (IMUs) offer a potential remedy but have mainly been adopted for full-body motion capture. In this paper, we propose IMUSIC to fill this gap: a novel path for facial expression capture using purely IMU signals, markedly different from previous visual solutions. The key design of IMUSIC is a trilogy. We first design micro-IMUs suited to facial capture, accompanied by an anatomy-driven IMU placement scheme. Then, we contribute a novel IMU-ARKit dataset, which provides rich paired IMU/visual signals for diverse facial expressions and performances. This unique multi-modality brings huge potential for future directions such as IMU-based facial behavior analysis. Moreover, using IMU-ARKit, we introduce a strong baseline approach that accurately predicts facial blendshape parameters from purely IMU signals. Specifically, we tailor a Transformer diffusion model with a two-stage training strategy for this novel tracking task. The IMUSIC framework enables accurate facial capture in scenarios where visual methods falter, while safeguarding user privacy. We conduct extensive experiments on both the IMU configuration and the technical components to validate the effectiveness of IMUSIC. Notably, IMUSIC enables various novel applications, e.g., privacy-protecting facial capture, hybrid capture against occlusions, and detecting minute facial movements that are often invisible to visual cues. We will release our dataset and implementations to open up more possibilities for facial capture and analysis in our community.
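
IMUSIC's diffusion model predicts blendshape parameters. To show what such a model learns to invert, the sketch below applies a generic DDPM-style forward noising step to a blendshape vector. This is an illustration under stated assumptions, not IMUSIC's model. The linear beta schedule and the hypothetical function names are illustrative choices. The 52-dimensional vector matches the number of ARKit blendshape coefficients. The IMU conditioning, the Transformer denoiser, and the two-stage training strategy are omitted.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Standard DDPM-style linear noise schedule (an assumption, not IMUSIC's schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alpha_bar(betas, t):
    """Cumulative product of (1 - beta_s) for s = 0..t."""
    prod = 1.0
    for b in betas[:t + 1]:
        prod *= 1.0 - b
    return prod


def q_sample(x0, t, betas, rng):
    """Forward diffusion: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps, with eps ~ N(0, I)."""
    abar = alpha_bar(betas, t)
    return [math.sqrt(abar) * x + math.sqrt(1.0 - abar) * rng.gauss(0.0, 1.0)
            for x in x0]


# A neutral-ish face: 52 blendshape coefficients, all set to 0.5 for illustration.
betas = linear_beta_schedule(1000)
noisy = q_sample([0.5] * 52, t=500, betas=betas, rng=random.Random(0))
```

A conditional denoiser would be trained to recover the clean coefficients, or the added noise, from `noisy`, the step `t`, and features of the accompanying IMU signals.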

Omni-Line-of-Sight Imaging for Holistic Shape Reconstruction

Apr 21, 2023

Abstract: We introduce Omni-LOS, a neural computational imaging method for holistic shape reconstruction (HSR) of complex objects using a Single-Photon Avalanche Diode (SPAD)-based time-of-flight sensor. As illustrated in Fig. 1, our method enables new capabilities to reconstruct the near-$360^\circ$ surrounding geometry of an object from a single scan spot. In such a scenario, traditional line-of-sight (LOS) imaging methods see only the front of the object and typically fail to recover the occluded back regions. Inspired by recent advances in non-line-of-sight (NLOS) imaging, which has demonstrated great power in reconstructing occluded objects, Omni-LOS marries LOS and NLOS, leveraging their complementary advantages to jointly recover the holistic shape of the object from a single scan position. The core of our method is to place the object near diffuse walls and augment the front-view LOS scan with NLOS scans from the surrounding walls. These walls serve as virtual "mirrors" that reflect light toward the object. Instead of recovering the LOS and NLOS signals separately, we adopt an implicit neural network to represent the object, analogous to NeRF and NeTF. Transients are measured along straight rays in LOS but over spherical wavefronts in NLOS. We therefore derive differentiable ray propagation models that simultaneously model both types of transient measurements, so that the NLOS reconstruction also takes the direct LOS measurements into account, and vice versa. We further develop a proof-of-concept Omni-LOS hardware prototype for real-world validation. Comprehensive experiments on various wall settings demonstrate that Omni-LOS successfully resolves shape ambiguities caused by occlusions, achieves high-fidelity 3D scan quality, and recovers objects of various scales and complexity.
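
The difference between the two measurement types in Omni-LOS can be illustrated with confocal arrival times. This sketch assumes a confocal setup with positions in meters and uses hypothetical helper names. A LOS return depends on the straight-line distance from the scanner to the surface point. An NLOS return via a wall point depends only on the hidden point's distance from that wall point. As a result, every hidden point on a sphere around the wall point falls in the same time bin. Omni-LOS's differentiable propagation models are not reproduced here.

```python
import math

C_M_PER_NS = 0.299792458  # speed of light in meters per nanosecond


def los_arrival_ns(scan_origin, surface_point):
    """Confocal LOS: light travels along a straight ray to the surface point and back."""
    return 2.0 * math.dist(scan_origin, surface_point) / C_M_PER_NS


def nlos_arrival_ns(scan_origin, wall_point, hidden_point):
    """Confocal NLOS: origin -> wall -> hidden point -> wall -> origin.

    The arrival time depends only on |wall_point - hidden_point|, so all hidden points
    on a sphere centered at the wall point share one arrival time (a spherical wavefront).
    """
    path = math.dist(scan_origin, wall_point) + math.dist(wall_point, hidden_point)
    return 2.0 * path / C_M_PER_NS


def time_bin(arrival_ns, bin_width_ps):
    """Index of the histogram bin that records a photon arriving at arrival_ns."""
    return int(arrival_ns * 1000.0 // bin_width_ps)
```

For example, with the scanner at the origin and a wall point at `(0, 0, 1)`, the hidden points `(0.3, 0, 0.6)` and `(0, 0.5, 1)` are both 0.5 m from the wall point. They therefore produce identical NLOS arrival times, and no single NLOS histogram can tell them apart.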

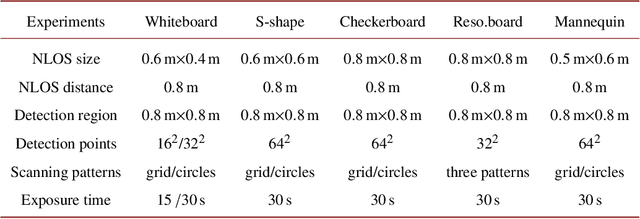

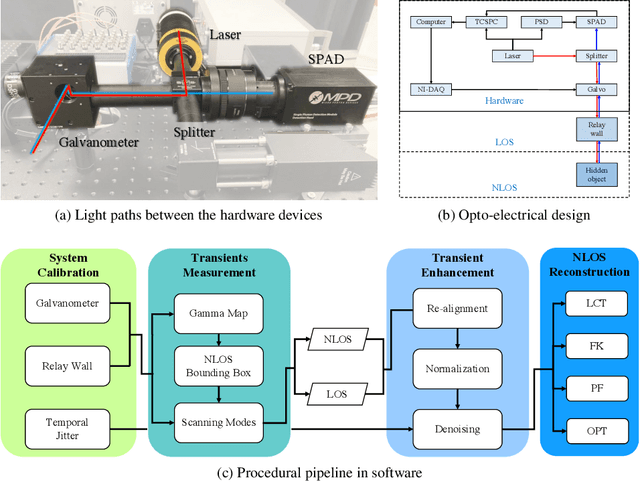

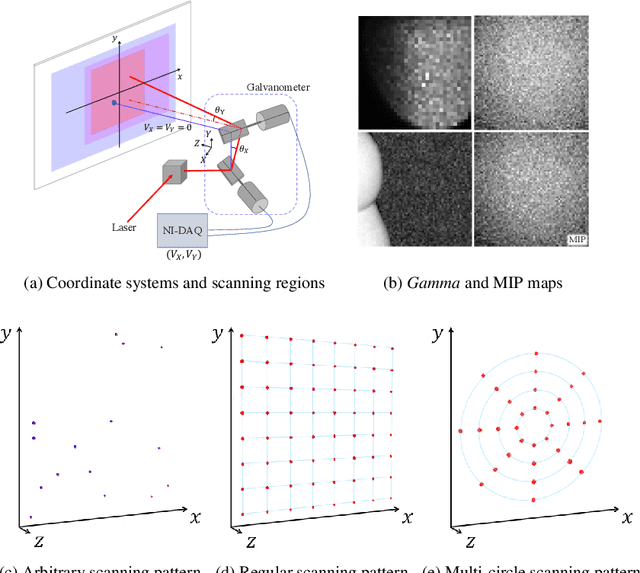

Onsite Non-Line-of-Sight Imaging via Online Calibrations

Dec 29, 2021

Abstract: There has been increasing interest in deploying non-line-of-sight (NLOS) imaging systems to recover objects behind an obstacle. Existing solutions generally pre-calibrate the system before scanning the hidden objects, so onsite adjustments of the occluder, the object, or the scanning pattern require re-calibration. We present an online calibration technique that directly decouples the transients acquired during onsite scanning into LOS and hidden components. We use the former to directly (re)calibrate the system upon changes in scene/obstacle configurations, scanning regions, and scanning patterns. We use the latter to recover the hidden object via spatial, frequency, or learning-based techniques. Our technique avoids auxiliary calibration apparatus such as mirrors or checkerboards and supports both laboratory validation and real-world deployment.
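
A crude version of splitting a transient into LOS and hidden parts can be sketched by gating the strongest return. This sketch assumes that the direct return from the relay wall dominates each histogram and uses a fixed gating window. Both the gating rule and the helper names are hypothetical stand-ins, not the paper's technique. The peak's round-trip time converts to a one-way distance to the scanned wall point, which is the kind of geometric quantity a recalibration step could use.

```python
C_M_PER_NS = 0.299792458  # speed of light in meters per nanosecond


def split_direct_and_hidden(histogram, window):
    """Gate the strongest peak (assumed to be the direct wall return) as the LOS part.

    Returns (peak_bin, direct, hidden), where direct + hidden == histogram bin by bin.
    """
    peak = max(range(len(histogram)), key=histogram.__getitem__)
    lo, hi = max(0, peak - window), min(len(histogram), peak + window + 1)
    direct = [v if lo <= i < hi else 0.0 for i, v in enumerate(histogram)]
    hidden = [0.0 if lo <= i < hi else v for i, v in enumerate(histogram)]
    return peak, direct, hidden


def wall_distance_m(peak_bin, bin_width_ps, t0_bin=0):
    """Convert the direct peak's round-trip time into a one-way distance to the wall point."""
    round_trip_ns = (peak_bin - t0_bin) * bin_width_ps / 1000.0
    return round_trip_ns * C_M_PER_NS / 2.0
```

Repeating this at every scan point yields a per-point wall distance. Changing the scanning region or pattern would then only require re-running the split on the new measurements, rather than a separate calibration session.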

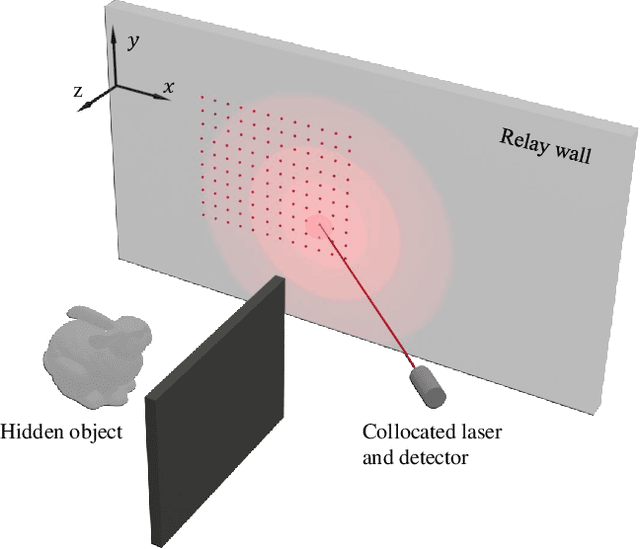

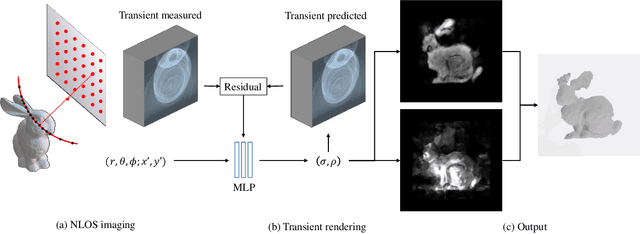

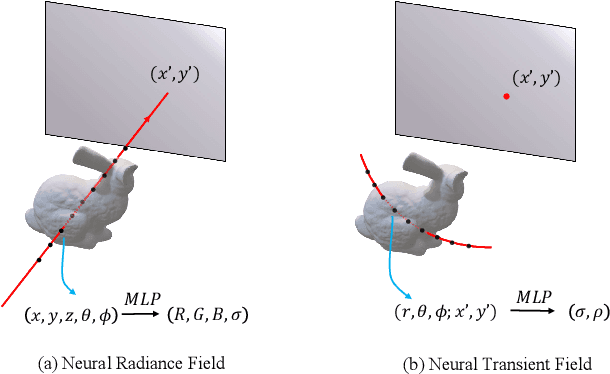

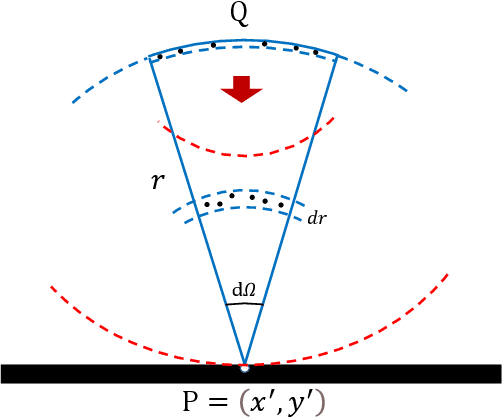

Non-line-of-Sight Imaging via Neural Transient Fields

Jan 05, 2021

Abstract: We present a neural modeling framework for non-line-of-sight (NLOS) imaging. Previous solutions have sought to explicitly recover the 3D geometry (e.g., as point clouds) or voxel density (e.g., within a predefined volume) of the hidden scene. In contrast, inspired by the recent Neural Radiance Field (NeRF) approach, we use a multi-layer perceptron (MLP) to represent the neural transient field, or NeTF. Unlike NeRF, however, NeTF measures the transient over spherical wavefronts rather than the radiance along lines. We therefore formulate a spherical volume NeTF reconstruction pipeline, applicable to both confocal and non-confocal setups. Compared with NeRF, NeTF samples a much sparser set of viewpoints (scanning spots), and the sampling is highly uneven. We thus introduce a Monte Carlo technique to improve the robustness of the reconstruction. Comprehensive experiments on synthetic and real datasets demonstrate that NeTF provides higher-quality reconstruction and preserves fine details largely missing from state-of-the-art methods.
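
The spherical-wavefront integral that NeTF evaluates can be approximated by Monte Carlo sampling. This sketch assumes a confocal setup, in which arrival time $t$ corresponds to a hemisphere of radius $r = ct/2$ around the scan spot, facing the hidden scene (taken as $z \ge 0$). It uses uniform hemisphere sampling and a user-supplied `field` standing in for the MLP's density and albedo. The radiometric falloff is omitted. The abstract does not describe NeTF's specific sampling strategy, so this only illustrates the general estimator.

```python
import math
import random

C_M_PER_NS = 0.299792458  # speed of light in meters per nanosecond


def hemisphere_radius_m(arrival_ns):
    """Confocal setup: the round trip covers 2r, so r = c * t / 2."""
    return C_M_PER_NS * arrival_ns / 2.0


def sample_hemisphere(rng):
    """Uniform direction on the unit hemisphere z >= 0 (z is uniform in [0, 1] by Archimedes)."""
    z = rng.random()
    phi = 2.0 * math.pi * rng.random()
    s = math.sqrt(1.0 - z * z)
    return (s * math.cos(phi), s * math.sin(phi), z)


def mc_spherical_integral(field, center, radius, n_samples, seed=0):
    """Estimate the surface integral of field(x) over a hemisphere of the given radius.

    With uniform sampling, the estimator is (hemisphere area) * (mean of sampled values).
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        d = sample_hemisphere(rng)
        p = tuple(c + radius * di for c, di in zip(center, d))
        total += field(p)
    area = 2.0 * math.pi * radius ** 2
    return area * total / n_samples
```

As a check, a constant field of 1 returns the hemisphere area $2\pi r^2$ exactly. The field $f(x) = z$ over a unit hemisphere has the exact integral $\pi$, which the estimator approaches as `n_samples` grows. Uneven scan sampling, the problem the abstract highlights, affects how reliably such estimates constrain the field.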
