Rahul Kumar

Adaptive Ergodic Search with Energy-Aware Scheduling for Persistent Multi-Robot Missions

May 16, 2025

Abstract:Autonomous robots are increasingly deployed for long-term information-gathering tasks, which pose two key challenges: planning informative trajectories in environments that evolve across space and time, and ensuring persistent operation under energy constraints. This paper presents a unified framework, mEclares, that addresses both challenges through adaptive ergodic search and energy-aware scheduling in multi-robot systems. Our contributions are two-fold: (1) we model real-world variability using stochastic spatiotemporal environments, where the underlying information evolves unpredictably due to process uncertainty. To guide exploration, we construct a target information spatial distribution (TISD) based on clarity, a metric that captures the decay of information in the absence of observations and highlights regions of high uncertainty; and (2) we introduce Robustmesch (Rmesch), an online scheduling method that enables persistent operation by coordinating rechargeable robots sharing a single mobile charging station. Unlike prior work, our approach avoids reliance on preplanned schedules, static or dedicated charging stations, and simplified robot dynamics. Instead, the scheduler supports general nonlinear models, accounts for uncertainty in the estimated position of the charging station, and handles central node failures. The proposed framework is validated through real-world hardware experiments, and feasibility guarantees are provided under specific assumptions.

Preset-Voice Matching for Privacy Regulated Speech-to-Speech Translation Systems

Jul 18, 2024

Abstract:In recent years, there has been increased demand for speech-to-speech translation (S2ST) systems in industry settings. Although successfully commercialized, cloning-based S2ST systems expose their distributors to liabilities when misused by individuals and can infringe on personality rights when exploited by media organizations. This work proposes a regulated S2ST framework called Preset-Voice Matching (PVM). PVM removes cross-lingual voice cloning in S2ST by first matching the input voice to a similar voice, in the target language, of a speaker who has given prior consent. With this separation, PVM avoids cloning the input speaker, ensuring PVM systems comply with regulations and reduce the risk of misuse. Our results demonstrate that PVM can significantly improve S2ST system run-time in multi-speaker settings and the naturalness of S2ST synthesized speech. To our knowledge, PVM is the first explicitly regulated S2ST framework leveraging similarly-matched preset-voices for dynamic S2ST tasks.

Pretraining Data and Tokenizer for Indic LLM

Jul 17, 2024

Abstract:We present a novel approach to data preparation for developing a multilingual Indic large language model. Our meticulous data acquisition spans open-source and proprietary sources, including Common Crawl, Indic books, news articles, and Wikipedia, ensuring a diverse and rich linguistic representation. For each Indic language, we design a custom preprocessing pipeline to effectively eliminate redundant and low-quality text content. Additionally, we perform deduplication on Common Crawl data to address the redundancy present in 70% of the crawled web pages. This study focuses on developing high-quality data and optimizing tokenization for our multilingual dataset for Indic large language models with 3B and 7B parameters, engineered for superior performance in Indic languages. We introduce a novel multilingual tokenizer training strategy, demonstrating that our custom-trained Indic tokenizer outperforms the state-of-the-art OpenAI Tiktoken tokenizer, achieving a superior token-to-word ratio for Indic languages.
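The token-to-word ratio mentioned in this abstract can be illustrated with a minimal sketch. The abstract does not say how words are counted, so whitespace splitting is an assumption here, and `token_to_word_ratio`, `char_tokenizer`, and `whitespace_tokenizer` are hypothetical names used only for illustration; they stand in for a real trained tokenizer.

```python
from typing import Callable, Iterable, List


def token_to_word_ratio(
    texts: Iterable[str], tokenize: Callable[[str], List[str]]
) -> float:
    """Average number of tokens emitted per whitespace-delimited word.

    Lower is better: fewer tokens are needed to encode the same text.
    Word counting by whitespace is an assumption of this sketch.
    """
    total_tokens = 0
    total_words = 0
    for text in texts:
        total_words += len(text.split())
        total_tokens += len(tokenize(text))
    if total_words == 0:
        raise ValueError("corpus contains no words")
    return total_tokens / total_words


def char_tokenizer(text: str) -> List[str]:
    """Toy tokenizer (hypothetical): one token per non-space character."""
    return [ch for ch in text if not ch.isspace()]


def whitespace_tokenizer(text: str) -> List[str]:
    """Toy tokenizer (hypothetical): one token per word."""
    return text.split()


if __name__ == "__main__":
    corpus = ["नमस्ते दुनिया", "hello world"]
    print(token_to_word_ratio(corpus, char_tokenizer))
    print(token_to_word_ratio(corpus, whitespace_tokenizer))
```

To compare two real tokenizers, pass each one's encode function as `tokenize` over the same held-out corpus; the one with the lower ratio splits words into fewer pieces.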

BookSQL: A Large Scale Text-to-SQL Dataset for Accounting Domain

Jun 12, 2024

Abstract:Several large-scale datasets (e.g., WikiSQL, Spider) for developing natural language interfaces to databases have recently been proposed. These datasets cover a wide breadth of domains but fall short on some essential domains, such as finance and accounting. Given that accounting databases are used worldwide, particularly by non-technical people, there is a pressing need to develop models that could help extract information from accounting databases via natural language queries. In this resource paper, we aim to fill this gap by proposing a new large-scale Text-to-SQL dataset for the accounting and financial domain: BookSQL. The dataset consists of 100k natural language query-SQL pairs and accounting databases with 1 million records. We experiment with and analyze existing state-of-the-art models (including GPT-4) for the Text-to-SQL task on BookSQL. We find significant performance gaps, thus pointing towards developing more focused models for this domain.

meSch: Multi-Agent Energy-Aware Scheduling for Task Persistence

Jun 07, 2024

Abstract:This paper develops a scheduling protocol for a team of autonomous robots that operate in long-term persistent tasks. The proposed framework, called meSch, accounts for the robots' limited battery capacity and the presence of a single charging station, and makes the following contributions: 1) it guarantees exclusive use of the charging station by one robot at a time; the approach is online, applicable to general nonlinear robot models, does not require robots to be deployed at different times, and can handle robots with different discharge rates. 2) We consider the scenario in which the charging station is mobile and subject to uncertainty. This approach ensures that the robots can rendezvous with the charging station while considering the uncertainty in its position. Finally, we evaluate the efficacy of meSch in simulation and experimental case studies.

Generative AI-Based Text Generation Methods Using Pre-Trained GPT-2 Model

Apr 02, 2024

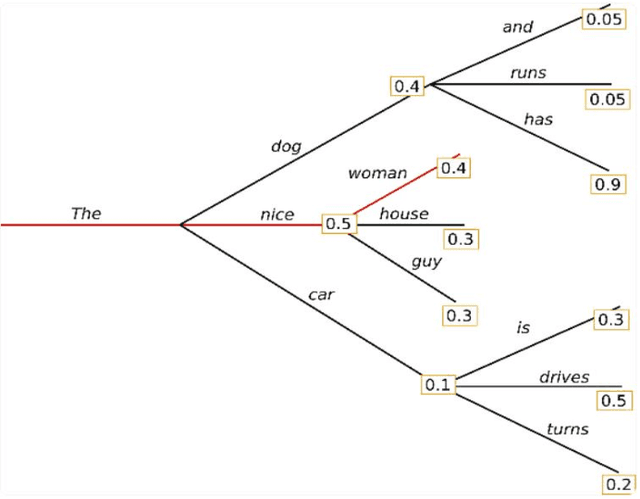

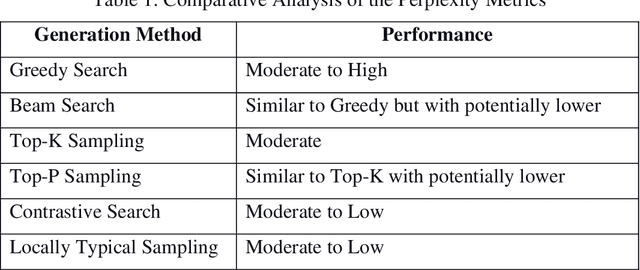

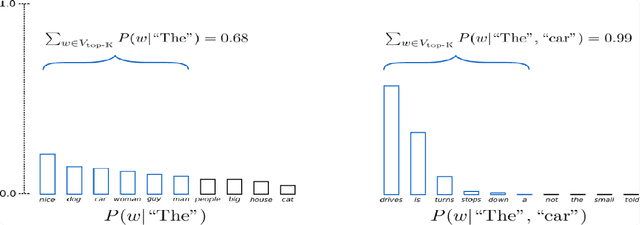

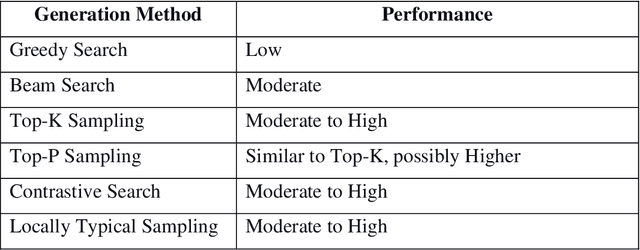

Abstract:This work delved into the realm of automatic text generation, exploring a variety of techniques ranging from traditional deterministic approaches to more modern stochastic methods. Through analysis of greedy search, beam search, top-k sampling, top-p sampling, contrastive searching, and locally typical searching, this work has provided valuable insights into the strengths, weaknesses, and potential applications of each method. Each text-generating method is evaluated using several standard metrics and a comparative study has been made on the performance of the approaches. Finally, some future directions of research in the field of automatic text generation are also identified.
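Three of the decoding methods named in this abstract (greedy search, top-k sampling, and top-p sampling) can be sketched on a single next-token distribution. This is a minimal illustration using only the Python standard library and a made-up logit vector; it is not the paper's code and does not load GPT-2. All function names are hypothetical.

```python
import math
import random
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert logits to probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy(logits: Sequence[float]) -> int:
    """Deterministic choice: index of the highest-scoring token."""
    return max(range(len(logits)), key=lambda i: logits[i])


def _renormalise(probs: Sequence[float], keep: Sequence[int]) -> List[float]:
    """Zero out every index not in `keep` and rescale the rest to sum to 1."""
    kept = set(keep)
    total = sum(probs[i] for i in kept)
    return [probs[i] / total if i in kept else 0.0 for i in range(len(probs))]


def top_k_filter(probs: Sequence[float], k: int) -> List[float]:
    """Keep only the k most probable tokens."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return _renormalise(probs, order[:k])


def top_p_filter(probs: Sequence[float], p: float) -> List[float]:
    """Keep the smallest set of top tokens whose cumulative mass reaches p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, cumulative = [], 0.0
    for i in order:
        keep.append(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    return _renormalise(probs, keep)


def sample(probs: Sequence[float], rng: random.Random) -> int:
    """Draw one token index from a (possibly filtered) distribution."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.5, -1.0]  # made-up scores for a 4-token vocabulary
    probs = softmax(logits)
    rng = random.Random(0)
    print("greedy:", greedy(logits))
    print("top-k (k=2):", sample(top_k_filter(probs, 2), rng))
    print("top-p (p=0.9):", sample(top_p_filter(probs, 0.9), rng))
```

Greedy search always returns the same token, while the two sampling variants trade determinism for diversity by truncating the tail of the distribution before drawing.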

Much Easier Said Than Done: Falsifying the Causal Relevance of Linear Decoding Methods

Nov 08, 2022

Abstract:Linear classifier probes are frequently utilized to better understand how neural networks function. Researchers have approached the problem of determining unit importance in neural networks by probing their learned, internal representations. Linear classifier probes identify highly selective units as the most important for network function. Whether or not a network actually relies on high selectivity units can be tested by removing them from the network using ablation. Surprisingly, when highly selective units are ablated they only produce small performance deficits, and even then only in some cases. In spite of the absence of ablation effects for selective neurons, linear decoding methods can be effectively used to interpret network function, leaving their effectiveness a mystery. To falsify the exclusive role of selectivity in network function and resolve this contradiction, we systematically ablate groups of units in subregions of activation space. Here, we find a weak relationship between neurons identified by probes and those identified by ablation. More specifically, we find that an interaction between selectivity and the average activity of the unit better predicts ablation performance deficits for groups of units in AlexNet, VGG16, MobileNetV2, and ResNet101. Linear decoders are likely somewhat effective because they overlap with those units that are causally important for network function. Interpretability methods could be improved by focusing on causally important units.

Assessing the Effectiveness of Syntactic Structure to Learn Code Edit Representations

Jun 11, 2021

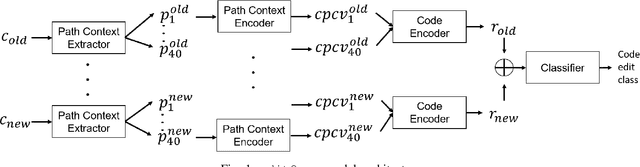

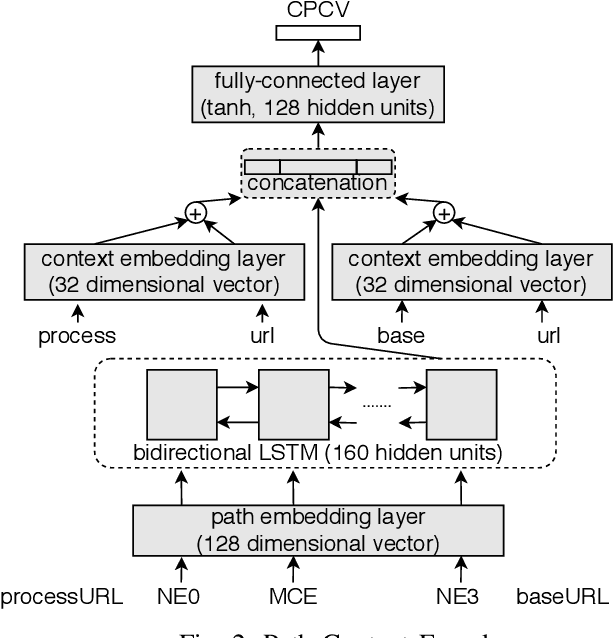

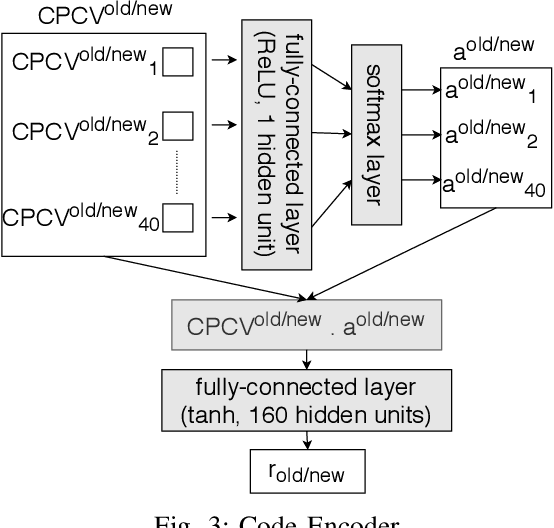

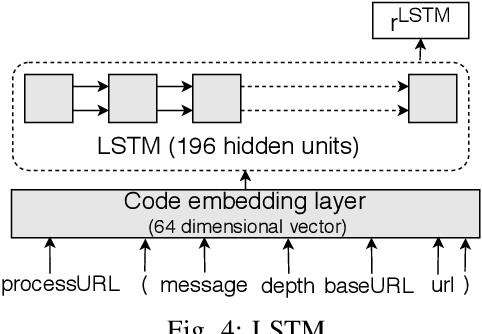

Abstract:In recent times, it has been shown that one can use code as data to aid various applications such as automatic commit message generation, automatic generation of pull request descriptions, and automatic program repair. Take, for instance, the problem of commit message generation. Treating source code as a sequence of tokens, state-of-the-art techniques generate commit messages using neural machine translation models. However, they tend to ignore the syntactic structure of programming languages. Previous work, i.e., code2seq, has used structural information from the Abstract Syntax Tree (AST) to represent source code and to automatically generate method names. In this paper, we elaborate upon this state-of-the-art approach and modify it to represent source code edits. We determine the effect of using such syntactic structure for the problem of classifying code edits. Inspired by the code2seq approach, we evaluate how using structural information from the AST, i.e., paths between AST leaf nodes, can help with the task of code edit classification on two datasets of fine-grained syntactic edits. Our experiments show that attempts at adding syntactic structure do not result in any improvements over less sophisticated methods. The results suggest that techniques such as code2seq, while promising, have a long way to go before they can be generically applied to learning code edit representations. We hope that these results will benefit other researchers and inspire them to work further on this problem.

Many Hands Make Light Work: Using Essay Traits to Automatically Score Essays

Feb 01, 2021

Abstract:Most research in the area of automatic essay grading (AEG) is geared towards scoring the essay holistically, while there has also been some work on scoring individual essay traits. In this paper, we describe a way to score essays holistically using a multi-task learning (MTL) approach, where scoring the essay holistically is the primary task and scoring the essay traits is the auxiliary task. We compare our results with a single-task learning (STL) approach, using both LSTMs and BiLSTMs. We also compare our results on the auxiliary task with such tasks done in other AEG systems. To find out which traits work best for different types of essays, we conduct ablation tests for each of the essay traits. We also report the runtime and number of training parameters for each system. We find that the MTL-based BiLSTM system gives the best results for scoring the essay holistically, as well as performing well on scoring the essay traits.

Robotic Motion Planning using Learned Critical Sources and Local Sampling

Jun 07, 2020

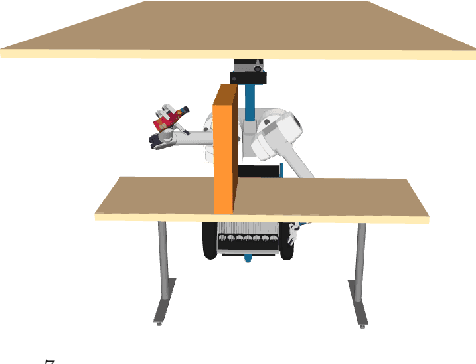

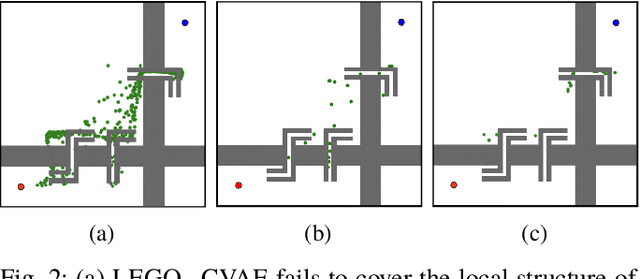

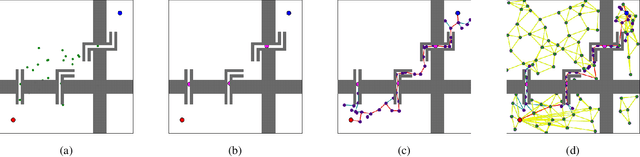

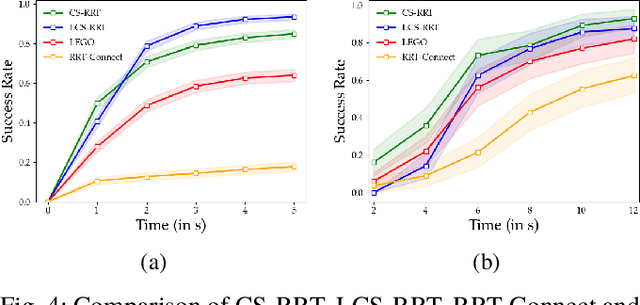

Abstract:Sampling-based methods are widely used for robotic motion planning. Traditionally, these samples are drawn from probabilistic (or deterministic) distributions to cover the state space uniformly. Despite being probabilistically complete, they fail to find a feasible path in a reasonable amount of time in constrained environments where it is essential to go through narrow passages (bottleneck regions). Current state-of-the-art techniques train a learning model (learner) to predict samples selectively on these bottleneck regions. However, these algorithms depend completely on samples generated by this learner to navigate through the bottleneck regions. As the complexity of the planning problem increases, the amount of data and time required to make this learner robust to fine variations in the structure of the workspace becomes computationally intractable. In this work, we present (1) an efficient and robust method to use a learner to locate the bottleneck regions and (2) two algorithms that use local sampling methods to leverage the location of these bottleneck regions for efficient motion planning while maintaining probabilistic completeness. We test our algorithms on 2-dimensional planning problems and 7-dimensional robotic arm planning, and report significant gains over heuristics as well as learned baselines.
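The idea of combining predicted bottleneck locations with local sampling can be illustrated by a simple mixture sampler. This is a sketch under stated assumptions, not either of the paper's two algorithms: it assumes bottleneck locations are already available as points, uses a Gaussian around a chosen bottleneck as the local sampler, and keeps a nonzero share of uniform samples, which is a common way to preserve coverage of the whole state space. The names `sample_state`, `local_prob`, and `sigma` are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

Bounds = Sequence[Tuple[float, float]]  # (low, high) per dimension


def sample_uniform(bounds: Bounds, rng: random.Random) -> List[float]:
    """Draw a state uniformly over the whole bounded state space."""
    return [rng.uniform(lo, hi) for lo, hi in bounds]


def sample_local(
    center: Sequence[float], sigma: float, bounds: Bounds, rng: random.Random
) -> List[float]:
    """Draw a state from a Gaussian around `center`, clamped to the bounds.

    Clamping piles some mass on the boundary; acceptable for a sketch.
    """
    return [
        min(max(rng.gauss(c, sigma), lo), hi)
        for c, (lo, hi) in zip(center, bounds)
    ]


def sample_state(
    bottlenecks: Sequence[Sequence[float]],
    bounds: Bounds,
    rng: random.Random,
    local_prob: float = 0.5,
    sigma: float = 0.1,
) -> List[float]:
    """Mix local sampling near bottlenecks with global uniform sampling.

    With probability `local_prob` (and if any bottleneck is known), sample
    near a randomly chosen bottleneck; otherwise sample uniformly. Keeping
    `local_prob` below 1 means every region is still sampled eventually.
    """
    if bottlenecks and rng.random() < local_prob:
        return sample_local(rng.choice(list(bottlenecks)), sigma, bounds, rng)
    return sample_uniform(bounds, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    bounds = [(0.0, 1.0), (0.0, 1.0)]
    predicted = [[0.5, 0.5]]  # made-up bottleneck location
    for _ in range(3):
        print(sample_state(predicted, bounds, rng))
```

A planner such as RRT or PRM would call `sample_state` wherever it would otherwise draw a uniform sample, so the learner only has to locate bottlenecks rather than generate every sample inside them.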
