Pim A. de Jong

Decision making in cancer: Causal questions require causal answers

Sep 15, 2022

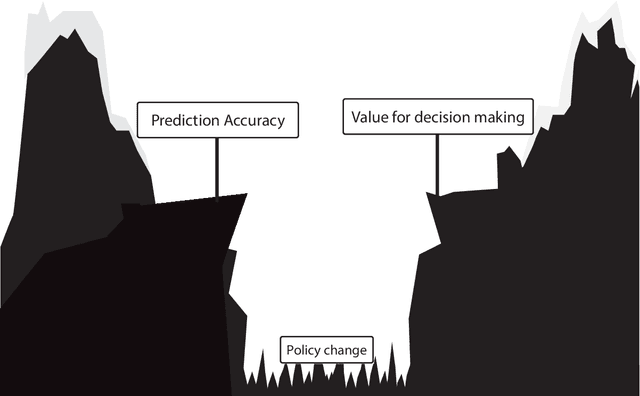

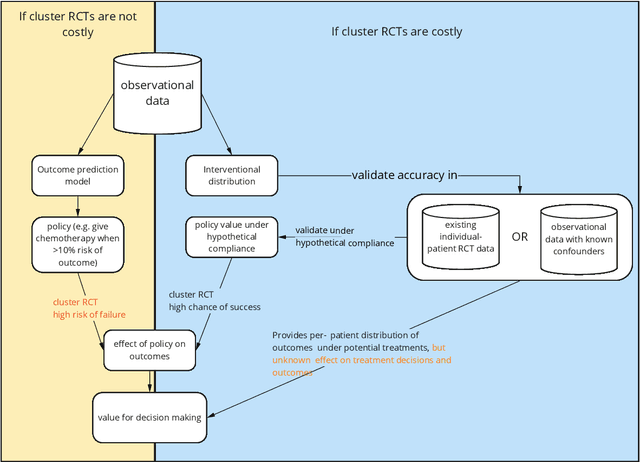

Abstract: Treatment decisions in cancer care are guided by treatment effect estimates from randomized controlled trials (RCTs). RCTs estimate the average effect of one treatment versus another in a certain population. However, treatments may not be equally effective for every patient in a population. Knowing the effectiveness of treatments tailored to specific patient and tumor characteristics would enable individualized treatment decisions. Getting tailored treatment effects by averaging outcomes in different patient subgroups in RCTs requires an unfeasible number of patients to have sufficient statistical power in all relevant subgroups for all possible treatments. The American Joint Committee on Cancer (AJCC) recommends that researchers develop outcome prediction models (OPMs) in an effort to individualize treatment decisions. OPMs, sometimes called risk models or prognosis models, use patient and tumor characteristics to predict a patient outcome such as overall survival. The assumption is that the predictions are useful for treatment decisions using rules such as "prescribe chemotherapy only if the OPM predicts the patient has a high risk of recurrence". Recognizing the importance of reliable predictions, the AJCC published a checklist for OPMs to ensure dependable OPM prediction accuracy in the patient population for which the OPM was designed. However, accurate outcome predictions do not imply that these predictions yield good treatment decisions. In this perspective, we show that OPMs rely on a fixed treatment policy, which implies that OPMs that were found to accurately predict outcomes in validation studies can still lead to patient harm when used to inform treatment decisions. We then give guidance on how to develop models that are useful for individualized treatment decisions and how to evaluate whether a model has value for decision-making.
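The argument that an accurate OPM can still drive harmful decisions can be illustrated with a toy example. The sketch below is not taken from the paper: the tumor types, risk values, historical policy, and thresholds are all invented for illustration. It assumes a perfectly calibrated OPM that has learned outcomes under a historical policy in which aggressive tumors were always treated.

```python
# Hypothetical recurrence risks per tumor type, with and without chemotherapy.
# Every number here is invented purely for illustration.
RISK = {
    "aggressive": {"chemo": 0.10, "no_chemo": 0.60},
    "indolent": {"chemo": 0.28, "no_chemo": 0.30},
}

# Assumed historical treatment policy under which the OPM's training data arose.
HISTORICAL_POLICY = {"aggressive": "chemo", "indolent": "no_chemo"}


def historical_rule(tumor):
    """Treatment each tumor type received in the (assumed) historical data."""
    return HISTORICAL_POLICY[tumor]


def opm_prediction(tumor):
    """Risk predicted by a perfectly calibrated OPM fit to historical data."""
    return RISK[tumor][HISTORICAL_POLICY[tumor]]


def opm_rule(tumor, threshold=0.2):
    """'Prescribe chemotherapy only if the OPM predicts high risk.'"""
    return "chemo" if opm_prediction(tumor) > threshold else "no_chemo"


def causal_rule(tumor, min_benefit=0.05):
    """Treat when the risk reduction caused by chemotherapy is large enough."""
    benefit = RISK[tumor]["no_chemo"] - RISK[tumor]["chemo"]
    return "chemo" if benefit >= min_benefit else "no_chemo"


def expected_risk(rule):
    """Average recurrence risk if both tumor types are equally common."""
    return sum(RISK[t][rule(t)] for t in RISK) / len(RISK)
```

With these invented numbers, the OPM predicts low risk for aggressive tumors precisely because they were treated, so the OPM-based rule withholds chemotherapy from the patients who benefit most. The average risk rises from 0.20 under the historical policy to 0.44, while the rule based on the treatment effect stays at 0.20.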

Multimodal Learning for Cardiovascular Risk Prediction using EHR Data

Aug 27, 2020

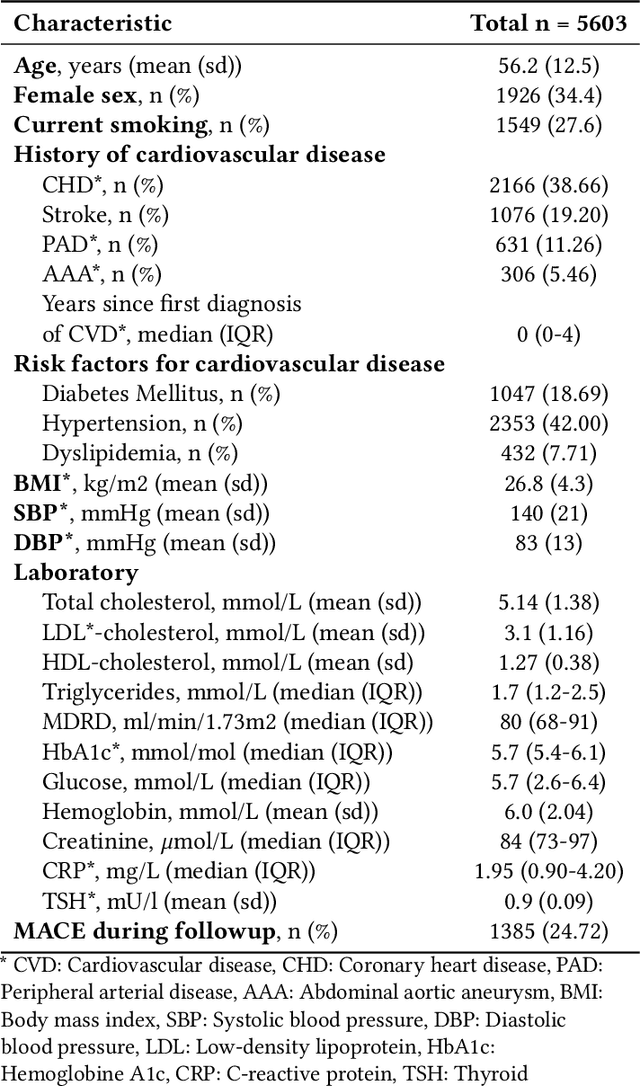

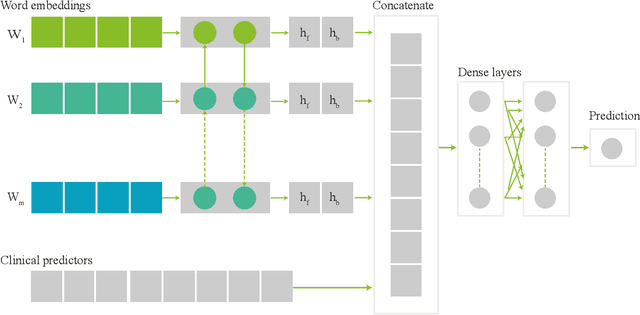

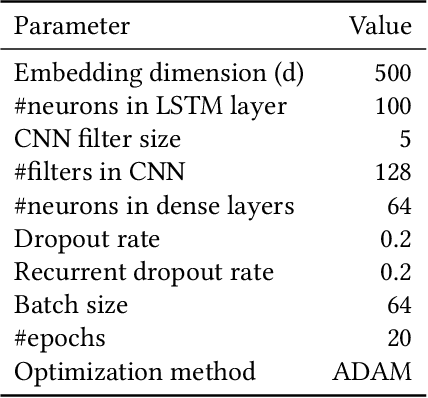

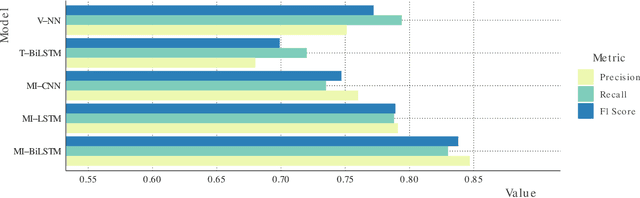

Abstract: Electronic health records (EHRs) contain structured and unstructured data of significant clinical and research value. Various machine learning approaches have been developed to employ information in EHRs for risk prediction. The majority of these attempts, however, focus on structured EHR fields and lose the vast amount of information in the unstructured texts. To exploit the potential information captured in EHRs, in this study we propose a multimodal recurrent neural network model for cardiovascular risk prediction that integrates both medical texts and structured clinical information. The proposed multimodal bidirectional long short-term memory (BiLSTM) model concatenates word embeddings to classical clinical predictors before applying them to a final fully connected neural network. In the experiments, we compare the performance of different deep neural network (DNN) architectures, including convolutional neural network and long short-term memory, in scenarios of using clinical variables and chest X-ray radiology reports. Evaluated on a data set of real-world patients with manifest vascular disease or at high risk for cardiovascular disease, the proposed BiLSTM model demonstrates state-of-the-art performance and outperforms other DNN baseline architectures.

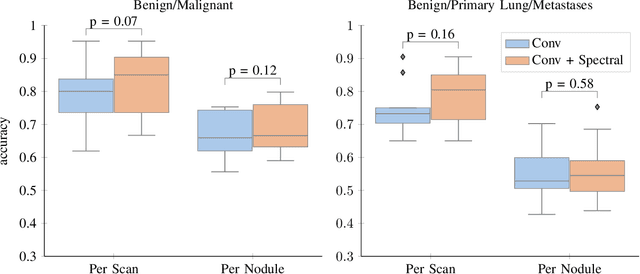

Primary Tumor Origin Classification of Lung Nodules in Spectral CT using Transfer Learning

Jun 30, 2020

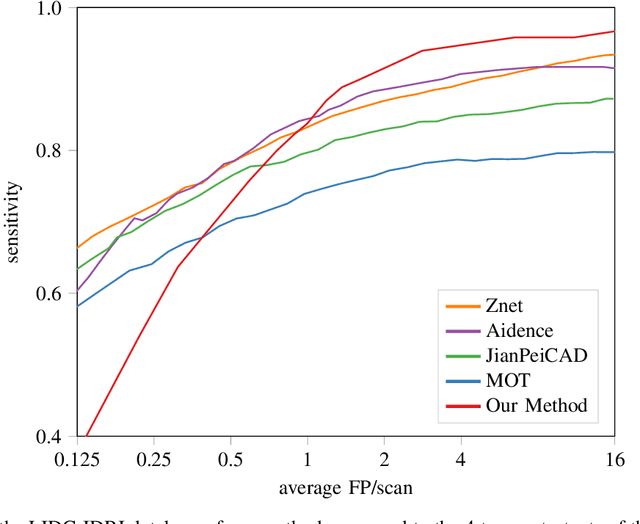

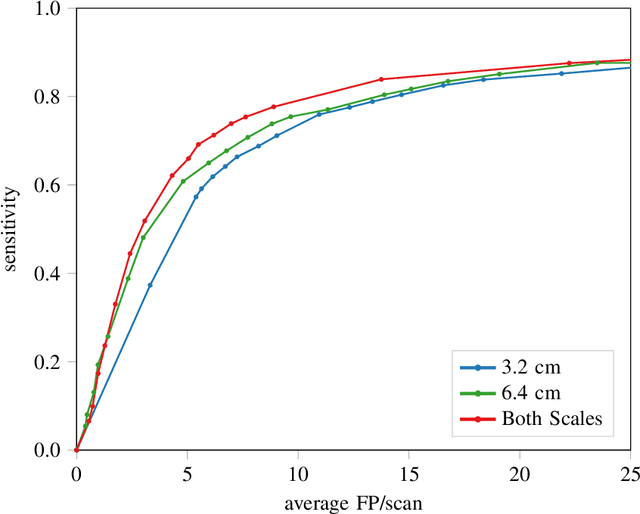

Abstract: Early detection of lung cancer has been proven to decrease mortality significantly. A recent development in computed tomography (CT), spectral CT, can potentially improve diagnostic accuracy, as it yields more information per scan than regular CT. However, the sheer workload involved with analyzing a large number of scans drives the need for automated diagnosis methods. Therefore, we propose a detection and classification system for lung nodules in CT scans. Furthermore, we want to observe whether spectral images can increase classifier performance. For the detection of nodules we trained a VGG-like 3D convolutional neural net (CNN). To obtain a primary tumor classifier for our dataset we pre-trained a 3D CNN with similar architecture on nodule malignancies of a large publicly available dataset, the LIDC-IDRI dataset. Subsequently, we used this pre-trained network as feature extractor for the nodules in our dataset. The resulting feature vectors were classified into two (benign/malignant) and three (benign/primary lung cancer/metastases) classes using a support vector machine (SVM). This classification was performed at both nodule and scan level. We obtained state-of-the-art performance for detection and malignancy regression on the LIDC-IDRI database. Classification performance on our own dataset was higher for scan- than for nodule-level predictions. For the three-class scan-level classification we obtained an accuracy of 78%. Spectral features did increase classifier performance, but not significantly. Our work suggests that a pre-trained feature extractor can be used as primary tumor origin classifier for lung nodules, eliminating the need for elaborate fine-tuning of a new network and large datasets. Code is available at https://github.com/tueimage/lung-nodule-msc-2018.

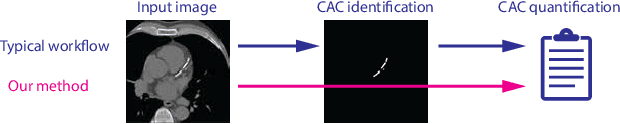

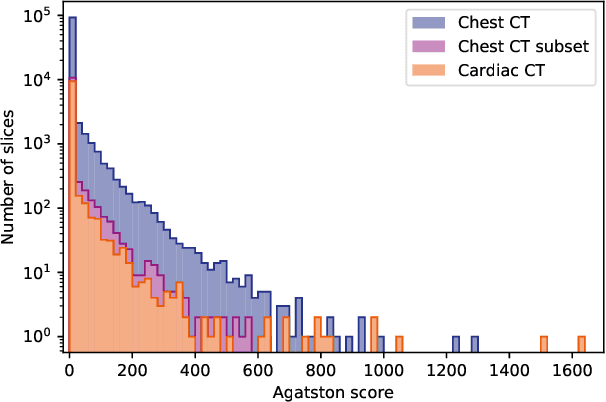

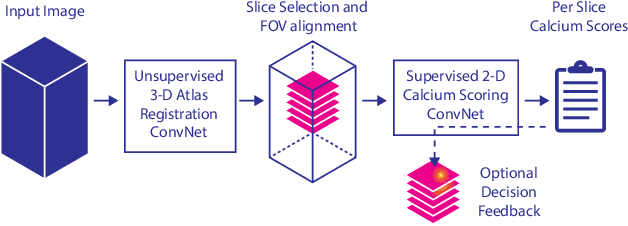

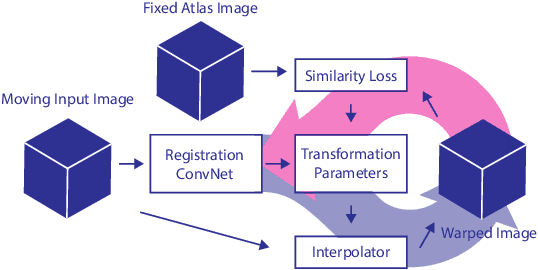

Direct Automatic Coronary Calcium Scoring in Cardiac and Chest CT

Feb 12, 2019

Abstract: Cardiovascular disease (CVD) is the global leading cause of death. A strong risk factor for CVD events is the amount of coronary artery calcium (CAC). To meet demands of the increasing interest in quantification of CAC, i.e. coronary calcium scoring, especially as an unrequested finding for screening and research, automatic methods have been proposed. Current automatic calcium scoring methods are relatively computationally expensive and only provide scores for one type of CT. To address this, we propose a computationally efficient method that employs two ConvNets: the first performs registration to align the fields of view of input CTs and the second performs direct regression of the calcium score, thereby circumventing time-consuming intermediate CAC segmentation. Optional decision feedback provides insight into the regions that contributed to the calcium score. Experiments were performed using 903 cardiac CT and 1,687 chest CT scans. The method predicted calcium scores in less than 0.3 s. Intra-class correlation coefficient between predicted and manual calcium scores was 0.98 for both cardiac and chest CT. The method showed almost perfect agreement between automatic and manual CVD risk categorization in both datasets, with a linearly weighted Cohen's kappa of 0.95 in cardiac CT and 0.93 in chest CT. Performance is similar to that of state-of-the-art methods, but the proposed method is hundreds of times faster. By providing visual feedback, insight is given into the decision process, making the method readily implementable in clinical and research settings.
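Several abstracts on this page report agreement in risk categorization as a linearly weighted Cohen's kappa. As a reminder of what that statistic measures, here is a minimal sketch using the standard definition with disagreement weights |i - j| / (k - 1). The function name and the integer category encoding (0 to k - 1) are choices made for this illustration, not details from the papers.

```python
from collections import Counter


def linear_weighted_kappa(rater_a, rater_b, n_categories):
    """Linearly weighted Cohen's kappa for ordinal categories 0..n_categories-1."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("need two equally long, non-empty rating lists")
    if n_categories < 2:
        raise ValueError("need at least two categories")

    n = len(rater_a)
    max_dist = n_categories - 1
    joint = Counter(zip(rater_a, rater_b))  # observed pair counts
    marg_a = Counter(rater_a)               # marginal counts, rater A
    marg_b = Counter(rater_b)               # marginal counts, rater B

    # Weighted disagreement actually observed.
    observed = sum(abs(i - j) / max_dist * c / n for (i, j), c in joint.items())
    # Weighted disagreement expected if the raters were independent.
    expected = sum(
        abs(i - j) / max_dist * (marg_a[i] / n) * (marg_b[j] / n)
        for i in range(n_categories)
        for j in range(n_categories)
    )
    if expected == 0:
        raise ValueError("kappa is undefined when both raters use one identical category")
    return 1.0 - observed / expected
```

For example, `linear_weighted_kappa([0, 1, 2, 2], [0, 1, 2, 1], 3)` penalizes the single one-category disagreement at half weight and evaluates to 5/7, roughly 0.71.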

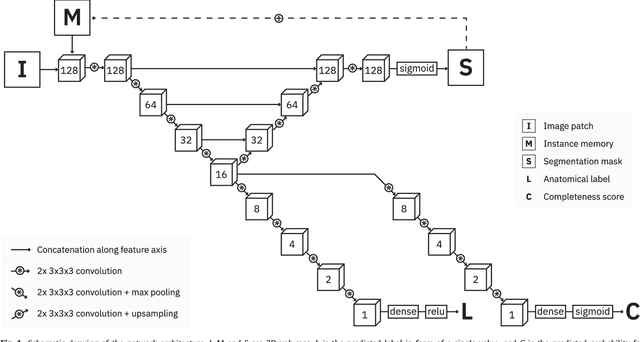

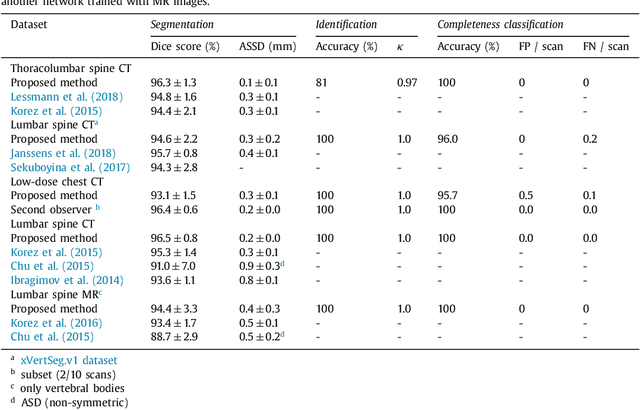

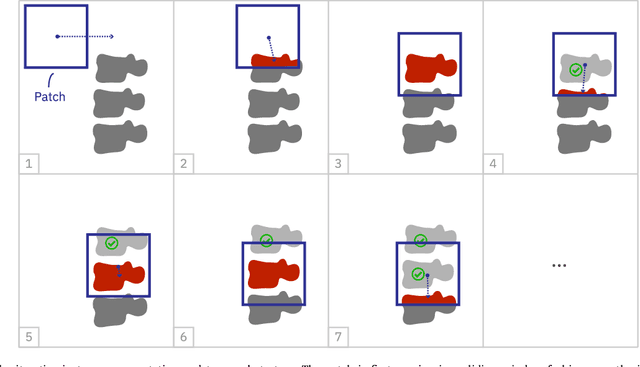

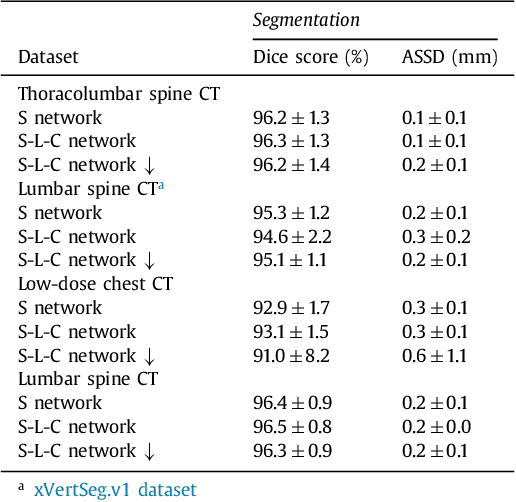

Iterative fully convolutional neural networks for automatic vertebra segmentation

Apr 12, 2018

Abstract: Precise segmentation of the vertebrae is often required for automatic detection of vertebral abnormalities. This especially enables incidental detection of abnormalities such as compression fractures in images that were acquired for other diagnostic purposes. While many CT and MR scans of the chest and abdomen cover a section of the spine, they often do not cover the entire spine. Additionally, the first and last visible vertebrae are likely only partially included in such scans. In this paper, we therefore approach vertebra segmentation as an instance segmentation problem. A fully convolutional neural network is combined with an instance memory that retains information about already segmented vertebrae. This network iteratively analyzes image patches, using the instance memory to search for and segment the first not yet segmented vertebra. At the same time, each vertebra is classified as completely or partially visible, so that partially visible vertebrae can be excluded from further analyses. We evaluated this method on spine CT scans from a vertebra segmentation challenge and on low-dose chest CT scans. The method achieved an average Dice score of 95.8% and 92.1%, respectively, and a mean absolute surface distance of 0.194 mm and 0.344 mm.
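The Dice score reported above measures the overlap between a predicted and a reference segmentation as 2|A ∩ B| / (|A| + |B|). A minimal sketch follows. Representing masks as sets of voxel coordinates, and scoring two empty masks as 1.0, are assumptions made for this illustration rather than details from the paper.

```python
def dice_score(pred_voxels, ref_voxels):
    """Dice overlap between two segmentations given as iterables of voxel coordinates."""
    pred, ref = set(pred_voxels), set(ref_voxels)
    if not pred and not ref:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2 * len(pred & ref) / (len(pred) + len(ref))


# Toy masks: three voxels each, two of them shared.
predicted = {(0, 0, 0), (0, 0, 1), (0, 1, 1)}
reference = {(0, 0, 1), (0, 1, 1), (1, 1, 1)}
score = dice_score(predicted, reference)  # 2 * 2 / (3 + 3)
```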

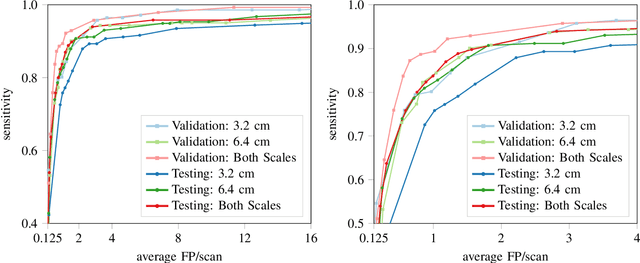

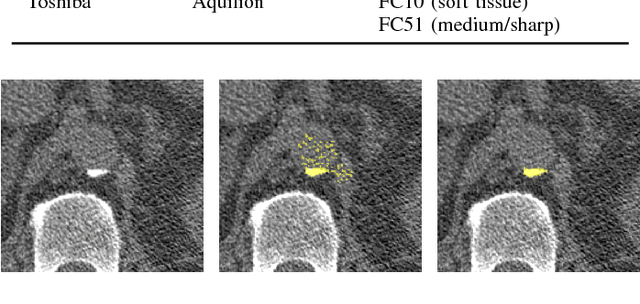

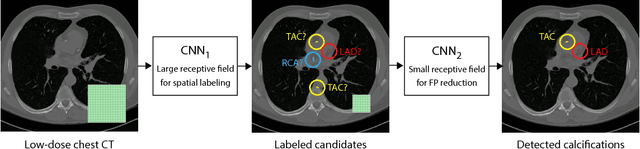

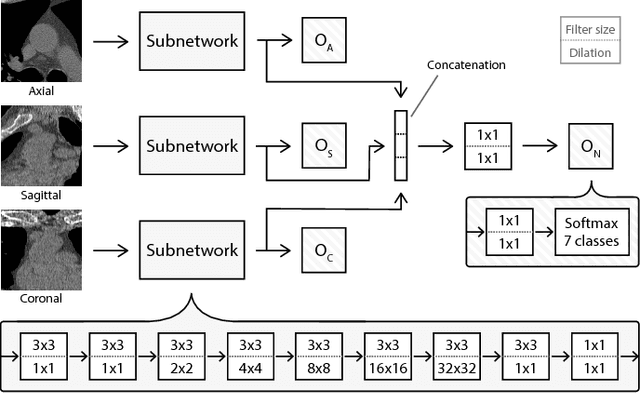

Automatic calcium scoring in low-dose chest CT using deep neural networks with dilated convolutions

Feb 01, 2018

Abstract: Heavy smokers undergoing screening with low-dose chest CT are affected by cardiovascular disease as much as by lung cancer. Low-dose chest CT scans acquired in screening enable quantification of atherosclerotic calcifications and thus enable identification of subjects at increased cardiovascular risk. This paper presents a method for automatic detection of coronary artery, thoracic aorta and cardiac valve calcifications in low-dose chest CT using two consecutive convolutional neural networks. The first network identifies and labels potential calcifications according to their anatomical location and the second network identifies true calcifications among the detected candidates. This method was trained and evaluated on a set of 1744 CT scans from the National Lung Screening Trial. To determine whether any reconstruction or only images reconstructed with soft tissue filters can be used for calcification detection, we evaluated the method on soft and medium/sharp filter reconstructions separately. On soft filter reconstructions, the method achieved F1 scores of 0.89, 0.89, 0.67, and 0.55 for coronary artery, thoracic aorta, aortic valve and mitral valve calcifications, respectively. On sharp filter reconstructions, the F1 scores were 0.84, 0.81, 0.64, and 0.66, respectively. Linearly weighted kappa coefficients for risk category assignment based on per subject coronary artery calcium were 0.91 and 0.90 for soft and sharp filter reconstructions, respectively. These results demonstrate that the presented method enables reliable automatic cardiovascular risk assessment in all low-dose chest CT scans acquired for lung cancer screening.

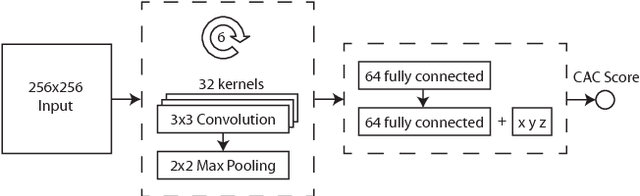

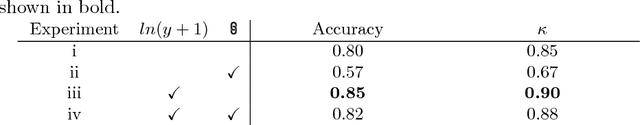

Direct and Real-Time Cardiovascular Risk Prediction

Dec 08, 2017

Abstract: Coronary artery calcium (CAC) burden quantified in low-dose chest CT is a predictor of cardiovascular events. We propose an automatic method for CAC quantification, circumventing intermediate segmentation of CAC. The method determines a bounding box around the heart using a ConvNet for localization. Subsequently, a dedicated ConvNet analyzes axial slices within the bounding boxes to determine CAC quantity by regression. A dataset of 1,546 baseline CT scans was used from the National Lung Screening Trial with manually identified CAC. The method achieved an ICC of 0.98 between manual reference and automatically obtained Agatston scores. Stratification of subjects into five cardiovascular risk categories resulted in an accuracy of 85% and Cohen's linearly weighted κ of 0.90. The results demonstrate that real-time quantification of CAC burden in chest CT without the need for segmentation of CAC is possible.

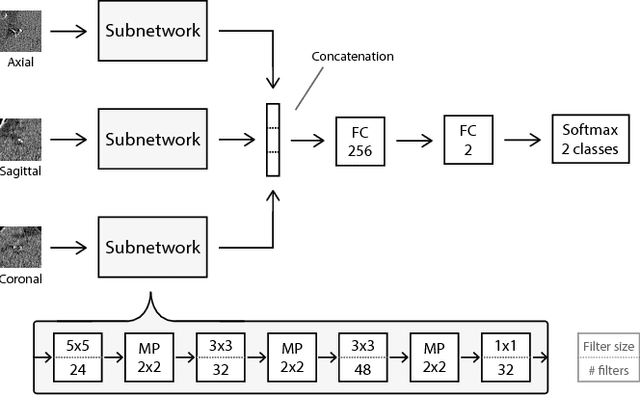

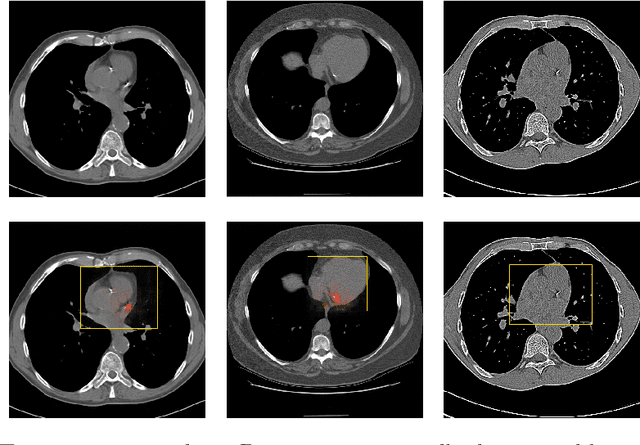

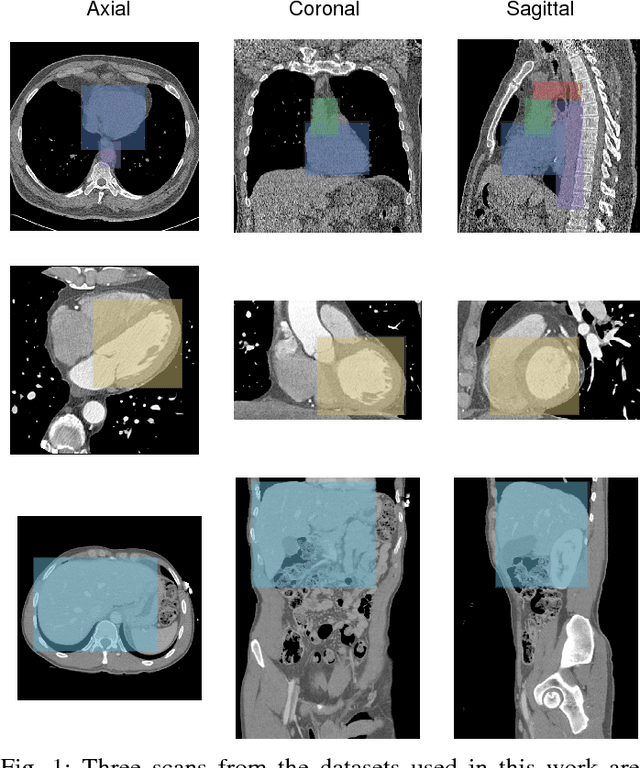

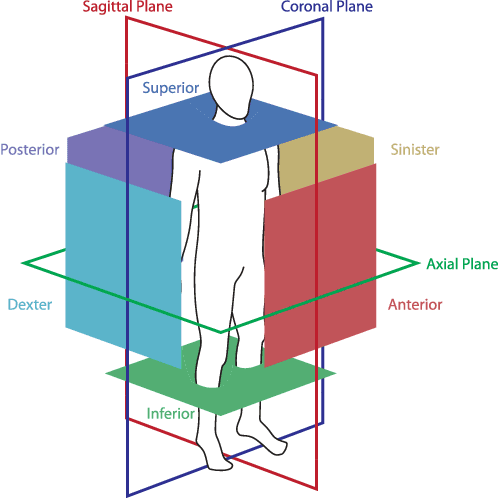

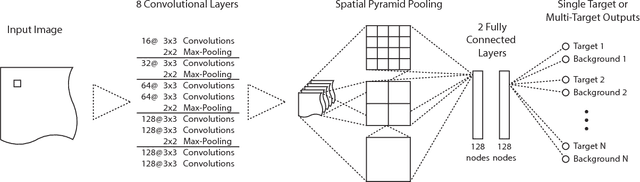

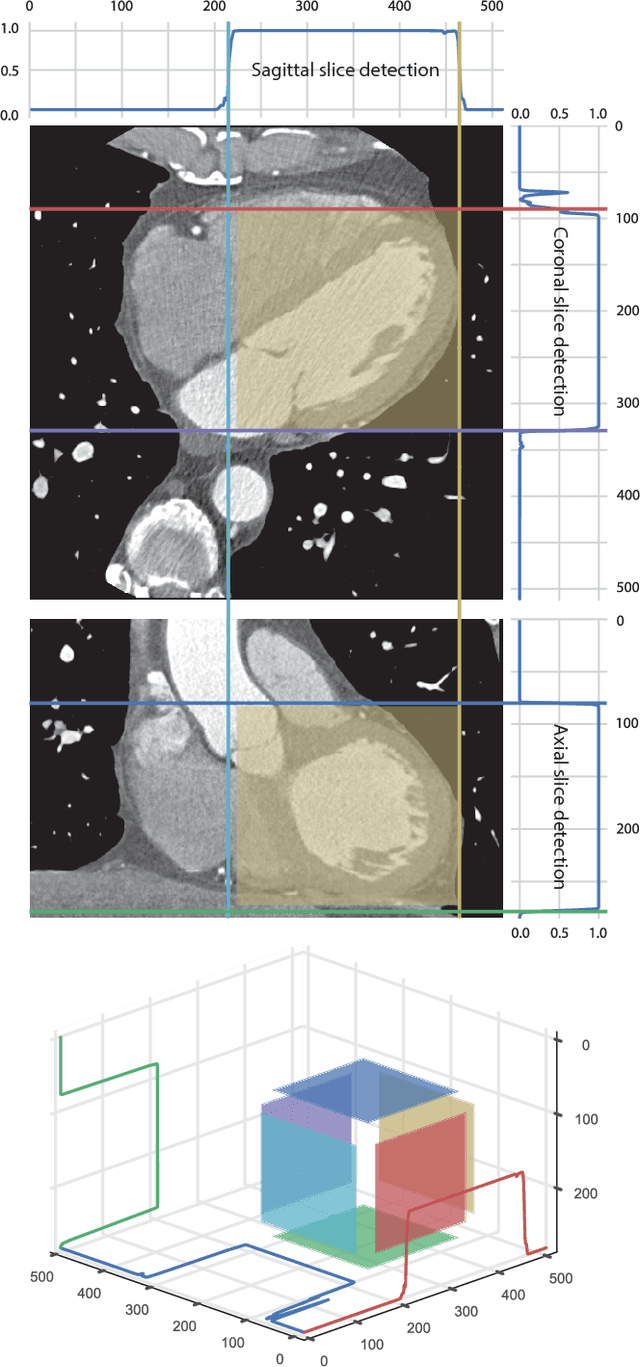

ConvNet-Based Localization of Anatomical Structures in 3D Medical Images

Apr 19, 2017

Abstract: Localization of anatomical structures is a prerequisite for many tasks in medical image analysis. We propose a method for automatic localization of one or more anatomical structures in 3D medical images through detection of their presence in 2D image slices using a convolutional neural network (ConvNet). A single ConvNet is trained to detect the presence of the anatomical structure of interest in axial, coronal, and sagittal slices extracted from a 3D image. To allow the ConvNet to analyze slices of different sizes, spatial pyramid pooling is applied. After detection, 3D bounding boxes are created by combining the output of the ConvNet in all slices. In the experiments, 200 chest CT, 100 cardiac CT angiography (CTA), and 100 abdomen CT scans were used. The heart, ascending aorta, aortic arch, and descending aorta were localized in chest CT scans, the left cardiac ventricle in cardiac CTA scans, and the liver in abdomen CT scans. Localization was evaluated using the distances between automatically and manually defined reference bounding box centroids and walls. The best results were achieved in localization of structures with clearly defined boundaries (e.g. aortic arch) and the worst when the structure boundary was not clearly visible (e.g. liver). The method was more robust and accurate in localizing multiple structures.
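The step of combining per-slice ConvNet outputs into a 3D bounding box can be sketched as follows. The abstract does not say how the slice outputs are combined, so this is one plausible reading, not the authors' procedure. The assumptions are: along each axis, the box spans the longest contiguous run of slices whose presence score reaches a threshold; the function names, the 0.5 threshold, and the mapping of axial, coronal, and sagittal slices to z, y, and x are hypothetical.

```python
def longest_run(scores, threshold=0.5):
    """Return (start, end), inclusive, of the longest run of scores >= threshold, or None."""
    best = None
    start = None
    # A trailing sentinel below any threshold closes a run that reaches the last slice.
    for i, s in enumerate(list(scores) + [float("-inf")]):
        if s >= threshold and start is None:
            start = i  # a new run begins
        elif s < threshold and start is not None:
            if best is None or (i - start) > (best[1] - best[0] + 1):
                best = (start, i - 1)  # keep the longest run seen so far
            start = None
    return best


def bounding_box(axial_scores, coronal_scores, sagittal_scores, threshold=0.5):
    """Combine per-slice presence scores from three orientations into a 3D box."""
    extents = {
        "z": longest_run(axial_scores, threshold),
        "y": longest_run(coronal_scores, threshold),
        "x": longest_run(sagittal_scores, threshold),
    }
    if any(extent is None for extent in extents.values()):
        return None  # structure not detected in at least one orientation
    return extents


box = bounding_box(
    [0.1, 0.9, 0.2, 0.8, 0.9, 0.7, 0.1],  # axial: isolated hit, then a run of three
    [0.0, 0.6, 0.7, 0.1],                 # coronal
    [0.9, 0.9, 0.2],                      # sagittal
)
```

Taking the longest run rather than the first and last positive slice makes the sketch tolerant of isolated false positives, such as the single high score at axial index 1 in the example.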
