Peter J. Stuckey

MATA: A Trainable Hierarchical Automaton System for Multi-Agent Visual Reasoning

Jan 27, 2026

Abstract: Recent vision-language models have strong perceptual ability, but their implicit reasoning is hard to explain and easily generates hallucinations on complex queries. Compositional methods improve interpretability, but most rely on a single agent or hand-crafted pipeline and cannot decide when to collaborate across complementary agents or compete among overlapping ones. We introduce MATA (Multi-Agent hierarchical Trainable Automaton), a multi-agent system presented as a hierarchical finite-state automaton for visual reasoning whose top-level transitions are chosen by a trainable hyper agent. Each agent corresponds to a state in the hyper automaton and runs a small rule-based sub-automaton for reliable micro-control. All agents read and write a shared memory, yielding a transparent execution history. To supervise the hyper agent's transition policy, we build transition-trajectory trees and transform them into memory-to-next-state pairs, forming the MATA-SFT-90K dataset for supervised finetuning (SFT). The finetuned LLM, acting as the transition policy, understands the query and the capacity of agents, and it can efficiently choose the optimal agent to solve the task. Across multiple visual reasoning benchmarks, MATA achieves state-of-the-art results compared with monolithic and compositional baselines. The code and dataset are available at https://github.com/ControlNet/MATA.
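As a rough illustration of the control flow described in this abstract, the following is a minimal sketch of agents as states, a shared memory, and a transition policy that picks the next state from the memory. It is not the MATA implementation: the agent names (`locate`, `answer`), the `"FINAL"` state label, and the hand-written `toy_policy` (standing in for the finetuned LLM) are all assumptions made for this example.

```python
from typing import Callable, Dict, List

Memory = List[str]  # shared, append-only execution history


def run_automaton(
    agents: Dict[str, Callable[[Memory], None]],
    policy: Callable[[Memory], str],
    memory: Memory,
    max_steps: int = 10,
) -> Memory:
    """Repeatedly ask the policy for the next state and run that agent."""
    state = policy(memory)
    steps = 0
    while state != "FINAL" and steps < max_steps:
        agents[state](memory)   # the agent reads and writes the shared memory
        state = policy(memory)  # top-level transition chosen from the memory
        steps += 1
    return memory


# Hypothetical agents: each one appends its result to the shared memory.
def locate(memory: Memory) -> None:
    memory.append("locate: found 2 cats")


def answer(memory: Memory) -> None:
    memory.append("answer: 2")


# Hand-written stand-in for the trained transition policy (an assumption).
def toy_policy(memory: Memory) -> str:
    if not any(entry.startswith("locate") for entry in memory):
        return "locate"
    if not any(entry.startswith("answer") for entry in memory):
        return "answer"
    return "FINAL"


history = run_automaton(
    {"locate": locate, "answer": answer},
    toy_policy,
    ["query: how many cats?"],
)
```

After the run, `history` holds the query followed by one entry per agent call, which is the kind of transparent execution trace the abstract refers to.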

Most General Explanations of Tree Ensembles

May 19, 2025

Abstract: Explainable Artificial Intelligence (XAI) is critical for attaining trust in the operation of AI systems. A key question of an AI system is "why was this decision made this way". Formal approaches to XAI use a formal model of the AI system to identify abductive explanations. While abductive explanations may be applicable to a large number of inputs sharing the same concrete values, more general explanations may be preferred for numeric inputs. So-called inflated abductive explanations give intervals for each feature, ensuring that any input whose values fall within these intervals is still guaranteed to make the same prediction. Inflated explanations cover a larger portion of the input space, and hence are deemed more general explanations. But there can be many (inflated) abductive explanations for an instance. Which is the best? In this paper, we show how to find a most general abductive explanation for an AI decision. This explanation covers as much of the input space as possible, while still being a correct formal explanation of the model's behaviour. Given that we only want to give a human one explanation for a decision, the most general explanation gives us the explanation with the broadest applicability, and hence the one most likely to seem sensible. (The paper has been accepted at the IJCAI 2025 conference.)
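To make the notion of interval-based explanations concrete, here is a small sketch that checks whether an input falls inside an explanation's intervals and measures how much of a box-shaped input domain the explanation covers. It only illustrates the idea of "more general"; it is not the paper's algorithm, and the feature names, the domain bounds, and the helper names `covers` and `coverage` are assumptions for this example.

```python
from math import prod


def covers(explanation, x):
    """True if every constrained feature of x lies in its closed interval."""
    return all(lo <= x[f] <= hi for f, (lo, hi) in explanation.items())


def coverage(explanation, domain):
    """Fraction of a box-shaped domain covered by the explanation.

    Features missing from the explanation are unconstrained, so they
    contribute a factor of 1.
    """
    return prod(
        (min(hi, domain[f][1]) - max(lo, domain[f][0]))
        / (domain[f][1] - domain[f][0])
        for f, (lo, hi) in explanation.items()
    )


# Hypothetical feature domain and two candidate explanations.
domain = {"age": (0.0, 100.0), "income": (0.0, 10.0)}
narrow = {"age": (30.0, 40.0), "income": (2.0, 4.0)}
wide = {"age": (20.0, 60.0)}  # income is left free

point = {"age": 35.0, "income": 9.0}
most_general = max([narrow, wide], key=lambda e: coverage(e, domain))
```

Here `wide` covers a larger share of the domain than `narrow`, so under this simple volume measure it would be the preferred explanation of the two.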

Hier-SLAM++: Neuro-Symbolic Semantic SLAM with a Hierarchically Categorical Gaussian Splatting

Feb 20, 2025

Abstract: We propose Hier-SLAM++, a comprehensive Neuro-Symbolic semantic 3D Gaussian Splatting SLAM method with both RGB-D and monocular input featuring an advanced hierarchical categorical representation, which enables accurate pose estimation as well as global 3D semantic mapping. The parameter usage in semantic SLAM systems increases significantly with the growing complexity of the environment, making scene understanding particularly challenging and costly. To address this problem, we introduce a novel and general hierarchical representation that encodes both semantic and geometric information in a compact form into 3D Gaussian Splatting, leveraging the capabilities of large language models (LLMs) as well as the 3D generative model. By utilizing the proposed hierarchical tree structure, semantic information is symbolically represented and learned in an end-to-end manner. We further introduce a novel semantic loss designed to optimize hierarchical semantic information through both inter-level and cross-level optimization. Additionally, we propose an improved SLAM system to support both RGB-D and monocular inputs using a feed-forward model. To the best of our knowledge, this is the first semantic monocular Gaussian Splatting SLAM system, significantly reducing sensor requirements for 3D semantic understanding and broadening the applicability of semantic Gaussian SLAM systems. We conduct experiments on both synthetic and real-world datasets, demonstrating superior or on-par performance with state-of-the-art NeRF-based and Gaussian-based SLAM systems, while significantly reducing storage and training time requirements.

Timetable Nodes for Public Transport Network

Oct 21, 2024

Abstract: Faster pathfinding in time-dependent transport networks is an important and challenging problem in navigation systems. There are two main types of transport networks: road networks for car driving and public transport route networks. The solutions that work well in road networks, such as Time-dependent Contraction Hierarchies and other graph-based approaches, do not usually apply in transport networks. In transport networks, non-graph solutions such as CSA and RAPTOR show the best results compared to graph-based techniques. In our work, we propose a method that advances graph-based approaches by using different optimization techniques from computational geometry to speed up the search process in transport networks. We apply a new pre-computation step, which we call timetable nodes (TTN). Our inspiration comes from an iterative search problem in computational geometry. We implement two versions of the TTN: one uses a Combined Search Tree (TTN-CST), and the second uses Fractional Cascading (TTN-FC). Both of these approaches decrease the asymptotic complexity of reaching new nodes from $O(k\times \log|C|)$ to $O(k + \log(k) + \log(|C|))$, where $k$ is the number of outgoing edges from a node and $|C|$ is the size of the timetable information (total outgoing edges). Our solution suits any other time-dependent network and can be integrated into other pathfinding algorithms. Our experiments indicate that this pre-computation significantly enhances the performance on high-density graphs. This study showcases how leveraging computational geometry can enhance pathfinding in transport networks, enabling faster pathfinding in scenarios involving large numbers of outgoing edges.
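For context on the $O(k\times \log|C|)$ figure, the sketch below shows the kind of baseline lookup it describes: one independent binary search per outgoing edge to find the earliest departure at or after the current time. This is the baseline only, not TTN-CST or TTN-FC; the function name `next_departures` and the timetable layout (a dict from edge label to sorted departure times) are assumptions for this example.

```python
from bisect import bisect_left


def next_departures(timetables, t):
    """For each outgoing edge, find the earliest departure time >= t.

    Runs one binary search per edge, so k edges cost k separate searches.
    Returns None for an edge with no departure at or after t.
    """
    result = {}
    for edge, departures in timetables.items():
        i = bisect_left(departures, t)  # departures must be sorted
        result[edge] = departures[i] if i < len(departures) else None
    return result


# Hypothetical timetable for the outgoing edges of one node.
timetables = {"A->B": [5, 15, 25], "A->C": [10, 20]}
at_12 = next_departures(timetables, 12)
at_30 = next_departures(timetables, 30)
```

The repeated searches over related sorted lists are exactly the pattern that techniques such as fractional cascading are designed to speed up.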

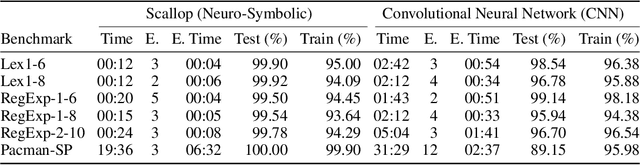

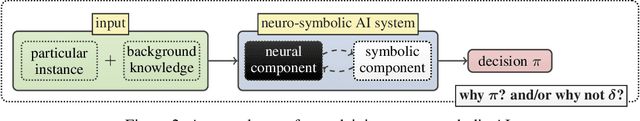

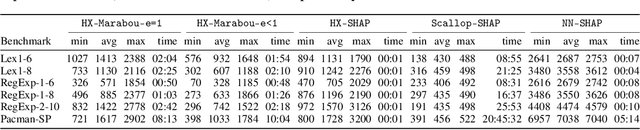

Formal Explanations for Neuro-Symbolic AI

Oct 18, 2024

Abstract: Despite the practical success of Artificial Intelligence (AI), current neural AI algorithms face two significant issues. First, the decisions made by neural architectures are often prone to bias and brittleness. Second, when a chain of reasoning is required, neural systems often perform poorly. Neuro-symbolic artificial intelligence is a promising approach that tackles these (and other) weaknesses by combining the power of neural perception and symbolic reasoning. Meanwhile, the success of AI has made it critical to understand its behaviour, leading to the development of explainable artificial intelligence (XAI). While neuro-symbolic AI systems have important advantages over purely neural AI, we still need to explain their actions, which are obscured by the interactions of the neural and symbolic components. To address the issue, this paper proposes a formal approach to explaining the decisions of neuro-symbolic systems. The approach hinges on the use of formal abductive explanations and on solving the neuro-symbolic explainability problem hierarchically. Namely, it first computes a formal explanation for the symbolic component of the system, which serves to identify a subset of the individual parts of neural information that needs to be explained. This is followed by explaining only those individual neural inputs, independently of each other, which facilitates succinctness of hierarchical formal explanations and helps to increase the overall performance of the approach. Experimental results for a few complex reasoning tasks demonstrate the practical efficiency of the proposed approach, in comparison to purely neural systems, from the perspective of explanation size, explanation time, training time, model sizes, and the quality of explanations reported.

Anytime Approximate Formal Feature Attribution

Dec 12, 2023

Abstract: The widespread use of artificial intelligence (AI) algorithms and machine learning (ML) models, on the one hand, and a number of crucial issues pertaining to them, on the other, warrant the need for explainable artificial intelligence (XAI). A key explainability question is: given this decision was made, what are the input features which contributed to the decision? Although a range of XAI approaches exist to tackle this problem, most of them have significant limitations. Heuristic XAI approaches suffer from the lack of quality guarantees, and often try to approximate Shapley values, which is not the same as explaining which features contribute to a decision. A recent alternative is so-called formal feature attribution (FFA), which defines feature importance as the fraction of formal abductive explanations (AXp's) containing the given feature. This measures feature importance from the view of formally reasoning about the model's behavior. It is challenging to compute FFA using its definition because that involves counting AXp's, although one can approximate it. Based on these results, this paper makes several contributions. First, it gives compelling evidence that computing FFA is intractable, even if the set of contrastive formal explanations (CXp's) is provided, by proving that the problem is #P-hard. Second, by using the duality between AXp's and CXp's, it proposes an efficient heuristic to switch from CXp enumeration to AXp enumeration on-the-fly, resulting in an adaptive explanation enumeration algorithm effectively approximating FFA in an anytime fashion. Finally, experimental results obtained on a range of widely used datasets demonstrate the effectiveness of the proposed FFA approximation approach in terms of the error of FFA approximation as well as the number of explanations computed and their diversity given a fixed time limit.
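The FFA definition quoted in this abstract (the fraction of AXp's containing a given feature) can be written down directly once a set of AXp's is available. The sketch below assumes the explanations have already been enumerated by some other means; the function name `ffa` and the toy feature names are assumptions for this example. Applied to a partial enumeration, the same computation gives an approximation rather than the exact value.

```python
from collections import Counter


def ffa(axps):
    """Formal feature attribution from a collection of AXp's.

    Each AXp is an iterable of feature names. The score of a feature is
    the fraction of the given AXp's that contain it. Features appearing
    in no explanation are omitted (their score is 0).
    """
    axps = [set(axp) for axp in axps]
    counts = Counter(feature for axp in axps for feature in axp)
    total = len(axps)
    return {feature: count / total for feature, count in counts.items()}


# Hypothetical set of four abductive explanations over features a, b, c.
explanations = [{"a", "b"}, {"a", "c"}, {"a"}, {"b", "c"}]
scores = ffa(explanations)
```

In this toy case feature `a` appears in three of the four explanations and so receives the highest attribution.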

Traffic Flow Optimisation for Lifelong Multi-Agent Path Finding

Aug 23, 2023

Abstract: Multi-Agent Path Finding (MAPF) is a fundamental problem in robotics that asks us to compute collision-free paths for a team of agents, all moving across a shared map. Although many works appear on this topic, all current algorithms struggle as the number of agents grows. The principal reason is that existing approaches typically plan free-flow optimal paths, which creates congestion. To tackle this issue we propose a new approach for MAPF where agents are guided to their destination by following congestion-avoiding paths. We evaluate the idea in two large-scale settings: one-shot MAPF, where each agent has a single destination, and lifelong MAPF, where agents are continuously assigned new tasks. For one-shot MAPF we show that our approach substantially improves solution quality. For lifelong MAPF we report large improvements in overall throughput.

The divergence time of protein structures modelled by Markov matrices and its relation to the divergence of sequences

Aug 11, 2023

Abstract: A complete time-parameterized statistical model quantifying the divergent evolution of protein structures in terms of the patterns of conservation of their secondary structures is inferred from a large collection of protein 3D structure alignments. This provides a better alternative to time-parameterized sequence-based models of protein relatedness, which have clear limitations dealing with twilight and midnight zones of sequence relationships. Since protein structures are far more conserved due to the selection pressure directly placed on their function, divergence time estimates can be more accurate when inferred from structures. We use the Bayesian and information-theoretic framework of Minimum Message Length to infer a time-parameterized stochastic matrix (accounting for perturbed structural states of related residues) and associated Dirichlet models (accounting for insertions and deletions during the evolution of protein domains). These are used in concert to estimate the Markov time of divergence of tertiary structures, a task previously only possible using proxies (like RMSD). By analyzing one million pairs of homologous structures, we obtain a relationship between the Markov divergence time of structures and of sequences. Using these inferred models and the relationship between the divergence of sequences and structures, we demonstrate a competitive performance in secondary structure prediction against neural network architectures commonly employed for this task. The source code and supplementary information are downloadable from http://lcb.infotech.monash.edu.au/sstsum.

Lifted Sequential Planning with Lazy Constraint Generation Solvers

Jul 17, 2023

Abstract: This paper studies the possibilities made open by the use of Lazy Clause Generation (LCG) based approaches to Constraint Programming (CP) for tackling sequential classical planning. We propose a novel CP model based on seminal ideas on so-called lifted causal encodings for planning as satisfiability, that does not require grounding, as choosing groundings for functions and action schemas becomes an integral part of the problem of designing valid plans. This encoding does not require encoding frame axioms, and does not explicitly represent states as decision variables for every plan step. We also present a propagator procedure that illustrates the possibilities of LCG to widen the kind of inference methods considered to be feasible in planning as (iterated) CSP solving. We test encodings and propagators over classic IPC and recently proposed benchmarks for lifted planning, and report that for planning problem instances requiring fewer plan steps our methods compare very well with the state-of-the-art in optimal sequential planning.

On Formal Feature Attribution and Its Approximation

Jul 14, 2023

Abstract: Recent years have witnessed the widespread use of artificial intelligence (AI) algorithms and machine learning (ML) models. Despite their tremendous success, a number of vital problems like ML model brittleness, their fairness, and the lack of interpretability warrant the need for active developments in explainable artificial intelligence (XAI) and formal ML model verification. The two major lines of work in XAI include feature selection methods, e.g. Anchors, and feature attribution techniques, e.g. LIME and SHAP. Despite their promise, most of the existing feature selection and attribution approaches are susceptible to a range of critical issues, including explanation unsoundness and out-of-distribution sampling. A recent formal approach to XAI (FXAI), although serving as an alternative to the above and free of these issues, suffers from a few other limitations. For instance, besides the scalability limitation, the formal approach is unable to tackle the feature attribution problem. Additionally, a formal explanation, despite being formally sound, is typically quite large, which hampers its applicability in practical settings. Motivated by the above, this paper proposes a way to apply the apparatus of formal XAI to the case of feature attribution based on formal explanation enumeration. Formal feature attribution (FFA) is argued to be advantageous over the existing methods, both formal and non-formal. Given the practical complexity of the problem, the paper then proposes an efficient technique for approximating exact FFA. Finally, it offers experimental evidence of the effectiveness of the proposed approximate FFA in comparison to the existing feature attribution algorithms not only in terms of feature importance but also in terms of their relative order.
