Miquel Ramirez

Temporal Planning via Interval Logic Satisfiability for Autonomous Systems

Jun 14, 2024

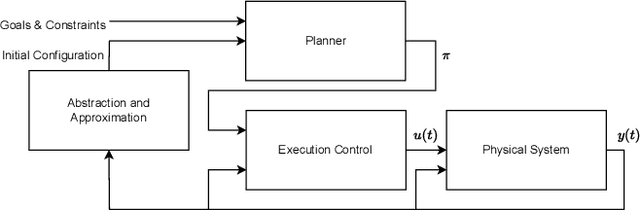

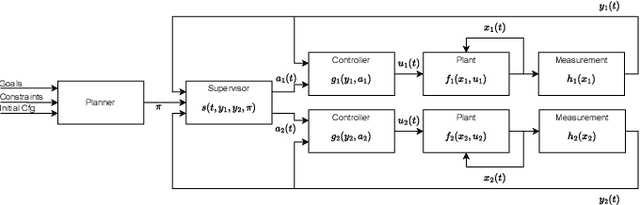

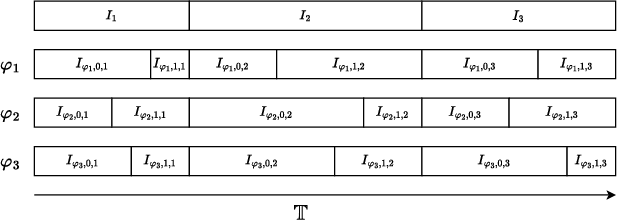

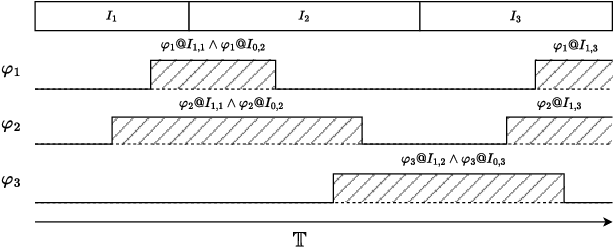

Abstract: Many automated planning methods and formulations rely on suitably designed abstractions or simplifications of the constrained dynamics associated with agents to attain computational scalability. We consider formulations of temporal planning where intervals are associated with both action and fluent atoms, and relations between these are given as sentences in Allen's Interval Logic. We propose a notion of planning graphs that can account for complex concurrency relations between actions and fluents as a Constraint Programming (CP) model. We test an implementation of our algorithm on a state-of-the-art framework for CP and compare it with PDDL 2.1 planners that capture plans requiring complex concurrent interactions between agents. We demonstrate that our algorithm outperforms existing PDDL 2.1 planners in the case studies. Still, scalability remains challenging when plans must comply with intricate concurrent interactions and the sequencing of actions.
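
As a rough illustration of the kind of relation that sentences in Allen's Interval Logic talk about, the sketch below classifies a pair of intervals into one of the thirteen basic Allen relations. It is not the CP model from the paper; the function name `allen_relation` and the `(start, end)` tuple representation are assumptions made for this example only.

```python
from typing import Tuple

# Hypothetical representation: an interval is (start, end) with start < end.
Interval = Tuple[float, float]


def allen_relation(a: Interval, b: Interval) -> str:
    """Return the unique basic Allen relation that holds from a to b."""
    (a_start, a_end), (b_start, b_end) = a, b
    if not (a_start < a_end and b_start < b_end):
        raise ValueError("intervals must have positive duration")
    # Disjoint or touching intervals.
    if a_end < b_start:
        return "before"
    if b_end < a_start:
        return "after"
    if a_end == b_start:
        return "meets"
    if b_end == a_start:
        return "met-by"
    # Intervals sharing one or both endpoints.
    if a_start == b_start and a_end == b_end:
        return "equals"
    if a_start == b_start:
        return "starts" if a_end < b_end else "started-by"
    if a_end == b_end:
        return "finishes" if a_start > b_start else "finished-by"
    # Proper containment or partial overlap.
    if b_start < a_start and a_end < b_end:
        return "during"
    if a_start < b_start and b_end < a_end:
        return "contains"
    return "overlaps" if a_start < b_start else "overlapped-by"


# Example: an action interval that ends exactly when a fluent interval begins.
print(allen_relation((0, 2), (2, 5)))
```

A temporal constraint such as "the action must occur during the fluent" then amounts to requiring that `allen_relation(action, fluent)` returns `"during"`.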

Lifted Sequential Planning with Lazy Constraint Generation Solvers

Jul 17, 2023

Abstract: This paper studies the possibilities opened up by Lazy Clause Generation (LCG) based approaches to Constraint Programming (CP) for tackling sequential classical planning. We propose a novel CP model, based on seminal ideas on so-called lifted causal encodings for planning as satisfiability, that does not require grounding, as choosing groundings for functions and action schemas becomes an integral part of the problem of designing valid plans. This encoding does not require frame axioms and does not explicitly represent states as decision variables for every plan step. We also present a propagator procedure that illustrates the possibilities of LCG to widen the kind of inference methods considered to be feasible in planning as (iterated) CSP solving. We test encodings and propagators over classic IPC and recently proposed benchmarks for lifted planning, and report that for planning problem instances requiring fewer plan steps our methods compare very well with the state-of-the-art in optimal sequential planning.

Lazy Probabilistic Roadmaps Revisited

Sep 28, 2022

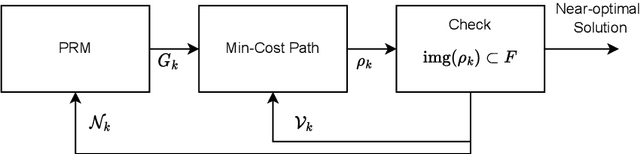

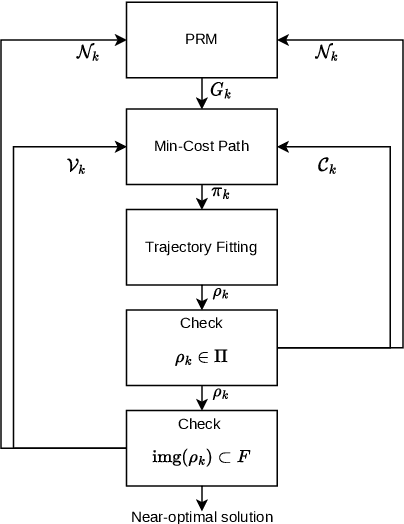

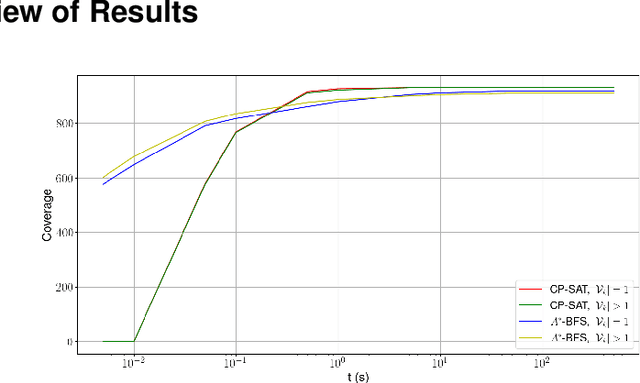

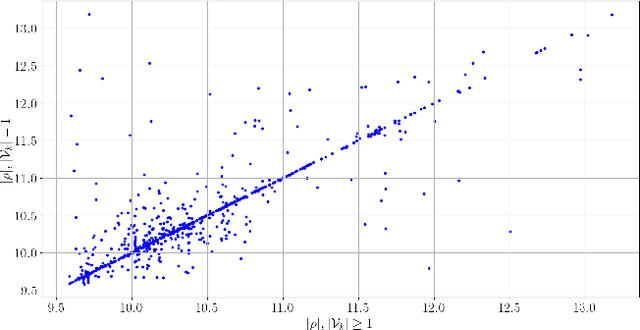

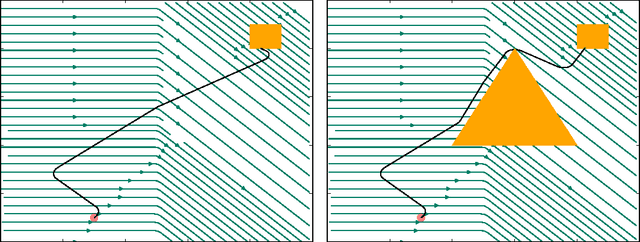

Abstract: This paper describes a revision of the classic Lazy Probabilistic Roadmaps algorithm (Lazy PRM) that results from pairing PRM with a novel Branch-and-Cut (BC) algorithm. Cuts are dynamically generated constraints that are imposed on minimum-cost paths over the geometric graphs selected by PRM. Cuts eliminate paths that cannot be mapped into smooth plans that satisfy suitably defined kinematic constraints. We generate candidate smooth plans by fitting splines to the vertices in minimum-cost paths. Plans are validated with a recently proposed algorithm that maps them into finite traces, without needing to choose a fixed discretization step. Trace elements exactly describe when plans cross constraint boundaries modulo arithmetic precision. We evaluate several planners using our methods over the recently proposed BARN benchmark, and we report evidence of the scalability of our approach.
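
For readers unfamiliar with the lazy evaluation idea behind the classic Lazy PRM, the following is a minimal sketch of that classic loop only: find a minimum-cost path, validate its edges, discard the invalid ones, and repeat. It does not implement the Branch-and-Cut algorithm, spline fitting, or trace-based validation described in the abstract. The names `shortest_path`, `lazy_shortest_valid_path`, and `edge_is_valid` are hypothetical.

```python
import heapq
from itertools import count
from typing import Callable, Dict, List, Optional

# Hypothetical roadmap: undirected graph with graph[u][v] = edge cost.
Graph = Dict[str, Dict[str, float]]


def shortest_path(graph: Graph, start: str, goal: str) -> Optional[List[str]]:
    """Dijkstra's algorithm; returns a minimum-cost path or None."""
    dist = {start: 0.0}
    parent: Dict[str, str] = {}
    tie = count()  # tie-breaker so the heap never compares nodes
    queue = [(0.0, next(tie), start)]
    while queue:
        d, _, u = heapq.heappop(queue)
        if u == goal:
            path = [u]
            while path[-1] != start:
                path.append(parent[path[-1]])
            return path[::-1]
        if d > dist.get(u, float("inf")):
            continue  # stale queue entry
        for v, w in graph[u].items():
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                parent[v] = u
                heapq.heappush(queue, (nd, next(tie), v))
    return None


def lazy_shortest_valid_path(
    graph: Graph, start: str, goal: str,
    edge_is_valid: Callable[[str, str], bool],
) -> Optional[List[str]]:
    """Validate edges only along candidate paths, removing invalid ones."""
    graph = {u: dict(nbrs) for u, nbrs in graph.items()}  # work on a copy
    while True:
        path = shortest_path(graph, start, goal)
        if path is None:
            return None
        bad = [(u, v) for u, v in zip(path, path[1:]) if not edge_is_valid(u, v)]
        if not bad:
            return path
        for u, v in bad:
            graph[u].pop(v, None)
            graph[v].pop(u, None)


# Toy roadmap in which the cheap edge a-g turns out to be blocked.
roadmap = {
    "s": {"a": 1.0, "b": 2.0},
    "a": {"s": 1.0, "g": 1.0},
    "b": {"s": 2.0, "g": 2.0},
    "g": {"a": 1.0, "b": 2.0},
}
blocked = {frozenset(("a", "g"))}
print(lazy_shortest_valid_path(
    roadmap, "s", "g", lambda u, v: frozenset((u, v)) not in blocked))
```

The point of the lazy scheme is that the expensive validity check runs only on edges that appear in some candidate minimum-cost path, not on every edge of the roadmap.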

Sampling from Pre-Images to Learn Heuristic Functions for Classical Planning

Jul 07, 2022

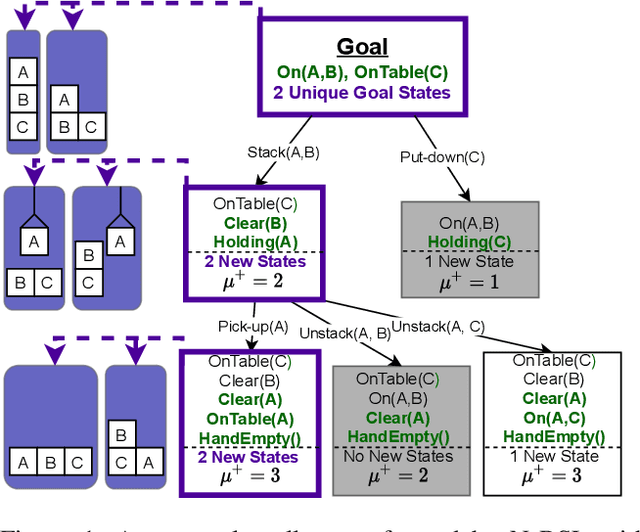

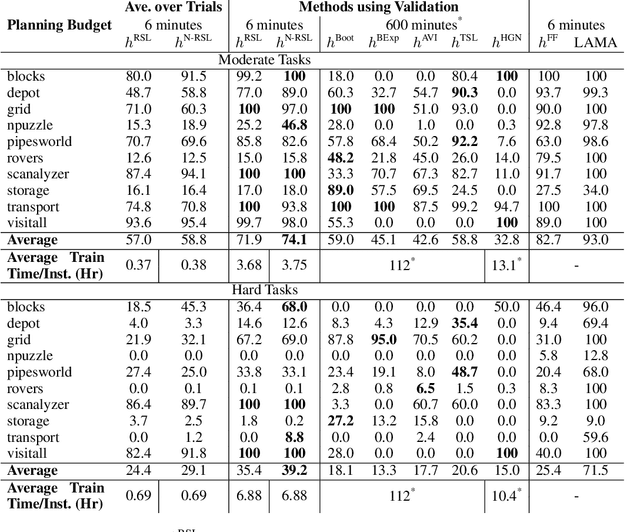

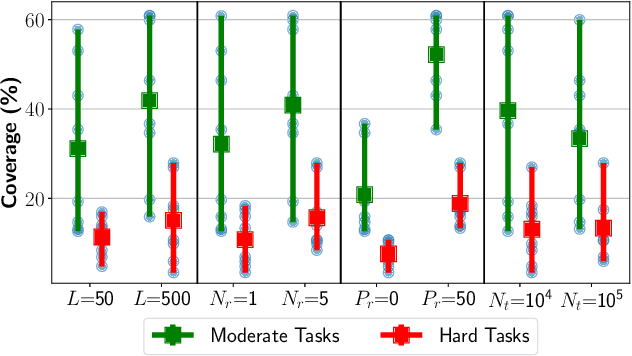

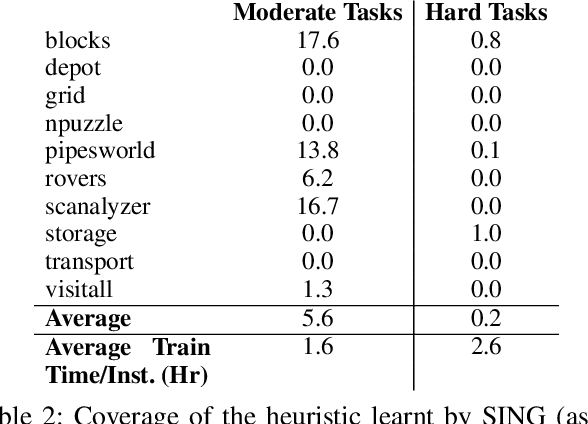

Abstract: We introduce a new algorithm, Regression based Supervised Learning (RSL), for learning per-instance Neural Network (NN) defined heuristic functions for classical planning problems. RSL uses regression to select relevant sets of states at a range of different distances from the goal. RSL then formulates a Supervised Learning problem to obtain the parameters that define the NN heuristic, using the selected states labeled with exact or estimated distances to goal states. Our experimental study shows that RSL outperforms, in terms of coverage, previous classical planning NN heuristic functions while requiring two orders of magnitude less training time.
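
The regression step mentioned above can be pictured with the textbook STRIPS regression rule: a subgoal is regressed through an action that adds at least one of its atoms and deletes none of them. The sketch below shows only that standard rule, under the assumption of a propositional STRIPS encoding; it is not RSL itself, and the `Action` type and `regress` function are names invented for this example.

```python
from typing import FrozenSet, NamedTuple, Optional


class Action(NamedTuple):
    """Hypothetical propositional STRIPS action."""
    name: str
    pre: FrozenSet[str]
    add: FrozenSet[str]
    delete: FrozenSet[str]


def regress(subgoal: FrozenSet[str], action: Action) -> Optional[FrozenSet[str]]:
    """Return the pre-image of subgoal under action, or None if not applicable."""
    if not (action.add & subgoal):
        return None  # the action contributes nothing to the subgoal
    if action.delete & subgoal:
        return None  # the action would destroy part of the subgoal
    return (subgoal - action.add) | action.pre


move = Action(
    name="move(a,b)",
    pre=frozenset({"at(a)"}),
    add=frozenset({"at(b)"}),
    delete=frozenset({"at(a)"}),
)
goal = frozenset({"at(b)", "have(key)"})

# One regression step yields a set of atoms one action away from the goal.
print(sorted(regress(goal, move)))
```

Applying such steps repeatedly from the goal produces partial states tagged with the number of regression steps taken, which is one plausible way to obtain distance-labeled training examples.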

Width-based Lookaheads with Learnt Base Policies and Heuristics Over the Atari-2600 Benchmark

Jun 23, 2021

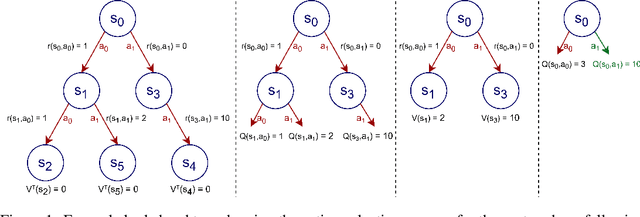

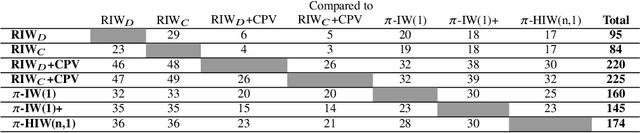

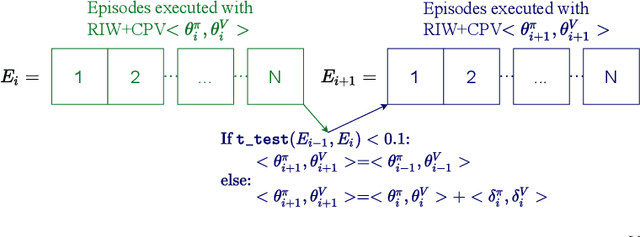

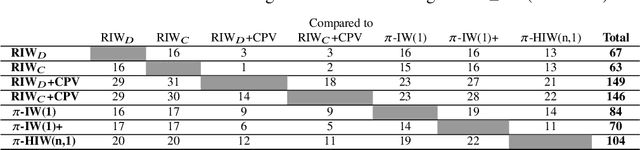

Abstract: We propose new width-based planning and learning algorithms applied over the Atari-2600 benchmark. The algorithms presented are inspired by a careful analysis of the design decisions made by previous width-based planners. We benchmark our new algorithms over the Atari-2600 games and show that our best performing algorithm, RIW$_C$+CPV, outperforms previously introduced width-based planning and learning algorithms $\pi$-IW(1), $\pi$-IW(1)+ and $\pi$-HIW(n, 1). Furthermore, we present a taxonomy of the set of Atari-2600 games according to some of their defining characteristics. This analysis of the games provides further insight into the behaviour and performance of the width-based algorithms introduced. Namely, for games with large branching factors, and games with sparse meaningful rewards, RIW$_C$+CPV outperforms $\pi$-IW, $\pi$-IW(1)+ and $\pi$-HIW(n, 1).

Approximate Novelty Search

May 17, 2021

Abstract: Width-based search algorithms seek plans by prioritizing states according to a suitably defined measure of novelty that maps states into a set of novelty categories. The space and time complexity of evaluating state novelty is known to be exponential in the cardinality of the set. We present novel methods to obtain polynomial approximations of novelty and width-based search. First, we approximate novelty computation via random sampling and Bloom filters, reducing the runtime and memory footprint. Second, we approximate the best-first search using an adaptive policy that decides whether to forgo the expansion of nodes in the open list. These two techniques are integrated into existing width-based algorithms, resulting in new planners that perform significantly better than other state-of-the-art planners over benchmarks from the International Planning Competitions.
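
To give a feel for how a Bloom filter can stand in for an exact table of previously seen atoms, here is a minimal sketch of a novelty-1 test (a state is novel if it contains an atom not seen before). It is an assumption-laden illustration, not the method of the paper: the `BloomFilter` class, its default sizes, the SHA-256 based hashing, and `is_novel` are all choices made for this example, and the random sampling component is left out.

```python
import hashlib
from typing import Hashable, Iterable, Iterator


class BloomFilter:
    """Fixed-size bit array with several hash positions per item."""

    def __init__(self, num_bits: int = 1 << 16, num_hashes: int = 3) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits // 8 + 1)

    def _positions(self, item: Hashable) -> Iterator[int]:
        # Derive num_hashes positions by salting the item with an index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item!r}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item: Hashable) -> None:
        for p in self._positions(item):
            self.bits[p // 8] |= 1 << (p % 8)

    def __contains__(self, item: Hashable) -> bool:
        # May report false positives, never false negatives.
        return all(self.bits[p // 8] & (1 << (p % 8)) for p in self._positions(item))


def is_novel(state_atoms: Iterable[Hashable], seen: BloomFilter) -> bool:
    """Approximate novelty-1 test; records every atom of the state as seen."""
    novel = False
    for atom in state_atoms:
        if atom not in seen:
            novel = True
            seen.add(atom)
    return novel


seen = BloomFilter()
print(is_novel({"at(a)", "clear(b)"}, seen))  # first state seen so far
print(is_novel({"at(a)"}, seen))              # contains only known atoms
```

Because a Bloom filter can return false positives, this test may occasionally label a genuinely novel state as not novel; in exchange, memory use is fixed by `num_bits` rather than growing with the number of atoms or atom tuples.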

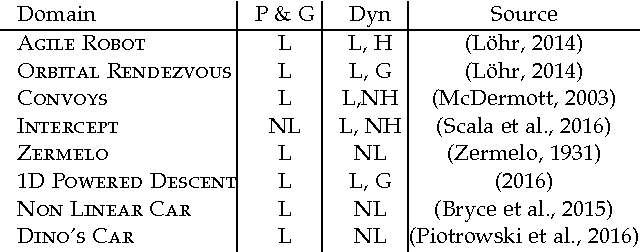

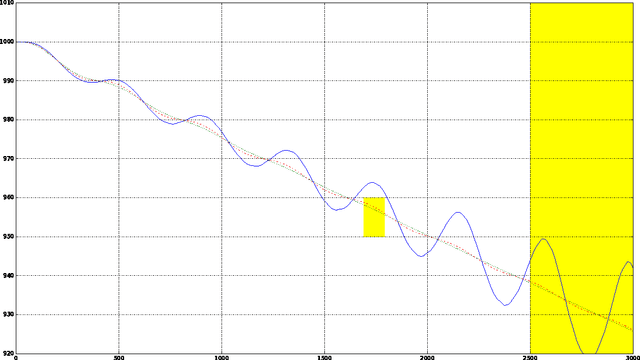

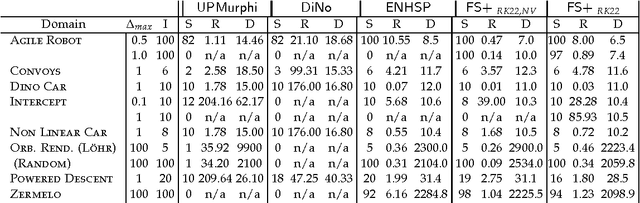

Numerical Integration and Dynamic Discretization in Heuristic Search Planning over Hybrid Domains

Mar 13, 2017

Abstract: In this paper we look into the problem of planning over hybrid domains, where change can be both discrete and instantaneous, or continuous over time. In addition, it is required that each state on the trajectory induced by the execution of plans complies with a given set of global constraints. We approach the computation of plans for such domains as the problem of searching over a deterministic state model. In this model, some of the successor states are obtained by numerically solving the so-called initial value problem over a set of ordinary differential equations (ODEs) given by the current plan prefix. These equations hold over time intervals whose duration is determined dynamically, according to whether zero-crossing events take place for a set of invariant conditions. The resulting planner, FS+, incorporates these features together with effective heuristic guidance. FS+ does not impose any of the syntactic restrictions on process effects often found in the existing literature on Hybrid Planning. A key concept of our approach is that a clear separation is struck between planning and simulation time steps. The former is the time allowed to observe the evolution of a given dynamical system before committing to a future course of action, whilst the latter is part of the model of the environment. FS+ is shown to be a robust planner over a diverse set of hybrid domains, taken from the existing literature on hybrid planning and systems.
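
The idea of integrating an ODE until an invariant condition crosses zero can be sketched as follows. This is not FS+: it is a generic fixed-step Runge-Kutta (RK4) integrator with bisection on the final step, written under the assumption that the invariant holds while `g(x) > 0` and that it holds in the initial state. The names `rk4_step` and `integrate_until_event`, the step size, and the falling-ball example are all illustrative choices.

```python
from typing import Callable, List, Tuple

State = List[float]


def rk4_step(f: Callable[[State], State], x: State, h: float) -> State:
    """One classical Runge-Kutta step for the autonomous ODE x' = f(x)."""
    k1 = f(x)
    k2 = f([xi + 0.5 * h * k for xi, k in zip(x, k1)])
    k3 = f([xi + 0.5 * h * k for xi, k in zip(x, k2)])
    k4 = f([xi + h * k for xi, k in zip(x, k3)])
    return [xi + h / 6.0 * (a + 2 * b + 2 * c + d)
            for xi, a, b, c, d in zip(x, k1, k2, k3, k4)]


def integrate_until_event(
    f: Callable[[State], State], g: Callable[[State], float],
    x0: State, horizon: float, h: float = 0.01, tol: float = 1e-9,
) -> Tuple[float, State]:
    """Integrate from t = 0 until g(x) <= 0 or the horizon is reached.

    Assumes g(x0) > 0, i.e. the invariant holds initially.
    Returns the stopping time and the state at that time.
    """
    t, x = 0.0, list(x0)
    while t < horizon:
        step = min(h, horizon - t)
        x_next = rk4_step(f, x, step)
        if g(x_next) <= 0.0:
            # The invariant is violated inside this step: bisect on its length.
            lo, hi = 0.0, step
            while hi - lo > tol:
                mid = 0.5 * (lo + hi)
                if g(rk4_step(f, x, mid)) <= 0.0:
                    hi = mid
                else:
                    lo = mid
            return t + hi, rk4_step(f, x, hi)
        t, x = t + step, x_next
    return t, x


# Falling ball: state is [height, velocity]; the invariant is height > 0.
def ball(x: State) -> State:
    return [x[1], -9.81]


t_hit, x_hit = integrate_until_event(ball, lambda x: x[0], [10.0, 0.0], horizon=5.0)
print(t_hit, x_hit)
```

Here the duration of the interval over which the dynamics hold is not fixed in advance: it is whatever time elapses before the invariant `height > 0` stops holding, or the horizon if that never happens.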

Heuristics for Planning, Plan Recognition and Parsing

May 22, 2016

Abstract: In a recent paper, we have shown that Plan Recognition over STRIPS can be formulated and solved using Classical Planning heuristics and algorithms. In this work, we show that this formulation subsumes the standard formulation of Plan Recognition over libraries through a compilation of libraries into STRIPS theories. The libraries correspond to AND/OR graphs that may be cyclic and where children of AND nodes may be partially ordered. These libraries include Context-Free Grammars as a special case, where the Plan Recognition problem becomes a problem of parsing with missing tokens. Plan Recognition over the standard libraries becomes a Planning problem that can be easily solved by any modern planner, while recognition over more complex libraries, including Context-Free Grammars (CFGs), illustrates limitations of current Planning heuristics and suggests improvements that may be relevant in other Planning problems too.
