Adrian R. Pearce

Strategy Extraction in Single-Agent Games

May 22, 2023

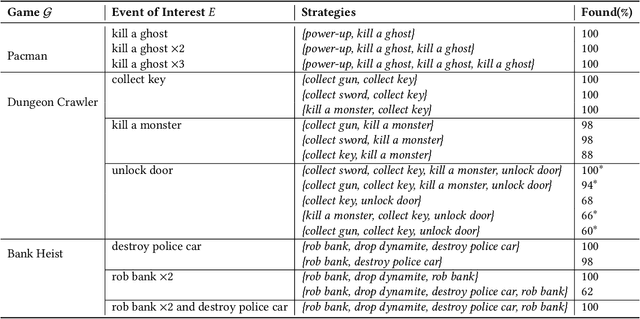

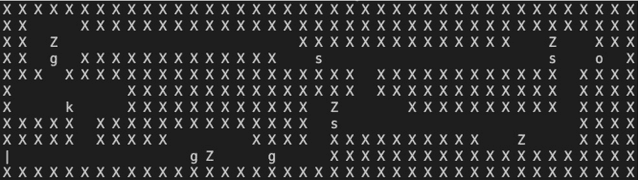

Abstract: Continuously learning and adapting to new situations is an ability in which humans remain far superior to AI agents. We propose an approach to knowledge transfer that uses behavioural strategies as a form of transferable knowledge, inspired by the human cognitive ability to develop strategies. A strategy is defined as a partial sequence of events that reaches some predefined event of interest, where an event is both the result of an agent's action and the resulting changes in state. This information acts as guidance, or a partial solution, that an agent can generalise and use to make predictions about how to handle unknown observed phenomena. As a first step toward this goal, we develop a method for extracting strategies from an agent's existing knowledge that can be applied in multiple contexts. Our method combines observed event frequency information with local sequence alignment techniques to find significant patterns that form a strategy. We show that our method can identify plausible strategies in three environments: Pacman, Bank Heist and a dungeon-crawling video game. Our evaluation serves as a promising first step toward extracting knowledge for generalisation and, ultimately, transfer learning.
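The abstract names local sequence alignment as one ingredient of the extraction method. As a rough illustration only, the sketch below runs a textbook Smith-Waterman alignment over two event traces to recover the longest well-scoring shared run of events. It is not the authors' implementation: the event names, the scoring values (`match`, `mismatch`, `gap`) and the function name `local_align` are assumptions made for the example, and the event-frequency weighting mentioned in the abstract is not modelled.

```python
from typing import List, Sequence, Tuple


def local_align(
    a: Sequence[str],
    b: Sequence[str],
    match: int = 2,      # assumed reward for two identical events
    mismatch: int = -1,  # assumed penalty for two different events
    gap: int = -1,       # assumed penalty for skipping an event
) -> Tuple[int, List[str]]:
    """Textbook Smith-Waterman local alignment over two event traces.

    Returns the best local score and the events matched along that alignment.
    """
    rows, cols = len(a) + 1, len(b) + 1
    score = [[0] * cols for _ in range(rows)]
    best, best_pos = 0, (0, 0)

    # Fill the score matrix; scores are clamped at 0 so alignments can restart.
    for i in range(1, rows):
        for j in range(1, cols):
            sub = match if a[i - 1] == b[j - 1] else mismatch
            score[i][j] = max(
                0,
                score[i - 1][j - 1] + sub,
                score[i - 1][j] + gap,
                score[i][j - 1] + gap,
            )
            if score[i][j] > best:
                best, best_pos = score[i][j], (i, j)

    # Trace back from the best cell until the score drops to 0.
    i, j = best_pos
    shared: List[str] = []
    while i > 0 and j > 0 and score[i][j] > 0:
        sub = match if a[i - 1] == b[j - 1] else mismatch
        if score[i][j] == score[i - 1][j - 1] + sub:
            if a[i - 1] == b[j - 1]:
                shared.append(a[i - 1])
            i, j = i - 1, j - 1
        elif score[i][j] == score[i - 1][j] + gap:
            i -= 1
        else:
            j -= 1
    shared.reverse()
    return best, shared


# Hypothetical Pacman-style traces; the event names are invented for the example.
trace_1 = ["move", "see_ghost", "eat_pill", "chase_ghost", "eat_ghost"]
trace_2 = ["idle", "eat_pill", "chase_ghost", "eat_ghost", "move"]
best_score, shared_events = local_align(trace_1, trace_2)
print(best_score, shared_events)
```

Under these assumed scores, the shared run returned for the two traces is a candidate pattern; deciding which patterns are significant enough to count as a strategy is the part the paper addresses and this sketch does not.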

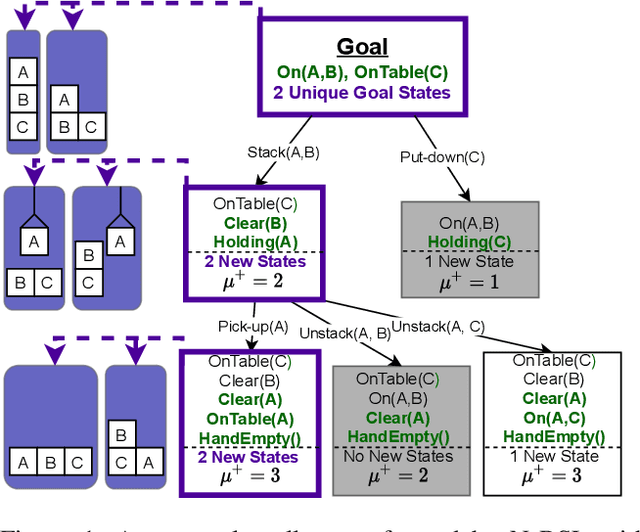

Sampling from Pre-Images to Learn Heuristic Functions for Classical Planning

Jul 07, 2022

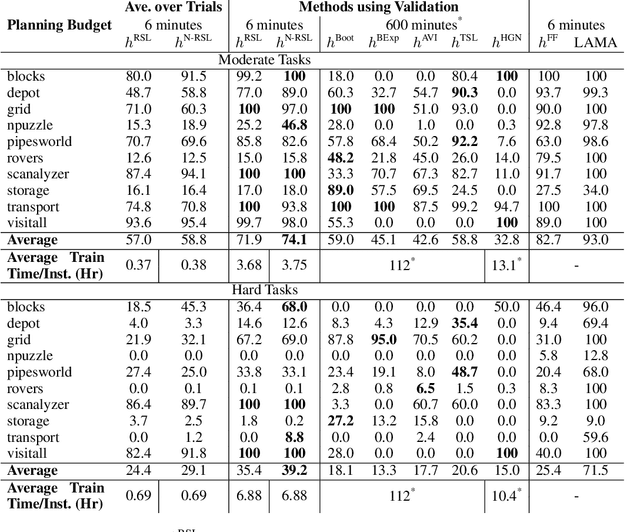

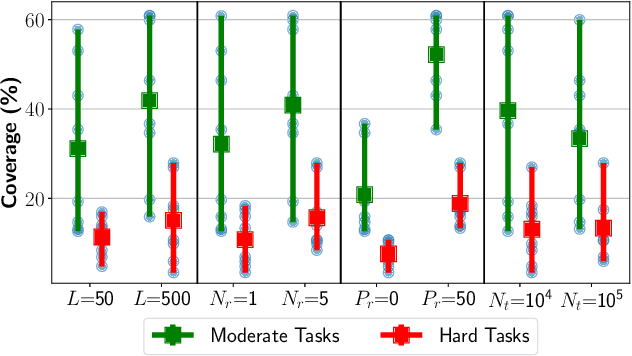

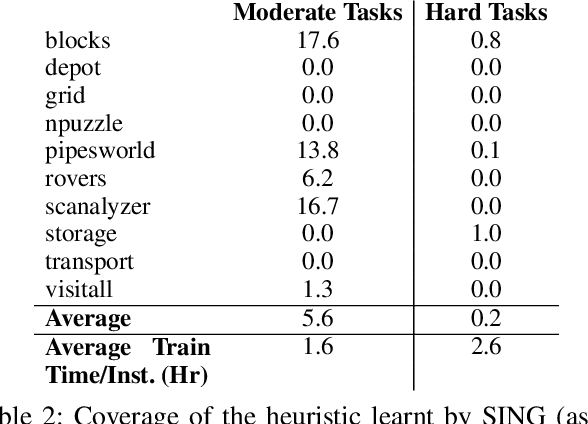

Abstract: We introduce a new algorithm, Regression based Supervised Learning (RSL), for learning per-instance heuristic functions, defined by a Neural Network (NN), for classical planning problems. RSL uses regression to select relevant sets of states at a range of different distances from the goal. RSL then formulates a Supervised Learning problem to obtain the parameters that define the NN heuristic, using the selected states labeled with exact or estimated distances to goal states. Our experimental study shows that RSL outperforms previous NN heuristic functions for classical planning in terms of coverage, while requiring two orders of magnitude less training time.
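To make the idea of collecting states by regression from the goal more concrete, here is a minimal sketch assuming a STRIPS-style encoding with unit action costs. It performs a breadth-first backward search from the goal and labels each regressed partial state with the depth at which it was first reached. This is standard textbook regression, not the RSL procedure itself: the `Action` type, the function names and the toy domain are all hypothetical, and the neural-network training step is omitted.

```python
from collections import deque
from typing import Dict, FrozenSet, List, NamedTuple, Optional


class Action(NamedTuple):
    """A STRIPS-style action (hypothetical encoding for this sketch)."""
    name: str
    pre: FrozenSet[str]
    add: FrozenSet[str]
    delete: FrozenSet[str]


def regress(subgoal: FrozenSet[str], action: Action) -> Optional[FrozenSet[str]]:
    """Regress a set of goal facts through an action, or return None.

    The action must achieve at least one fact of the subgoal and must not
    delete any of them.
    """
    if not (action.add & subgoal) or (action.delete & subgoal):
        return None
    return (subgoal - action.add) | action.pre


def sample_by_regression(
    goal: FrozenSet[str], actions: List[Action], max_depth: int
) -> Dict[FrozenSet[str], int]:
    """Breadth-first regression from the goal, labelling each partial state
    with the regression depth at which it was first reached."""
    labels: Dict[FrozenSet[str], int] = {goal: 0}
    frontier = deque([goal])
    while frontier:
        subgoal = frontier.popleft()
        depth = labels[subgoal]
        if depth == max_depth:
            continue
        for action in actions:
            previous = regress(subgoal, action)
            if previous is not None and previous not in labels:
                labels[previous] = depth + 1
                frontier.append(previous)
    return labels


# Toy domain: walk from location a to b to c (names invented for the example).
actions = [
    Action("move_a_b", frozenset({"at_a"}), frozenset({"at_b"}), frozenset({"at_a"})),
    Action("move_b_c", frozenset({"at_b"}), frozenset({"at_c"}), frozenset({"at_b"})),
]
training_pairs = sample_by_regression(frozenset({"at_c"}), actions, max_depth=5)
for partial_state, distance in training_pairs.items():
    print(sorted(partial_state), distance)
```

The resulting (partial state, depth) pairs stand in for the labelled examples a supervised learner would consume; how RSL actually selects states and estimates their distances is described in the paper, not here.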

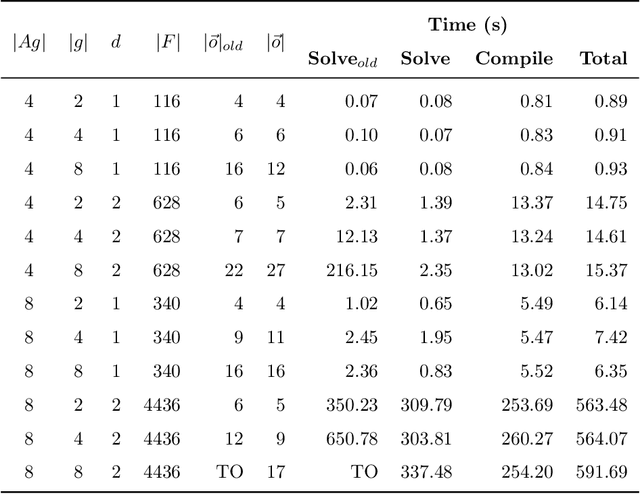

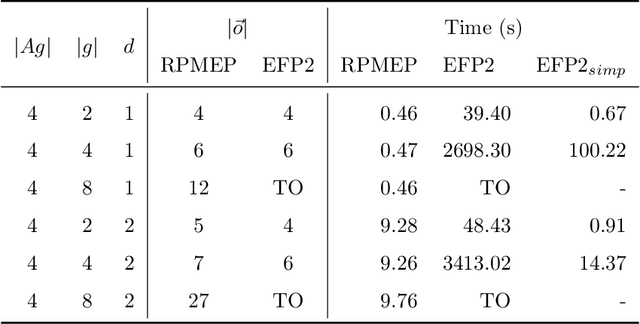

Efficient Multi-agent Epistemic Planning: Teaching Planners About Nested Belief

Oct 06, 2021

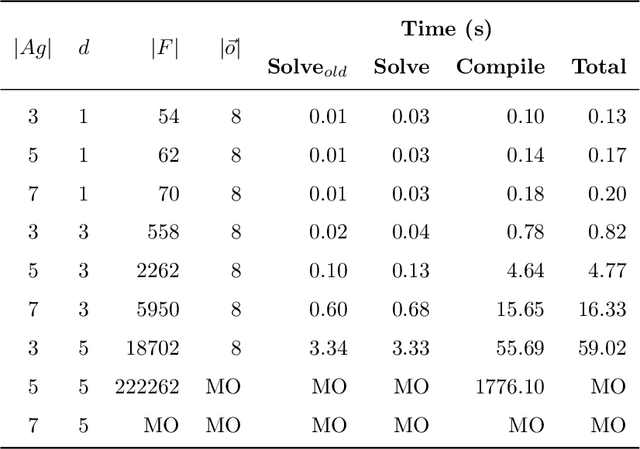

Abstract: Many AI applications involve the interaction of multiple autonomous agents, requiring those agents to reason about their own beliefs, as well as those of other agents. However, planning involving nested beliefs is known to be computationally challenging. In this work, we address the task of synthesizing plans that necessitate reasoning about the beliefs of other agents. We plan from the perspective of a single agent with the potential for goals and actions that involve nested beliefs, non-homogeneous agents, co-present observations, and the ability for one agent to reason as if it were another. We formally characterize our notion of planning with nested belief, and subsequently demonstrate how to automatically convert such problems into problems that appeal to classical planning technology for solving efficiently. Our approach represents an important step towards applying the well-established field of automated planning to the challenging task of planning involving nested beliefs of multiple agents.
