Oscar Pizarro

Detecting Endangered Marine Species in Autonomous Underwater Vehicle Imagery Using Point Annotations and Few-Shot Learning

Jun 04, 2024

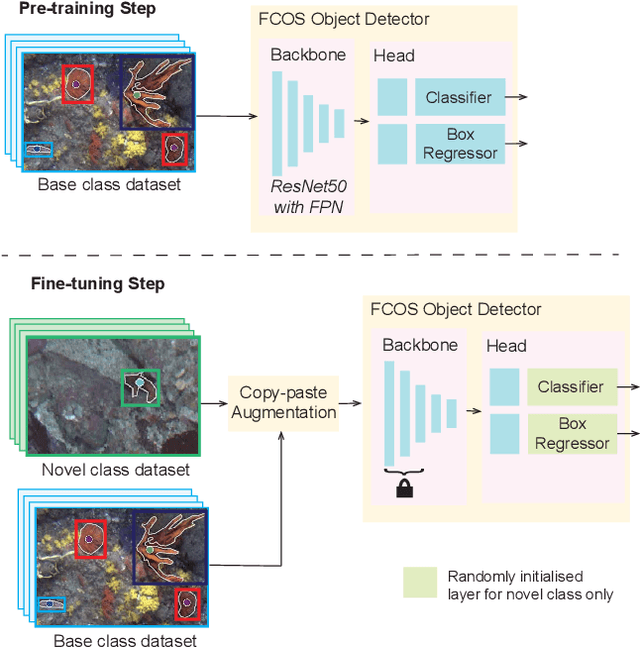

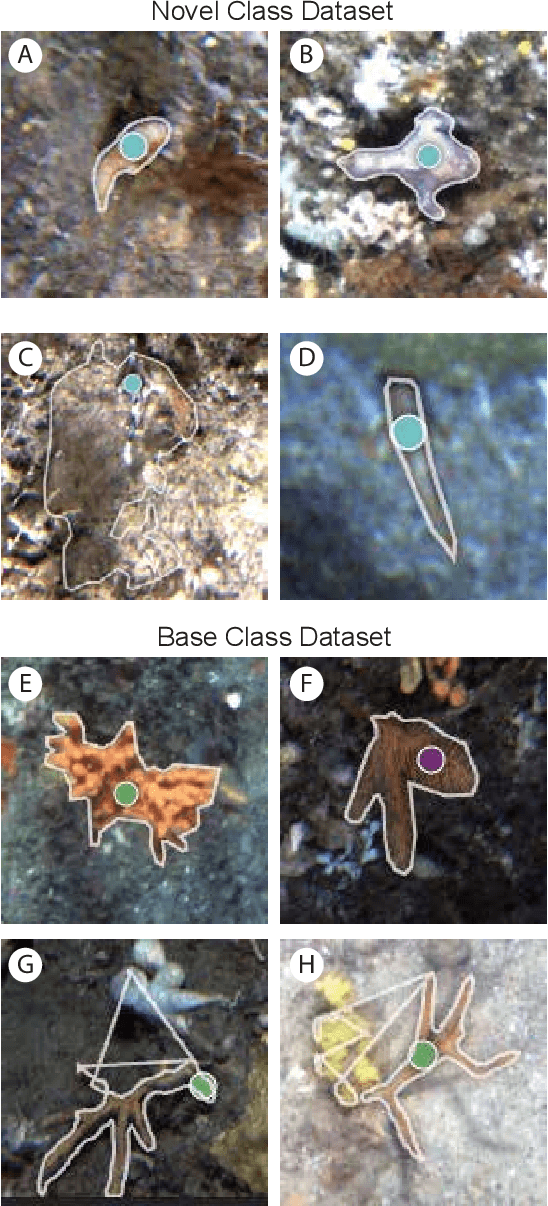

Abstract: One use of Autonomous Underwater Vehicles (AUVs) is the monitoring of habitats associated with threatened, endangered and protected marine species, such as the handfish of Tasmania, Australia. Seafloor imagery collected by AUVs can be used to identify individuals within their broader habitat context, but the sheer volume of imagery collected can overwhelm efforts to locate rare or cryptic individuals. Machine learning models can be used to identify the presence of a particular species in images using a trained object detector, but the lack of training examples reduces detection performance, particularly for rare species that may only have a small number of examples in the wild. In this paper, inspired by recent work in few-shot learning, images and annotations of common marine species are exploited to enhance the ability of the detector to identify rare and cryptic species. Annotated images of six common marine species are used in two ways. Firstly, the common species are used in a pre-training step to allow the backbone to create rich features for marine species. Secondly, a copy-paste operation is used with the common species images to augment the training data. While annotations for more common marine species are available in public datasets, they are often in point format, which is unsuitable for training an object detector. A popular semantic segmentation model efficiently generates bounding box annotations for training from the available point annotations. Our proposed framework is applied to AUV images of handfish, increasing average precision by up to 48% compared to baseline object detection training. This approach can be applied to other objects with low numbers of annotations and promises to increase the ability to actively monitor threatened, endangered and protected species.
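The copy-paste augmentation mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not give implementation details, so the function name `copy_paste`, the rectangular (unmasked) paste, and the `(x_min, y_min, x_max, y_max)` box convention are all assumptions made for illustration only.

```python
import numpy as np

def copy_paste(background, patch, top, left):
    """Paste `patch` into a copy of `background` and return the image and its box.

    Hypothetical helper: a plain rectangular paste, with no blending or masking.
    """
    H, W = background.shape[:2]
    h, w = patch.shape[:2]
    if top < 0 or left < 0 or top + h > H or left + w > W:
        raise ValueError("patch does not fit inside the background")
    out = background.copy()                    # leave the original image untouched
    out[top:top + h, left:left + w] = patch    # overwrite the target region
    box = (left, top, left + w, top + h)       # (x_min, y_min, x_max, y_max)
    return out, box

# Toy example: an 8x8 empty "seafloor" and a 2x3 "object" crop.
background = np.zeros((8, 8), dtype=np.uint8)
patch = np.full((2, 3), 255, dtype=np.uint8)
augmented, box = copy_paste(background, patch, top=4, left=1)
```

The returned box can be appended to the annotation list of the augmented image, so each pasted object contributes one extra training example for the detector.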

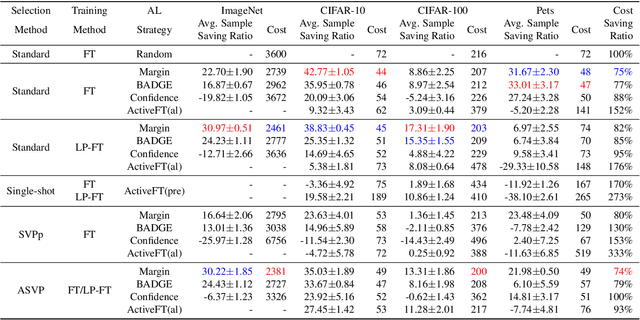

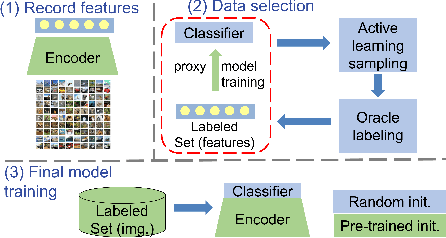

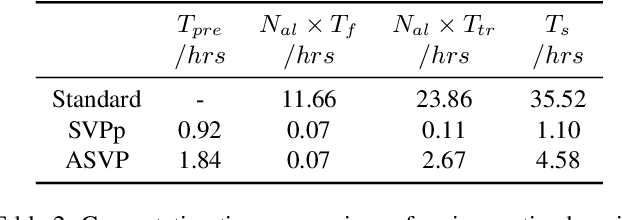

Feature Alignment: Rethinking Efficient Active Learning via Proxy in the Context of Pre-trained Models

Mar 02, 2024

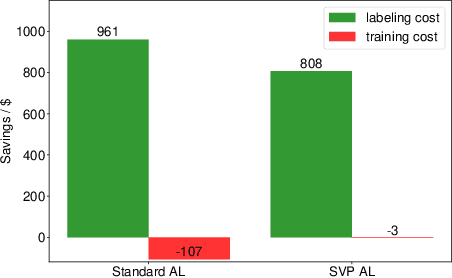

Abstract: Fine-tuning a pre-trained model with active learning holds promise for reducing annotation costs. However, this combination introduces significant computational costs, particularly with the growing scale of pre-trained models. Recent research has proposed proxy-based active learning, which pre-computes features to reduce computational costs. Yet this approach often incurs a significant loss in active learning performance, which may even outweigh the computational cost savings. In this paper, we argue that the performance drop stems not only from the inability of pre-computed features to distinguish between categories of labeled samples, which results in the selection of redundant samples, but also from the tendency to compromise valuable pre-trained information when fine-tuning with samples selected through the proxy model. To address this issue, we propose a novel method called aligned selection via proxy, which updates pre-computed features while selecting a proper training method to inherit valuable pre-training information. Extensive experiments validate that our method significantly reduces the total cost of efficient active learning while maintaining computational efficiency.

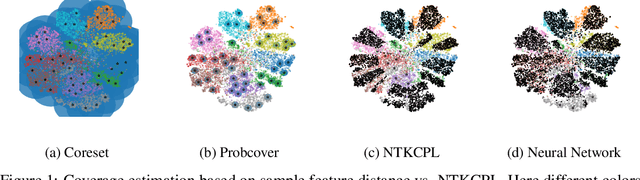

NTKCPL: Active Learning on Top of Self-Supervised Model by Estimating True Coverage

Jun 07, 2023

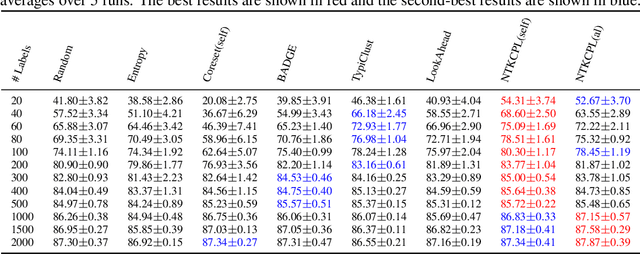

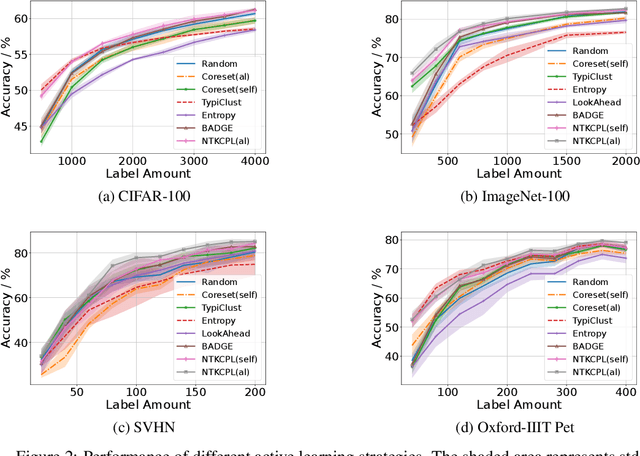

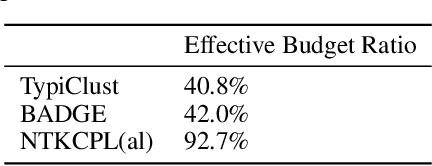

Abstract: High annotation cost for training machine learning classifiers has driven extensive research in active learning and self-supervised learning. Recent research has shown that, in the context of supervised learning, different active learning strategies need to be applied at various stages of the training process to ensure improved performance over the random baseline. We refer to the point where the number of available annotations changes the suitable active learning strategy as the phase transition point. In this paper, we establish that when combining active learning with self-supervised models to achieve improved performance, the phase transition point occurs earlier. It becomes challenging to determine which strategy should be used for previously unseen datasets. We argue that existing active learning algorithms are heavily influenced by the phase transition because the empirical risk over the entire active learning pool estimated by these algorithms is inaccurate and influenced by the number of labeled samples. To address this issue, we propose a novel active learning strategy, neural tangent kernel clustering-pseudo-labels (NTKCPL). It estimates empirical risk based on pseudo-labels and the model prediction with NTK approximation. We analyze the factors affecting this approximation error and design a pseudo-label clustering generation method to reduce the approximation error. We validate our method on five datasets, empirically demonstrating that it outperforms the baseline methods in most cases and is valid over a wider range of training budgets.

A Semi-supervised Object Detection Algorithm for Underwater Imagery

Jun 07, 2023

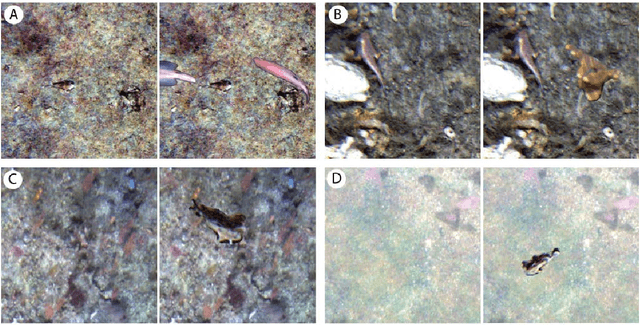

Abstract: Detection of artificial objects from underwater imagery gathered by Autonomous Underwater Vehicles (AUVs) is a key requirement for many subsea applications. Real-world AUV image datasets tend to be very large and unlabelled. Furthermore, such datasets are typically imbalanced, containing few instances of objects of interest, particularly when searching for unusual objects in a scene. It is therefore difficult to fit models capable of reliably detecting these objects. Given these factors, we propose to treat artificial objects as anomalies and detect them through a semi-supervised framework based on Variational Autoencoders (VAEs). We develop a method that clusters image data in a learned low-dimensional latent space and extracts images that are likely to contain anomalous features. We also devise an anomaly score based on extracting poorly reconstructed regions of an image. We demonstrate that by applying both methods on large image datasets, human operators can be shown candidate anomalous samples with a low false positive rate to identify objects of interest. We apply our approach to real seafloor imagery gathered by an AUV and evaluate its sensitivity to the dimensionality of the latent representation used by the VAE. We evaluate the precision-recall tradeoff and demonstrate that by choosing an appropriate latent dimensionality and threshold, we are able to achieve an average precision of 0.64 on unlabelled datasets.
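One way to picture an anomaly score built from poorly reconstructed regions is sketched below. The abstract does not define the score, so this is only an assumed variant: it splits the squared reconstruction error into non-overlapping patches and takes the worst patch as the image score. The function name `patch_anomaly_score` and the patch size are hypothetical, and the reconstruction is passed in directly rather than produced by a VAE.

```python
import numpy as np

def patch_anomaly_score(image, reconstruction, patch=4):
    """Return (score, per_patch_error) for a 2-D image and its reconstruction.

    Assumed scoring rule: mean squared error per non-overlapping patch,
    with the image score equal to the largest patch error.
    """
    err = (image.astype(float) - reconstruction.astype(float)) ** 2
    H, W = err.shape
    h, w = H // patch, W // patch
    # Crop to a whole number of patches, then group pixels by patch.
    blocks = err[:h * patch, :w * patch].reshape(h, patch, w, patch)
    per_patch = blocks.mean(axis=(1, 3))
    return float(per_patch.max()), per_patch

# Toy example: the top-right 4x4 region is not reproduced by the reconstruction.
image = np.zeros((8, 8))
image[0:4, 4:8] = 1.0
reconstruction = np.zeros((8, 8))
score, per_patch = patch_anomaly_score(image, reconstruction, patch=4)
```

Taking the maximum rather than the mean over patches keeps a small anomalous object from being averaged away by a well-reconstructed background; thresholding `score` then selects candidate images for a human operator.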

Improved Benthic Classification using Resolution Scaling and SymmNet Unsupervised Domain Adaptation

Mar 20, 2023

Abstract: Autonomous Underwater Vehicles (AUVs) conduct regular visual surveys of marine environments to characterise and monitor the composition and diversity of the benthos. The use of machine learning classifiers for this task is limited by the low numbers of annotations available and the many fine-grained classes involved. In addition to these challenges, there are domain shifts between image sets acquired during different AUV surveys due to changes in camera systems, imaging altitude, illumination and water column properties. These shifts lead to a drop in classification performance for images from a different survey where some or all of these elements may have changed. This paper proposes a framework to improve the performance of a benthic morphospecies classifier when used to classify images from a different survey compared to the training data. We adapt the SymmNet state-of-the-art Unsupervised Domain Adaptation method with an efficient bilinear pooling layer and image scaling to normalise spatial resolution, and show improved classification accuracy. We test our approach on two datasets with images from AUV surveys with different imaging payloads and locations. The results show that generic domain adaptation can be enhanced to produce a significant increase in accuracy for images from an AUV survey that differs from the training images.
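The idea of scaling images to normalise spatial resolution can be illustrated with a small calculation. The abstract does not state how resolution is computed, so the sketch below assumes a downward-looking pinhole camera over flat seafloor; the function names, the 90 degree field of view, and the target resolution are hypothetical values chosen for the example.

```python
import math

def ground_resolution(altitude_m, fov_deg, image_width_px):
    """Metres of seafloor per pixel, assuming a nadir-pointing pinhole camera."""
    footprint_m = 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)
    return footprint_m / image_width_px

def rescale_factor(source_res, target_res):
    """Factor by which to resize an image so it matches the target resolution."""
    return source_res / target_res

# A survey flown at 2 m altitude with a 1000 px wide image (assumed values).
src = ground_resolution(altitude_m=2.0, fov_deg=90.0, image_width_px=1000)
# Resize so that every survey ends up at 8 mm per pixel (assumed target).
factor = rescale_factor(src, target_res=0.008)
```

Here the image covers about 4 m at 4 mm per pixel, so it would be shrunk by a factor of about 0.5 to reach 8 mm per pixel; applying the same target to every survey makes objects appear at a consistent pixel size.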

Active Self-Semi-Supervised Learning for Few Labeled Samples Fast Training

Mar 09, 2022

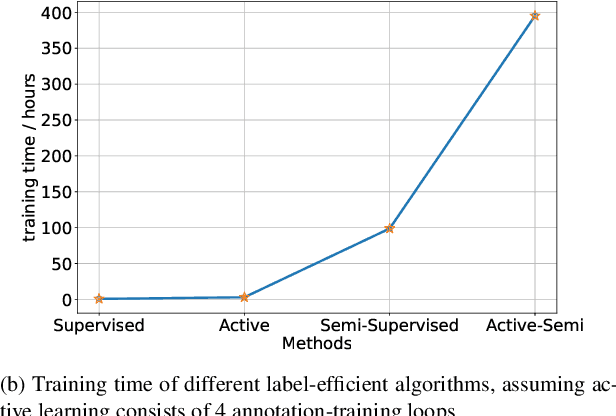

Abstract: Faster training and fewer annotations are two key issues for applying deep models to various practical domains. Semi-supervised learning has achieved great success in training with few annotations. However, low-quality labeled samples produced by random sampling make it difficult to continue to reduce the number of annotations. In this paper, we propose an active self-semi-supervised training framework that bootstraps semi-supervised models with good prior pseudo-labels, where the priors are obtained by label propagation over self-supervised features. Because the accuracy of the prior is affected not only by the quality of the features but also by the selection of the labeled samples, we develop active learning and label propagation strategies to obtain better prior pseudo-labels. Consequently, our framework can greatly improve the performance of models with few annotations and greatly reduce the training time. Experiments on three semi-supervised learning benchmarks demonstrate its effectiveness. Our method achieves similar accuracy to standard semi-supervised approaches in about 1/3 of the training time, and even outperforms them when fewer annotations are available (84.10% on CIFAR-10 with 10 labels).
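Label propagation over features, which the abstract uses to obtain prior pseudo-labels, can be sketched as follows. The abstract does not say which propagation variant is used, so this example substitutes the classical closed-form graph formulation with a Gaussian affinity; the function name and the parameters `alpha` and `sigma` are assumptions, and the toy 2-D points stand in for self-supervised features.

```python
import numpy as np

def propagate_labels(features, labeled_idx, labels, n_classes, alpha=0.9, sigma=1.0):
    """Spread a few known labels to all samples over a feature-similarity graph."""
    X = np.asarray(features, dtype=float)
    n = len(X)
    # Pairwise squared distances and Gaussian affinities (no self-loops).
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(-1)
    W = np.exp(-d2 / (2.0 * sigma ** 2))
    np.fill_diagonal(W, 0.0)
    # Symmetric normalisation: S = D^{-1/2} W D^{-1/2}.
    deg = W.sum(axis=1)
    S = W / np.sqrt(np.outer(deg, deg))
    # One-hot seed matrix for the labeled samples.
    Y = np.zeros((n, n_classes))
    Y[labeled_idx, labels] = 1.0
    # Closed-form solution of the propagation: F = (I - alpha * S)^{-1} Y.
    F = np.linalg.solve(np.eye(n) - alpha * S, Y)
    return F.argmax(axis=1)

# Two well-separated clusters with one labeled sample each.
features = [[0, 0], [0.1, 0], [0, 0.1], [5, 5], [5.1, 5], [5, 5.1]]
pseudo = propagate_labels(features, labeled_idx=[0, 3], labels=[0, 1], n_classes=2)
```

In this toy case every point inherits the label of the seed in its own cluster. Which samples are chosen as seeds clearly matters, which is consistent with the abstract's point that the prior depends on the selection of labeled samples.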

Maximising Wrenches for Kinematically Redundant Systems with Experiments on UVMS

Feb 28, 2022

Abstract: This paper presents methods for finding optimal configurations and actuator forces/torques to maximise contact wrenches in a desired direction for Underwater Vehicle Manipulator Systems (UVMS). The wrench maximisation problem is formulated as a linear programming problem, and the optimal configuration is solved as a bi-level optimisation in the parameterised redundancy space. We additionally consider the cases of one or more manipulators with multiple contact forces, maximising wrench capability while tracking a trajectory, and generating large wrench impulses using dynamic motions. We look at the specific cases of maximising force to lift a heavy load, and maximising torque during a valve turning operation. Extensive experimental results are presented using an underwater robotic platform equipped with a 4DOF manipulator, and show significant increases in wrench capability compared to existing methods for UVMS.

Towards Automated Sample Collection and Return in Extreme Underwater Environments

Dec 30, 2021

Abstract: In this report, we present the system design, operational strategy, and results of coordinated multi-vehicle field demonstrations of autonomous marine robotic technologies in search-for-life missions within the Pacific shelf margin of Costa Rica and the Santorini-Kolumbo caldera complex, which serve as analogs to environments that may exist in oceans beyond Earth. This report focuses on the automation of ROV manipulator operations for targeted biological sample-collection-and-return from the seafloor. In the context of future extraterrestrial exploration missions to ocean worlds, an ROV is an analog to a planetary lander, which must be capable of high-level autonomy. Our field trials involve two underwater vehicles, the SuBastian ROV and the Nereid Under Ice (NUI) hybrid ROV for mixed initiative (i.e., teleoperated or autonomous) missions, both equipped with 7-DoF hydraulic manipulators. We describe an adaptable, hardware-independent computer vision architecture that enables high-level automated manipulation. The vision system provides a 3D understanding of the workspace to inform manipulator motion planning in complex unstructured environments. We demonstrate the effectiveness of the vision system and control framework through field trials in increasingly challenging environments, including the automated collection and return of biological samples from within the active undersea volcano, Kolumbo. Based on our experiences in the field, we discuss the performance of our system and identify promising directions for future research.

GeoCLR: Georeference Contrastive Learning for Efficient Seafloor Image Interpretation

Aug 13, 2021

Abstract: This paper describes Georeference Contrastive Learning of visual Representation (GeoCLR) for efficient training of deep-learning Convolutional Neural Networks (CNNs). The method leverages georeference information by generating a similar image pair using images taken of nearby locations, and contrasting these with an image pair that is far apart. The underlying assumption is that images gathered within a close distance are more likely to have similar visual appearance. This assumption is reasonably satisfied in seafloor robotic imaging applications, where image footprints are limited to edge lengths of a few metres and images are taken so that they overlap along a vehicle's trajectory, whereas seafloor substrates and habitats have patch sizes that are far larger. A key advantage of this method is that it is self-supervised and does not require any human input for CNN training. The method is computationally efficient: results can be generated between dives during multi-day AUV missions using computational resources that would be accessible during most oceanic field trials. We apply GeoCLR to habitat classification on a dataset that consists of ~86k images gathered using an Autonomous Underwater Vehicle (AUV). We demonstrate how the latent representations generated by GeoCLR can be used to efficiently guide human annotation efforts, where the semi-supervised framework improves classification accuracy by an average of 11.8% compared to state-of-the-art transfer learning using the same CNN and an equivalent number of human annotations for training.
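The pairing rule described in the abstract, where nearby images form similar pairs and distant images are contrasted against them, can be sketched with plain coordinates. The function name `split_by_distance`, the use of local metric `(x, y)` positions, and the 1.5 m radius are assumptions for illustration; the abstract does not specify a threshold or how pairs are sampled during training.

```python
import math

def split_by_distance(positions, anchor, radius_m):
    """Return (nearby, far) image indices relative to the anchor image.

    `positions` holds one (x, y) location in metres per image; images within
    `radius_m` of the anchor are candidate similar pairs, the rest are not.
    """
    ax, ay = positions[anchor]
    nearby, far = [], []
    for i, (x, y) in enumerate(positions):
        if i == anchor:
            continue
        if math.hypot(x - ax, y - ay) <= radius_m:
            nearby.append(i)
        else:
            far.append(i)
    return nearby, far

# Three overlapping images along a track and one from a distant location.
positions = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.2), (30.0, 40.0)]
nearby, far = split_by_distance(positions, anchor=0, radius_m=1.5)
```

A contrastive training loop could then draw the positive partner for image 0 from `nearby` instead of relying only on augmented copies of the same image, which is how georeference information enters without any human labels.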

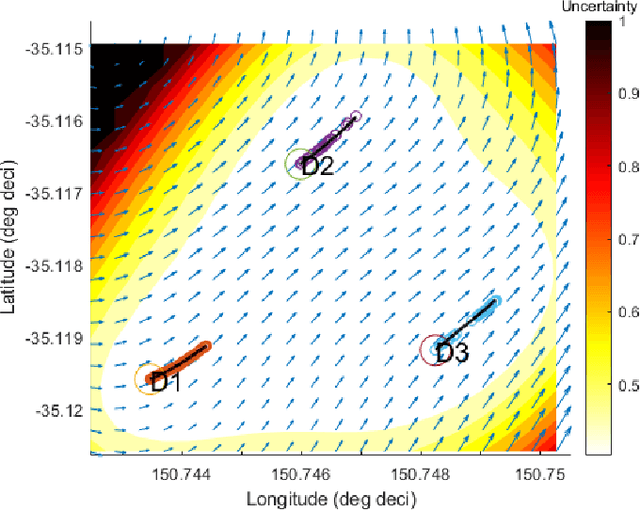

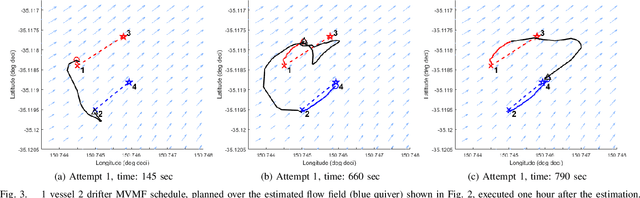

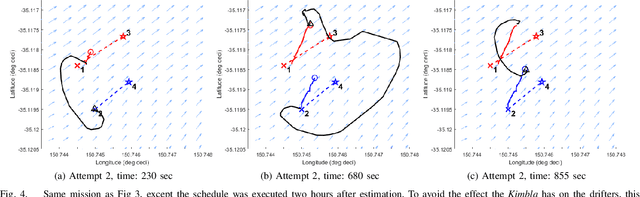

Field trial on Ocean Estimation for Multi-Vessel Multi-Float-based Active perception

Jun 17, 2021

Abstract: Marine vehicles have been used for various scientific missions where information over features of interest is collected. In order to maximise efficiency in collecting information over a large search space, we should be able to deploy a large number of autonomous vehicles that make decisions based on the latest understanding of the target feature in the environment. In our previous work, we presented a hierarchical framework for the multi-vessel multi-float (MVMF) problem where surface vessels drop and pick up underactuated floats in a time-minimal way. In this paper, we present the field trial results using the framework with a number of drifters and floats. We discovered a number of important aspects that need to be considered in the proposed framework, and present potential approaches to address the challenges.
