Detecting Endangered Marine Species in Autonomous Underwater Vehicle Imagery Using Point Annotations and Few-Shot Learning

Jun 04, 2024

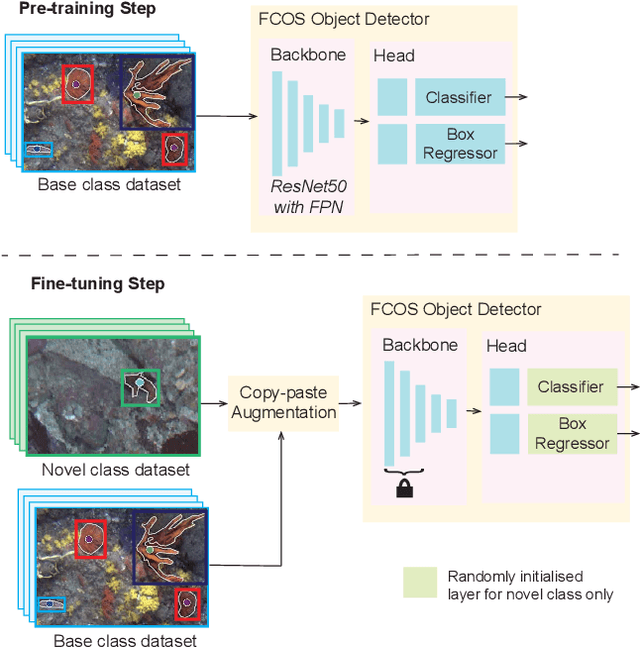

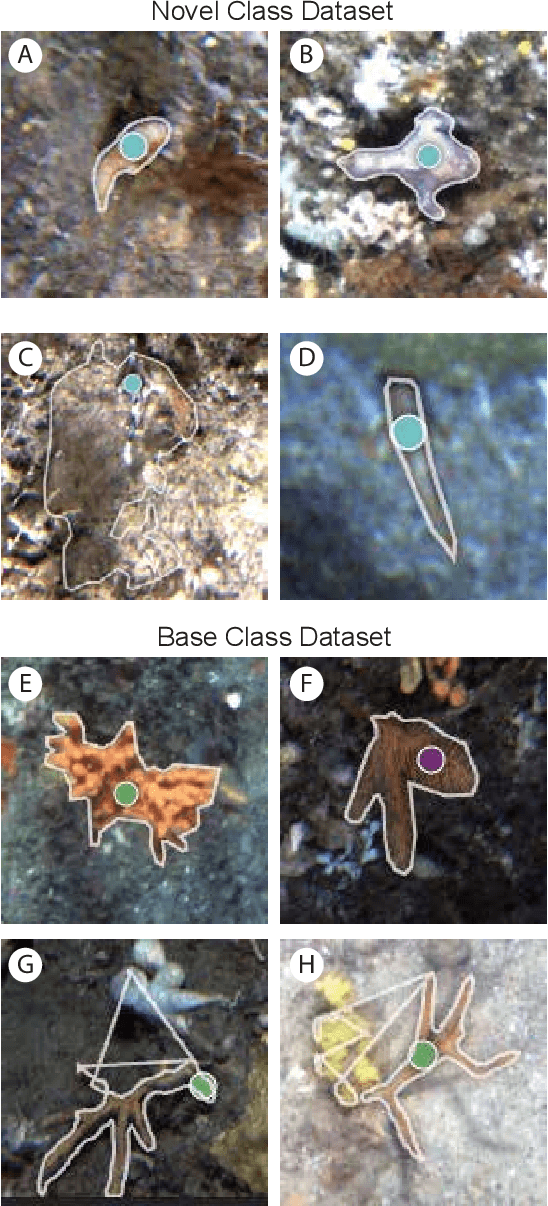

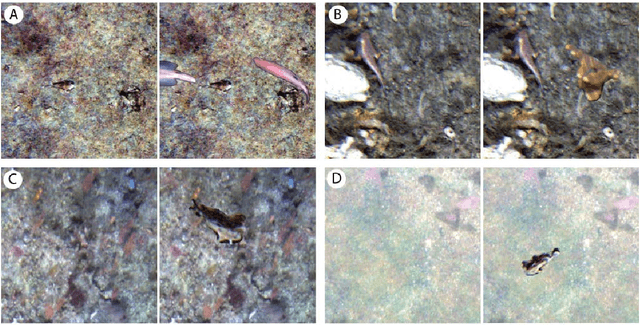

One use of Autonomous Underwater Vehicles (AUVs) is the monitoring of habitats associated with threatened, endangered and protected marine species, such as the handfish of Tasmania, Australia. Seafloor imagery collected by AUVs can be used to identify individuals within their broader habitat context, but the sheer volume of imagery collected can overwhelm efforts to locate rare or cryptic individuals. Machine learning models can be used to identify the presence of a particular species in images using a trained object detector, but the lack of training examples reduces detection performance, particularly for rare species that may only have a small number of examples in the wild. In this paper, inspired by recent work in few-shot learning, images and annotations of common marine species are exploited to enhance the ability of the detector to identify rare and cryptic species. Annotated images of six common marine species are used in two ways. Firstly, the common species are used in a pre-training step to allow the backbone to create rich features for marine species. Secondly, a copy-paste operation is used with the common species images to augment the training data. While annotations for more common marine species are available in public datasets, they are often in point format, which is unsuitable for training an object detector. A popular semantic segmentation model efficiently generates bounding box annotations for training from the available point annotations. Our proposed framework is applied to AUV images of handfish, increasing average precision by up to 48% compared to baseline object detection training. This approach can be applied to other objects with low numbers of annotations and promises to increase the ability to actively monitor threatened, endangered and protected species.
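Two of the steps named in the abstract, deriving a bounding box from a segmentation result and copy-paste augmentation, can be illustrated with a minimal NumPy sketch. This is not the paper's implementation: it assumes a binary mask for the animal is already available (in the paper such masks would come from a segmentation model prompted with point annotations, which is not reproduced here), and the function names `mask_to_bbox` and `copy_paste` are hypothetical, introduced only for illustration.

```python
import numpy as np


def mask_to_bbox(mask):
    """Return (x_min, y_min, x_max, y_max) enclosing the True pixels of a
    binary mask, or None if the mask is empty. Coordinates are inclusive."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return None
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())


def copy_paste(target, source, mask, top, left):
    """Paste the masked pixels of `source` onto a copy of `target`, with the
    patch's upper-left corner at row `top`, column `left`.

    Returns the augmented image and the pasted object's bounding box in
    target coordinates. Assumes `source` and `mask` share the same height
    and width, and that the patch fits entirely inside `target`.
    """
    h, w = mask.shape
    if top < 0 or left < 0 or top + h > target.shape[0] or left + w > target.shape[1]:
        raise ValueError("patch does not fit inside the target image")
    bbox = mask_to_bbox(mask)
    if bbox is None:
        raise ValueError("mask contains no object pixels")

    out = target.copy()
    region = out[top:top + h, left:left + w]  # view into `out`
    region[mask] = source[mask]               # overwrite only object pixels

    x0, y0, x1, y1 = bbox
    return out, (x0 + left, y0 + top, x1 + left, y1 + top)


# Toy example: a 2x2 "animal" inside a 4x5 source crop,
# pasted onto an empty 10x10 RGB background.
mask = np.zeros((4, 5), dtype=bool)
mask[1:3, 2:4] = True
source = np.full((4, 5, 3), 255, dtype=np.uint8)
target = np.zeros((10, 10, 3), dtype=np.uint8)

augmented, box = copy_paste(target, source, mask, top=3, left=4)
print(box)  # (6, 4, 7, 5)
```

In a real pipeline the pasted box would be appended to the target image's annotation list, so that common-species objects contribute extra training examples alongside the scarce rare-species ones.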
