Blair Thornton

Closed-loop underwater soft robotic foil shape control using flexible e-skin

Aug 02, 2024

Abstract: The use of soft robotics in real-world underwater applications is limited, even more than in terrestrial applications, by the ability to accurately measure and control the deformation of soft materials in real time without feedback from an external sensor. Real-time underwater shape estimation would allow accurate closed-loop control of soft propulsors, enabling high-performance swimming and manoeuvring. We propose and demonstrate a method for closed-loop underwater soft robotic foil control, based on a flexible capacitive e-skin and machine learning, that does not require feedback from an external sensor. The underwater e-skin is applied to a highly flexible foil whose camber is deformed from 2% to 9% by soft hydraulic actuators. The camber set point is tracked accurately during sinusoidal and triangle actuation routines, with a normalised RMS error of 0.11 for an amplitude of 5% peak-to-peak and a 10-second period, and 0.03 for an amplitude of 2% peak-to-peak and a 5-second period. The tail tip deflection can be measured across a 30 mm (0.15 chord) range. These results pave the way for using e-skin technology in underwater soft robotic closed-loop control applications.
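
The abstract reports tracking accuracy as a normalised RMS error but does not state how the error is normalised. The sketch below assumes normalisation by the range (max minus min) of the reference signal, which is one common convention. The set-point signal, the hypothetical `sinusoid_setpoint` helper, and the constant 0.25 offset used as a stand-in "measurement" are illustrative only and are not data from the paper.

```python
import math

def nrmse(reference, measured):
    """RMS tracking error divided by the range (max - min) of the reference.

    Range normalisation is an assumption; other conventions divide by the
    mean or the standard deviation of the reference instead.
    """
    if not reference or len(reference) != len(measured):
        raise ValueError("signals must be non-empty and of equal length")
    mse = sum((r - m) ** 2 for r, m in zip(reference, measured)) / len(reference)
    span = max(reference) - min(reference)
    if span == 0:
        raise ValueError("reference has zero range; normalisation is undefined")
    return math.sqrt(mse) / span

def sinusoid_setpoint(t, mean=5.5, p2p=5.0, period=10.0):
    """Hypothetical camber set point (%) at time t seconds: a sinusoid with the
    given peak-to-peak amplitude and period."""
    return mean + 0.5 * p2p * math.sin(2.0 * math.pi * t / period)

# Sample one 10 s period at 10 Hz; the "measurement" is the set point plus a
# constant 0.25 offset, standing in for the camber estimated from the e-skin.
times = [i * 0.1 for i in range(100)]
reference = [sinusoid_setpoint(t) for t in times]
measured = [r + 0.25 for r in reference]
error = nrmse(reference, measured)  # about 0.25 / 5.0 = 0.05
```

With a different normalisation (for example by the standard deviation of the reference) the same tracking data would give a different number, so the choice matters when comparing reported values.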

Seafloor Classification based on an AUV Based Sub-bottom Acoustic Probe Data for Mn-crust survey

Sep 26, 2023

Abstract: This paper investigates the possibility of automatically classifying high-frequency sub-bottom acoustic reflections collected by an Autonomous Underwater Robot. In field surveys of Cobalt-rich Manganese Crusts (Mn-crusts), existing methods rely on visual confirmation of the seafloor from images and on thickness measurements using the sub-bottom probe. Using these visual classification results as ground truth, an autoencoder is trained to extract latent features from bundled acoustic reflections. A Support Vector Machine classifier is then trained on the latent space to identify seafloor classes. Results on data collected from Mn-crust regions of the seafloor at a depth of 1500 m showed an accuracy of about 70%.
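
The two-stage pipeline described above (an autoencoder learns latent features without labels, then an SVM classifies those features) can be sketched in plain Python. This is a simplified illustration, not the paper's model: the autoencoder here is linear, the SVM is a linear soft-margin SVM fitted by stochastic subgradient descent, and the data are a hypothetical two-class toy set generated by `make_toy_reflections` rather than real sub-bottom reflections. All function names and hyperparameters are assumptions chosen for the example.

```python
import random

def make_toy_reflections(n_per_class=50, n_bins=4, seed=1):
    """Hypothetical stand-in for bundled acoustic reflections: each sample is a
    short vector of amplitude bins; two synthetic classes (+1 / -1) differ in
    mean, so the dataset as a whole is roughly zero-mean."""
    rng = random.Random(seed)
    X, y = [], []
    for label, centre in ((+1, 2.0), (-1, -2.0)):
        for _ in range(n_per_class):
            X.append([centre + rng.gauss(0.0, 0.5) for _ in range(n_bins)])
            y.append(label)
    return X, y

def matvec(M, x):
    """Multiply matrix M (list of rows) by vector x."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def train_linear_autoencoder(data, latent_dim, lr=0.005, epochs=200, seed=0):
    """Fit a linear autoencoder x -> z = W x -> x_hat = V z by SGD on
    0.5 * ||x_hat - x||^2. Inputs are assumed roughly zero-mean, so no bias
    terms are used. Returns the encoder weights W (latent_dim x d)."""
    rng = random.Random(seed)
    d = len(data[0])
    W = [[rng.gauss(0.0, 0.1) for _ in range(d)] for _ in range(latent_dim)]
    V = [[rng.gauss(0.0, 0.1) for _ in range(latent_dim)] for _ in range(d)]
    for _ in range(epochs):
        for x in rng.sample(data, len(data)):  # shuffled pass over the data
            z = matvec(W, x)
            err = [xh - xi for xh, xi in zip(matvec(V, z), x)]  # dLoss/dx_hat
            # Gradient w.r.t. the latent code, computed before V is updated.
            grad_z = [sum(err[i] * V[i][j] for i in range(d)) for j in range(latent_dim)]
            for i in range(d):
                for j in range(latent_dim):
                    V[i][j] -= lr * err[i] * z[j]
            for j in range(latent_dim):
                for k in range(d):
                    W[j][k] -= lr * grad_z[j] * x[k]
    return W

def train_linear_svm(features, labels, lam=0.01, lr=0.01, epochs=50, seed=0):
    """Linear soft-margin SVM fitted by stochastic subgradient descent on
    lam/2 * ||w||^2 + hinge loss. Labels must be +1 or -1. Returns (w, b)."""
    rng = random.Random(seed)
    w = [0.0] * len(features[0])
    b = 0.0
    order = list(range(len(features)))
    for _ in range(epochs):
        rng.shuffle(order)
        for n in order:
            x, y = features[n], labels[n]
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            hinge_active = margin < 1
            w = [wi - lr * (lam * wi - (y * xi if hinge_active else 0.0))
                 for wi, xi in zip(w, x)]
            if hinge_active:
                b += lr * y
    return w, b

def predict(w, b, x):
    """Return +1 or -1 from the sign of the SVM decision function."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1

X, y = make_toy_reflections()
W = train_linear_autoencoder(X, latent_dim=2)   # unsupervised feature learning
Z = [matvec(W, x) for x in X]                   # latent features
w, b = train_linear_svm(Z, y)                   # supervised classifier on latents
accuracy = sum(predict(w, b, z) == t for z, t in zip(Z, y)) / len(y)
```

The toy problem is binary and easily separable, so it only shows how the stages connect. A real survey would involve more seafloor classes (handled, for example, with one-vs-rest SVMs), a nonlinear encoder, and evaluation on held-out data.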

Energy-efficient tunable-stiffness soft robots using second moment of area actuation

Nov 24, 2022

Abstract: The optimal stiffness for soft swimming robots depends on swimming speed, which means no single stiffness can maximise efficiency in all swimming conditions. Tunable stiffness would widen the range of high-efficiency swimming speeds for robots with flexible propulsors and enable soft control surfaces for steering underwater vehicles. We propose and demonstrate a method for tunable soft robotic stiffness that uses inflatable rubber tubes to stiffen a silicone foil through pressure and second moment of area change. We doubled the effective stiffness of the system with an input pressure change from 0 to 0.8 bar and an energy input of 2 J. We achieved a resonant amplitude gain of 5 to 7 times the input amplitude and tripled the high-gain frequency range compared to a foil with fixed stiffness. These results show that changing the second moment of area is an energy-efficient approach to tunable-stiffness robots.

* 6 pages, 8 figures. Presented at the IEEE/RSJ International Conference on Intelligent Robots and Systems 2022 in Kyoto, Japan
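
For a beam of fixed material modulus E, bending stiffness scales with EI, so enlarging a tube's cross-section raises stiffness through I alone. The sketch below computes the second moment of area of a hollow circular section, I = π(D⁴ − d⁴)/64, before and after a hypothetical inflation from 6 mm to 7 mm outer diameter, assuming the rubber's cross-sectional area is conserved as the wall thins. The dimensions and the helper names are illustrative assumptions, not values from the paper, and the sketch ignores pressure stiffening and the tubes' offset from the foil's neutral axis (the parallel-axis contribution).

```python
import math

def tube_area(outer_d, wall):
    """Cross-sectional area of a hollow circular tube."""
    inner_d = outer_d - 2.0 * wall
    return math.pi * (outer_d ** 2 - inner_d ** 2) / 4.0

def tube_second_moment(outer_d, wall):
    """Second moment of area of a hollow circular section about its centroid:
    I = pi * (D^4 - d^4) / 64, with inner diameter d = D - 2 * wall."""
    inner_d = outer_d - 2.0 * wall
    if inner_d < 0:
        raise ValueError("wall thickness exceeds the outer radius")
    return math.pi * (outer_d ** 4 - inner_d ** 4) / 64.0

def wall_for_area(outer_d, area):
    """Wall thickness that keeps a given cross-sectional area at a new outer
    diameter (a crude incompressible-rubber assumption ignoring axial stretch)."""
    inner_d = math.sqrt(outer_d ** 2 - 4.0 * area / math.pi)
    return (outer_d - inner_d) / 2.0

# Hypothetical tube: 6 mm outer diameter, 1 mm wall at rest; inflated to 7 mm.
area = tube_area(6.0, 1.0)                      # mm^2, conserved during inflation
i_rest = tube_second_moment(6.0, 1.0)           # mm^4
i_inflated = tube_second_moment(7.0, wall_for_area(7.0, area))
ratio = i_inflated / i_rest                     # (2401 - 841) / (1296 - 256) = 1.5
```

Because I grows with the fourth power of diameter, a modest diameter change gives a large geometric stiffness gain while the material itself is unchanged, which is the intuition behind second moment of area actuation.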

GeoCLR: Georeference Contrastive Learning for Efficient Seafloor Image Interpretation

Aug 13, 2021

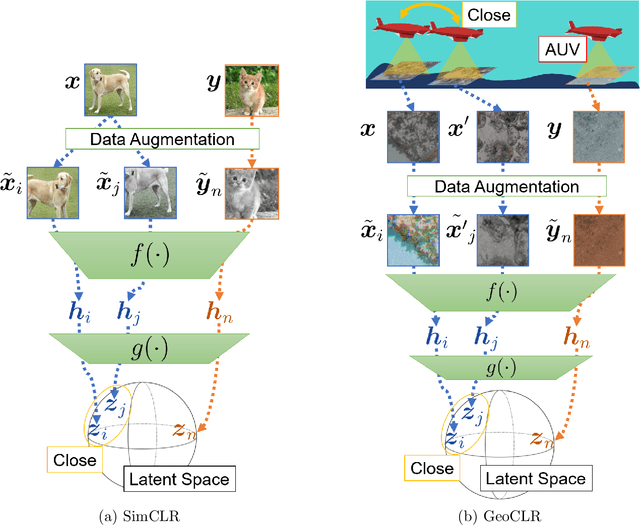

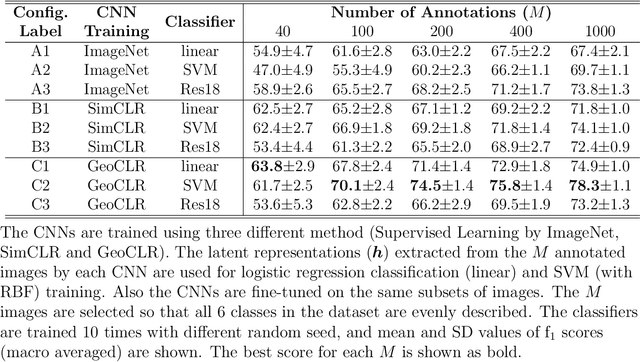

Abstract: This paper describes Georeference Contrastive Learning of visual Representation (GeoCLR) for efficient training of deep-learning Convolutional Neural Networks (CNNs). The method leverages georeference information by generating a similar image pair from images taken at nearby locations and contrasting these with image pairs that are far apart. The underlying assumption is that images gathered close to each other are more likely to have a similar visual appearance. This assumption is reasonably satisfied in seafloor robotic imaging, where image footprints have edge lengths of only a few metres and images overlap along the vehicle's trajectory, whereas seafloor substrates and habitats have far larger patch sizes. A key advantage of this method is that it is self-supervised and does not require any human input for CNN training. The method is computationally efficient: results can be generated between dives during multi-day AUV missions using computational resources that would be accessible during most oceanic field trials. We apply GeoCLR to habitat classification on a dataset of ~86k images gathered using an Autonomous Underwater Vehicle (AUV). We demonstrate how the latent representations generated by GeoCLR can be used to efficiently guide human annotation efforts, where the semi-supervised framework improves classification accuracy by an average of 11.8% compared to state-of-the-art transfer learning using the same CNN and an equivalent number of human annotations for training.
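
The pairing idea above can be sketched in two parts: choosing a positive partner for each image from its georeferenced neighbours, and scoring a batch of pairs with a contrastive loss in which other pairs in the batch act as negatives. The distance threshold `max_dist`, the helper names, and the use of an NT-Xent loss in the style of SimCLR are assumptions for illustration; in practice the embeddings would come from the CNN being trained, and the toy vectors below merely stand in for them.

```python
import math
import random

def sample_geo_pairs(positions, max_dist, seed=0):
    """For each image i, pick a random partner j != i whose georeferenced
    position lies within max_dist (same units as positions). Images with no
    such neighbour are skipped. positions: list of (easting, northing) tuples."""
    rng = random.Random(seed)
    pairs = []
    for i, (xi, yi) in enumerate(positions):
        # Naive O(n^2) search; a spatial index would be needed at survey scale.
        neighbours = [j for j, (xj, yj) in enumerate(positions)
                      if j != i and math.hypot(xi - xj, yi - yj) <= max_dist]
        if neighbours:
            pairs.append((i, rng.choice(neighbours)))
    return pairs

def _unit(v):
    """Scale v to unit length (assumes v is not the zero vector)."""
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]

def nt_xent(z_a, z_b, temperature=0.5):
    """NT-Xent contrastive loss over N positive pairs (z_a[k], z_b[k]).
    Each of the 2N embeddings treats its partner as the positive and the
    remaining 2N - 2 embeddings in the batch as negatives."""
    z = [_unit(v) for v in z_a + z_b]
    n = len(z_a)
    total = 0.0
    for k in range(2 * n):
        pos = (k + n) % (2 * n)
        sims = [sum(a * b for a, b in zip(z[k], z[m])) / temperature
                for m in range(2 * n)]
        log_denom = math.log(sum(math.exp(s) for m, s in enumerate(sims) if m != k))
        total += log_denom - sims[pos]  # -log(softmax probability of the positive)
    return total / (2 * n)

# Toy usage: two nearby images pair with each other; the distant one has no partner.
pairs = sample_geo_pairs([(0.0, 0.0), (1.0, 0.0), (100.0, 0.0)], max_dist=5.0)
loss_aligned = nt_xent([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
loss_swapped = nt_xent([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

The loss is lower when each embedding is most similar to its georeferenced partner, which is the signal that pulls representations of nearby images together. When a batch is drawn from across a large survey, most of the in-batch negatives come from images taken far apart.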
