Ninghan Zhong

Bench-Push: Benchmarking Pushing-based Navigation and Manipulation Tasks for Mobile Robots

Dec 12, 2025

Abstract:Mobile robots are increasingly deployed in cluttered environments with movable objects, posing challenges for traditional methods that prohibit interaction. In such settings, the mobile robot must go beyond traditional obstacle avoidance, leveraging pushing or nudging strategies to accomplish its goals. While research in pushing-based robotics is growing, evaluations rely on ad hoc setups, limiting reproducibility and cross-comparison. To address this, we present Bench-Push, the first unified benchmark for pushing-based mobile robot navigation and manipulation tasks. Bench-Push includes multiple components: 1) a comprehensive range of simulated environments that capture the fundamental challenges in pushing-based tasks, including navigating a maze with movable obstacles, autonomous ship navigation in ice-covered waters, box delivery, and area clearing, each with varying levels of complexity; 2) novel evaluation metrics to capture efficiency, interaction effort, and partial task completion; and 3) demonstrations using Bench-Push to evaluate example implementations of established baselines across environments. Bench-Push is open-sourced as a Python library with a modular design. The code, documentation, and trained models can be found at https://github.com/IvanIZ/BenchNPIN.

Bench-NPIN: Benchmarking Non-prehensile Interactive Navigation

May 17, 2025

Abstract:Mobile robots are increasingly deployed in unstructured environments where obstacles and objects are movable. Navigation in such environments is known as interactive navigation, where task completion requires not only avoiding obstacles but also strategic interactions with movable objects. Non-prehensile interactive navigation focuses on non-grasping interaction strategies, such as pushing, rather than relying on prehensile manipulation. Despite a growing body of research in this field, most solutions are evaluated using case-specific setups, limiting reproducibility and cross-comparison. In this paper, we present Bench-NPIN, the first comprehensive benchmark for non-prehensile interactive navigation. Bench-NPIN includes multiple components: 1) a comprehensive range of simulated environments for non-prehensile interactive navigation tasks, including navigating a maze with movable obstacles, autonomous ship navigation in icy waters, box delivery, and area clearing, each with varying levels of complexity; 2) a set of evaluation metrics that capture unique aspects of interactive navigation, such as efficiency, interaction effort, and partial task completion; and 3) demonstrations using Bench-NPIN to evaluate example implementations of established baselines across environments. Bench-NPIN is an open-source Python library with a modular design. The code, documentation, and trained models can be found at https://github.com/IvanIZ/BenchNPIN.

AUTO-IceNav: A Local Navigation Strategy for Autonomous Surface Ships in Broken Ice Fields

Nov 26, 2024

Abstract:Ice conditions often require ships to reduce speed and deviate from their main course to avoid damage to the ship. In addition, broken ice fields are becoming the dominant ice conditions encountered in the Arctic, where the effects of collisions with ice are highly dependent on where contact occurs and on the particular features of the ice floes. In this paper, we present AUTO-IceNav, a framework for the autonomous navigation of ships operating in ice floe fields. Trajectories are computed in a receding-horizon manner, where we frequently replan given updated ice field data. During a planning step, we assume a nominal speed that is safe with respect to the current ice conditions, and compute a reference path. We formulate a novel cost function that minimizes the kinetic energy loss of the ship from ship-ice collisions and incorporate this cost as part of our lattice-based path planner. The solution computed by the lattice planning stage is then used as an initial guess in our proposed optimization-based improvement step, producing a locally optimal path. Extensive experiments were conducted both in simulation and in a physical testbed to validate our approach.

Autonomous Navigation in Ice-Covered Waters with Learned Predictions on Ship-Ice Interactions

Sep 18, 2024

Abstract:Autonomous navigation in ice-covered waters poses significant challenges due to the frequent lack of viable collision-free trajectories. When complete obstacle avoidance is infeasible, it becomes imperative for the navigation strategy to minimize collisions. Additionally, the dynamic nature of ice, which moves in response to ship maneuvers, complicates the path planning process. To address these challenges, we propose a novel deep learning model to estimate the coarse dynamics of ice movements triggered by ship actions through occupancy estimation. To ensure real-time applicability, we propose a novel approach that caches intermediate prediction results and seamlessly integrates the predictive model into a graph search planner. We evaluate the proposed planner both in simulation and in a physical testbed against existing approaches and show that our planner significantly reduces collisions with ice when compared to the state-of-the-art. Code and demos of this work are available at https://github.com/IvanIZ/predictive-asv-planner.

Towards Safe Multi-Level Human-Robot Interaction in Industrial Tasks

Aug 06, 2023

Abstract:Collaborative robots need multiple interaction modes to perform industrial tasks with human co-workers, and these modes require multiple levels of safety measures. We develop three independent modules to account for safety in different types of human-robot interaction: vision-based safety monitoring pauses the robot when a human is present in a shared space; contact-based safety monitoring pauses the robot when unexpected contact occurs between the human and the robot; and hierarchical intention tracking keeps the robot at a safe distance from the human when the two work independently, and switches the robot to compliant mode when the human intends to guide it. We discuss prospects for future research on the development and integration of multi-level safety modules. We focus on how to provide safety guarantees for collaborative robot solutions with human behavior modeling.

Attentiveness Map Estimation for Haptic Teleoperation of Mobile Robot Obstacle Avoidance and Approach

Dec 16, 2022

Abstract:Haptic feedback can improve safety of teleoperated robots when situational awareness is limited or operators are inattentive. Standard potential field approaches increase haptic resistance as an obstacle is approached, which is desirable when the operator is unaware of the obstacle but undesirable when the movement is intentional, such as when the operator wishes to inspect or manipulate an object. This paper presents a novel haptic teleoperation framework that estimates the operator's attentiveness to dampen haptic feedback for intentional movement. A biologically-inspired attention model is developed based on computational working memory theories to integrate visual saliency estimation with spatial mapping. This model generates an attentiveness map in real-time, and the haptic rendering system generates lower haptic forces for obstacles that the operator is estimated to be aware of. Experimental results in simulation show that the proposed framework outperforms haptic teleoperation without attentiveness estimation in terms of task performance, robot safety, and user experience.

Seamless Interaction Design with Coexistence and Cooperation Modes for Robust Human-Robot Collaboration

Jun 09, 2022

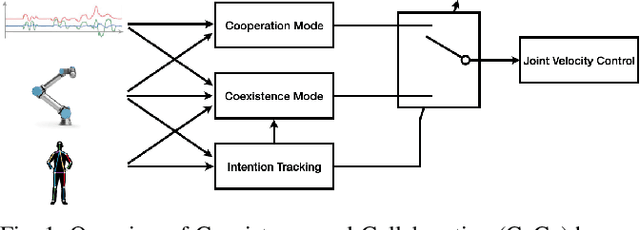

Abstract:A robot needs multiple interaction modes to robustly collaborate with a human in complicated industrial tasks. We develop a Coexistence-and-Cooperation (CoCo) human-robot collaboration system. Coexistence mode enables the robot to work with the human on different sub-tasks independently in a shared space. Cooperation mode enables the robot to follow human guidance and recover from failures. A human intention tracking algorithm takes both human and robot motion measurements as input and switches between the interaction modes. We demonstrate the effectiveness of the CoCo system in a use case analogous to a real-world multi-step assembly task.

Hierarchical Intention Tracking for Robust Human-Robot Collaboration in Industrial Assembly Tasks

Mar 17, 2022

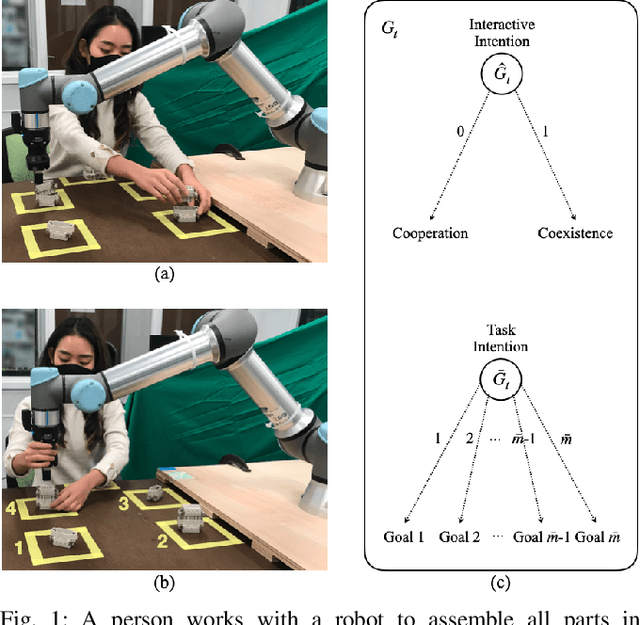

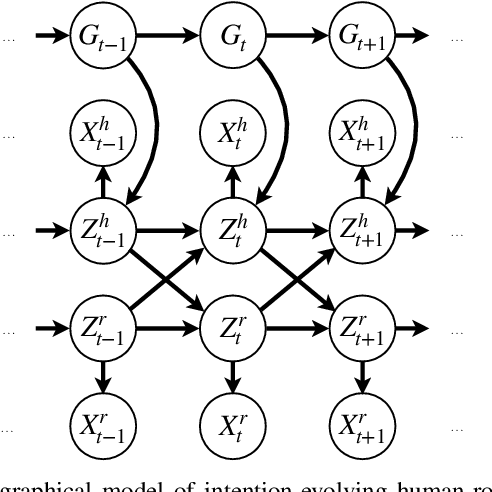

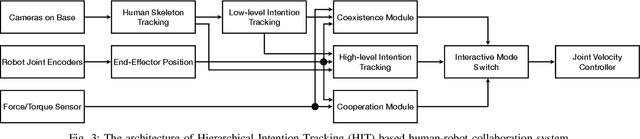

Abstract:Collaborative robots require effective intention estimation to safely and smoothly work with humans in less structured tasks such as industrial assembly. During these tasks, human intention continuously changes across multiple steps, and is composed of a hierarchy including high-level interactive intention and low-level task intention. Thus, we propose the concept of intention tracking and introduce a collaborative robot system with a hierarchical framework that concurrently tracks intentions at both levels by observing force/torque measurements, robot state sequences, and tracked human trajectories. The high-level intention estimate enables the robot to both (1) safely avoid collision with the human to minimize interruption and (2) cooperatively approach the human and help recover from an assembly failure through admittance control. The low-level intention estimate provides the robot with task-specific information (e.g., which part the human is working on) for concurrent task execution. We implement the system on a UR5e robot, and demonstrate robust, seamless and ergonomic collaboration between the human and the robot in an assembly use case through an ablative pilot study.
