Nikolaus Kriegeskorte

Transformer brain encoders explain human high-level visual responses

May 22, 2025

Abstract:A major goal of neuroscience is to understand brain computations during visual processing in naturalistic settings. A dominant approach is to use image-computable deep neural networks trained with different task objectives as a basis for linear encoding models. However, in addition to requiring tuning a large number of parameters, the linear encoding approach ignores the structure of the feature maps both in the brain and the models. Recently proposed alternatives have focused on decomposing the linear mapping to spatial and feature components but focus on finding static receptive fields for units that are applicable only in early visual areas. In this work, we employ the attention mechanism used in the transformer architecture to study how retinotopic visual features can be dynamically routed to category-selective areas in high-level visual processing. We show that this computational motif is significantly more powerful than alternative methods in predicting brain activity during natural scene viewing, across different feature basis models and modalities. We also show that this approach is inherently more interpretable, without the need to create importance maps, by interpreting the attention routing signal for different high-level categorical areas. Our approach proposes a mechanistic model of how visual information from retinotopic maps can be routed based on the relevance of the input content to different category-selective regions.

How does the primate brain combine generative and discriminative computations in vision?

Jan 11, 2024

Abstract:Vision is widely understood as an inference problem. However, two contrasting conceptions of the inference process have each been influential in research on biological vision as well as the engineering of machine vision. The first emphasizes bottom-up signal flow, describing vision as a largely feedforward, discriminative inference process that filters and transforms the visual information to remove irrelevant variation and represent behaviorally relevant information in a format suitable for downstream functions of cognition and behavioral control. In this conception, vision is driven by the sensory data, and perception is direct because the processing proceeds from the data to the latent variables of interest. The notion of "inference" in this conception is that of the engineering literature on neural networks, where feedforward convolutional neural networks processing images are said to perform inference. The alternative conception is that of vision as an inference process in Helmholtz's sense, where the sensory evidence is evaluated in the context of a generative model of the causal processes giving rise to it. In this conception, vision inverts a generative model through an interrogation of the evidence in a process often thought to involve top-down predictions of sensory data to evaluate the likelihood of alternative hypotheses. The authors include scientists rooted in roughly equal numbers in each of the conceptions and motivated to overcome what might be a false dichotomy between them and engage the other perspective in the realm of theory and experiment. The primate brain employs an unknown algorithm that may combine the advantages of both conceptions. We explain and clarify the terminology, review the key empirical evidence, and propose an empirical research program that transcends the dichotomy and sets the stage for revealing the mysterious hybrid algorithm of primate vision.

The Topology and Geometry of Neural Representations

Sep 22, 2023

Abstract:A central question for neuroscience is how to characterize brain representations of perceptual and cognitive content. An ideal characterization should distinguish different functional regions with robustness to noise and idiosyncrasies of individual brains that do not correspond to computational differences. Previous studies have characterized brain representations by their representational geometry, which is defined by the representational dissimilarity matrix (RDM), a summary statistic that abstracts from the roles of individual neurons (or response channels) and characterizes the discriminability of stimuli. Here we explore a further step of abstraction: from the geometry to the topology of brain representations. We propose topological representational similarity analysis (tRSA), an extension of representational similarity analysis (RSA) that uses a family of geo-topological summary statistics that generalizes the RDM to characterize the topology while de-emphasizing the geometry. We evaluate this new family of statistics in terms of the sensitivity and specificity for model selection using both simulations and functional MRI (fMRI) data. In the simulations, the ground truth is a data-generating layer representation in a neural network model and the models are the same and other layers in different model instances (trained from different random seeds). In fMRI, the ground truth is a visual area and the models are the same and other areas measured in different subjects. Results show that topology-sensitive characterizations of population codes are robust to noise and interindividual variability and maintain excellent sensitivity to the unique representational signatures of different neural network layers and brain regions.

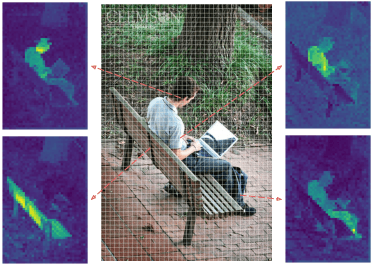

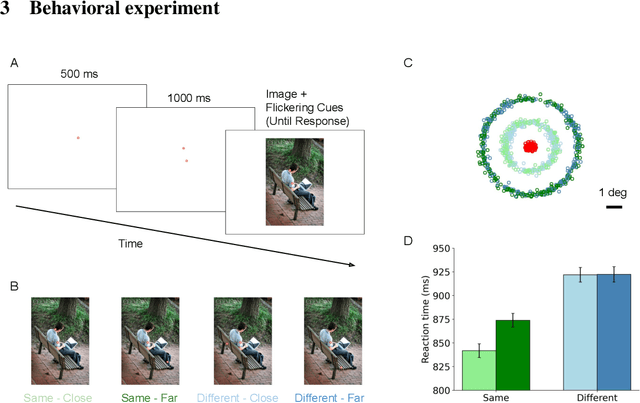

Affinity-based Attention in Self-supervised Transformers Predicts Dynamics of Object Grouping in Humans

Jun 01, 2023

Abstract:The spreading of attention has been proposed as a mechanism for how humans group features to segment objects. However, such a mechanism has not yet been implemented and tested in naturalistic images. Here, we leverage the feature maps from self-supervised vision Transformers and propose a model of human object-based attention spreading and segmentation. Attention spreads within an object through the feature affinity signal between different patches of the image. We also collected behavioral data on people grouping objects in natural images by judging whether two dots are on the same object or on two different objects. We found that our models of affinity spread that were built on feature maps from the self-supervised Transformers showed significant improvement over baseline and CNN based models on predicting reaction time patterns of humans, despite not being trained on the task or with any other object labels. Our work provides new benchmarks for evaluating models of visual representation learning including Transformers.

Distinguishing representational geometries with controversial stimuli: Bayesian experimental design and its application to face dissimilarity judgments

Nov 28, 2022

Abstract:Comparing representations of complex stimuli in neural network layers to human brain representations or behavioral judgments can guide model development. However, even qualitatively distinct neural network models often predict similar representational geometries of typical stimulus sets. We propose a Bayesian experimental design approach to synthesizing stimulus sets for adjudicating among representational models efficiently. We apply our method to discriminate among candidate neural network models of behavioral face dissimilarity judgments. Our results indicate that a neural network trained to invert a 3D-face-model graphics renderer is more human-aligned than the same architecture trained on identification, classification, or autoencoding. Our proposed stimulus synthesis objective is generally applicable to designing experiments to be analyzed by representational similarity analysis for model comparison.

Testing the limits of natural language models for predicting human language judgments

Apr 07, 2022

Abstract:Neural network language models can serve as computational hypotheses about how humans process language. We compared the model-human consistency of diverse language models using a novel experimental approach: controversial sentence pairs. For each controversial sentence pair, two language models disagree about which sentence is more likely to occur in natural text. Considering nine language models (including n-gram, recurrent neural networks, and transformer models), we created hundreds of such controversial sentence pairs by either selecting sentences from a corpus or synthetically optimizing sentence pairs to be highly controversial. Human subjects then provided judgments indicating for each pair which of the two sentences is more likely. Controversial sentence pairs proved highly effective at revealing model failures and identifying models that aligned most closely with human judgments. The most human-consistent model tested was GPT-2, although experiments also revealed significant shortcomings of its alignment with human perception.
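The idea of selecting controversial sentence pairs from a corpus can be illustrated with a minimal sketch. It assumes each model has already assigned a log-probability to every candidate sentence. The scoring rule (the smaller of the two models' preference margins, counted only when the models disagree), the function names, and the toy log-probabilities are all hypothetical illustrations, not the objective or data used in the paper.

```python
from itertools import combinations


def controversiality(logp_a, logp_b, s1, s2):
    """Hypothetical score: positive only when the two models disagree
    about which of the two sentences is more likely."""
    pref_a = logp_a[s1] - logp_a[s2]  # > 0 means model A prefers s1
    pref_b = logp_b[s1] - logp_b[s2]  # > 0 means model B prefers s1
    if pref_a * pref_b >= 0:
        return 0.0  # both models prefer the same sentence (or one is indifferent)
    # Both models must be confident for the pair to score highly.
    return min(abs(pref_a), abs(pref_b))


def most_controversial_pairs(sentences, logp_a, logp_b, k=1):
    """Rank all sentence pairs by the score above and keep the top k."""
    scored = [
        (controversiality(logp_a, logp_b, s1, s2), s1, s2)
        for s1, s2 in combinations(sentences, 2)
    ]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:k]


# Toy log-probabilities (made-up values, for illustration only).
sentences = ["s1", "s2", "s3"]
logp_a = {"s1": -10.0, "s2": -14.0, "s3": -12.0}
logp_b = {"s1": -15.0, "s2": -11.0, "s3": -12.5}

top = most_controversial_pairs(sentences, logp_a, logp_b, k=1)
```

In this toy case the top pair is the one where model A strongly prefers the first sentence and model B strongly prefers the second, which is the kind of pair a human judgment can use to separate the two models.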

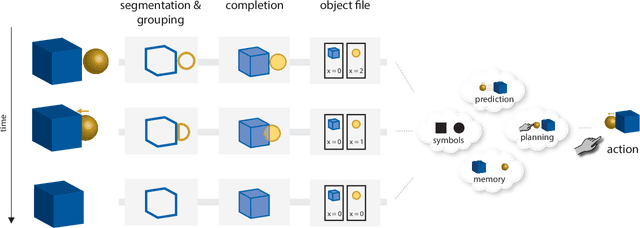

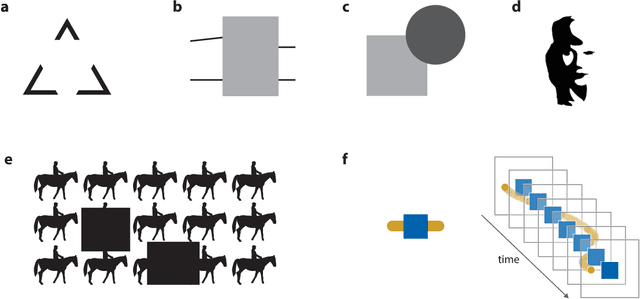

Capturing the objects of vision with neural networks

Sep 07, 2021

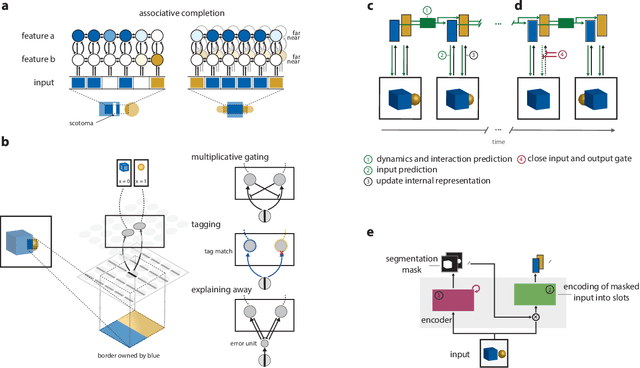

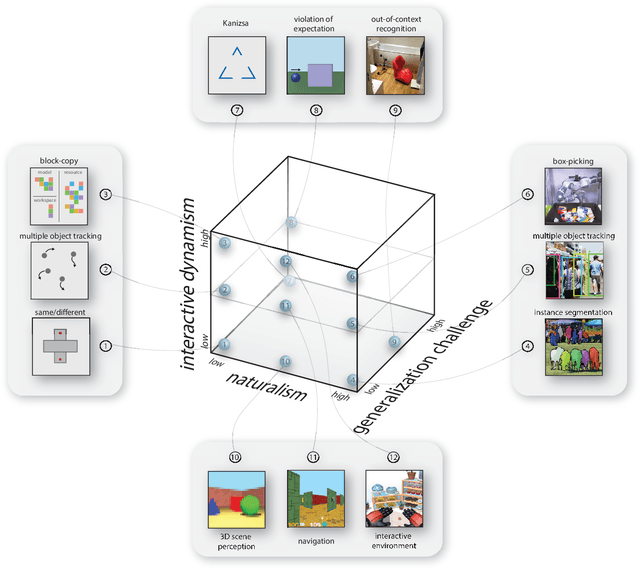

Abstract:Human visual perception carves a scene at its physical joints, decomposing the world into objects, which are selectively attended, tracked, and predicted as we engage our surroundings. Object representations emancipate perception from the sensory input, enabling us to keep in mind that which is out of sight and to use perceptual content as a basis for action and symbolic cognition. Human behavioral studies have documented how object representations emerge through grouping, amodal completion, proto-objects, and object files. Deep neural network (DNN) models of visual object recognition, by contrast, remain largely tethered to the sensory input, despite achieving human-level performance at labeling objects. Here, we review related work in both fields and examine how these fields can help each other. The cognitive literature provides a starting point for the development of new experimental tasks that reveal mechanisms of human object perception and serve as benchmarks driving development of deep neural network models that will put the object into object recognition.

Going in circles is the way forward: the role of recurrence in visual inference

Mar 26, 2020

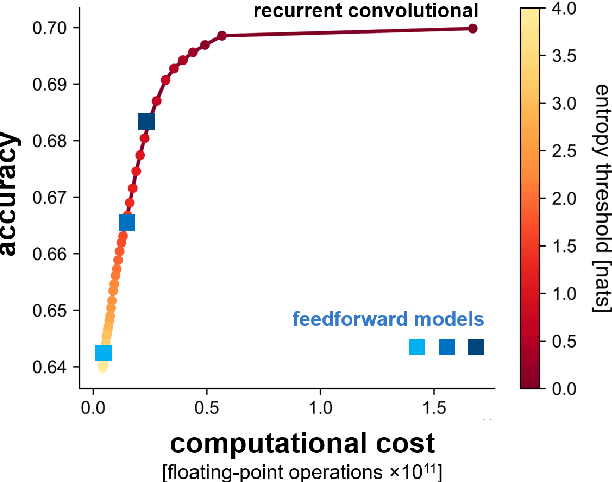

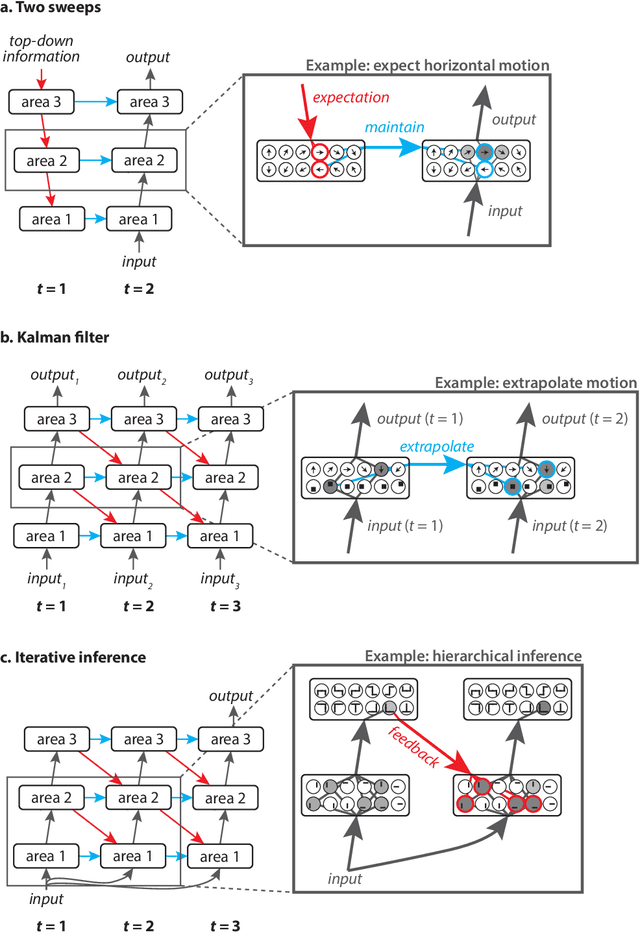

Abstract:Biological visual systems exhibit abundant recurrent connectivity. State-of-the-art neural network models for visual recognition, by contrast, rely heavily or exclusively on feedforward computation. Any finite-time recurrent neural network (RNN) can be unrolled along time to yield an equivalent feedforward neural network (FNN). This important insight suggests that computational neuroscientists may not need to engage recurrent computation, and that computer-vision engineers may be limiting themselves to a special case of FNN if they build recurrent models. Here we argue, to the contrary, that FNNs are a special case of RNNs and that computational neuroscientists and engineers should engage recurrence to understand how brains and machines can (1) achieve greater and more flexible computational depth, (2) compress complex computations into limited hardware, (3) integrate priors and priorities into visual inference through expectation and attention, (4) exploit sequential dependencies in their data for better inference and prediction, and (5) leverage the power of iterative computation.
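The unrolling statement in the abstract can be checked numerically with a small sketch. It assumes a static input, a tanh nonlinearity, and a zero initial state; the function and variable names are illustrative choices, not taken from the paper.

```python
import numpy as np


def rnn_forward(x, W_in, W_rec, n_steps):
    """Run a simple recurrent network for a fixed number of time steps
    on a static input x, reusing the same weights at every step."""
    h = np.zeros(W_rec.shape[0])
    for _ in range(n_steps):
        h = np.tanh(W_in @ x + W_rec @ h)
    return h


def unrolled_forward(x, layers):
    """Feedforward network obtained by unrolling: one layer per time step.
    Each layer has its own (W_in, W_rec) pair and also receives the input x."""
    h = np.zeros(layers[0][1].shape[0])
    for W_in, W_rec in layers:
        h = np.tanh(W_in @ x + W_rec @ h)
    return h


rng = np.random.default_rng(0)
x = rng.normal(size=3)
W_in = rng.normal(size=(4, 3))
W_rec = rng.normal(size=(4, 4))
n_steps = 5

# Unrolling ties the weights: every layer is a copy of the same pair.
layers = [(W_in, W_rec)] * n_steps

rnn_out = rnn_forward(x, W_in, W_rec, n_steps)
ffn_out = unrolled_forward(x, layers)
```

The two outputs agree because the unrolled network is the same computation written as a stack of layers. The recurrent form stores one set of weights regardless of the number of steps, whereas the unrolled form needs one layer per step.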

Controversial stimuli: pitting neural networks against each other as models of human recognition

Nov 21, 2019

Abstract:Distinct scientific theories can make similar predictions. To adjudicate between theories, we must design experiments for which the theories make distinct predictions. Here we consider the problem of comparing deep neural networks as models of human visual recognition. To efficiently determine which models better explain human responses, we synthesize controversial stimuli: images for which different models produce distinct responses. We tested nine different models, which employed different architectures and recognition algorithms, including discriminative and generative models, all trained to recognize handwritten digits (from the MNIST set of digit images). We synthesized controversial stimuli to maximize the disagreement among the models. Human subjects viewed hundreds of these stimuli and judged the probability of presence of each digit in each image. We quantified how accurately each model predicted the human judgements. We found that the generative models (which learn the distribution of images for each class) better predicted the human judgments than the discriminative models (which learn to directly map from images to labels). The best performing model was the generative Analysis-by-Synthesis model (based on variational autoencoders). However, a simpler generative model (based on Gaussian-kernel-density estimation) also performed better than each of the discriminative models. None of the candidate models fully explained the human responses. We discuss the advantages and limitations of controversial stimuli as an experimental paradigm and how they generalize and improve on adversarial examples as probes of discrepancies between models and human perception.

Visualizing Representational Dynamics with Multidimensional Scaling Alignment

Jul 28, 2019

Abstract:Representational similarity analysis (RSA) has been shown to be an effective framework to characterize brain-activity profiles and deep neural network activations as representational geometry by computing the pairwise distances of the response patterns as a representational dissimilarity matrix (RDM). However, how to properly analyze and visualize the representational geometry as dynamics over the time course from stimulus onset to offset is not well understood. In this work, we formulated the pipeline to understand representational dynamics with RDM movies and Procrustes-aligned Multidimensional Scaling (pMDS), and applied it to neural recording of monkey IT cortex. Our results suggest that the multidimensional scaling alignment can genuinely capture the dynamics of the category-specific representation spaces with multiple visualization possibilities, and that object categorization may be hierarchical, multi-staged, and oscillatory (or recurrent).
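As a rough illustration of the RDM and the "RDM movie" mentioned in the abstract, the sketch below computes one RDM per time point from a stack of response patterns. Euclidean distance is an assumption made here for simplicity (the abstract only says "pairwise distances"), the random data stand in for real recordings, and the function names are illustrative. The Procrustes alignment step is not shown.

```python
import numpy as np


def compute_rdm(patterns):
    """patterns: array of shape (n_stimuli, n_channels).
    Returns an (n_stimuli, n_stimuli) matrix of pairwise Euclidean
    distances between response patterns (distance choice is an assumption)."""
    diffs = patterns[:, None, :] - patterns[None, :, :]
    return np.sqrt((diffs ** 2).sum(axis=-1))


def rdm_movie(responses):
    """responses: array of shape (n_timepoints, n_stimuli, n_channels).
    Returns one RDM per time point, stacked along the first axis."""
    return np.stack([compute_rdm(frame) for frame in responses])


# Random placeholder data: 5 time points, 4 stimuli, 10 response channels.
rng = np.random.default_rng(0)
responses = rng.normal(size=(5, 4, 10))
movie = rdm_movie(responses)
```

Each frame of `movie` is symmetric with a zero diagonal, so in practice only the upper triangle needs to be stored or compared across time points.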
