Going in circles is the way forward: the role of recurrence in visual inference


Mar 26, 2020

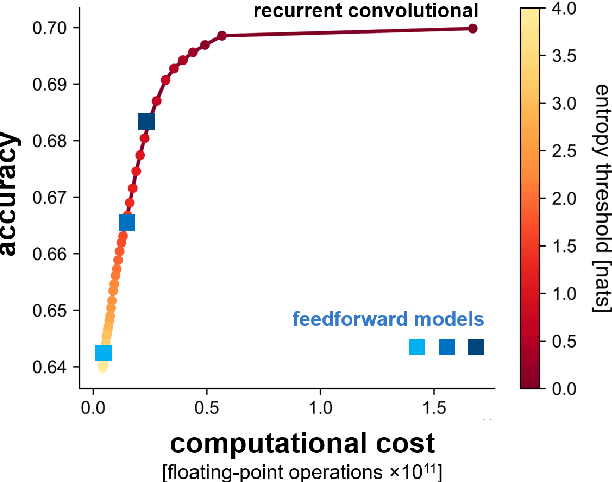

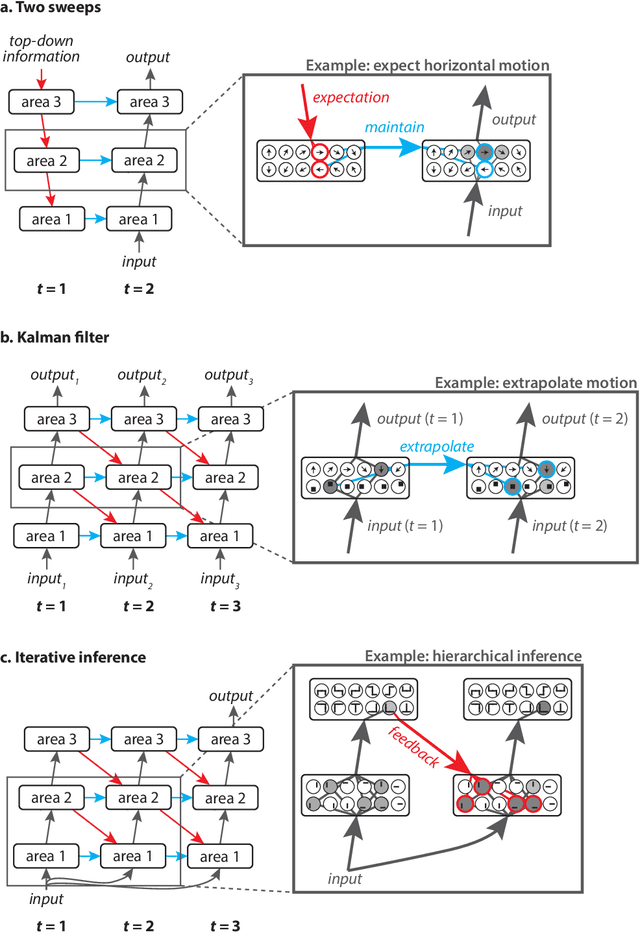

Biological visual systems exhibit abundant recurrent connectivity. State-of-the-art neural network models for visual recognition, by contrast, rely heavily or exclusively on feedforward computation. Any finite-time recurrent neural network (RNN) can be unrolled along time to yield an equivalent feedforward neural network (FNN). This important insight suggests that computational neuroscientists may not need to engage recurrent computation, and that computer-vision engineers may be limiting themselves to a special case of FNN if they build recurrent models. Here we argue, to the contrary, that FNNs are a special case of RNNs and that computational neuroscientists and engineers should engage recurrence to understand how brains and machines can (1) achieve greater and more flexible computational depth, (2) compress complex computations into limited hardware, (3) integrate priors and priorities into visual inference through expectation and attention, (4) exploit sequential dependencies in their data for better inference and prediction, and (5) leverage the power of iterative computation.
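
The unrolling argument in the abstract can be made concrete with a small sketch. The code below is an illustration only, not the authors' model: it assumes a single recurrent layer with a tanh nonlinearity, a static input presented at every time step, and hypothetical names (`step`, `run_recurrent`, `unroll`, `run_feedforward`). It iterates the recurrent layer for a fixed number of steps, then copies the shared weights into one separate layer per time step and checks that the resulting feedforward stack gives the same output.

```python
import math
import random


def step(x, h, w_in, w_rec):
    """One update: h_new[i] = tanh(w_in[i] . x + w_rec[i] . h)."""
    return [
        math.tanh(
            sum(w * xj for w, xj in zip(w_in[i], x))
            + sum(w * hk for w, hk in zip(w_rec[i], h))
        )
        for i in range(len(w_rec))
    ]


def run_recurrent(x, w_in, w_rec, steps):
    """Apply the same weights repeatedly for a finite number of time steps."""
    h = [0.0] * len(w_rec)
    for _ in range(steps):
        h = step(x, h, w_in, w_rec)
    return h


def unroll(w_in, w_rec, steps):
    """Copy the shared weights into one independent layer per time step."""
    return [
        ([row[:] for row in w_in], [row[:] for row in w_rec])
        for _ in range(steps)
    ]


def run_feedforward(x, layers):
    """Run the unrolled stack; each layer sees the input via a skip connection."""
    h = [0.0] * len(layers[0][1])
    for w_skip, w_prev in layers:
        h = step(x, h, w_skip, w_prev)
    return h


def count_weights(w_in, w_rec):
    """Number of scalar weights in one (input, recurrent) weight pair."""
    return sum(len(row) for row in w_in) + sum(len(row) for row in w_rec)


# Arbitrary sizes chosen for illustration.
random.seed(0)
n_in, n_hidden, steps = 4, 3, 5
x = [random.gauss(0, 1) for _ in range(n_in)]
w_in = [[random.gauss(0, 1) for _ in range(n_in)] for _ in range(n_hidden)]
w_rec = [[random.gauss(0, 1) for _ in range(n_hidden)] for _ in range(n_hidden)]

h_rnn = run_recurrent(x, w_in, w_rec, steps)
layers = unroll(w_in, w_rec, steps)
h_fnn = run_feedforward(x, layers)

# Same function, different hardware cost: the unrolled network stores
# one copy of the weights per time step.
rnn_weights = count_weights(w_in, w_rec)
fnn_weights = sum(count_weights(a, b) for a, b in layers)
```

In this sketch the two networks compute the same output, but the unrolled version stores `steps` copies of the weights, which is one way to read point (2) about compressing computation into limited hardware. The recurrent version can also be run for more or fewer steps without changing its parameters, which relates to point (1) on flexible computational depth.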
