Maria Vittoria Minniti

AnyTask: an Automated Task and Data Generation Framework for Advancing Sim-to-Real Policy Learning

Dec 19, 2025

Abstract:Generalist robot learning remains constrained by data: large-scale, diverse, and high-quality interaction data are expensive to collect in the real world. While simulation has become a promising way for scaling up data collection, the related tasks, including simulation task design, task-aware scene generation, expert demonstration synthesis, and sim-to-real transfer, still demand substantial human effort. We present AnyTask, an automated framework that pairs massively parallel GPU simulation with foundation models to design diverse manipulation tasks and synthesize robot data. We introduce three AnyTask agents for generating expert demonstrations aiming to solve as many tasks as possible: 1) ViPR, a novel task and motion planning agent with VLM-in-the-loop Parallel Refinement; 2) ViPR-Eureka, a reinforcement learning agent with generated dense rewards and LLM-guided contact sampling; 3) ViPR-RL, a hybrid planning and learning approach that jointly produces high-quality demonstrations with only sparse rewards. We train behavior cloning policies on generated data, validate them in simulation, and deploy them directly on real robot hardware. The policies generalize to novel object poses, achieving 44% average success across a suite of real-world pick-and-place, drawer opening, contact-rich pushing, and long-horizon manipulation tasks. Our project website is at https://anytask.rai-inst.com.

Real-is-Sim: Bridging the Sim-to-Real Gap with a Dynamic Digital Twin for Real-World Robot Policy Evaluation

Apr 04, 2025

Abstract:Recent advancements in behavior cloning have enabled robots to perform complex manipulation tasks. However, accurately assessing training performance remains challenging, particularly for real-world applications, as behavior cloning losses often correlate poorly with actual task success. Consequently, researchers resort to success rate metrics derived from costly and time-consuming real-world evaluations, making the identification of optimal policies and detection of overfitting or underfitting impractical. To address these issues, we propose real-is-sim, a novel behavior cloning framework that incorporates a dynamic digital twin (based on Embodied Gaussians) throughout the entire policy development pipeline: data collection, training, and deployment. By continuously aligning the simulated world with the physical world, demonstrations can be collected in the real world with states extracted from the simulator. The simulator enables flexible state representations by rendering image inputs from any viewpoint or extracting low-level state information from objects embodied within the scene. During training, policies can be directly evaluated within the simulator in an offline and highly parallelizable manner. Finally, during deployment, policies are run within the simulator where the real robot directly tracks the simulated robot's joints, effectively decoupling policy execution from real hardware and mitigating traditional domain-transfer challenges. We validate real-is-sim on the PushT manipulation task, demonstrating strong correlation between success rates obtained in the simulator and real-world evaluations. Videos of our system can be found at https://realissim.rai-inst.com.

Theia: Distilling Diverse Vision Foundation Models for Robot Learning

Jul 29, 2024

Abstract:Vision-based robot policy learning, which maps visual inputs to actions, necessitates a holistic understanding of diverse visual tasks beyond single-task needs like classification or segmentation. Inspired by this, we introduce Theia, a vision foundation model for robot learning that distills multiple off-the-shelf vision foundation models trained on varied vision tasks. Theia's rich visual representations encode diverse visual knowledge, enhancing downstream robot learning. Extensive experiments demonstrate that Theia outperforms its teacher models and prior robot learning models using less training data and smaller model sizes. Additionally, we quantify the quality of pre-trained visual representations and hypothesize that higher entropy in feature norm distributions leads to improved robot learning performance. Code and models are available at https://github.com/bdaiinstitute/theia.

Bayesian Multi-Task Learning MPC for Robotic Mobile Manipulation

Nov 18, 2022

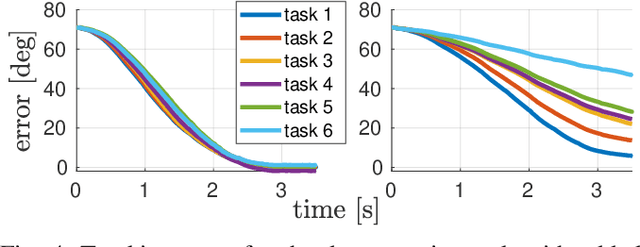

Abstract:Mobile manipulation in robotics is challenging due to the need to solve many diverse tasks, such as opening a door or picking and placing an object. Typically, a basic first-principles system description of the robot is available, thus motivating the use of model-based controllers. However, the robot dynamics and its interaction with an object are affected by uncertainty, limiting the controller's performance. To tackle this problem, we propose a Bayesian multi-task learning model that uses trigonometric basis functions to identify the error in the dynamics. In this way, data from different but related tasks can be leveraged to provide a descriptive error model that can be efficiently updated online for new, unseen tasks. We combine this learning scheme with a model predictive controller, and extensively test the effectiveness of the proposed approach, including comparisons with available baseline controllers. We present simulation tests with a ball-balancing robot, and door-opening hardware experiments with a quadrupedal manipulator.
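
As a rough illustration of the idea of fitting a dynamics error with trigonometric basis functions and updating it online, the sketch below implements plain recursive Bayesian linear regression on a scalar input. It is not the paper's model: it covers a single task only (no sharing across tasks), and every name and value in it (`trig_features`, `BayesianTrigRegressor`, `num_freqs`, `prior_var`, `noise_var`, the synthetic `true_error` function) is a hypothetical choice made for this example.

```python
import math


def trig_features(x, num_freqs=2):
    """Return [1, sin(x), cos(x), sin(2x), cos(2x), ...] for a scalar x."""
    feats = [1.0]
    for k in range(1, num_freqs + 1):
        feats.append(math.sin(k * x))
        feats.append(math.cos(k * x))
    return feats


class BayesianTrigRegressor:
    """Gaussian posterior over the weights of a trigonometric feature model.

    Hypothetical single-task sketch; prior and noise variances are assumed.
    """

    def __init__(self, num_freqs=2, prior_var=10.0, noise_var=0.01):
        self.num_freqs = num_freqs
        self.noise_var = noise_var
        dim = 2 * num_freqs + 1
        self.mean = [0.0] * dim
        # Isotropic Gaussian prior: covariance = prior_var * identity.
        self.cov = [[prior_var if i == j else 0.0 for j in range(dim)]
                    for i in range(dim)]

    def update(self, x, y):
        """Rank-one posterior update from one observation (x, y)."""
        phi = trig_features(x, self.num_freqs)
        dim = len(phi)
        cov_phi = [sum(self.cov[i][j] * phi[j] for j in range(dim))
                   for i in range(dim)]
        # Predictive variance of y under the current posterior.
        s = self.noise_var + sum(phi[i] * cov_phi[i] for i in range(dim))
        residual = y - sum(phi[i] * self.mean[i] for i in range(dim))
        gain = [c / s for c in cov_phi]
        self.mean = [self.mean[i] + gain[i] * residual for i in range(dim)]
        self.cov = [[self.cov[i][j] - gain[i] * cov_phi[j]
                     for j in range(dim)] for i in range(dim)]

    def predict(self, x):
        """Posterior mean prediction at x."""
        phi = trig_features(x, self.num_freqs)
        return sum(p * m for p, m in zip(phi, self.mean))


def true_error(x):
    """Synthetic stand-in for an unknown model error (assumed for the demo)."""
    return 0.5 * math.sin(x) - 0.2 * math.cos(2.0 * x)


model = BayesianTrigRegressor()
for i in range(50):
    sample_x = 2.0 * math.pi * i / 50
    model.update(sample_x, true_error(sample_x))
```

Each call to `update` costs a fixed amount of work in the number of features, which is the property that makes this kind of model cheap to refresh inside a control loop. A multi-task variant would additionally need a way to share the prior across tasks, which this sketch leaves out.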

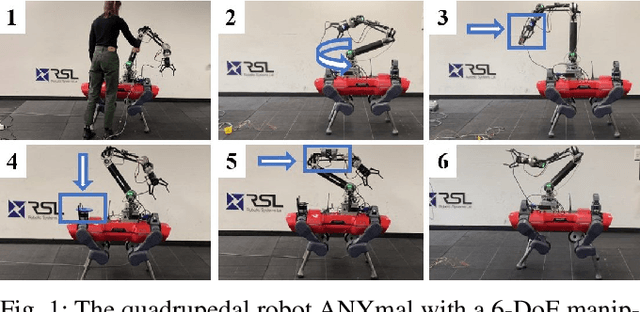

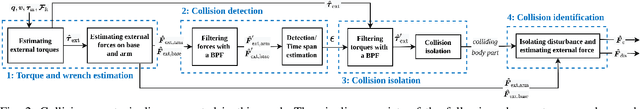

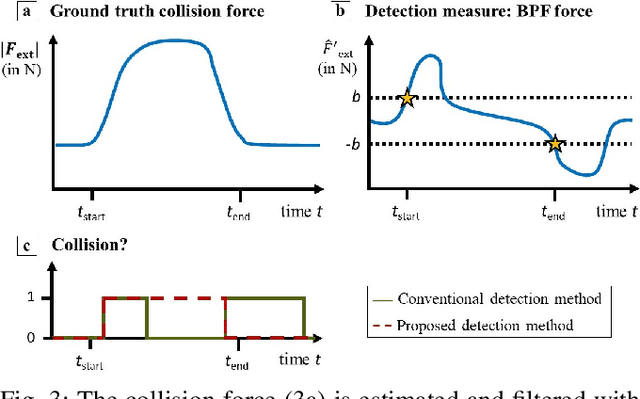

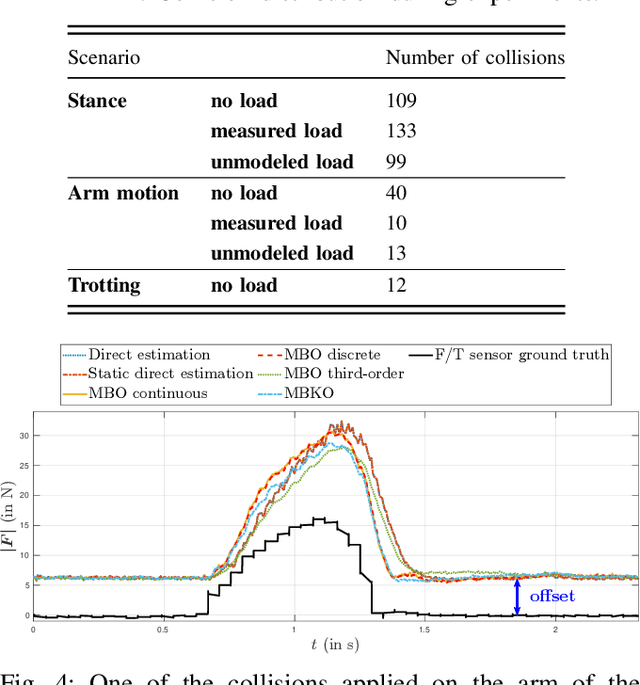

Collision detection and identification for a legged manipulator

Jul 29, 2022

Abstract:To safely deploy legged robots in the real world it is necessary to provide them with the ability to reliably detect unexpected contacts and accurately estimate the corresponding contact force. In this paper, we propose a collision detection and identification pipeline for a quadrupedal manipulator. We first introduce an approach to estimate the collision time span based on band-pass filtering and show that this information is key for obtaining accurate collision force estimates. We then improve the accuracy of the identified force magnitude by compensating for model inaccuracies, unmodeled loads, and any other potential source of quasi-static disturbances acting on the robot. We validate our framework with extensive hardware experiments in various scenarios, including trotting and additional unmodeled load on the robot.

Adaptive CLF-MPC With Application To Quadrupedal Robots

Dec 08, 2021

Abstract:Modern robotic systems are endowed with superior mobility and mechanical skills that make them suited to be employed in real-world scenarios, where interactions with heavy objects and precise manipulation capabilities are required. For instance, legged robots with high payload capacity can be used in disaster scenarios to remove dangerous material or carry injured people. It is thus essential to develop planning algorithms that can enable complex robots to perform motion and manipulation tasks accurately. In addition, online adaptation mechanisms with respect to new, unknown environments are needed. In this work, we impose that the optimal state-input trajectories generated by Model Predictive Control (MPC) satisfy the Lyapunov function criterion derived in adaptive control for robotic systems. As a result, we combine the stability guarantees provided by Control Lyapunov Functions (CLFs) and the optimality offered by MPC in a unified adaptive framework, yielding an improved performance during the robot's interaction with unknown objects. We validate the proposed approach in simulation and hardware tests on a quadrupedal robot carrying un-modeled payloads and pulling heavy boxes.

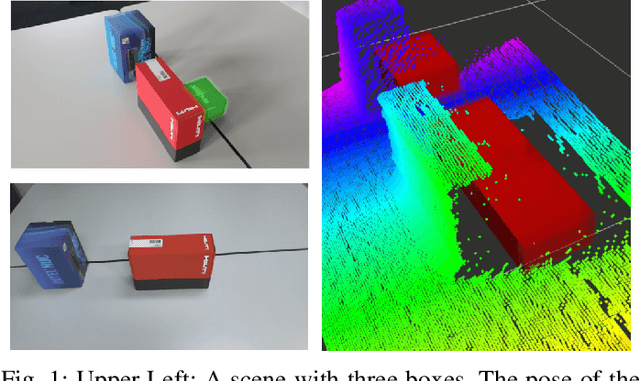

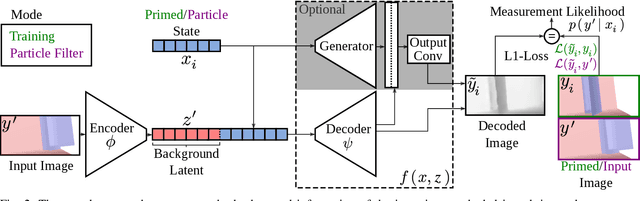

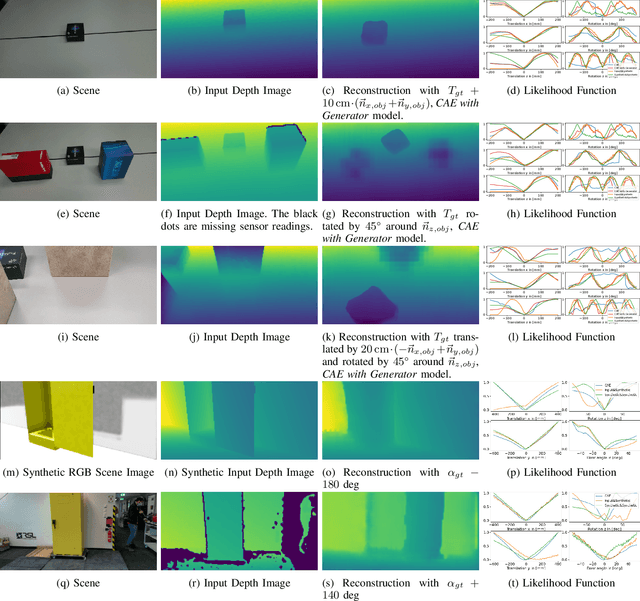

Deep Measurement Updates for Bayes Filters

Dec 01, 2021

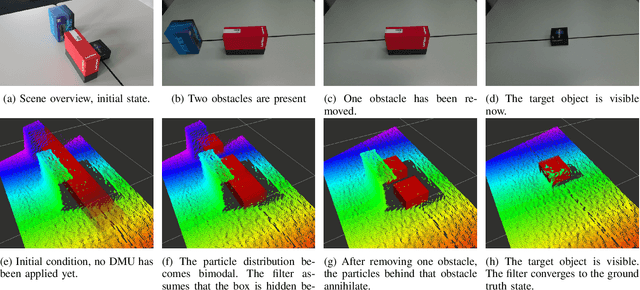

Abstract:Measurement update rules for Bayes filters often contain hand-crafted heuristics to compute observation probabilities for high-dimensional sensor data, like images. In this work, we propose the novel approach Deep Measurement Update (DMU) as a general update rule for a wide range of systems. DMU has a conditional encoder-decoder neural network structure to process depth images as raw inputs. Even though the network is trained only on synthetic data, the model shows good performance at evaluation time on real-world data. With our proposed training scheme, primed data training, we demonstrate how the DMU models can be trained efficiently to be sensitive to condition variables without having to rely on a stochastic information bottleneck. We validate the proposed methods in multiple scenarios of increasing complexity, from the pose estimation of a single object to the joint estimation of the pose and the internal state of an articulated system. Moreover, we provide a benchmark against Articulated Signed Distance Functions (A-SDF) on the RBO dataset as a baseline comparison for articulation state estimation.
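
For readers unfamiliar with the step being replaced, the following minimal sketch shows the textbook measurement update of a discrete Bayes filter, where the posterior is proportional to the observation likelihood times the prior. It is not the DMU network: the `likelihood` list here is hand-picked, standing in for whatever model (hand-crafted or learned) supplies observation probabilities, and the function name `measurement_update` and the four-state example are assumptions made for illustration.

```python
def measurement_update(prior, likelihood):
    """Discrete Bayes measurement update: posterior[i] ∝ likelihood[i] * prior[i]."""
    if len(prior) != len(likelihood):
        raise ValueError("prior and likelihood must have the same length")
    unnormalized = [p * l for p, l in zip(prior, likelihood)]
    total = sum(unnormalized)
    if total == 0.0:
        raise ValueError("observation has zero probability under every state")
    return [u / total for u in unnormalized]


# Four hypothetical states with a uniform prior belief.
prior = [0.25, 0.25, 0.25, 0.25]
# Assumed observation probabilities p(z | state); a learned model would
# produce these values from the raw sensor data instead.
likelihood = [0.1, 0.7, 0.1, 0.1]
posterior = measurement_update(prior, likelihood)
```

With a uniform prior the posterior simply mirrors the normalized likelihood, so the belief concentrates on the second state.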

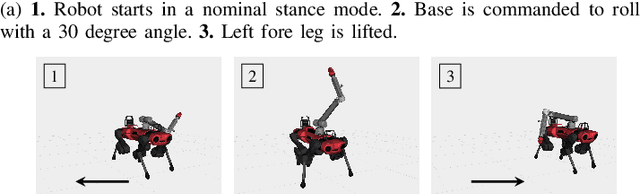

Passivity-based control for haptic teleoperation of a legged manipulator in presence of time-delays

Aug 17, 2021

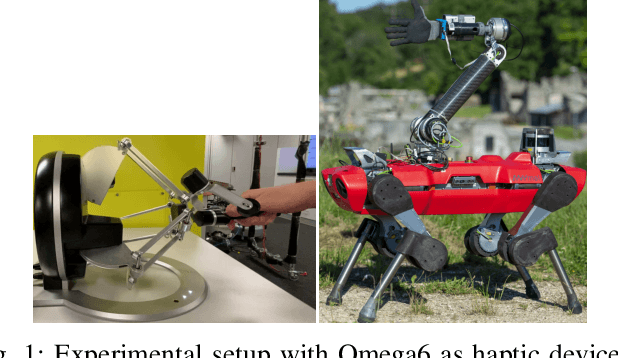

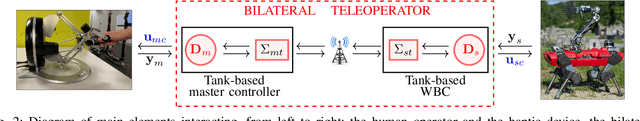

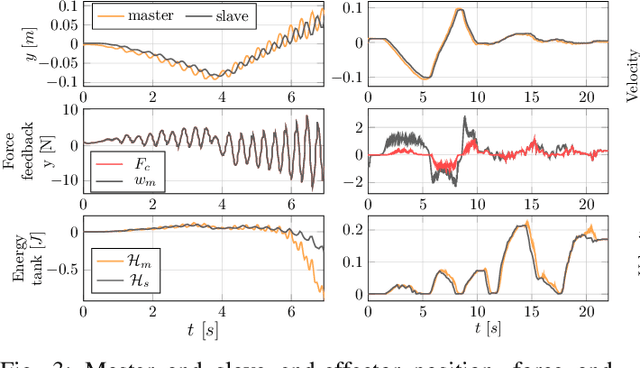

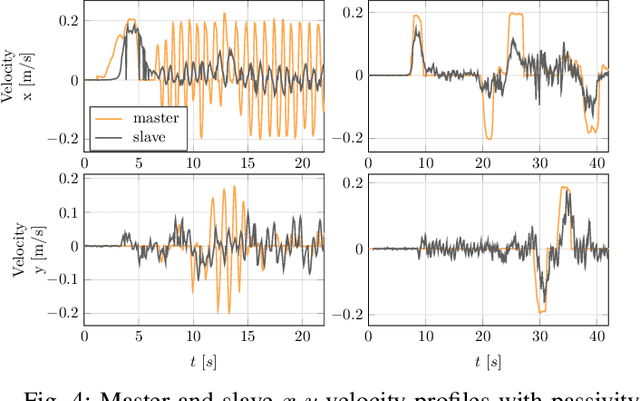

Abstract:When dealing with the haptic teleoperation of multi-limbed mobile manipulators, the problem of mitigating the destabilizing effects arising from the communication link between the haptic device and the remote robot has not been properly addressed. In this work, we propose a passive control architecture to haptically teleoperate a legged mobile manipulator, while remaining stable in the presence of time delays and frequency mismatches in the master and slave controllers. At the master side, a discrete-time energy modulation of the control input is proposed. At the slave side, passivity constraints are included in an optimization-based whole-body controller to satisfy the energy limitations. A hybrid teleoperation scheme allows the human operator to remotely operate the robot's end-effector while in stance mode, and its base velocity in locomotion mode. The resulting control architecture is demonstrated on a quadrupedal robot with an artificial delay added to the network.

Model Predictive Robot-Environment Interaction Control for Mobile Manipulation Tasks

Jun 08, 2021

Abstract:Modern, torque-controlled service robots can regulate contact forces when interacting with their environment. Model Predictive Control (MPC) is a powerful method to solve the underlying control problem, making it possible to plan whole-body motions while including different constraints imposed by the robot dynamics or its environment. However, an accurate model of the robot-environment interaction is needed to achieve satisfactory closed-loop performance. Currently, this necessity undermines the performance and generality of MPC in manipulation tasks. In this work, we combine an MPC-based whole-body controller with two adaptive schemes, derived from online system identification and adaptive control. As a result, we enable a general mobile manipulator to interact with unknown environments, without any need for re-tuning parameters or pre-modeling the interacting objects. In combination with the MPC controller, the two adaptive approaches are validated and benchmarked with a ball-balancing manipulator in door opening and object lifting tasks.

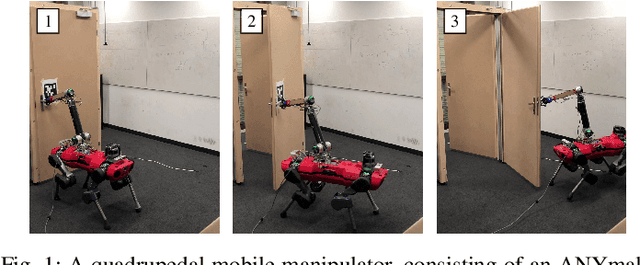

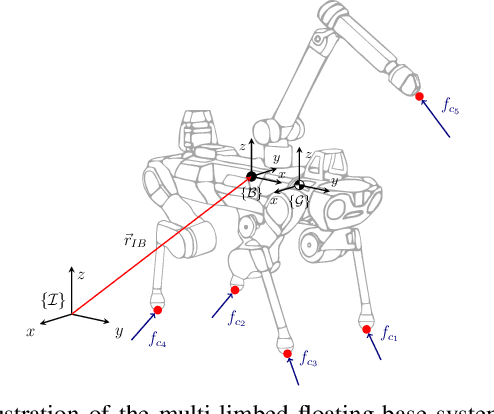

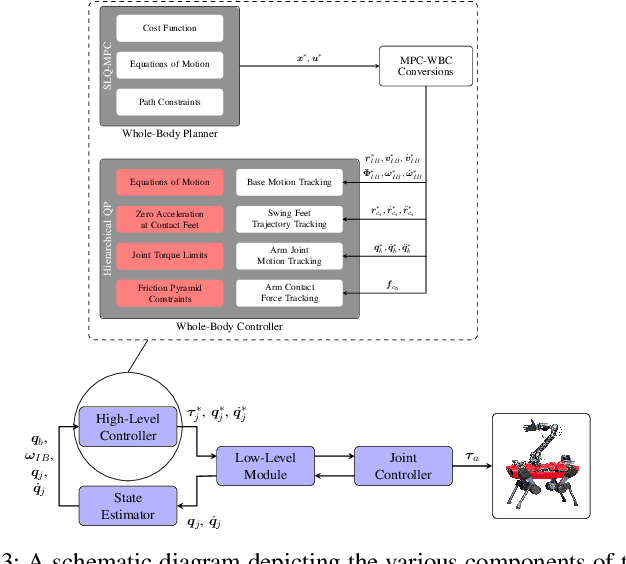

A Unified MPC Framework for Whole-Body Dynamic Locomotion and Manipulation

Mar 01, 2021

Abstract:In this paper, we propose a whole-body planning framework that unifies dynamic locomotion and manipulation tasks by formulating a single multi-contact optimal control problem. We model the hybrid nature of a generic multi-limbed mobile manipulator as a switched system, and introduce a set of constraints that can encode any pre-defined gait sequence or manipulation schedule in the formulation. Since the system is designed to actively manipulate its environment, the equations of motion are composed by augmenting the robot's centroidal dynamics with the manipulated-object dynamics. This allows us to describe any high-level task in the same cost/constraint function. The resulting planning framework could be solved on the robot's onboard computer in real-time within a model predictive control scheme. This is demonstrated in a set of real hardware experiments done in free-motion, such as base or end-effector pose tracking, and while pushing/pulling a heavy resistive door. Robustness against model mismatches and external disturbances is also verified during these test cases.
