Marcus Dominguez-Kuhne

Semantic-Metric Bayesian Risk Fields: Learning Robot Safety from Human Videos with a VLM Prior

Dec 09, 2025

Abstract: Humans interpret safety not as a binary signal but as a continuous, context- and spatially-dependent notion of risk. While risk is subjective, humans form rational mental models that guide action selection in dynamic environments. This work proposes a framework for extracting implicit human risk models by introducing a novel, semantically-conditioned and spatially-varying parametrization of risk, supervised directly from safe human demonstration videos and VLM common sense. Notably, we define risk through a Bayesian formulation. The prior is furnished by a pretrained vision-language model. To encourage the risk estimate to be more human-aligned, a likelihood function modulates the prior to produce a relative metric of risk. Specifically, the likelihood is a learned ViT that maps pretrained features to pixel-aligned risk values. Our pipeline ingests RGB images and a query object string, producing pixel-dense risk images. These images can then be used as value predictors in robot planning tasks or be projected into 3D for use in conventional trajectory optimization to produce human-like motion. This learned mapping enables generalization to novel objects and contexts, and has the potential to scale to much larger training datasets. In particular, the Bayesian framework that is introduced enables fast adaptation of our model to additional observations or common sense rules. We demonstrate that our proposed framework produces contextual risk that aligns with human preferences. Additionally, we illustrate several downstream applications of the model: as a value learner for visuomotor planners or in conjunction with a classical trajectory optimization algorithm. Our results suggest that our framework is a significant step toward enabling autonomous systems to internalize human-like risk. Code and results can be found at https://riskbayesian.github.io/bayesian_risk/.
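As a rough illustration of the Bayesian formulation described in this abstract, the sketch below multiplies a per-pixel prior by a per-pixel likelihood and rescales the product into a relative risk in [0, 1]. The function name `posterior_risk`, the max-normalization, and the toy 2x2 grids are assumptions made for illustration only; the VLM prior and the learned ViT likelihood from the paper are not reproduced here.

```python
from typing import List

Grid = List[List[float]]


def posterior_risk(prior: Grid, likelihood: Grid) -> Grid:
    """Pixel-wise prior * likelihood, rescaled so the largest value is 1.

    Hypothetical helper: the rescaling is one simple way to obtain a
    *relative* risk map, not necessarily the paper's normalization.
    """
    same_rows = len(prior) == len(likelihood)
    same_cols = all(len(p) == len(l) for p, l in zip(prior, likelihood))
    if not (same_rows and same_cols):
        raise ValueError("prior and likelihood must have the same shape")

    # Unnormalized posterior: element-wise product of the two maps.
    product = [
        [p * l for p, l in zip(prior_row, lik_row)]
        for prior_row, lik_row in zip(prior, likelihood)
    ]

    peak = max(max(row) for row in product)
    if peak <= 0.0:
        # No pixel carries any risk mass; return an all-zero map.
        return [[0.0 for _ in row] for row in product]
    return [[value / peak for value in row] for row in product]


# Toy stand-ins for the prior (VLM) and likelihood (learned network) maps.
prior = [[0.2, 0.8], [0.5, 0.1]]
likelihood = [[1.0, 0.5], [0.4, 1.0]]
risk = posterior_risk(prior, likelihood)
```

In this toy case the likelihood halves the weight of the pixel the prior rated highest, yet that pixel still ends up with the largest relative risk.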

Joint-Space Multi-Robot Motion Planning with Learned Decentralized Heuristics

Nov 21, 2023

Abstract: In this paper, we present a method of multi-robot motion planning by biasing centralized, sampling-based tree search with decentralized, data-driven steer and distance heuristics. Over a range of robot and obstacle densities, we evaluate the plain Rapidly-exploring Random Tree (RRT) and variants of our method for double integrator dynamics. We show that whereas plain RRT fails in every instance to plan for 4 robots, our method can plan for up to 16 robots, corresponding to searching through a very large 65-dimensional space, which validates the effectiveness of data-driven heuristics at combating exponential search space growth. We also find that the heuristic information is complementary; using both heuristics produces search trees with lower failure rates, nodes, and path costs when compared to using each in isolation. These results illustrate the effective decomposition of high-dimensional joint-space motion planning problems into local problems.

Learning Deformable Object Manipulation from Expert Demonstrations

Jul 20, 2022

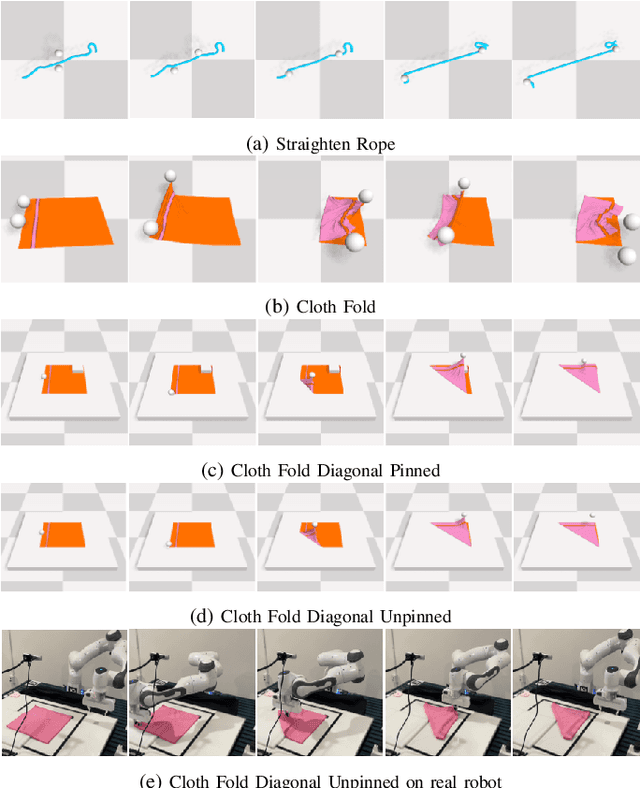

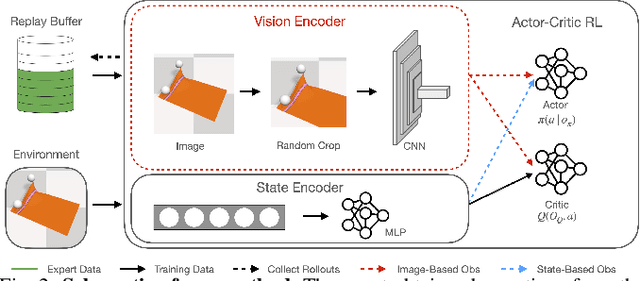

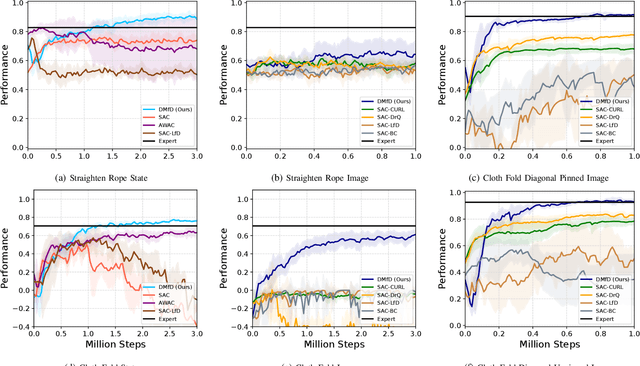

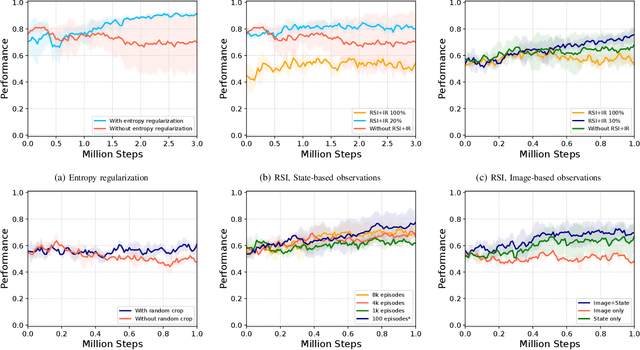

Abstract: We present a novel Learning from Demonstration (LfD) method, Deformable Manipulation from Demonstrations (DMfD), to solve deformable manipulation tasks using states or images as inputs, given expert demonstrations. Our method uses demonstrations in three different ways, and balances the trade-off between exploring the environment online and using guidance from experts to explore high-dimensional spaces effectively. We test DMfD on a set of representative manipulation tasks for a 1-dimensional rope and a 2-dimensional cloth from the SoftGym suite of tasks, each with state and image observations. Our method exceeds baseline performance by up to 12.9% for state-based tasks and up to 33.44% on image-based tasks, with comparable or better robustness to randomness. Additionally, we create two challenging environments for folding a 2D cloth using image-based observations, and set a performance benchmark for them. We deploy DMfD on a real robot with a minimal loss in normalized performance during real-world execution compared to simulation (~6%). Source code is on github.com/uscresl/dmfd.

* Accepted to IEEE Robotics & Automation Letters (RA-L) and IEEE IROS 2022. Project website: https://uscresl.github.io/dmfd

Mechanical Search on Shelves using Lateral Access X-RAY

Nov 23, 2020

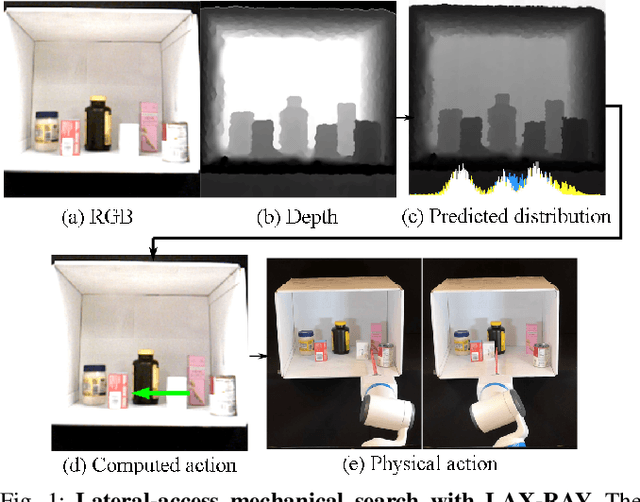

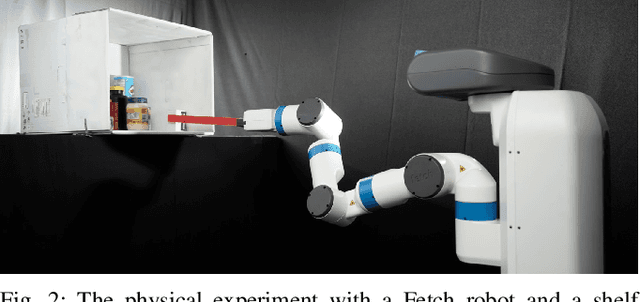

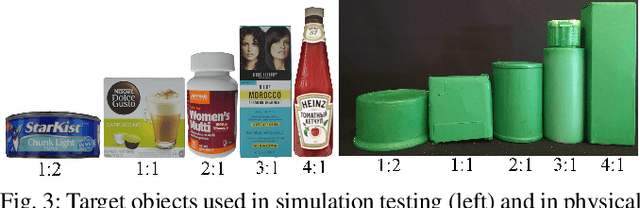

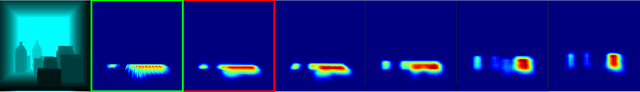

Abstract: Efficiently finding an occluded object with lateral access arises in many contexts such as warehouses, retail, healthcare, shipping, and homes. We introduce LAX-RAY (Lateral Access maXimal Reduction of occupancY support Area), a system to automate the mechanical search for occluded objects on shelves. For such lateral access environments, LAX-RAY couples a perception pipeline predicting a target object occupancy support distribution with a mechanical search policy that sequentially selects occluding objects to push to the side to reveal the target as efficiently as possible. Within the context of extruded polygonal objects and a stationary target with a known aspect ratio, we explore three lateral access search policies: Distribution Area Reduction (DAR), Distribution Entropy Reduction (DER), and Distribution Entropy Reduction over Multiple Time Steps (DER-MT) utilizing the support distribution and prior information. We evaluate these policies using the First-Order Shelf Simulator (FOSS) in which we simulate 800 random shelf environments of varying difficulty, and in a physical shelf environment with a Fetch robot and an embedded PrimeSense RGBD camera. Average simulation results of 87.3% success rate demonstrate better performance of DER-MT with 2 prediction steps. When deployed on the robot, results show a success rate of at least 80% for all policies, suggesting that LAX-RAY can efficiently reveal the target object in reality. Both results show significantly better performance of the three proposed policies compared to a baseline policy with a uniform probability distribution assumption in non-trivial cases, showing the importance of distribution prediction. Code, videos, and supplementary material can be found at https://sites.google.com/berkeley.edu/lax-ray.
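The abstract does not spell out how the entropy-based policy scores candidate pushes. The sketch below shows one plausible greedy, single-step reading: compute the Shannon entropy of the occupancy distribution predicted after each candidate push and pick the push with the lowest value. The names `entropy` and `pick_push`, the action labels, and the toy distributions are hypothetical and are not taken from the LAX-RAY code.

```python
import math
from typing import Dict, List


def entropy(dist: List[float]) -> float:
    """Shannon entropy (in nats) of a non-negative weight vector.

    The weights are normalized to sum to 1 before the entropy is computed.
    """
    total = sum(dist)
    if total <= 0.0:
        raise ValueError("distribution must have positive total mass")
    probs = [w / total for w in dist if w > 0.0]
    return -sum(p * math.log(p) for p in probs)


def pick_push(predicted: Dict[str, List[float]]) -> str:
    """Return the action whose predicted distribution has the lowest entropy.

    `predicted` maps a hypothetical action label to the occupancy
    distribution expected after taking that action.
    """
    if not predicted:
        raise ValueError("no candidate actions given")
    return min(predicted, key=lambda action: entropy(predicted[action]))


# Toy example: pushing object B is expected to concentrate the
# distribution on one cell, so it leaves less uncertainty than pushing A.
candidates = {
    "push_a": [0.25, 0.25, 0.25, 0.25],
    "push_b": [0.70, 0.10, 0.10, 0.10],
}
best = pick_push(candidates)
```

A multi-step variant in the spirit of DER-MT would score sequences of pushes rather than single pushes; that extension is not shown here.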

Visuomotor Mechanical Search: Learning to Retrieve Target Objects in Clutter

Aug 13, 2020

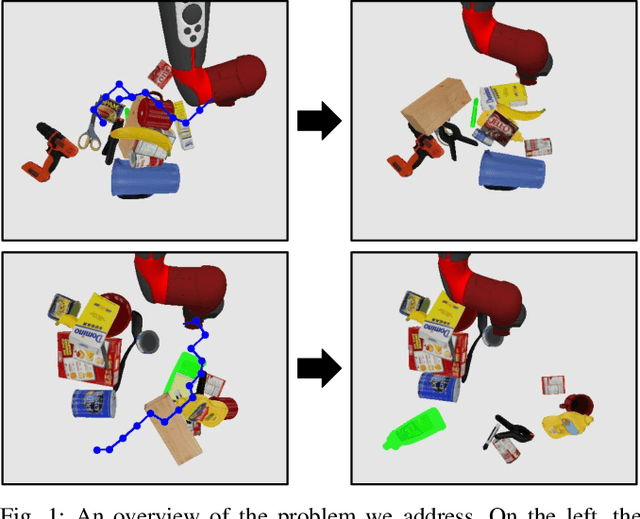

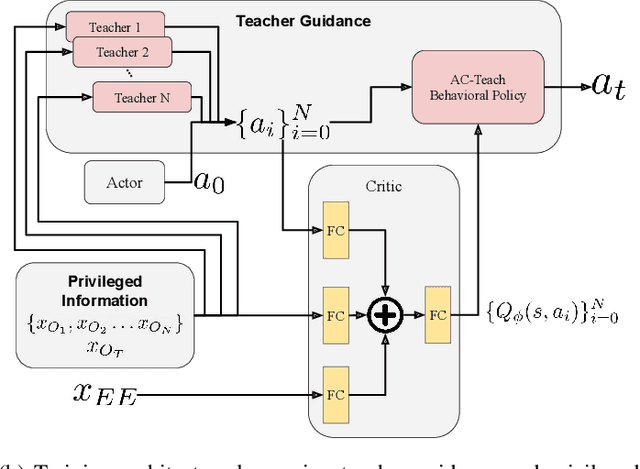

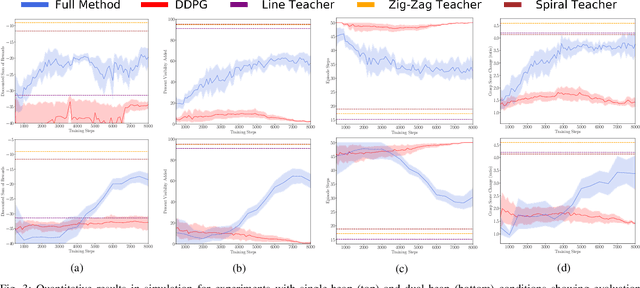

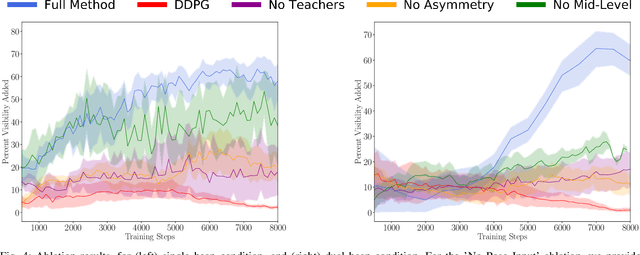

Abstract: When searching for objects in cluttered environments, it is often necessary to perform complex interactions in order to move occluding objects out of the way, fully reveal the object of interest, and make it graspable. Due to the complexity of the physics involved and the lack of accurate models of the clutter, planning and controlling precise predefined interactions with accurate outcomes is extremely hard, if not impossible. In problems where accurate (forward) models are lacking, Deep Reinforcement Learning (RL) has been shown to be a viable solution to map observations (e.g. images) to good interactions in the form of closed-loop visuomotor policies. However, Deep RL is sample inefficient and fails when applied directly to the problem of unoccluding objects based on images. In this work, we present a novel Deep RL procedure that combines i) teacher-aided exploration, ii) a critic with privileged information, and iii) mid-level representations, resulting in sample-efficient and effective learning for the problem of uncovering a target object occluded by a heap of unknown objects. Our experiments show that our approach trains faster and converges to more efficient uncovering solutions than baselines and ablations, and that our uncovering policies lead to an average improvement in the graspability of the target object, facilitating downstream retrieval applications.
