Li Shi

DINT Transformer

Jan 29, 2025

Abstract:DIFF Transformer addresses the issue of irrelevant context interference by introducing a differential attention mechanism that enhances the robustness of local attention. However, it has two critical limitations: the lack of global context modeling, which is essential for identifying globally significant tokens, and numerical instability due to the absence of strict row normalization in the attention matrix. To overcome these challenges, we propose DINT Transformer, which extends DIFF Transformer by incorporating a differential-integral mechanism. By computing global importance scores and integrating them into the attention matrix, DINT Transformer improves its ability to capture global dependencies. Moreover, the unified parameter design enforces row-normalized attention matrices, improving numerical stability. Experimental results demonstrate that DINT Transformer excels in accuracy and robustness across various practical applications, such as long-context language modeling and key information retrieval. These results position DINT Transformer as a highly effective and promising architecture.
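
The row-normalization claim can be illustrated with a small sketch. The abstract does not give the formulas, so everything below is an assumption: the differential term is taken to be the difference of two softmax maps scaled by a hypothetical parameter `lam`, the global importance scores are taken to be the column means of the first softmax map, and the same `lam` weights the integral term. Causal masking and multi-head details are left out.

```python
import math


def softmax_rows(scores):
    """Apply a numerically stable softmax to each row of a 2D list."""
    result = []
    for row in scores:
        row_max = max(row)
        exps = [math.exp(x - row_max) for x in row]
        total = sum(exps)
        result.append([e / total for e in exps])
    return result


def dint_attention_weights(scores_1, scores_2, lam):
    """Assumed differential-integral attention weights (illustrative only).

    scores_1, scores_2: two sets of raw attention logits of equal shape.
    lam: shared weight for the differential and integral terms (assumption).
    """
    a1 = softmax_rows(scores_1)
    a2 = softmax_rows(scores_2)
    n_rows, n_cols = len(a1), len(a1[0])

    # Assumed global importance of each key: the mean attention it
    # receives across all query rows. These scores sum to 1.
    g = [sum(a1[i][j] for i in range(n_rows)) / n_rows for j in range(n_cols)]

    # Differential term (a1 - lam * a2) plus integral term (lam * g).
    # Each row sums to 1 - lam + lam = 1.
    return [
        [a1[i][j] - lam * a2[i][j] + lam * g[j] for j in range(n_cols)]
        for i in range(n_rows)
    ]


scores_a = [[0.1, 2.0, -1.0], [0.5, 0.5, 0.5], [3.0, 0.0, 1.0]]
scores_b = [[1.0, 0.0, 0.0], [0.2, -0.3, 0.9], [0.0, 0.0, 2.0]]
weights = dint_attention_weights(scores_a, scores_b, lam=0.3)
row_sums = [sum(row) for row in weights]
```

Under these assumptions, using one parameter for both terms is what keeps every row summing to 1; with the differential term alone, each row would sum to `1 - lam`.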

Argumentative Experience: Reducing Confirmation Bias on Controversial Issues through LLM-Generated Multi-Persona Debates

Dec 10, 2024

Abstract:Large language models (LLMs) are enabling designers to give life to exciting new user experiences for information access. In this work, we present a system that generates LLM personas to debate a topic of interest from different perspectives. How might information seekers use and benefit from such a system? Can centering information access around diverse viewpoints help to mitigate thorny challenges like confirmation bias in which information seekers over-trust search results matching existing beliefs? How do potential biases and hallucinations in LLMs play out alongside human users who are also fallible and possibly biased? Our study exposes participants to multiple viewpoints on controversial issues via a mixed-methods, within-subjects study. We use eye-tracking metrics to quantitatively assess cognitive engagement alongside qualitative feedback. Compared to a baseline search system, we see more creative interactions and diverse information-seeking with our multi-persona debate system, which more effectively reduces user confirmation bias and conviction toward their initial beliefs. Overall, our study contributes to the emerging design space of LLM-based information access systems, specifically investigating the potential of simulated personas to promote greater exposure to information diversity, emulate collective intelligence, and mitigate bias in information seeking.

Can KAN Work? Exploring the Potential of Kolmogorov-Arnold Networks in Computer Vision

Nov 14, 2024

Abstract:Kolmogorov-Arnold Networks (KANs), as a theoretically efficient neural network architecture, have garnered attention for their potential in capturing complex patterns. However, their application in computer vision remains relatively unexplored. This study first analyzes the potential of KAN in computer vision tasks, evaluating the performance of KAN and its convolutional variants in image classification and semantic segmentation. The focus is placed on examining their characteristics across varying data scales and noise levels. Results indicate that while KAN exhibits stronger fitting capabilities, it is highly sensitive to noise, limiting its robustness. To address this challenge, we propose a smoothness regularization method and introduce a Segment Deactivation technique. Both approaches enhance KAN's stability and generalization, demonstrating its potential in handling complex visual data tasks.

Review of Cloud Service Composition for Intelligent Manufacturing

Aug 03, 2024

Abstract:Intelligent manufacturing is a new model that uses advanced technologies such as the Internet of Things, big data, and artificial intelligence to improve the efficiency and quality of manufacturing production. As an important support to promote the transformation and upgrading of the manufacturing industry, cloud service optimization has received the attention of researchers. In recent years, remarkable research results have been achieved in this field. For the sustainability of intelligent manufacturing platforms, in this paper we summarize the process of cloud service optimization for intelligent manufacturing. Further, to address the problems of dispersed optimization indicators and nonuniform/unstandardized definitions in the existing research, 11 optimization indicators that take into account three-party participant subjects are defined from the urgent requirements of the sustainable development of intelligent manufacturing platforms. Next, service optimization algorithms are classified into two categories, heuristic and reinforcement learning. After comparing the two categories, the current key techniques of service optimization are targeted. Finally, research hotspots and future research trends of service optimization are summarized.

TSOM: Small Object Motion Detection Neural Network Inspired by Avian Visual Circuit

Apr 01, 2024

Abstract:Detecting small moving objects in complex backgrounds from an overhead perspective is a highly challenging task for machine vision systems. As an inspiration from nature, the avian visual system is capable of processing motion information in various complex aerial scenes, and its Retina-OT-Rt visual circuit is highly sensitive to capturing the motion information of small objects from high altitudes. However, more needs to be done on small object motion detection algorithms based on the avian visual system. In this paper, we conducted mathematical modeling based on extensive studies of the biological mechanisms of the Retina-OT-Rt visual circuit. Based on this, we proposed a novel tectum small object motion detection neural network (TSOM). The neural network includes the retina, SGC dendritic, SGC Soma, and Rt layers, each layer corresponding to neurons in the visual pathway. The Retina layer is responsible for accurately projecting input content, the SGC dendritic layer perceives and encodes spatial-temporal information, the SGC Soma layer computes complex motion information and extracts small objects, and the Rt layer integrates and decodes motion information from multiple directions to determine the position of small objects. Extensive experiments on pigeon neurophysiological experiments and image sequence data showed that the TSOM is biologically interpretable and effective in extracting reliable small object motion features from complex high-altitude backgrounds.

Using Explainable AI to Cross-Validate Socio-economic Disparities Among Covid-19 Patient Mortality

Feb 16, 2023

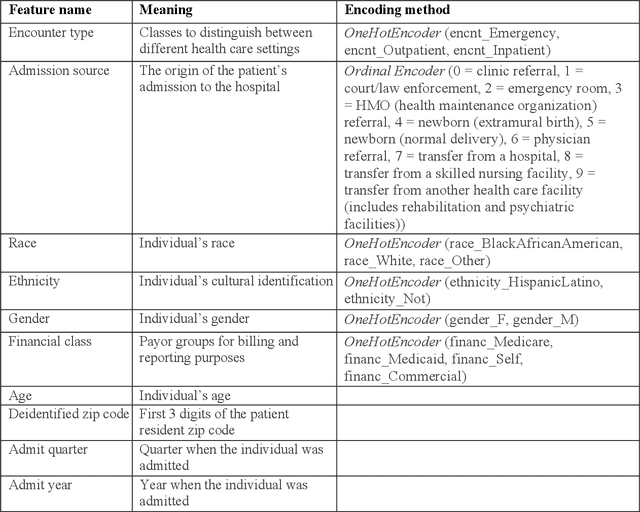

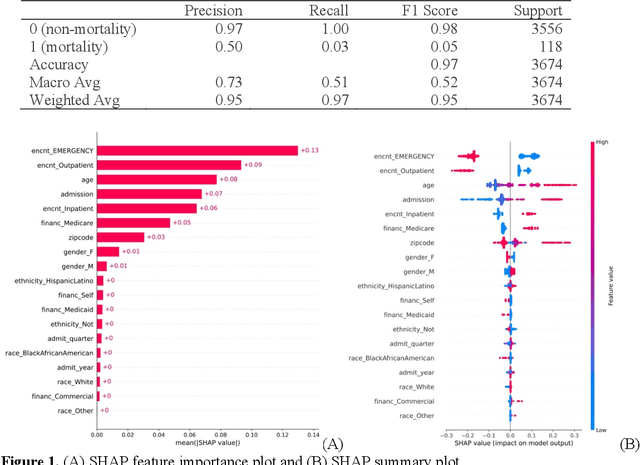

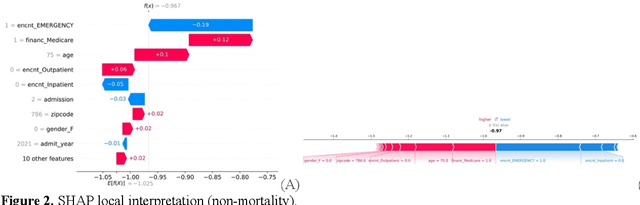

Abstract:This paper applies eXplainable Artificial Intelligence (XAI) methods to investigate the socioeconomic disparities in COVID-19 patient mortality. An Extreme Gradient Boosting (XGBoost) prediction model is built based on a de-identified Austin area hospital dataset to predict the mortality of COVID-19 patients. We apply two XAI methods, Shapley Additive exPlanations (SHAP) and Local Interpretable Model-agnostic Explanations (LIME), to compare the global and local interpretation of feature importance. This paper demonstrates the advantages of using XAI, which shows the feature importance and decisive capability. Furthermore, we use the XAI methods to cross-validate their interpretations for individual patients. The XAI models reveal that Medicare financial class, older age, and gender have a high impact on the mortality prediction. We find that LIME local interpretation does not show significant differences in feature importance compared with SHAP, which suggests pattern confirmation. This paper demonstrates the importance of XAI methods in cross-validation of feature attributions.

Segmentation Network with Compound Loss Function for Hydatidiform Mole Hydrops Lesion Recognition

Apr 11, 2022

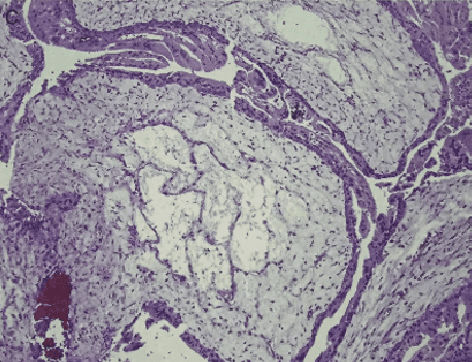

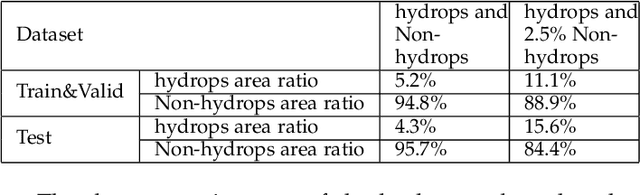

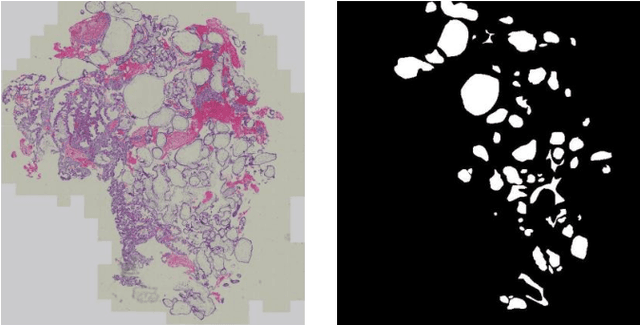

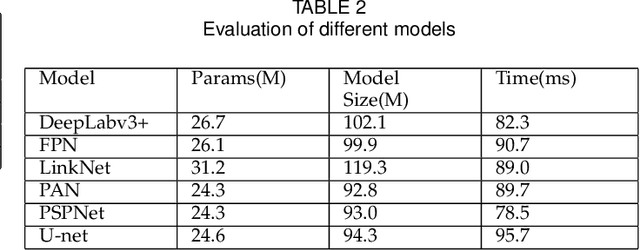

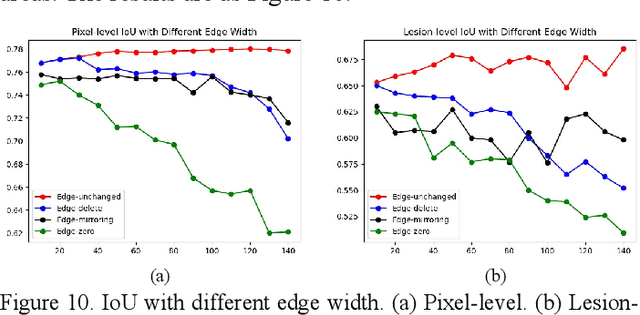

Abstract:Pathological morphology diagnosis is the standard diagnosis method of hydatidiform mole. As a disease with malignant potential, the hydatidiform mole section of hydrops lesions is an important basis for diagnosis. Due to incomplete lesion development, early hydatidiform mole is difficult to distinguish, resulting in a low accuracy of clinical diagnosis. As a remarkable machine learning technology, image semantic segmentation networks have been used in many medical image recognition tasks. We developed a hydatidiform mole hydrops lesion segmentation model based on a novel loss function and training method. The model consists of different networks that segment the section image at the pixel and lesion levels. Our compound loss function assigns weights to the segmentation results of the two levels to calculate the loss. We then propose a stagewise training method to combine the advantages of various loss functions at different levels. We evaluate our method on a hydatidiform mole hydrops dataset. Experiments show that the proposed model with our loss function and training method has good recognition performance under different segmentation metrics.
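
The idea of weighting two segmentation levels in one loss can be sketched as follows. The abstract does not specify the loss terms, so this is only an illustration under assumptions: binary cross-entropy stands in for the pixel-level term, a Dice loss stands in for the lesion-level term, and the names `w_pixel` and `w_lesion` are hypothetical. It is not the authors' loss function.

```python
import math


def pixel_bce(pred, target, eps=1e-7):
    """Mean binary cross-entropy over flattened pixel probabilities."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(pred)


def dice_loss(pred, target, eps=1e-7):
    """Dice loss: 1 minus the overlap ratio between prediction and mask."""
    intersection = sum(p * t for p, t in zip(pred, target))
    denominator = sum(pred) + sum(target)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)


def compound_loss(pred, target, w_pixel=0.5, w_lesion=0.5):
    """Weighted sum of a pixel-level and a region-level term (assumed form)."""
    return w_pixel * pixel_bce(pred, target) + w_lesion * dice_loss(pred, target)


mask = [1, 0, 1, 0]
good_prediction = [0.9, 0.1, 0.8, 0.2]
poor_prediction = [0.2, 0.7, 0.3, 0.6]
good_loss = compound_loss(good_prediction, mask)
poor_loss = compound_loss(poor_prediction, mask)
```

A stagewise schedule in this setting could, for example, change `w_pixel` and `w_lesion` between training stages; the abstract does not say how the stages are defined, so that detail is left open here.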

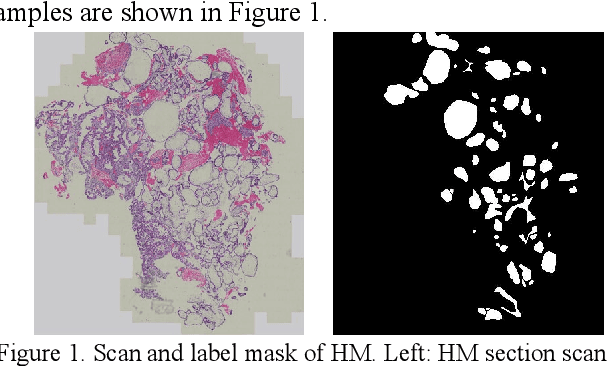

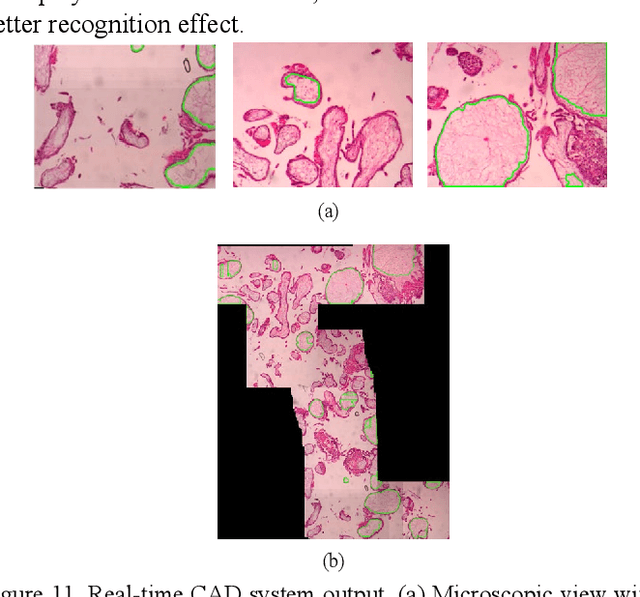

A Semantic Segmentation Network Based Real-Time Computer-Aided Diagnosis System for Hydatidiform Mole Hydrops Lesion Recognition in Microscopic View

Apr 11, 2022

Abstract:As a disease with malignant potential, hydatidiform mole (HM) is one of the most common gestational trophoblastic diseases. For pathologists, the HM section of hydrops lesions is an important basis for diagnosis. In pathology departments, the diverse microscopic manifestations of HM lesions and the limited view under the microscope mean that physicians with extensive diagnostic experience are required to prevent missed diagnosis and misdiagnosis. Feature extraction can significantly improve the accuracy and speed of the diagnostic process. As a remarkable diagnosis assisting technology, computer-aided diagnosis (CAD) has been widely used in clinical practice. We constructed a deep-learning-based CAD system to identify HM hydrops lesions in the microscopic view in real-time. The system consists of three modules; the image mosaic module and edge extension module process the image to improve the outcome of the hydrops lesion recognition module, which adopts a semantic segmentation network, our novel compound loss function, and a stepwise training function in order to achieve the best performance in identifying hydrops lesions. We evaluated our system using an HM hydrops dataset. Experiments show that our system is able to respond in real-time and correctly display the entire microscopic view with accurately labeled HM hydrops lesions.

The Effects of Interactive AI Design on User Behavior: An Eye-tracking Study of Fact-checking COVID-19 Claims

Feb 17, 2022

Abstract:We conducted a lab-based eye-tracking study to investigate how the interactivity of an AI-powered fact-checking system affects user interactions, such as dwell time, attention, and mental resources involved in using the system. A within-subject experiment was conducted, where participants used an interactive and a non-interactive version of a mock AI fact-checking system and rated their perceived correctness of COVID-19 related claims. We collected web-page interactions, eye-tracking data, and mental workload using NASA-TLX. We found that the presence of the affordance of interactively manipulating the AI system's prediction parameters affected users' dwell times, and eye-fixations on AOIs, but not mental workload. In the interactive system, participants spent the most time evaluating claims' correctness, followed by reading news. This promising result shows a positive role of interactivity in a mixed-initiative AI-powered system.
