Redoan Rahman

Analyzing Impact of Socio-Economic Factors on COVID-19 Mortality Prediction Using SHAP Value

Feb 27, 2023

Abstract: This paper applies multiple machine learning (ML) algorithms to a dataset of de-identified COVID-19 patients provided by the COVID-19 Research Database. The dataset consists of 20,878 COVID-positive patients, of whom 9,177 died in 2020. Rather than maximizing prediction accuracy, this paper aims to understand and interpret the association between patients' socio-economic characteristics and their mortality. According to our analysis, a patient's household annual and disposable income, age, education, and employment status significantly impact a machine learning model's prediction. We also examine several individual patient records, which give us insight into how the feature values affect the prediction for each data point. This paper analyzes the global and local interpretation of machine learning models on socio-economic data of COVID patients.

* 10 pages, 10 figures, American Medical Informatics Association (AMIA) Annual Conference 2022, Washington DC, USA, Nov 5-9, 2022

Using Explainable AI to Cross-Validate Socio-economic Disparities Among Covid-19 Patient Mortality

Feb 16, 2023

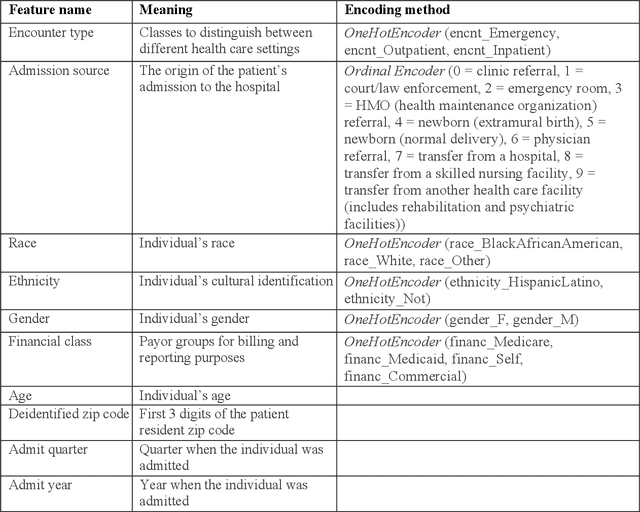

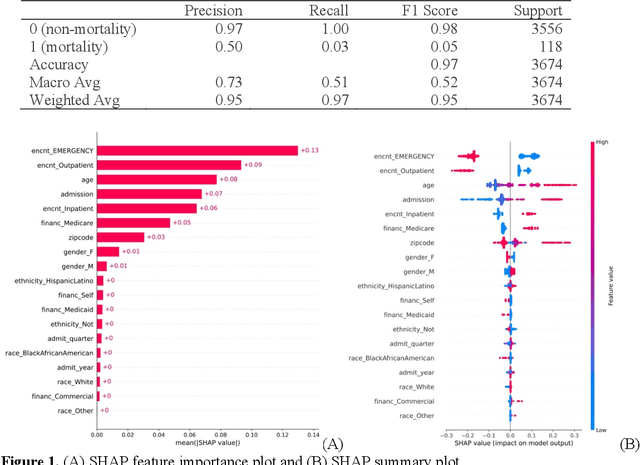

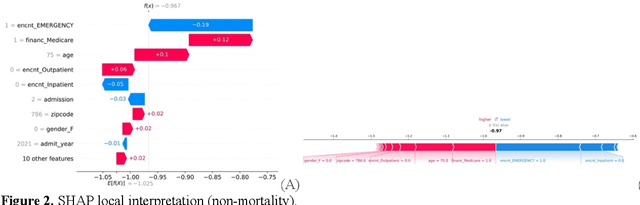

Abstract: This paper applies eXplainable Artificial Intelligence (XAI) methods to investigate the socioeconomic disparities in COVID patient mortality. An Extreme Gradient Boosting (XGBoost) prediction model is built on a de-identified Austin area hospital dataset to predict the mortality of COVID-19 patients. We apply two XAI methods, SHapley Additive exPlanations (SHAP) and Local Interpretable Model-agnostic Explanations (LIME), to compare the global and local interpretation of feature importance. This paper demonstrates the advantages of using XAI, which shows the feature importance and decisive capability. Furthermore, we use the XAI methods to cross-validate their interpretations for individual patients. The XAI models reveal that Medicare financial class, older age, and gender have a high impact on the mortality prediction. We find that LIME's local interpretation does not differ significantly from SHAP's in feature importance, which suggests that the two methods confirm the same pattern. This paper demonstrates the importance of XAI methods in cross-validation of feature attributions.
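The distinction between local and global interpretation can be illustrated with a minimal sketch. This is not the authors' pipeline: instead of XGBoost and the SHAP library (which relies on efficient approximations for tree models), it computes exact Shapley values by brute force for a hypothetical three-feature risk score. The function `toy_model`, its coefficients, the binary feature encoding, and the three example patients are all assumptions made purely for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley attributions for one record x, relative to a baseline record.

    Features outside a coalition are set to their baseline value.
    Cost grows exponentially with the number of features, so this is
    only practical for very small toy examples.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size (excluding feature i)
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                present = set(subset)
                without_i = [x[j] if j in present else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]
                phi[i] += weight * (predict(with_i) - predict(without_i))
    return phi


def toy_model(features):
    """Hypothetical risk score over [age_over_65, medicare, male] (illustrative only)."""
    age, medicare, male = features
    return 0.1 + 0.3 * age + 0.2 * medicare + 0.05 * male + 0.1 * age * medicare


# Hypothetical patients, encoded as [age_over_65, medicare, male]
patients = [[1, 1, 0], [0, 1, 1], [1, 0, 1]]
baseline = [0, 0, 0]

# Local interpretation: one attribution vector per patient
local = [shapley_values(toy_model, p, baseline) for p in patients]

# Global interpretation: mean absolute attribution per feature across patients
global_importance = [
    sum(abs(phi[i]) for phi in local) / len(local) for i in range(3)
]
```

Each entry of `local` explains a single prediction, and its values sum to the difference between that patient's score and the baseline score. `global_importance` aggregates those per-patient attributions into one ranking, which is the usual way a SHAP summary is derived from local explanations.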
