Xianxu Zeng

Segmentation Network with Compound Loss Function for Hydatidiform Mole Hydrops Lesion Recognition

Apr 11, 2022

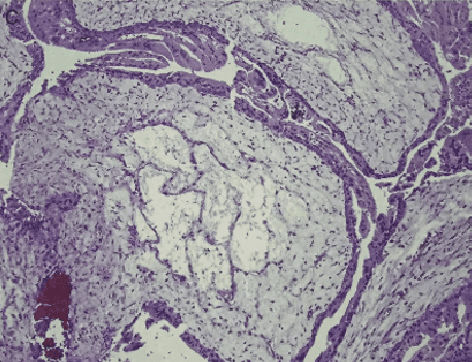

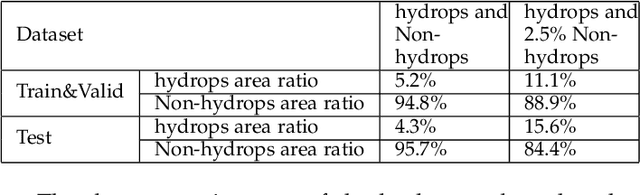

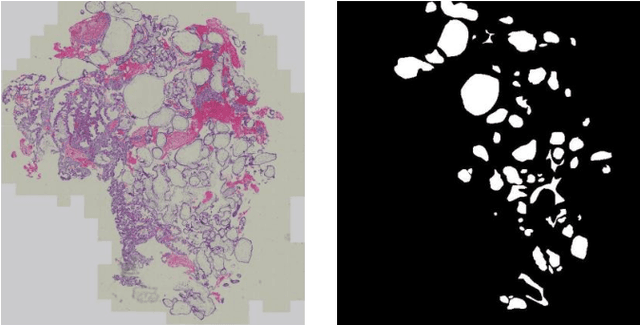

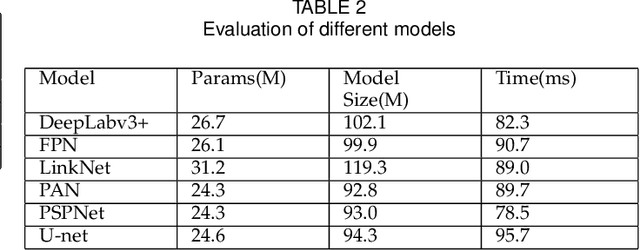

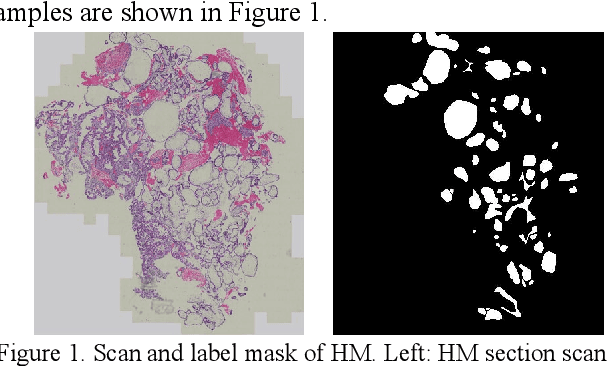

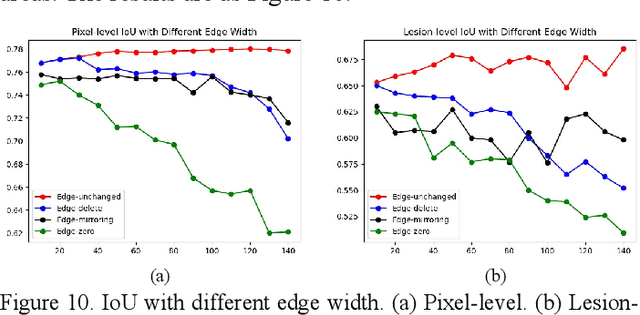

Abstract: Pathological morphology diagnosis is the standard diagnosis method of hydatidiform mole. As a disease with malignant potential, the hydatidiform mole section of hydrops lesions is an important basis for diagnosis. Due to incomplete lesion development, early hydatidiform mole is difficult to distinguish, resulting in a low accuracy of clinical diagnosis. As a remarkable machine learning technology, image semantic segmentation networks have been used in many medical image recognition tasks. We developed a hydatidiform mole hydrops lesion segmentation model based on a novel loss function and training method. The model consists of different networks that segment the section image at the pixel and lesion levels. Our compound loss function assigns weights to the segmentation results of the two levels to calculate the loss. We then propose a stagewise training method to combine the advantages of various loss functions at different levels. We evaluate our method on a hydatidiform mole hydrops dataset. Experiments show that the proposed model with our loss function and training method has good recognition performance under different segmentation metrics.
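The idea of weighting segmentation results from two levels can be illustrated with a minimal sketch. This is not the paper's loss: the soft Dice term, the function names `dice_loss` and `compound_loss`, and the single weight `alpha` are all assumptions chosen for illustration, since the abstract does not specify the actual loss terms or weighting scheme.

```python
def dice_loss(pred, target, eps=1e-6):
    """Soft Dice loss between two flat lists of values in [0, 1]."""
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    return 1.0 - (2.0 * intersection + eps) / (total + eps)


def compound_loss(pixel_pred, lesion_pred, target, alpha=0.5):
    """Weighted sum of a pixel-level and a lesion-level loss term.

    alpha is a hypothetical weight in [0, 1]; the weighting used in the
    paper is not given in the abstract.
    """
    pixel_term = dice_loss(pixel_pred, target)
    lesion_term = dice_loss(lesion_pred, target)
    return alpha * pixel_term + (1.0 - alpha) * lesion_term


if __name__ == "__main__":
    mask = [1, 0, 1, 0]            # flattened ground-truth mask
    pixel_out = [0.9, 0.2, 0.8, 0.1]
    lesion_out = [1.0, 0.0, 1.0, 0.0]
    print(compound_loss(pixel_out, lesion_out, mask, alpha=0.5))
```

In a sketch like this, a larger `alpha` makes the pixel-level output dominate the total loss, while a smaller one emphasizes the lesion-level output.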

A Semantic Segmentation Network Based Real-Time Computer-Aided Diagnosis System for Hydatidiform Mole Hydrops Lesion Recognition in Microscopic View

Apr 11, 2022

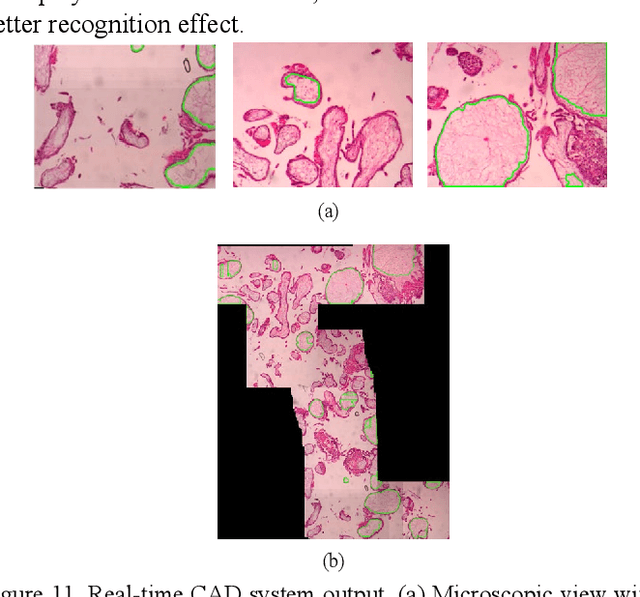

Abstract: As a disease with malignant potential, hydatidiform mole (HM) is one of the most common gestational trophoblastic diseases. For pathologists, the HM section of hydrops lesions is an important basis for diagnosis. In pathology departments, the diverse microscopic manifestations of HM lesions and the limited view under the microscope mean that physicians with extensive diagnostic experience are required to prevent missed diagnosis and misdiagnosis. Feature extraction can significantly improve the accuracy and speed of the diagnostic process. As a remarkable diagnosis assisting technology, computer-aided diagnosis (CAD) has been widely used in clinical practice. We constructed a deep-learning-based CAD system to identify HM hydrops lesions in the microscopic view in real-time. The system consists of three modules; the image mosaic module and edge extension module process the image to improve the outcome of the hydrops lesion recognition module, which adopts a semantic segmentation network, our novel compound loss function, and a stepwise training function in order to achieve the best performance in identifying hydrops lesions. We evaluated our system using an HM hydrops dataset. Experiments show that our system is able to respond in real-time and correctly display the entire microscopic view with accurately labeled HM hydrops lesions.

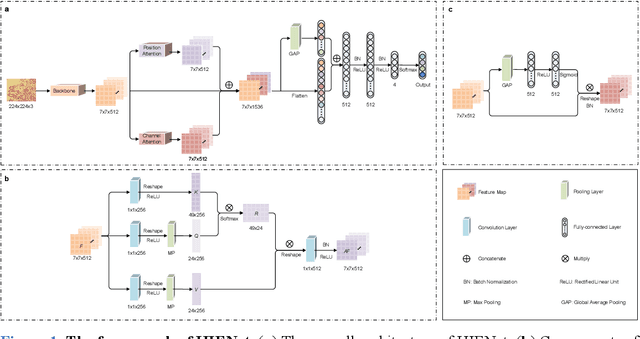

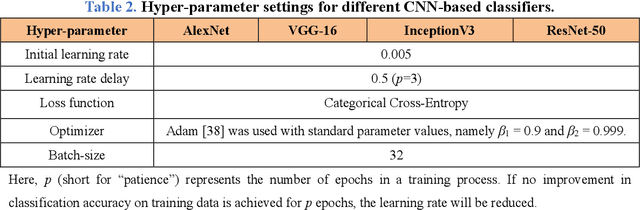

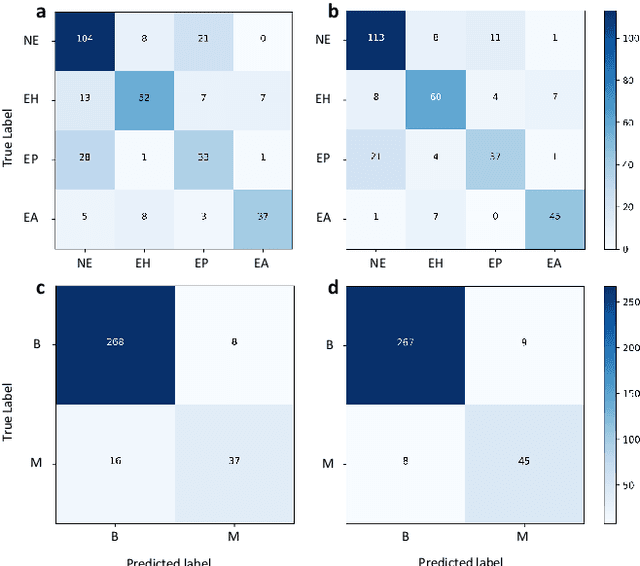

Computer-aided diagnosis in histopathological images of the endometrium using a convolutional neural network and attention mechanisms

Apr 24, 2019

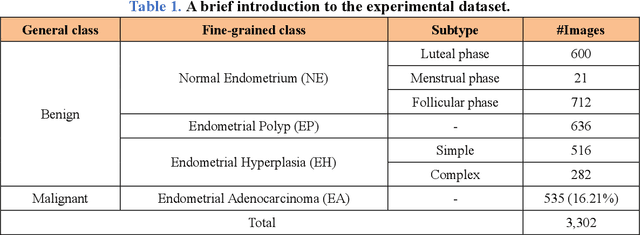

Abstract: Uterine cancer, also known as endometrial cancer, can seriously affect the female reproductive organs, and histopathological image analysis is the gold standard for diagnosing endometrial cancer. However, due to the limited capability of modeling the complicated relationships between histopathological images and their interpretations, these computer-aided diagnosis (CADx) approaches based on traditional machine learning algorithms often failed to achieve satisfying results. In this study, we developed a CADx approach using a convolutional neural network (CNN) and attention mechanisms, called HIENet. Because HIENet used the attention mechanisms and feature map visualization techniques, it can provide pathologists better interpretability of diagnoses by highlighting the histopathological correlations of local (pixel-level) image features to morphological characteristics of endometrial tissue. In the ten-fold cross-validation process, the CADx approach, HIENet, achieved a 76.91 $\pm$ 1.17% (mean $\pm$ s.d.) classification accuracy for four classes of endometrial tissue, namely normal endometrium, endometrial polyp, endometrial hyperplasia, and endometrial adenocarcinoma. Also, HIENet achieved an area-under-the-curve (AUC) of 0.9579 $\pm$ 0.0103 with an 81.04 $\pm$ 3.87% sensitivity and 94.78 $\pm$ 0.87% specificity in a binary classification task that detected endometrioid adenocarcinoma (Malignant). Besides, in the external validation process, HIENet achieved an 84.50% accuracy in the four-class classification task, and it achieved an AUC of 0.9829 with a 77.97% (95% CI, 65.27%-87.71%) sensitivity and 100% (95% CI, 97.42%-100.00%) specificity. In summary, the proposed CADx approach, HIENet, outperformed three human experts and four end-to-end CNN-based classifiers on this small-scale dataset composed of 3,500 hematoxylin and eosin (H&E) images regarding overall classification performance.

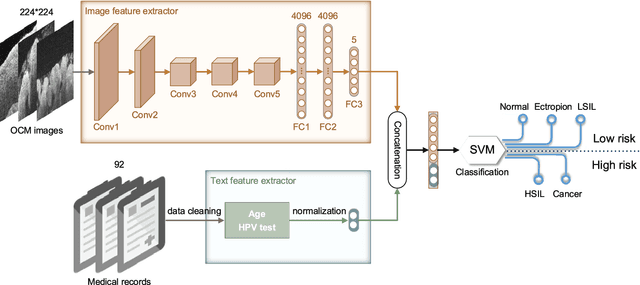

Computer-Aided Diagnosis of Label-Free 3-D Optical Coherence Microscopy Images of Human Cervical Tissue

Sep 17, 2018

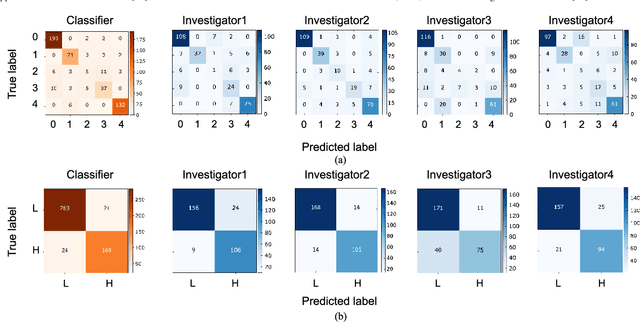

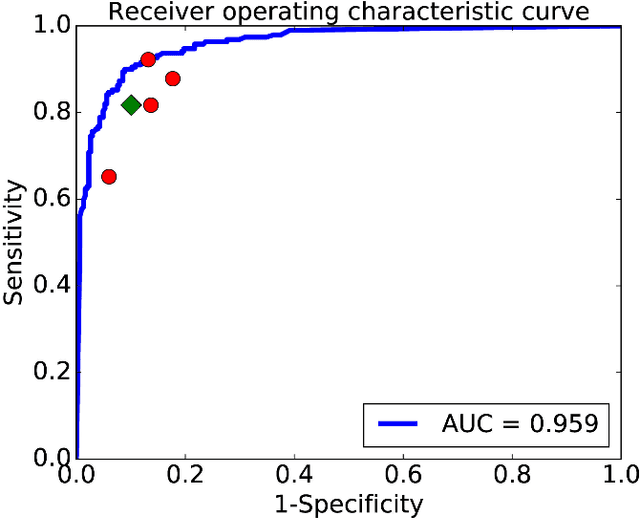

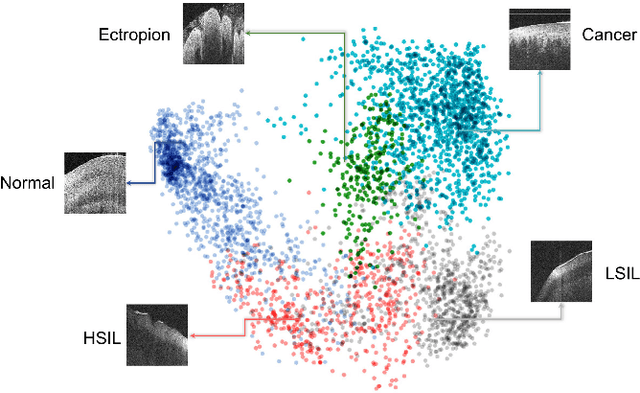

Abstract: Objective: Ultrahigh-resolution optical coherence microscopy (OCM) has recently demonstrated its potential for accurate diagnosis of human cervical diseases. One major challenge for clinical adoption, however, is the steep learning curve clinicians need to overcome to interpret OCM images. Developing an intelligent technique for computer-aided diagnosis (CADx) to accurately interpret OCM images will facilitate clinical adoption of the technology and improve patient care. Methods: 497 high-resolution 3-D OCM volumes (600 cross-sectional images each) were collected from 159 ex vivo specimens of 92 female patients. OCM image features were extracted using a convolutional neural network (CNN) model, concatenated with patient information (e.g., age, HPV results), and classified using a support vector machine classifier. Ten-fold cross-validations were utilized to test the performance of the CADx method in a five-class classification task and a binary classification task. Results: An 88.3 $\pm$ 4.9% classification accuracy was achieved for five fine-grained classes of cervical tissue, namely normal, ectropion, low-grade and high-grade squamous intraepithelial lesions (LSIL and HSIL), and cancer. In the binary classification task (low-risk [normal, ectropion and LSIL] vs. high-risk [HSIL and cancer]), the CADx method achieved an area-under-the-curve (AUC) value of 0.959 with an 86.7 $\pm$ 11.4% sensitivity and 93.5 $\pm$ 3.8% specificity. Conclusion: The proposed deep-learning-based CADx method outperformed three human experts. It was also able to identify morphological characteristics in OCM images that were consistent with histopathological interpretations. Significance: Label-free OCM imaging, combined with deep-learning-based CADx methods, holds great promise for use in clinical settings for the effective screening and diagnosis of cervical diseases.
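For readers unfamiliar with the binary metrics reported above, the following minimal sketch shows how sensitivity and specificity could be computed after collapsing the five tissue classes into the low-risk and high-risk groups named in the abstract. The function names and the example labels are hypothetical; this is not the authors' evaluation code.

```python
# Grouping taken from the abstract: HSIL and cancer are high-risk;
# normal, ectropion, and LSIL are low-risk.
HIGH_RISK = {"HSIL", "cancer"}


def to_binary(label):
    """Map a five-class tissue label to 1 (high-risk) or 0 (low-risk)."""
    return 1 if label in HIGH_RISK else 0


def sensitivity_specificity(true_labels, predicted_labels):
    """Return (sensitivity, specificity) for the high-risk vs. low-risk task."""
    pairs = [(to_binary(t), to_binary(p))
             for t, p in zip(true_labels, predicted_labels)]
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    # Guard against an empty positive or negative group.
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


if __name__ == "__main__":
    # Made-up labels for illustration only.
    truth = ["normal", "ectropion", "LSIL", "HSIL", "cancer", "HSIL"]
    preds = ["normal", "LSIL", "HSIL", "HSIL", "cancer", "normal"]
    print(sensitivity_specificity(truth, preds))
```

Note that a prediction of LSIL for an ectropion sample counts as correct in the binary task, because both labels fall in the low-risk group.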
