Kevin Rösch

PITA: Physics-Informed Trajectory Autoencoder

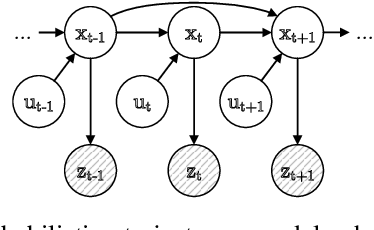

Mar 18, 2024

Abstract:Validating robotic systems in safety-critical applications requires testing in many scenarios, including rare edge cases that are unlikely to occur, so real-world testing must be complemented with testing in simulation. Generative models can be used to augment real-world datasets with generated data to produce edge case scenarios by sampling in a learned latent space. Autoencoders can learn said latent representation for a specific domain by learning to reconstruct the input data from a lower-dimensional intermediate representation. However, the resulting trajectories are not necessarily physically plausible, but instead typically contain noise that is not present in the input trajectory. To resolve this issue, we propose the novel Physics-Informed Trajectory Autoencoder (PITA) architecture, which incorporates a physical dynamics model into the loss function of the autoencoder. This results in smooth trajectories that not only reconstruct the input trajectory but also adhere to the physical model. We evaluate PITA on a real-world dataset of vehicle trajectories and compare its performance to a normal autoencoder and a state-of-the-art action-space autoencoder.
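As a rough illustration of what adding a dynamics term to an autoencoder loss can look like, the sketch below combines a reconstruction error with a penalty on the mismatch between the finite-difference derivative of the reconstructed positions and the reconstructed velocities. The abstract does not state which dynamics model PITA uses, so the point-mass relation here, the function name `physics_informed_loss`, and the `weight` parameter are all assumptions made for this example, not the paper's formulation.

```python
import numpy as np

def physics_informed_loss(x_true, x_rec, v_rec, dt, weight=1.0):
    """Reconstruction loss plus a physics residual (illustrative only).

    x_true, x_rec: (T, 2) arrays of true and reconstructed positions.
    v_rec:         (T, 2) array of reconstructed velocities.
    dt:            time step between samples.
    weight:        hypothetical trade-off factor between the two terms.
    """
    # Standard autoencoder term: mean squared reconstruction error.
    reconstruction = np.mean((x_rec - x_true) ** 2)

    # Assumed point-mass model: dx/dt should equal the velocity.
    # Forward finite differences approximate dx/dt on the first T-1 steps.
    dx_dt = (x_rec[1:] - x_rec[:-1]) / dt
    physics_residual = np.mean((dx_dt - v_rec[:-1]) ** 2)

    return reconstruction + weight * physics_residual
```

Under this assumed model, a reconstruction that is both accurate and consistent with its own velocities yields a loss near zero, while jitter in the reconstructed positions raises both terms.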

LDFA: Latent Diffusion Face Anonymization for Self-driving Applications

Feb 17, 2023

Abstract:In order to protect vulnerable road users (VRUs), such as pedestrians or cyclists, it is essential that intelligent transportation systems (ITS) accurately identify them. Therefore, datasets used to train perception models of ITS must contain a significant number of vulnerable road users. However, data protection regulations require that individuals are anonymized in such datasets. In this work, we introduce a novel deep learning-based pipeline for face anonymization in the context of ITS. In contrast to related methods, we do not use generative adversarial networks (GANs) but build upon recent advances in diffusion models. We propose a two-stage method, which contains a face detection model followed by a latent diffusion model to generate realistic face in-paintings. To demonstrate the versatility of anonymized images, we train segmentation methods on anonymized data and evaluate them on non-anonymized data. Our experiments reveal that our pipeline is better suited to anonymize data for segmentation than naive methods and performs comparably with recent GAN-based methods. Moreover, face detectors achieve higher mAP scores for faces anonymized by our method compared to naive or recent GAN-based methods.

Space, Time, and Interaction: A Taxonomy of Corner Cases in Trajectory Datasets for Automated Driving

Oct 17, 2022

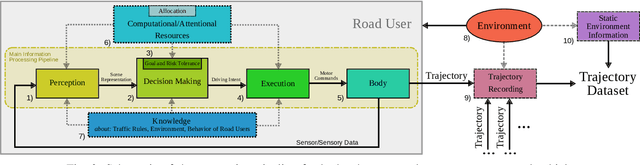

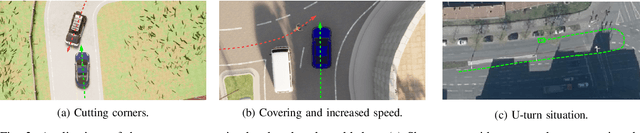

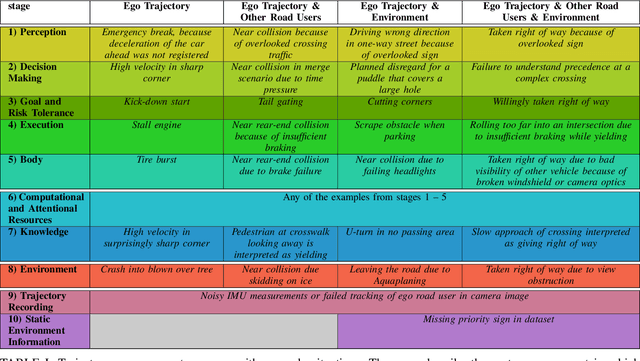

Abstract:Trajectory data analysis is an essential component for highly automated driving. Complex models developed with these data predict other road users' movement and behavior patterns. Based on these predictions - and additional contextual information such as the course of the road, (traffic) rules, and interaction with other road users - the highly automated vehicle (HAV) must be able to reliably and safely perform the task assigned to it, e.g., moving from point A to B. Ideally, the HAV moves safely through its environment, just as we would expect a human driver to do. However, if unusual trajectories occur, so-called trajectory corner cases, a human driver can usually cope well, but an HAV can quickly get into trouble. In the definition of trajectory corner cases, which we provide in this work, we will consider the relevance of unusual trajectories with respect to the task at hand. Based on this, we will also present a taxonomy of different trajectory corner cases. The categorization of corner cases into the taxonomy will be shown with examples and is done by cause and required data sources. To illustrate the complexity between the machine learning (ML) model and the corner case cause, we present a general processing chain underlying the taxonomy.

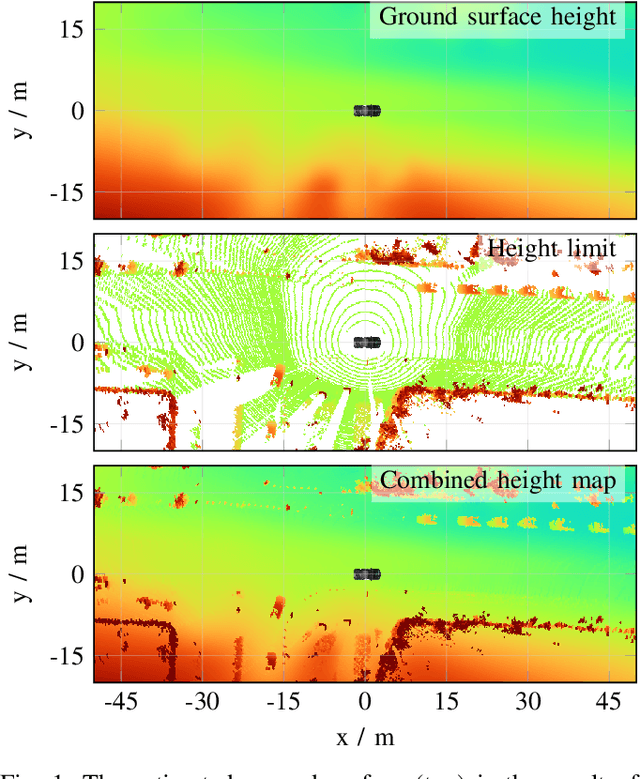

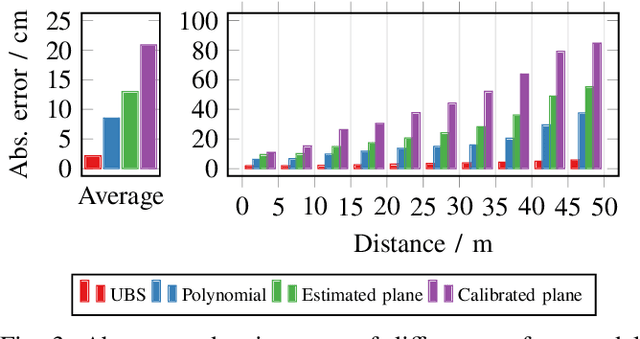

Fast and Robust Ground Surface Estimation from LIDAR Measurements using Uniform B-Splines

Mar 02, 2022

Abstract:We propose a fast and robust method to estimate the ground surface from LIDAR measurements on an automated vehicle. The ground surface is modeled as a uniform B-spline (UBS), which is robust towards varying measurement densities and has a single parameter controlling the smoothness prior. We model the estimation process as a robust least-squares (LS) optimization problem, which can be reformulated as a linear problem and thus solved efficiently. Using the SemanticKITTI data set, we conduct a quantitative evaluation by classifying the point-wise semantic annotations into ground and non-ground points. Finally, we validate the approach on our research vehicle in real-world scenarios.
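To make the idea of a smoothness-regularized B-spline fit solved as a linear problem more concrete, here is a minimal one-dimensional sketch: a uniform cubic B-spline height profile is fitted to measurements by solving a single linear system, with one parameter weighting a second-difference penalty on the control points. This is a simplification under stated assumptions. The 1-D setting, the plain (non-robust) least-squares cost, the second-difference prior, and the names `basis_matrix`, `fit_ground`, and `smoothness` are all chosen for this example and are not taken from the paper.

```python
import numpy as np

def basis_matrix(x, x0, h, n_ctrl):
    """Design matrix of a uniform cubic B-spline with knot spacing h.

    The spline covers [x0, x0 + (n_ctrl - 3) * h]; row k holds the four
    non-zero basis weights for measurement position x[k].
    """
    s = (np.asarray(x, dtype=float) - x0) / h
    i = np.clip(np.floor(s).astype(int), 0, n_ctrl - 4)  # segment index
    u = s - i                                            # local coordinate
    w = np.stack([(1 - u) ** 3,
                  3 * u**3 - 6 * u**2 + 4,
                  -3 * u**3 + 3 * u**2 + 3 * u + 1,
                  u**3], axis=1) / 6.0
    A = np.zeros((len(s), n_ctrl))
    rows = np.arange(len(s))
    for j in range(4):
        A[rows, i + j] = w[:, j]
    return A

def fit_ground(x, z, x0, h, n_ctrl, smoothness):
    """Solve (A^T A + smoothness * D^T D) c = A^T z for control points c."""
    A = basis_matrix(x, x0, h, n_ctrl)
    D = np.diff(np.eye(n_ctrl), n=2, axis=0)  # second-difference operator
    lhs = A.T @ A + smoothness * (D.T @ D)
    return np.linalg.solve(lhs, A.T @ np.asarray(z, dtype=float))
```

Evaluating the fitted profile at new positions is then `basis_matrix(x_new, x0, h, n_ctrl) @ c`. A robust variant would reweight the residuals, which this sketch deliberately leaves out.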

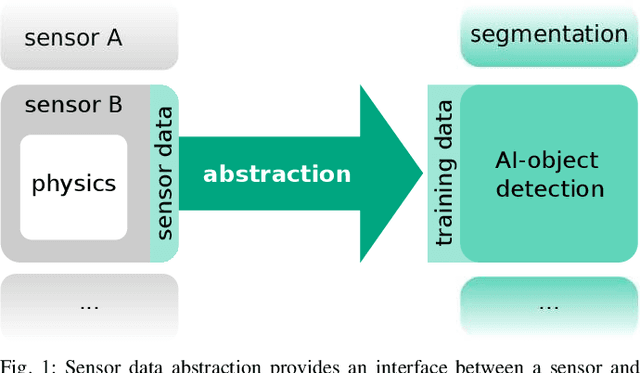

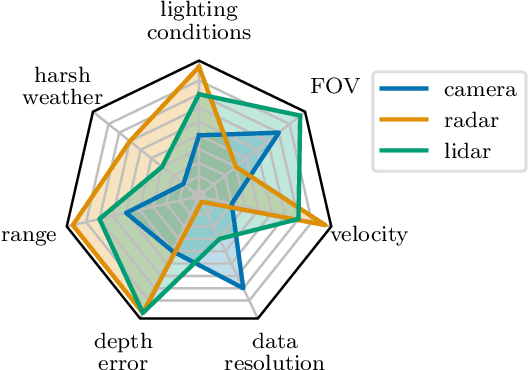

Towards Sensor Data Abstraction of Autonomous Vehicle Perception Systems

May 14, 2021

Abstract:Full-stack autonomous driving perception modules usually consist of data-driven models based on multiple sensor modalities. However, these models might be biased to the sensor setup used for data acquisition. This bias can seriously impair the perception models' transferability to new sensor setups, which continuously occur due to the market's competitive nature. We envision sensor data abstraction as an interface between sensor data and machine learning applications for highly automated driving (HAD). For this purpose, we review the primary sensor modalities, camera, lidar, and radar, published in autonomous-driving related datasets, examine single sensor abstraction and abstraction of sensor setups, and identify critical paths towards an abstraction of sensor data from multiple perception configurations.

An Application-Driven Conceptualization of Corner Cases for Perception in Highly Automated Driving

Mar 05, 2021

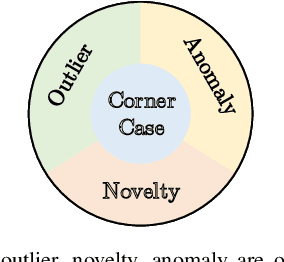

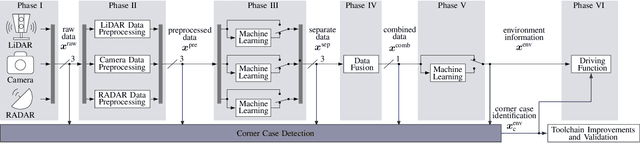

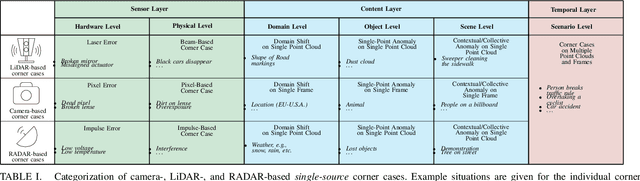

Abstract:Systems and functions that rely on machine learning (ML) are the basis of highly automated driving. An essential task of such ML models is to reliably detect and interpret unusual, new, and potentially dangerous situations. The detection of those situations, which we refer to as corner cases, is highly relevant for successfully developing, applying, and validating automotive perception functions in future vehicles where multiple sensor modalities will be used. A complication for the development of corner case detectors is the lack of consistent definitions, terms, and corner case descriptions, especially when taking into account various automotive sensors. In this work, we provide an application-driven view of corner cases in highly automated driving. To achieve this goal, we first consider existing definitions from the general outlier, novelty, anomaly, and out-of-distribution detection to show relations and differences to corner cases. Moreover, we extend an existing camera-focused systematization of corner cases by adding RADAR (radio detection and ranging) and LiDAR (light detection and ranging) sensors. For this, we describe an exemplary toolchain for data acquisition and processing, highlighting the interfaces of the corner case detection. We also define a novel level of corner cases, the method layer corner cases, which appear due to uncertainty inherent in the methodology or the data distribution.
