Lukas Lang

Towards Sensor Data Abstraction of Autonomous Vehicle Perception Systems

May 14, 2021

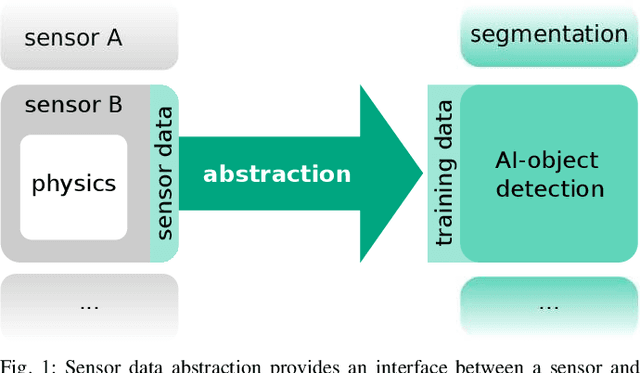

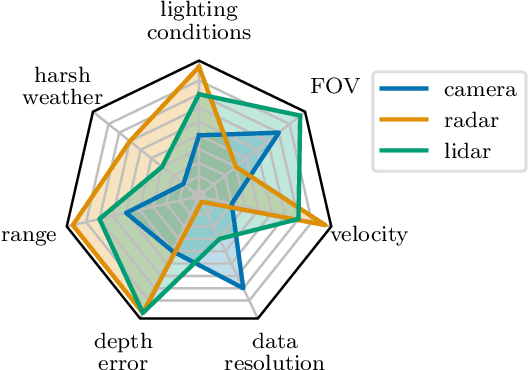

Abstract: Full-stack autonomous driving perception modules usually consist of data-driven models based on multiple sensor modalities. However, these models might be biased toward the sensor setup used for data acquisition. This bias can seriously impair the perception models' transferability to new sensor setups, which appear continually due to the competitive nature of the market. We envision sensor data abstraction as an interface between sensor data and machine learning applications for highly automated driving (HAD). For this purpose, we review the primary sensor modalities (camera, lidar, and radar) used in published autonomous-driving datasets, examine the abstraction of single sensors and of sensor setups, and identify critical paths towards an abstraction of sensor data from multiple perception configurations.

Prediction of progressive lens performance from neural network simulations

Mar 19, 2021

Abstract: Purpose: The purpose of this study is to present a framework that predicts visual acuity (VA) with a convolutional neural network (CNN) and, further, to compare progressive addition lens (PAL) designs. Method: A simple CNN with two hidden layers was trained to classify the gap orientations of Landolt Cs, combining the feature extraction abilities of a CNN with psychophysical staircase methods. The simulation was validated by how well it predicted clinical VA under induced spherical defocus (between -1.5 D and +1.5 D in 0.5 D steps) in 39 subjectively measured eyes. Afterwards, a presbyopic eye corrected by either a generic hard or soft PAL design (addition power: 2.5 D) was simulated, including lower- and higher-order aberrations. Result: The validation revealed a consistent offset of +0.20 logMAR ± 0.035 logMAR from the simulated VA. Bland-Altman analysis of the offset-corrected results showed limits of agreement (±1.96 SD) of -0.08 logMAR and +0.07 logMAR, which is comparable to the clinical repeatability of VA assessment. Applying the simulation to PALs confirmed a larger far zone for the generic hard design but revealed no differences in zone width for the intermediate or near zones. Furthermore, a horizontal area of better VA was found in the middle of the PAL, which underlines the importance of realistic performance simulations that use object-based aberrations and physiological performance measures such as VA. Conclusion: The proposed holistic simulation tool was shown to act as an accurate model of subjective visual performance. Further, its application to PALs indicated its potential as an effective method for comparing the visual performance of different optical designs. Moreover, the simulation provides a basis for incorporating neural aspects of visual perception and thus for simulating VA including neural processing in the future.
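The Bland-Altman analysis in the Result section can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code. The function name `bland_altman`, the sign convention (measured minus simulated logMAR), the use of the sample standard deviation, and the four data pairs are all assumptions chosen for demonstration.

```python
import statistics


def bland_altman(measured, simulated, z=1.96):
    """Bland-Altman agreement between paired VA values (logMAR).

    Assumption: differences are taken as measured - simulated.
    Returns (bias, lower_limit, upper_limit), where bias is the mean
    difference and the limits are bias -/+ z * SD of the differences.
    """
    if len(measured) != len(simulated):
        raise ValueError("measured and simulated must be paired")
    if len(measured) < 2:
        raise ValueError("at least two pairs are needed for an SD")
    diffs = [m - s for m, s in zip(measured, simulated)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    return bias, bias - z * sd, bias + z * sd


# Hypothetical illustrative values, NOT the study's data.
measured_va = [0.30, 0.12, 0.18, 0.05]
simulated_va = [0.10, -0.10, 0.00, -0.15]

bias, lower, upper = bland_altman(measured_va, simulated_va)

# Offset correction: shift the simulated values by the estimated bias.
# Afterwards the mean difference is ~0 while the spread is unchanged.
corrected_va = [s + bias for s in simulated_va]
bias_c, lower_c, upper_c = bland_altman(measured_va, corrected_va)
print(f"raw bias {bias:+.3f}, corrected LoA [{lower_c:+.3f}, {upper_c:+.3f}]")
```

A constant offset only shifts every difference by the same amount. It therefore moves the bias but leaves the width of the limits of agreement unchanged, which is why an offset can be corrected before the agreement analysis.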
