Siegfried Wahl

Focal Depth Estimation: A Calibration-Free, Subject- and Daytime Invariant Approach

Aug 07, 2024

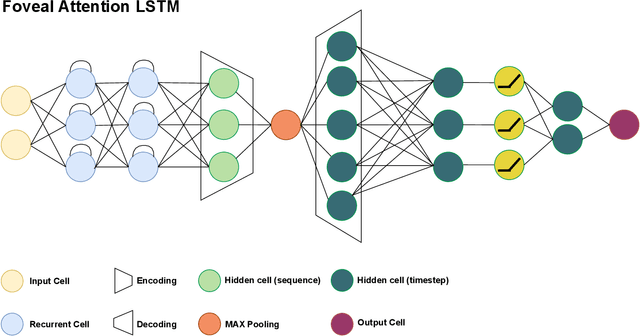

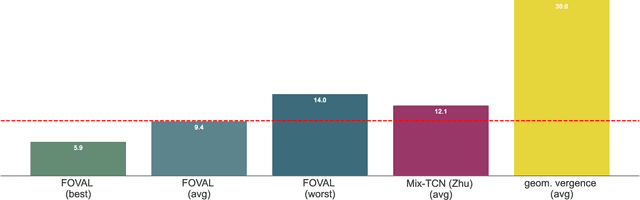

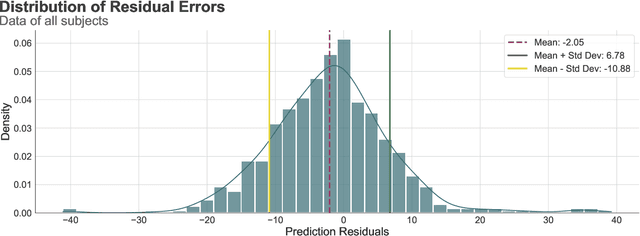

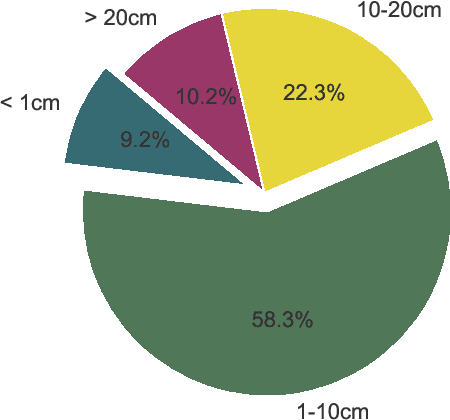

Abstract: In an era where personalized technology is increasingly intertwined with daily life, traditional eye-tracking systems and autofocal glasses face a significant challenge: the need for frequent, user-specific calibration, which impedes their practicality. This study introduces a groundbreaking calibration-free method for estimating focal depth, leveraging machine learning techniques to analyze eye movement features within short sequences. Our approach, distinguished by its innovative use of LSTM networks and domain-specific feature engineering, achieves a mean absolute error (MAE) of less than 10 cm, setting a new standard for focal depth estimation accuracy. This advancement promises to enhance the usability of autofocal glasses and pave the way for their seamless integration into extended reality environments, marking a significant leap forward in personalized visual technology.

VisionaryVR: An Optical Simulation Tool for Evaluating and Optimizing Vision Correction Solutions in Virtual Reality

Dec 01, 2023

Abstract: Developing and evaluating vision science methods require robust and efficient tools for assessing their performance in various real-world scenarios. This study presents a novel virtual reality (VR) simulation tool that simulates real-world optical methods while giving the experimenter a high degree of experimental control. The tool incorporates an experiment controller that handles multiple conditions smoothly and easily; a generic eye-tracking controller that works with most common VR eye-trackers; a configurable defocus simulator; and a generic VR questionnaire loader to assess participants' behavior in virtual reality. This VR-based simulation tool bridges the gap between theoretical and applied research on new optical methods, corrections, and therapies. It enables vision scientists to extend their research toolkit with a robust, realistic, and fast research environment.

Prediction of progressive lens performance from neural network simulations

Mar 19, 2021

Abstract: Purpose: The purpose of this study is to present a framework to predict visual acuity (VA) based on a convolutional neural network (CNN) and, further, to compare progressive addition lens (PAL) designs. Method: A simple CNN with two hidden layers was trained to classify the gap orientations of Landolt Cs by combining the feature extraction abilities of a CNN with psychophysical staircase methods. The simulation was validated regarding its predictability of clinical VA from induced spherical defocus (between +/-1.5 D, step size: 0.5 D) from 39 subjectively measured eyes. Afterwards, a simulation for a presbyopic eye corrected by either a generic hard or a soft PAL design (addition power: 2.5 D) was performed, including lower and higher order aberrations. Result: The validation revealed a consistent offset of +0.20 logMAR +/-0.035 logMAR from the simulated VA. Bland-Altman analysis of the offset-corrected results showed limits of agreement (+/-1.96 SD) of -0.08 logMAR and +0.07 logMAR, which is comparable to the clinical repeatability of VA assessment. The application of the simulation to PALs confirmed a larger far zone for the generic hard design but did not reveal zone width differences for the intermediate or near zone. Furthermore, a horizontal area of better VA in the middle of the PAL was found, which confirms the importance of realistic performance simulations using object-based aberration and physiological performance measures such as VA. Conclusion: The proposed holistic simulation tool was shown to act as an accurate model for subjective visual performance. Further, the simulation's application to PALs indicated its potential as an effective method to compare the visual performance of different optical designs. Moreover, the simulation provides the basis to incorporate neural aspects of visual perception and thus simulate the VA including neural processing in the future.
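
The limits of agreement quoted in this abstract come from a Bland-Altman analysis, which summarizes the paired differences between two measurement methods as a mean bias plus or minus 1.96 standard deviations. The following is a minimal sketch of that calculation, not the authors' code: the function name `bland_altman_limits` and the logMAR values are made up for illustration, and the sample standard deviation is assumed.

```python
from statistics import mean, stdev


def bland_altman_limits(method_a, method_b, z=1.96):
    """Return (bias, lower, upper) for paired measurements.

    bias is the mean of the paired differences (a - b); the limits of
    agreement are bias -/+ z times the sample SD of those differences.
    """
    if len(method_a) != len(method_b):
        raise ValueError("both methods need the same number of measurements")
    diffs = [a - b for a, b in zip(method_a, method_b)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    return bias, bias - z * sd, bias + z * sd


# Hypothetical logMAR values, NOT data from the paper.
clinical_va = [0.10, 0.22, 0.35, 0.48, 0.05]
simulated_va = [-0.08, 0.00, 0.16, 0.27, -0.17]

bias, lower, upper = bland_altman_limits(clinical_va, simulated_va)
print(f"bias={bias:+.3f} logMAR, limits=({lower:+.3f}, {upper:+.3f})")
```

Subtracting a constant offset from one method before the analysis shifts the bias and both limits by that constant but leaves the width of the interval unchanged.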
