Björn Severitt

Focal Depth Estimation: A Calibration-Free, Subject- and Daytime Invariant Approach

Aug 07, 2024

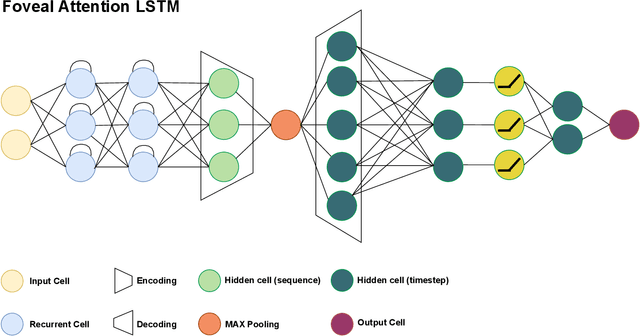

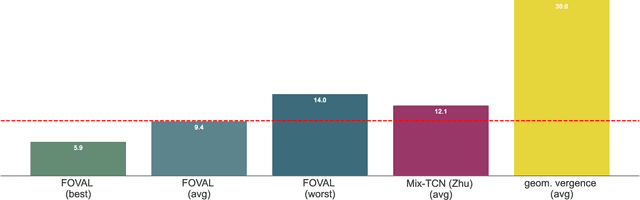

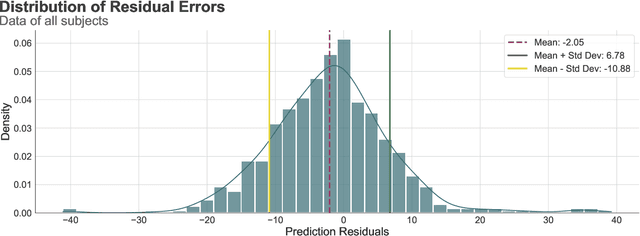

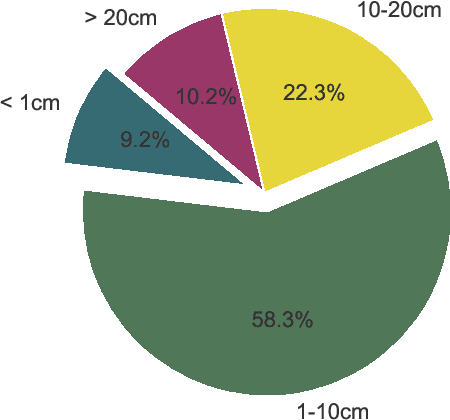

Abstract:In an era where personalized technology is increasingly intertwined with daily life, traditional eye-tracking systems and autofocal glasses face a significant challenge: the need for frequent, user-specific calibration, which impedes their practicality. This study introduces a groundbreaking calibration-free method for estimating focal depth, leveraging machine learning techniques to analyze eye movement features within short sequences. Our approach, distinguished by its innovative use of LSTM networks and domain-specific feature engineering, achieves a mean absolute error (MAE) of less than 10 cm, setting a new focal depth estimation accuracy standard. This advancement promises to enhance the usability of autofocal glasses and pave the way for their seamless integration into extended reality environments, marking a significant leap forward in personalized visual technology.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge