J. Marius Zöllner

Efficient Cross-Country Data Acquisition Strategy for ADAS via Street-View Imagery

Feb 02, 2026

Abstract:Deploying ADAS and ADS across countries remains challenging due to differences in legislation, traffic infrastructure, and visual conventions, which introduce domain shifts that degrade perception performance. Traditional cross-country data collection relies on extensive on-road driving, making it costly and inefficient to identify representative locations. To address this, we propose a street-view-guided data acquisition strategy that leverages publicly available imagery to identify places of interest (POI). Two POI scoring methods are introduced: a KNN-based feature distance approach using a vision foundation model, and a visual-attribution approach using a vision-language model. To enable repeatable evaluation, we adopt a collect-detect protocol and construct a co-located dataset by pairing the Zenseact Open Dataset with Mapillary street-view images. Experiments on traffic sign detection, a task particularly sensitive to cross-country variations in sign appearance, show that our approach achieves performance comparable to random sampling while using only half of the target-domain data. We further provide cost estimations for full-country analysis, demonstrating that large-scale street-view processing remains economically feasible. These results highlight the potential of street-view-guided data acquisition for efficient and cost-effective cross-country model adaptation.
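The KNN-based feature distance scoring mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not give the scoring details, so several things here are assumptions: that a place is scored by its mean Euclidean distance to its k nearest source-domain features, that larger distances mark more interesting places, and that toy 2-D tuples can stand in for foundation-model embeddings. The names `knn_novelty_score` and `rank_places` are hypothetical.

```python
import math


def knn_novelty_score(candidate, source_features, k=3):
    """Mean Euclidean distance from `candidate` to its k nearest source features."""
    distances = sorted(math.dist(candidate, s) for s in source_features)
    nearest = distances[:k]
    return sum(nearest) / len(nearest)


def rank_places(candidates, source_features, k=3):
    """Return candidate ids ordered from largest to smallest KNN distance."""
    scores = {
        place_id: knn_novelty_score(feature, source_features, k)
        for place_id, feature in candidates.items()
    }
    return sorted(scores, key=scores.get, reverse=True)


# Toy 2-D embeddings standing in for foundation-model features (made-up values).
source_features = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1)]
candidates = {"poi_a": (0.05, 0.05), "poi_b": (1.0, 1.0), "poi_c": (0.5, 0.5)}

ranking = rank_places(candidates, source_features, k=2)
print(ranking)
```

Under these assumptions, the places whose street-view features lie farthest from the source-domain data would be visited first.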

DenseBEV: Transforming BEV Grid Cells into 3D Objects

Dec 18, 2025

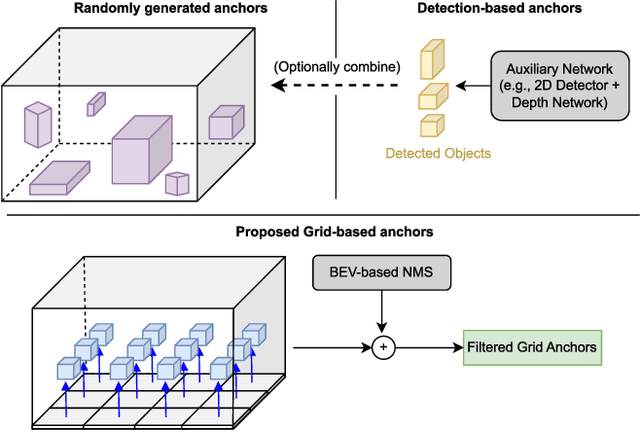

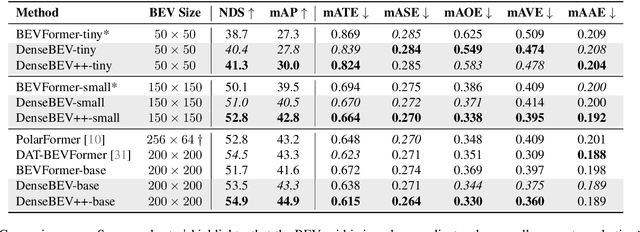

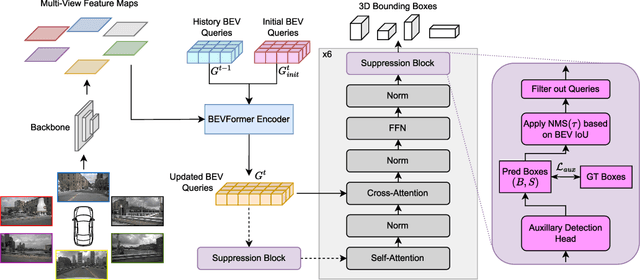

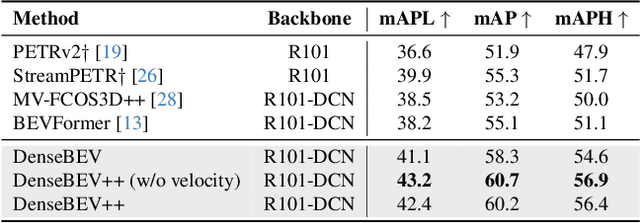

Abstract:In current research, Bird's-Eye-View (BEV)-based transformers are increasingly utilized for multi-camera 3D object detection. Traditional models often employ random queries as anchors, optimizing them successively. Recent advancements complement or replace these random queries with detections from auxiliary networks. We propose a more intuitive and efficient approach by using BEV feature cells directly as anchors. This end-to-end approach leverages the dense grid of BEV queries, considering each cell as a potential object for the final detection task. As a result, we introduce a novel two-stage anchor generation method specifically designed for multi-camera 3D object detection. To address the scaling issues of attention with a large number of queries, we apply BEV-based Non-Maximum Suppression, allowing gradients to flow only through non-suppressed objects. This ensures efficient training without the need for post-processing. By using BEV features from encoders such as BEVFormer directly as object queries, temporal BEV information is inherently embedded. Building on the temporal BEV information already embedded in our object queries, we introduce a hybrid temporal modeling approach by integrating prior detections to further enhance detection performance. Evaluating our method on the nuScenes dataset shows consistent and significant improvements in NDS and mAP over the baseline, even with sparser BEV grids and therefore fewer initial anchors. It is particularly effective for small objects, enhancing pedestrian detection with a 3.8% mAP increase on nuScenes and an 8% increase in LET-mAP on Waymo. Applying our method, named DenseBEV, to the challenging Waymo Open dataset yields state-of-the-art performance, achieving a LET-mAP of 60.7%, surpassing the previous best by 5.4%. Code is available at https://github.com/mdaehl/DenseBEV.
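As a rough illustration of suppressing redundant BEV grid cells, the sketch below applies a greedy, distance-based non-maximum suppression to scored cells. This is not taken from the DenseBEV code: the suppression criterion (centre distance in cell units), the `radius` parameter, and the dictionary layout are assumptions made for the example.

```python
import math


def bev_nms(cells, radius=1.5):
    """Greedy NMS: visit cells by descending score, drop any cell within `radius` of a kept one."""
    kept = []
    for cell in sorted(cells, key=lambda c: c["score"], reverse=True):
        if all(math.dist(cell["xy"], k["xy"]) > radius for k in kept):
            kept.append(cell)
    return kept


# Hypothetical objectness scores for four BEV grid cells.
cells = [
    {"xy": (10, 10), "score": 0.90},
    {"xy": (10, 11), "score": 0.80},  # adjacent to the first cell, so it is suppressed
    {"xy": (30, 5), "score": 0.70},   # adjacent to the next cell, which scores higher
    {"xy": (31, 5), "score": 0.75},
]

kept = bev_nms(cells)
print([c["xy"] for c in kept])
```

In a training setup matching the abstract's description, only the kept cells would be passed on as object queries, so gradients would flow through those alone.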

Runtime Safety Monitoring of Deep Neural Networks for Perception: A Survey

Nov 08, 2025

Abstract:Deep neural networks (DNNs) are widely used in perception systems for safety-critical applications, such as autonomous driving and robotics. However, DNNs remain vulnerable to various safety concerns, including generalization errors, out-of-distribution (OOD) inputs, and adversarial attacks, which can lead to hazardous failures. This survey provides a comprehensive overview of runtime safety monitoring approaches, which operate in parallel to DNNs during inference to detect these safety concerns without modifying the DNN itself. We categorize existing methods into three main groups: monitoring inputs, internal representations, and outputs. We analyze the state of the art for each category, identify strengths and limitations, and map methods to the safety concerns they address. In addition, we highlight open challenges and future research directions.

DEAP DIVE: Dataset Investigation with Vision transformers for EEG evaluation

Oct 01, 2025

Abstract:Accurately predicting emotions from brain signals has the potential to achieve goals such as improving mental health, human-computer interaction, and affective computing. Emotion prediction through neural signals offers a promising alternative to traditional methods, such as self-assessment and facial expression analysis, which can be subjective or ambiguous. Measurements of brain activity via electroencephalogram (EEG) provide a more direct and unbiased data source. However, conducting a full EEG is a complex, resource-intensive process, leading to the rise of low-cost EEG devices with simplified measurement capabilities. This work examines how subsets of EEG channels from the DEAP dataset can be used for sufficiently accurate emotion prediction with low-cost EEG devices, rather than fully equipped EEG measurements. Using Continuous Wavelet Transformation to convert EEG data into scaleograms, we trained a vision transformer (ViT) model for emotion classification. The model achieved over 91.57% accuracy in predicting 4 quadrants (high/low per arousal and valence) with only 12 measuring points (also referred to as channels). Our work clearly shows that a significant reduction of input channels still yields high accuracy compared to state-of-the-art results of 96.9% with 32 channels. Training scripts to reproduce our results can be found here: https://gitlab.kit.edu/kit/aifb/ATKS/public/AutoSMiLeS/DEAP-DIVE.
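The four-quadrant target and the channel reduction can be sketched as follows. The abstract does not state how ratings are binarized or which 12 channels are used, so the threshold of 5.0 on DEAP's 1 to 9 rating scale, the class numbering, and the channel indices below are all assumptions for illustration.

```python
def quadrant_label(valence, arousal, threshold=5.0):
    """Map a (valence, arousal) rating pair to one of four quadrant classes.

    Assumed numbering: 0 = low arousal/low valence, 1 = low arousal/high valence,
    2 = high arousal/low valence, 3 = high arousal/high valence.
    """
    high_valence = valence > threshold
    high_arousal = arousal > threshold
    return 2 * int(high_arousal) + int(high_valence)


def select_channels(trial, keep_indices):
    """Keep only the listed channel rows of a trial given as a list of per-channel signals."""
    return [trial[i] for i in keep_indices]


# A made-up trial with 32 channels of 4 samples each; the value encodes the channel index.
trial = [[float(ch)] * 4 for ch in range(32)]
keep_indices = [0, 2, 3, 7, 11, 13, 16, 19, 20, 24, 28, 31]  # hypothetical 12-channel subset

reduced = select_channels(trial, keep_indices)
print(len(reduced), quadrant_label(valence=7.2, arousal=3.1))
```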

CrowdQuery: Density-Guided Query Module for Enhanced 2D and 3D Detection in Crowded Scenes

Sep 10, 2025

Abstract:This paper introduces a novel method for end-to-end crowd detection that leverages object density information to enhance existing transformer-based detectors. We present CrowdQuery (CQ), whose core component is our CQ module that predicts and subsequently embeds an object density map. The embedded density information is then systematically integrated into the decoder. Existing density map definitions typically depend on head positions or object-based spatial statistics. Our method extends these definitions to include individual bounding box dimensions. By incorporating density information into object queries, our method utilizes density-guided queries to improve detection in crowded scenes. CQ is universally applicable to both 2D and 3D detection without requiring additional data. Consequently, we are the first to design a method that effectively bridges 2D and 3D detection in crowded environments. We demonstrate the integration of CQ into both a general 2D and 3D transformer-based object detector, introducing the architectures CQ2D and CQ3D. CQ is not limited to the specific transformer models we selected. Experiments on the STCrowd dataset for both 2D and 3D domains show significant performance improvements compared to the base models, outperforming most state-of-the-art methods. When integrated into a state-of-the-art crowd detector, CQ can further improve performance on the challenging CrowdHuman dataset, demonstrating its generalizability. The code is released at https://github.com/mdaehl/CrowdQuery.

Extracting Uncertainty Estimates from Mixtures of Experts for Semantic Segmentation

Sep 05, 2025

Abstract:Estimating accurate and well-calibrated predictive uncertainty is important for enhancing the reliability of computer vision models, especially in safety-critical applications like traffic scene perception. While ensemble methods are commonly used to quantify uncertainty by combining multiple models, a mixture of experts (MoE) offers an efficient alternative by leveraging a gating network to dynamically weight expert predictions based on the input. Building on the promising use of MoEs for semantic segmentation in our previous works, we show that well-calibrated predictive uncertainty estimates can be extracted from MoEs without architectural modifications. We investigate three methods to extract predictive uncertainty estimates: predictive entropy, mutual information, and expert variance. We evaluate these methods for an MoE with two experts trained on a semantic split of the A2D2 dataset. Our results show that MoEs yield more reliable uncertainty estimates than ensembles in terms of conditional correctness metrics under out-of-distribution (OOD) data. Additionally, we evaluate routing uncertainty computed via gate entropy and find that simple gating mechanisms lead to better calibration of routing uncertainty estimates than more complex classwise gates. Finally, our experiments on the Cityscapes dataset suggest that increasing the number of experts can further enhance uncertainty calibration. Our code is available at https://github.com/KASTEL-MobilityLab/mixtures-of-experts/.
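The three uncertainty measures named in the abstract can be sketched for a single pixel. The formulas below are the common textbook definitions with gate weights used as mixture weights; whether the paper weights experts this way is an assumption, and `moe_uncertainties` is a hypothetical helper rather than a function from the linked repository.

```python
import math


def entropy(probs):
    """Shannon entropy in nats, skipping zero-probability classes."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def moe_uncertainties(expert_probs, gate_weights):
    """Per-pixel uncertainty from expert class distributions and gate weights (summing to 1)."""
    n_classes = len(expert_probs[0])
    pairs = list(zip(gate_weights, expert_probs))
    # Gate-weighted mixture of the expert predictions.
    mixture = [sum(g * p[c] for g, p in pairs) for c in range(n_classes)]
    predictive_entropy = entropy(mixture)
    # Mutual information: entropy of the mixture minus the expected expert entropy.
    expected_entropy = sum(g * entropy(p) for g, p in pairs)
    mutual_information = predictive_entropy - expected_entropy
    # Expert variance: gate-weighted variance of class probabilities, averaged over classes.
    expert_variance = sum(
        sum(g * (p[c] - mixture[c]) ** 2 for g, p in pairs) for c in range(n_classes)
    ) / n_classes
    return {
        "predictive_entropy": predictive_entropy,
        "mutual_information": mutual_information,
        "expert_variance": expert_variance,
    }


# Two experts that fully disagree on a two-class pixel, weighted equally by the gate.
disagree = moe_uncertainties([[1.0, 0.0], [0.0, 1.0]], [0.5, 0.5])
# Two experts that agree exactly.
agree = moe_uncertainties([[0.9, 0.1], [0.9, 0.1]], [0.5, 0.5])
print(disagree, agree)
```

With these definitions, mutual information and expert variance vanish when the experts agree and grow with their disagreement, while predictive entropy also reflects uncertainty that all experts share.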

Robust Experts: the Effect of Adversarial Training on CNNs with Sparse Mixture-of-Experts Layers

Sep 05, 2025

Abstract:Robustifying convolutional neural networks (CNNs) against adversarial attacks remains challenging and often requires resource-intensive countermeasures. We explore the use of sparse mixture-of-experts (MoE) layers to improve robustness by replacing selected residual blocks or convolutional layers, thereby increasing model capacity without additional inference cost. On ResNet architectures trained on CIFAR-100, we find that inserting a single MoE layer in the deeper stages leads to consistent improvements in robustness under PGD and AutoPGD attacks when combined with adversarial training. Furthermore, we discover that when switch loss is used for balancing, it causes routing to collapse onto a small set of overused experts, thereby concentrating adversarial training on these paths and inadvertently making them more robust. As a result, some individual experts outperform the gated MoE model in robustness, suggesting that robust subpaths emerge through specialization. Our code is available at https://github.com/KASTEL-MobilityLab/robust-sparse-moes.

DigiT4TAF -- Bridging Physical and Digital Worlds for Future Transportation Systems

Jul 03, 2025

Abstract:In the future, mobility will be strongly shaped by increasing digitalization. Not only will individual road users be highly interconnected, but also the road and associated infrastructure. At that point, a Digital Twin becomes particularly appealing because, unlike a basic simulation, it offers a continuous, bilateral connection linking the real and virtual environments. This paper describes the digital reconstruction used to develop the Digital Twin of the Test Area Autonomous Driving-Baden-Württemberg (TAF-BW), Germany. The TAF-BW offers a variety of different road sections, from high-traffic urban intersections and tunnels to multilane motorways. The test area is equipped with a comprehensive Vehicle-to-Everything (V2X) communication infrastructure and multiple intelligent intersections with camera sensors to facilitate real-time traffic flow monitoring. Authentic input data for the Digital Twin was generated by extracting object lists at the intersections, combining camera images from the intelligent infrastructure with LiDAR sensors mounted on a test vehicle. Using a unified interface, recordings from real-world detections of traffic participants can be resimulated. Additionally, the simulation framework's design and the reconstruction process are discussed. The resulting framework is made publicly available for download and utilization at: https://digit4taf-bw.fzi.de. The demonstration uses two case studies to illustrate the application of the digital twin and its interfaces: the analysis of traffic signal systems to optimize traffic flow and the simulation of security-related scenarios in the communications sector.

Fool the Stoplight: Realistic Adversarial Patch Attacks on Traffic Light Detectors

Jun 05, 2025

Abstract:Realistic adversarial attacks on various camera-based perception tasks of autonomous vehicles have been successfully demonstrated so far. However, only a few works have considered attacks on traffic light detectors. This work shows how CNNs for traffic light detection can be attacked with printed patches. We propose a threat model, where each instance of a traffic light is attacked with a patch placed under it, and describe a training strategy. We demonstrate successful adversarial patch attacks in universal settings. Our experiments show realistic targeted red-to-green label-flipping attacks and attacks on pictogram classification. Finally, we perform a real-world evaluation with printed patches and demonstrate attacks in a lab setting with a mobile traffic light for construction sites and in a test area with stationary traffic lights. Our code is available at https://github.com/KASTEL-MobilityLab/attacks-on-traffic-light-detection.

Automatic Curriculum Learning for Driving Scenarios: Towards Robust and Efficient Reinforcement Learning

May 13, 2025

Abstract:This paper addresses the challenges of training end-to-end autonomous driving agents using Reinforcement Learning (RL). RL agents are typically trained in simulation on a fixed set of scenarios with nominal behavior of surrounding road users, limiting their generalization and real-life deployment. While domain randomization offers a potential solution by randomly sampling driving scenarios, it frequently results in inefficient training and sub-optimal policies due to the high variance among training scenarios. To address these limitations, we propose an automatic curriculum learning (ACL) framework that dynamically generates driving scenarios with adaptive complexity based on the agent's evolving capabilities. Unlike manually designed curricula that introduce expert bias and lack scalability, our framework incorporates a "teacher" that automatically generates and mutates driving scenarios based on their learning potential, an agent-centric metric derived from the agent's current policy, eliminating the need for expert design. The framework enhances training efficiency by excluding scenarios the agent has mastered or finds too challenging. We evaluate our framework in a reinforcement learning setting where the agent learns a driving policy from camera images. Comparative results against baseline methods, including fixed scenario training and domain randomization, demonstrate that our approach leads to enhanced generalization, achieving higher success rates (+9% in low traffic density, +21% in high traffic density) and faster convergence with fewer training steps. Our findings highlight the potential of ACL in improving the robustness and efficiency of RL-based autonomous driving agents.
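The idea of excluding scenarios that are already mastered or still too hard can be sketched with a simple filter. The abstract does not define the learning-potential metric, so the proxy used here (success rate times failure rate, which peaks at 50% success) is purely an assumption, as are the `min_potential` threshold and the scenario names.

```python
def learning_potential(success_rate):
    """Hypothetical proxy: zero for always-failing or always-solved scenarios, maximal at 0.5."""
    return success_rate * (1.0 - success_rate)


def select_scenarios(success_rates, min_potential=0.1):
    """Keep scenarios whose proxy learning potential reaches the threshold."""
    return [
        name
        for name, rate in success_rates.items()
        if learning_potential(rate) >= min_potential
    ]


# Made-up success rates of the current policy on four scenarios.
success_rates = {
    "empty_road": 0.98,         # mastered, filtered out
    "unprotected_turn": 0.50,
    "dense_merge": 0.80,
    "blocked_junction": 0.02,   # currently too hard, filtered out
}

curriculum = select_scenarios(success_rates)
print(curriculum)
```

A teacher in this spirit would re-estimate the success rates as the policy improves, so the selected set shifts toward harder scenarios over time.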
