Hans-Christian Reuss

Improving the Intelligent Driver Model by Incorporating Vehicle Dynamics: Microscopic Calibration and Macroscopic Validation

Aug 07, 2024

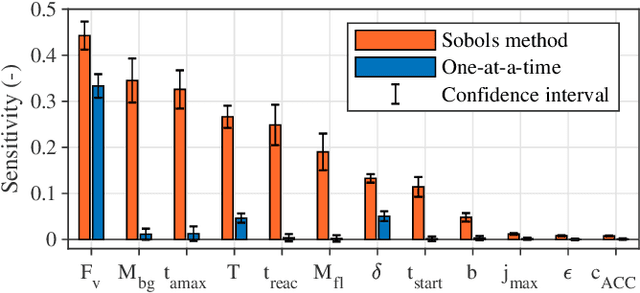

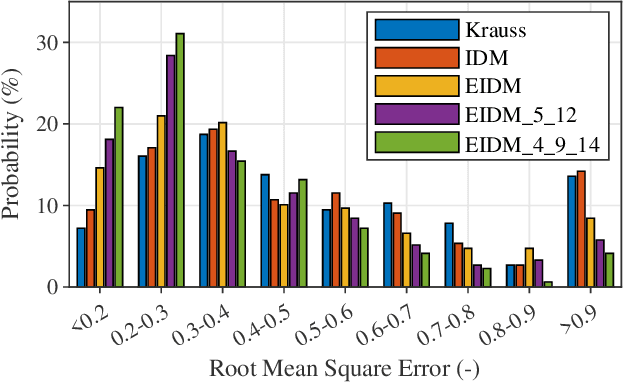

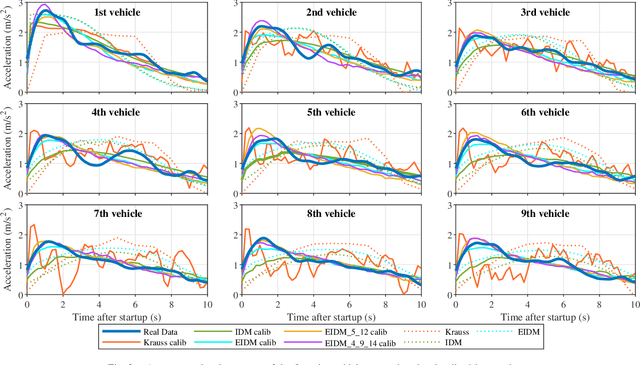

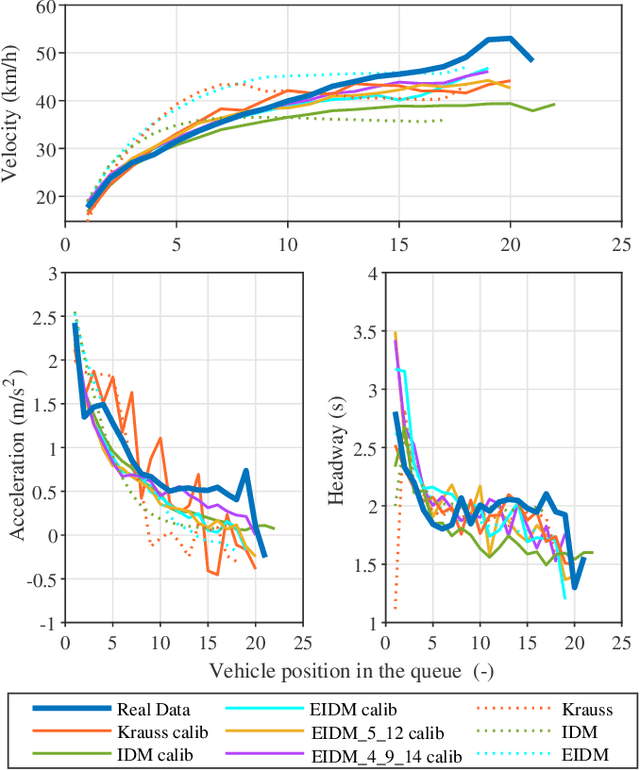

Abstract: Microscopic traffic simulations are used to evaluate the impact of infrastructure modifications and evolving vehicle technologies, such as connected and automated driving. Simulated vehicles are controlled by car-following, lane-changing, and junction models designed to imitate human driving behavior. However, physics-based car-following models (CFMs) cannot fully replicate measured vehicle trajectories. We therefore present extensions to the Intelligent Driver Model (IDM), some of which are already included in the Extended Intelligent Driver Model (EIDM), to improve calibration and validation results. The extensions consist of equations based on vehicle dynamics and drive-off procedures. Because parameter selection also plays a decisive role, we introduce a framework to calibrate CFMs using drone data captured at a signalized intersection in Stuttgart, Germany. We compare the calibration error of the Krauss model with that of the IDM and the EIDM. In this setup, the EIDM achieves a 17.78 % lower mean error than the IDM, measured as the distance difference between real-world and simulated vehicles. Adding vehicle dynamics equations to the EIDM improves the results by a further 18.97 %. The calibrated vehicle-driver combinations are then investigated by simulating traffic in three scenarios: at the original intersection, in a closed loop, and in a stop-and-go wave. The data show that the improved calibration process for individual vehicles, openly available at https://www.github.com/stepeos/pycarmodel_calibration, also yields more accurate macroscopic results.
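For readers unfamiliar with the baseline model, the following is a minimal sketch of the standard IDM acceleration law together with a simple distance-based trajectory error. It is not taken from the paper or its repository: the function names, the default parameter values, the clamping of the dynamic gap term at zero (a common variant), and the choice of a mean absolute distance difference as the error are all assumptions made for illustration. The EIDM and the vehicle dynamics extensions described in the abstract are not reproduced here.

```python
import math


def idm_acceleration(v, gap, dv, v0=13.9, T=1.5, s0=2.0,
                     a_max=1.0, b=1.5, delta=4.0):
    """Standard IDM acceleration in m/s^2 (illustrative defaults, not calibrated).

    v     -- speed of the following vehicle (m/s)
    gap   -- bumper-to-bumper distance to the leader (m), must be > 0
    dv    -- approach rate, v - v_leader (m/s)
    v0    -- desired speed (m/s)
    T     -- desired time headway (s)
    s0    -- minimum standstill gap (m)
    a_max -- maximum acceleration (m/s^2)
    b     -- comfortable deceleration (m/s^2)
    delta -- acceleration exponent
    """
    # Desired dynamic gap; the dynamic part is clamped at zero (assumed variant).
    s_star = s0 + max(0.0, v * T + v * dv / (2.0 * math.sqrt(a_max * b)))
    # Free-road term minus interaction term.
    return a_max * (1.0 - (v / v0) ** delta - (s_star / gap) ** 2)


def mean_distance_error(real_positions, sim_positions):
    """Mean absolute position difference between two equally sampled
    trajectories (an assumed stand-in for the paper's distance-based error)."""
    if len(real_positions) != len(sim_positions) or not real_positions:
        raise ValueError("trajectories must be non-empty and of equal length")
    total = sum(abs(r - s) for r, s in zip(real_positions, sim_positions))
    return total / len(real_positions)
```

In a calibration loop, a function like `mean_distance_error` would serve as the objective that an optimizer minimizes over the model parameters for each recorded vehicle-driver pair.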

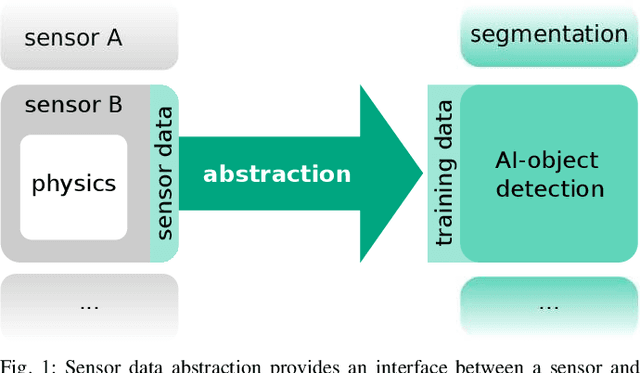

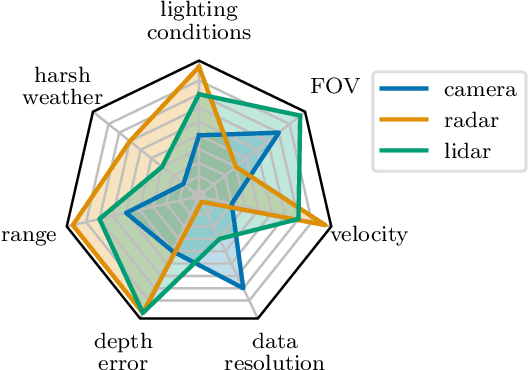

Towards Sensor Data Abstraction of Autonomous Vehicle Perception Systems

May 14, 2021

Abstract: Full-stack autonomous driving perception modules usually consist of data-driven models based on multiple sensor modalities. However, these models might be biased toward the sensor setup used for data acquisition. This bias can seriously impair the perception models' transferability to new sensor setups, which emerge continually due to the market's competitive nature. We envision sensor data abstraction as an interface between sensor data and machine learning applications for highly automated driving (HAD). For this purpose, we review the primary sensor modalities (camera, lidar, and radar) published in autonomous-driving-related datasets, examine single-sensor abstraction and the abstraction of sensor setups, and identify critical paths towards an abstraction of sensor data from multiple perception configurations.
