Frank Bieder

XD-MAP: Cross-Modal Domain Adaptation using Semantic Parametric Mapping

Jan 20, 2026

Abstract: Until open-world foundation models match the performance of specialized approaches, the effectiveness of deep learning models remains heavily dependent on dataset availability. Training data must align not only with the target object categories but also with the sensor characteristics and modalities. To bridge the gap between available datasets and deployment domains, domain adaptation strategies are widely used. In this work, we propose a novel approach to transferring sensor-specific knowledge from an image dataset to LiDAR, an entirely different sensing domain. Our method XD-MAP leverages detections from a neural network on camera images to create a semantic parametric map. The map elements are modeled to produce pseudo labels in the target domain without any manual annotation effort. Unlike previous domain transfer approaches, our method does not require direct overlap between sensors and enables extending the angular perception range from a front-view camera to a full 360° view. On our large-scale road feature dataset, XD-MAP outperforms single-shot baseline approaches by +19.5 mIoU for 2D semantic segmentation, +19.5 PQth for 2D panoptic segmentation, and +32.3 mIoU in 3D semantic segmentation. The results demonstrate the effectiveness of our approach, achieving strong performance on LiDAR data without any manual labeling.

SDTagNet: Leveraging Text-Annotated Navigation Maps for Online HD Map Construction

Jun 10, 2025

Abstract: Autonomous vehicles rely on detailed and accurate environmental information to operate safely. High definition (HD) maps offer a promising solution, but their high maintenance cost poses a significant barrier to scalable deployment. This challenge is addressed by online HD map construction methods, which generate local HD maps from live sensor data. However, these methods are inherently limited by the short perception range of onboard sensors. To overcome this limitation and improve general performance, recent approaches have explored the use of standard definition (SD) maps as a prior, which are significantly easier to maintain. We propose SDTagNet, the first online HD map construction method that fully utilizes the information of widely available SD maps, like OpenStreetMap, to enhance far-range detection accuracy. Our approach introduces two key innovations. First, in contrast to previous work, we incorporate not only polyline SD map data with manually selected classes, but also additional semantic information in the form of textual annotations. In this way, we enrich SD vector map tokens with NLP-derived features, eliminating the dependency on predefined specifications or exhaustive class taxonomies. Second, we introduce a point-level SD map encoder together with orthogonal element identifiers to uniformly integrate all types of map elements. Experiments on Argoverse 2 and nuScenes show that this boosts map perception performance by up to +5.9 mAP (+45%) w.r.t. map construction without priors and up to +3.2 mAP (+20%) w.r.t. previous approaches that already use SD map priors. Code is available at https://github.com/immel-f/SDTagNet

M3TR: Generalist HD Map Construction with Variable Map Priors

Nov 15, 2024

Abstract: Autonomous vehicles require road information for their operation, usually in the form of HD maps. Since offline maps eventually become outdated or may only be partially available, online HD map construction methods have been proposed to infer map information from live sensor data. A key issue remains how to exploit such partial or outdated map information as a prior. We introduce M3TR (Multi-Masking Map Transformer), a generalist approach for HD map construction both with and without map priors. We address shortcomings in ground truth generation for Argoverse 2 and nuScenes and propose the first realistic scenarios with semantically diverse map priors. Examining various query designs, we use an improved method for integrating prior map elements into an HD map construction model, increasing performance by +4.3 mAP. Finally, we show that training across all prior scenarios yields a single Generalist model, whose performance is on par with previous Expert models that can handle only one specific type of map prior. M3TR is thus the first model capable of leveraging variable map priors, making it suitable for real-world deployment. Code is available at https://github.com/immel-f/m3tr

Generation of Training Data from HD Maps in the Lanelet2 Framework

Jul 24, 2024

Abstract: Using HD maps directly as training data for machine learning tasks has seen a massive surge in popularity and shown promising results, e.g. in the field of map perception. Despite that, a standardized HD map framework supporting all parts of map-based automated driving and training label generation from map data does not exist. Furthermore, feeding map perception models with map data as part of the input during real-time inference is not addressed by the research community. In order to fill this gap, we present lanelet2_ml_converter, an integrated extension to the HD map framework Lanelet2, widely used in automated driving systems by academia and industry. With this addition, Lanelet2 unifies map-based automated driving, machine learning inference and training, all from a single source of map data and format. Requirements for a unified framework are analyzed and the implementation of these requirements is described. The usability of labels in state-of-the-art machine learning is demonstrated with application examples from the field of map perception. The source code is available embedded in the Lanelet2 framework under https://github.com/fzi-forschungszentrum-informatik/Lanelet2/tree/feature_ml_converter

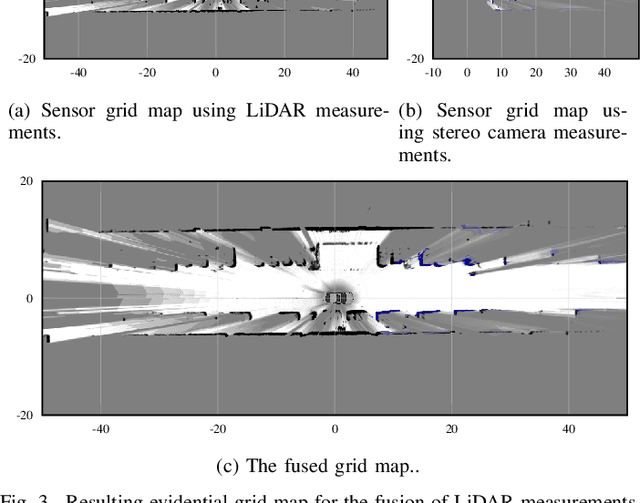

Mapping LiDAR and Camera Measurements in a Dual Top-View Grid Representation Tailored for Automated Vehicles

Apr 21, 2022

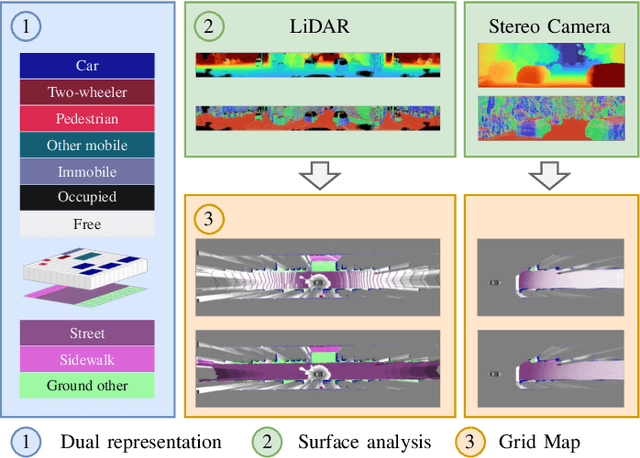

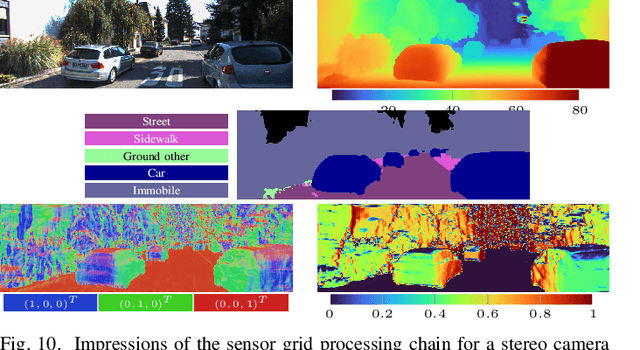

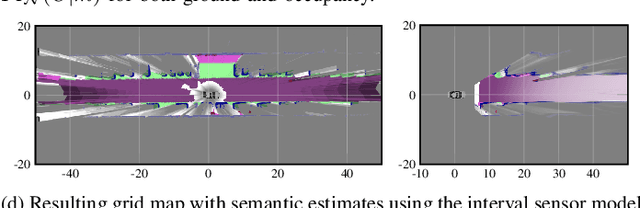

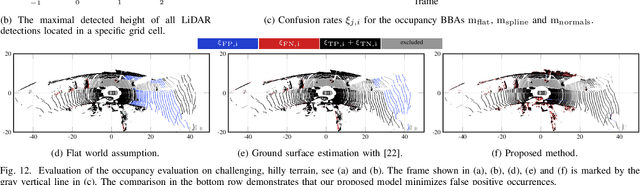

Abstract: We present a generic evidential grid mapping pipeline designed for imaging sensors such as LiDARs and cameras. Our grid-based evidential model contains semantic estimates for cell occupancy and ground separately. We specify the estimation steps for input data represented by point sets, but mainly focus on input data represented by images such as disparity maps or LiDAR range images. Instead of relying on an external ground segmentation only, we deduce occupancy evidence by analyzing the surface orientation around measurements. We conduct experiments and evaluate the presented method using LiDAR and stereo camera data recorded in real traffic scenarios. Our method estimates cell occupancy robustly and with a high level of detail while maximizing efficiency and minimizing the dependency on external processing modules.

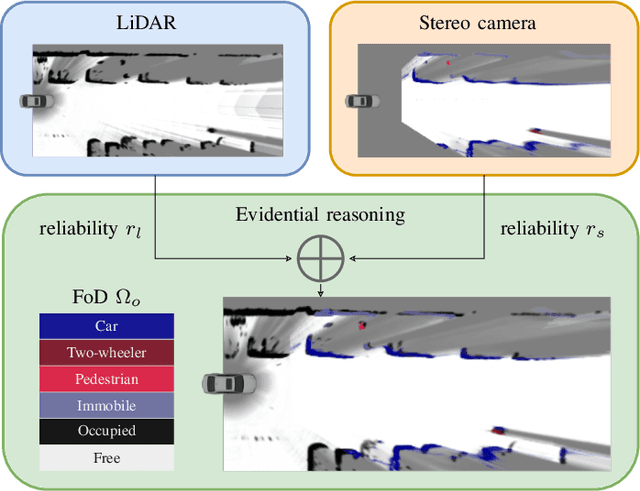

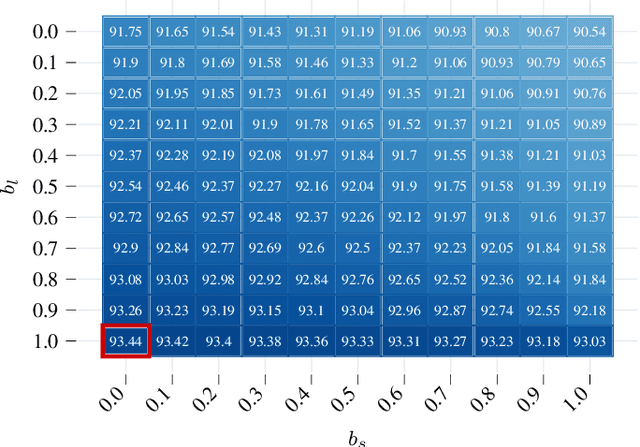

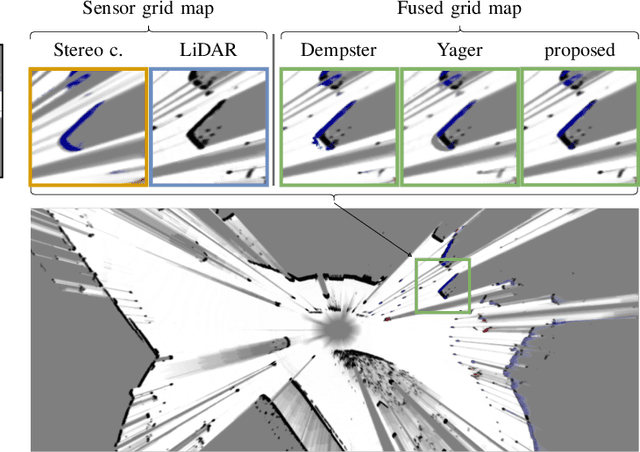

Sensor Data Fusion in Top-View Grid Maps using Evidential Reasoning with Advanced Conflict Resolution

Apr 19, 2022

Abstract: We present a new method to combine evidential top-view grid maps estimated from heterogeneous sensor sources. Dempster's combination rule, which is usually applied in this context, provides undesired results with highly conflicting inputs. Therefore, we use more advanced evidential reasoning techniques and improve the conflict resolution by modeling the reliability of the evidence sources. We propose a data-driven reliability estimation to optimize the fusion quality using the KITTI-360 dataset. We apply the proposed method to the fusion of LiDAR and stereo camera data and evaluate the results qualitatively and quantitatively. The results demonstrate that our proposed method robustly combines measurements from heterogeneous sensors and successfully resolves sensor conflicts.
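For readers unfamiliar with the conflict issue mentioned in this abstract, the following is a minimal sketch of the classical Dempster combination rule applied to a single grid cell. It is not the method proposed in the paper: the two-hypothesis frame (`occupied`, `free`), the mass values, and the function name `dempster_combine` are illustrative assumptions.

```python
from itertools import product

# Hypothetical frame of discernment for one grid cell (assumption for illustration).
OCC = frozenset({"occupied"})
FREE = frozenset({"free"})
UNKNOWN = OCC | FREE  # mass assigned to the whole frame expresses ignorance


def dempster_combine(m1, m2):
    """Combine two mass functions with the classical Dempster rule.

    Each mass function maps a frozenset of hypotheses to a mass in [0, 1].
    Returns the normalized combined masses and the conflict mass K.
    """
    combined = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        intersection = a & b
        if intersection:
            combined[intersection] = combined.get(intersection, 0.0) + wa * wb
        else:
            # Contradictory hypotheses: their product counts as conflict.
            conflict += wa * wb
    if 1.0 - conflict <= 1e-12:
        raise ValueError("Sources are in total conflict; the rule is undefined.")
    # Normalization redistributes the conflict mass over the remaining sets.
    fused = {k: v / (1.0 - conflict) for k, v in combined.items()}
    return fused, conflict


# Two made-up sensors that strongly disagree about the same cell.
lidar = {OCC: 0.9, UNKNOWN: 0.1}
camera = {FREE: 0.9, UNKNOWN: 0.1}

fused, conflict = dempster_combine(lidar, camera)
```

With these made-up inputs, most of the product mass falls on contradictory pairs and is discarded by the normalization step, so the fused result hides how strongly the sources disagreed. Discounting each source by an estimated reliability before combination is one common way to mitigate this; how the paper models reliability is not specified in the abstract.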

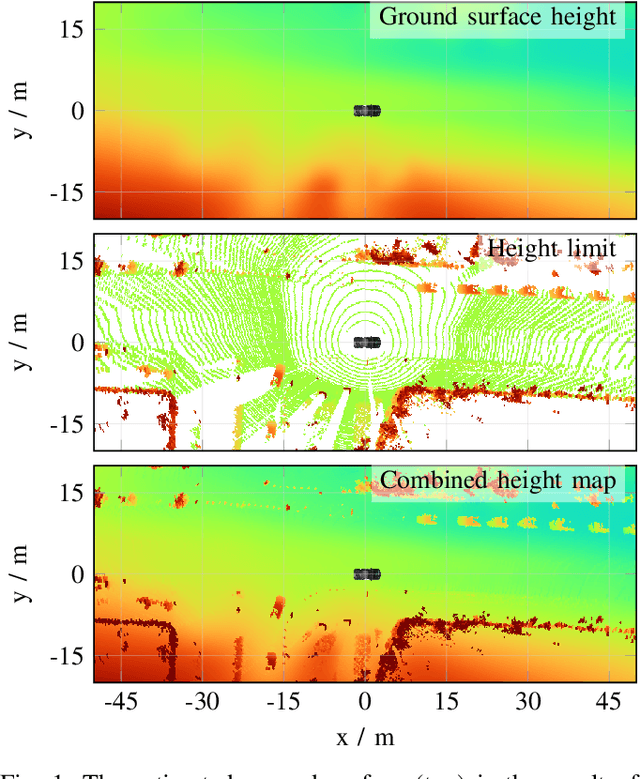

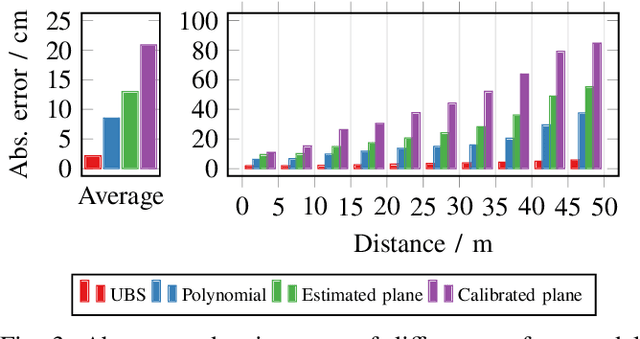

Fast and Robust Ground Surface Estimation from LIDAR Measurements using Uniform B-Splines

Mar 02, 2022

Abstract: We propose a fast and robust method to estimate the ground surface from LIDAR measurements on an automated vehicle. The ground surface is modeled as a uniform B-spline (UBS), which is robust to varying measurement densities and has a single parameter controlling the smoothness prior. We model the estimation process as a robust least-squares (LS) optimization problem, which can be reformulated as a linear problem and thus solved efficiently. Using the SemanticKITTI data set, we conduct a quantitative evaluation by classifying the point-wise semantic annotations into ground and non-ground points. Finally, we validate the approach on our research vehicle in real-world scenarios.

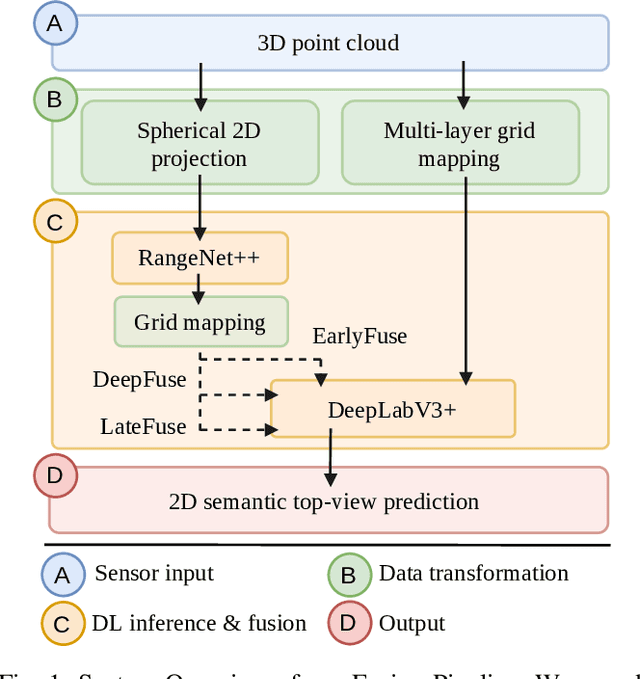

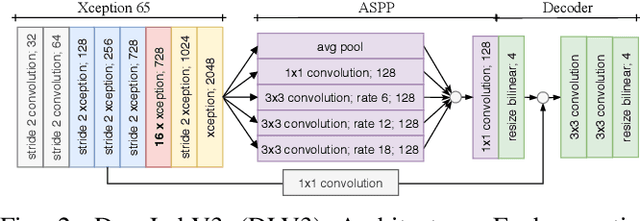

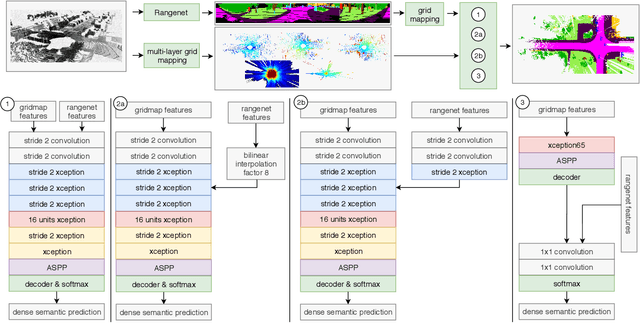

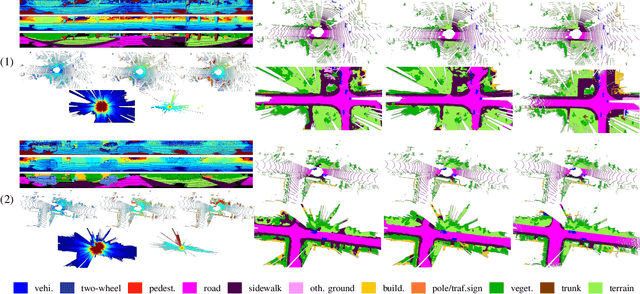

Improving Lidar-Based Semantic Segmentation of Top-View Grid Maps by Learning Features in Complementary Representations

Mar 02, 2022

Abstract: In this paper, we introduce a novel way to predict semantic information from sparse, single-shot LiDAR measurements in the context of autonomous driving. In particular, we fuse learned features from complementary representations. The approach is aimed specifically at improving the semantic segmentation of top-view grid maps. Towards this goal, the 3D LiDAR point cloud is projected onto two orthogonal 2D representations. For each representation, a tailored deep learning architecture is developed to effectively extract semantic information, which is then fused by a superordinate deep neural network. The contribution of this work is threefold: (1) We examine different stages within the segmentation network for fusion. (2) We quantify the impact of embedding different features. (3) We use the findings of this survey to design a tailored deep neural network architecture leveraging the respective advantages of the different representations. Our method is evaluated using the SemanticKITTI dataset, which provides point-wise semantic annotations of more than 23,000 LiDAR measurements.

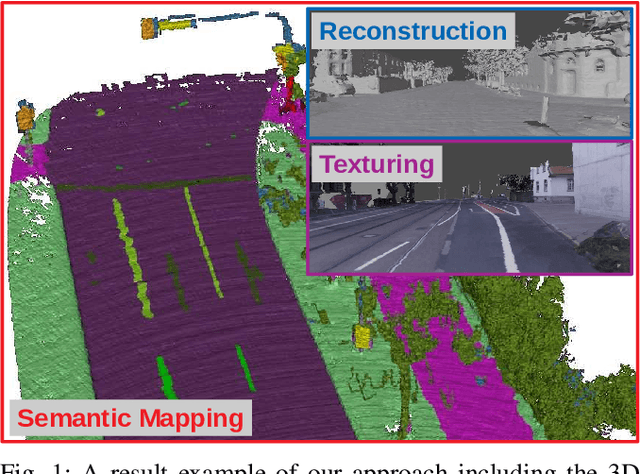

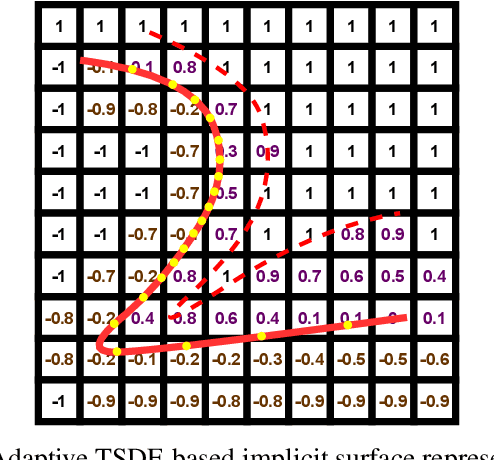

Large-Scale 3D Semantic Reconstruction for Automated Driving Vehicles with Adaptive Truncated Signed Distance Function

Feb 28, 2022

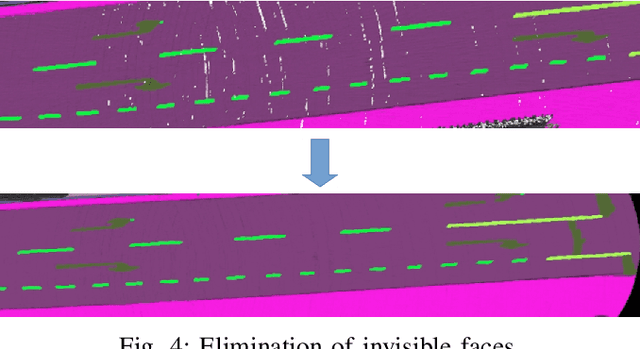

Abstract: Large-scale 3D reconstruction, texturing and semantic mapping are nowadays widely used for automated driving vehicles, virtual reality and automatic data generation. However, most approaches are developed for RGB-D cameras with colored dense point clouds and are not suitable for large-scale outdoor environments using sparse LiDAR point clouds. Since a 3D surface can usually be observed from multiple camera images with different view poses, optimal image patch selection for texturing and optimal semantic class estimation for semantic mapping are still challenging. To address these problems, we propose a novel 3D reconstruction, texturing and semantic mapping system using LiDAR and camera sensors. An Adaptive Truncated Signed Distance Function is introduced to describe surfaces implicitly, which can deal with different LiDAR point sparsities and improve model quality. The triangle mesh map extracted from this implicit function is then textured from a series of registered camera images by applying an optimal image patch selection strategy. In addition, a Markov Random Field-based data fusion approach is proposed to estimate the optimal semantic class for each triangle mesh. Our approach is evaluated on a synthetic dataset, the KITTI dataset and a dataset recorded with our experimental vehicle. The results show that the 3D models generated using our approach are more accurate than those of other state-of-the-art approaches. The texturing and semantic mapping also achieve very promising results.

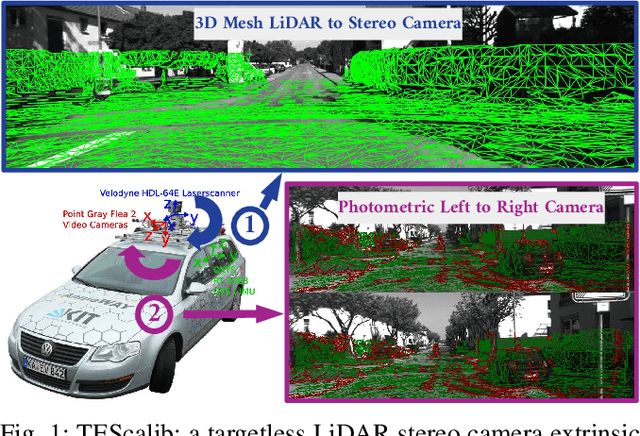

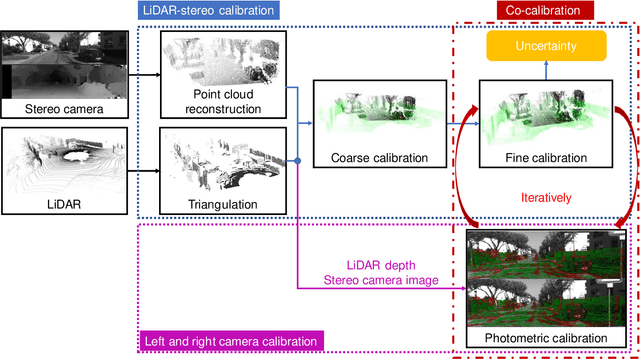

TEScalib: Targetless Extrinsic Self-Calibration of LiDAR and Stereo Camera for Automated Driving Vehicles with Uncertainty Analysis

Feb 28, 2022

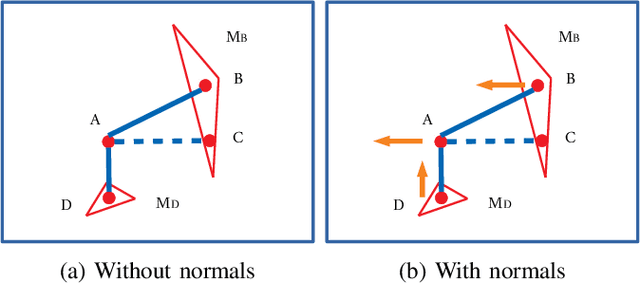

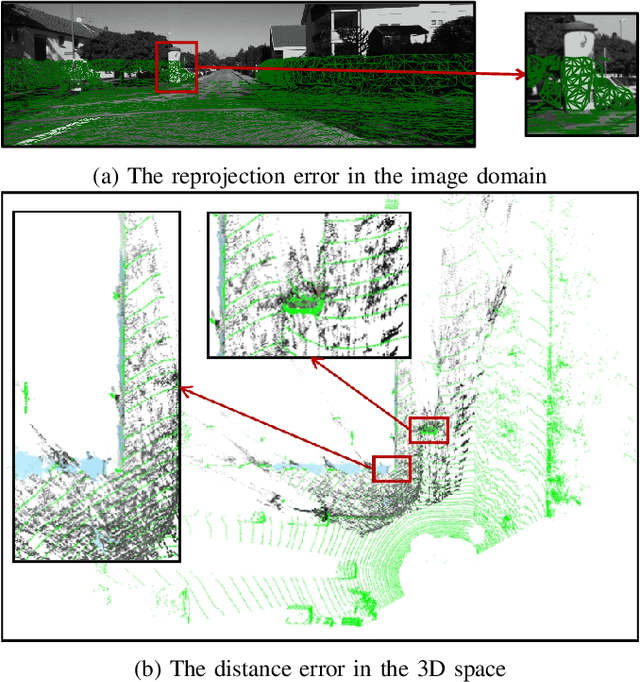

Abstract: In this paper, we present TEScalib, a novel extrinsic self-calibration approach for LiDAR and stereo camera on automated driving vehicles that uses the geometric and photometric information of the surrounding environment without any calibration targets. Since LiDAR and stereo cameras are widely used for sensor data fusion on automated driving vehicles, their extrinsic calibration is highly important. However, most LiDAR and stereo camera calibration approaches are target-based and therefore time-consuming. Even the targetless approaches developed in recent years are either inaccurate or unsuitable for driving platforms. To address those problems, we introduce TEScalib. By applying a 3D mesh reconstruction-based point cloud registration, the geometric information is used to estimate the LiDAR to stereo camera extrinsic parameters accurately and robustly. To calibrate the stereo camera, a photometric error function is built and the LiDAR depth is used to transform key points from one camera to another. During driving, these two parts are processed iteratively. In addition, we propose an uncertainty analysis that reflects the reliability of the estimated extrinsic parameters. Evaluated on the KITTI dataset, our TEScalib approach achieves very promising results.
